24 hours in AI news

More and more signs that AI paradise is not imminent

A bunch of small but important items in recently-breaking AI news:

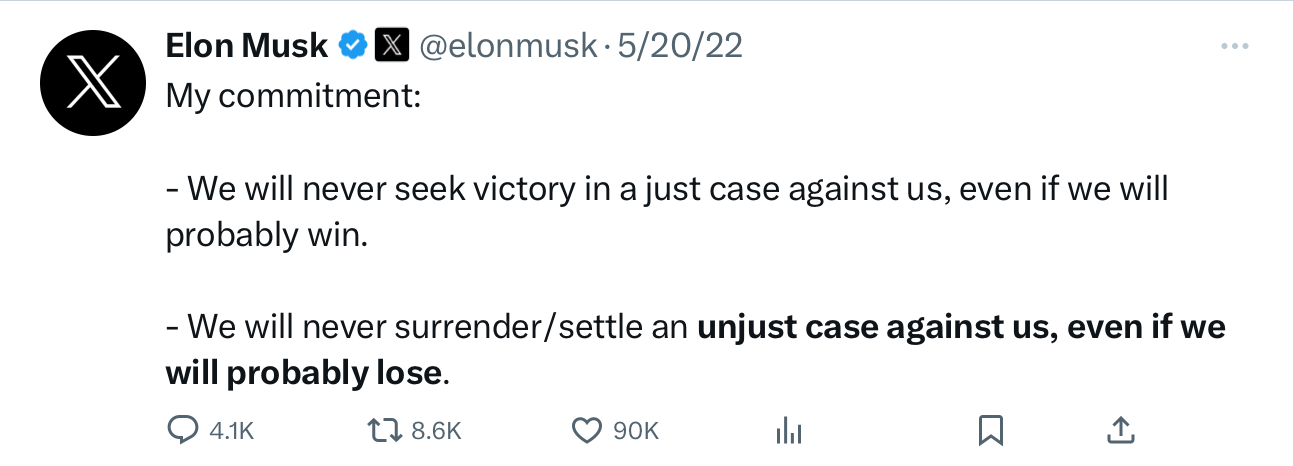

• Tesla settled its lawsuit with the Huang family over Walter Huang’s death, for an undisclosed amount of money. “Although Huang’s family acknowledges he was distracted while the car was driving, they argued Tesla is at fault because it falsely marketed Autopilot as self-driving software. They alleged Tesla knew that Autopilot was not ready for prime time and had flaws that could make its use unsafe.” The settlement is striking in part because Musk had previously said this

Then again, as the Washington Post put it,

“If Tesla went through with this trial, its technology would have been subject to intense scrutiny at a time when Musk is aggressively pushing his driver-assistance features out to the public.”

• The Information reported that some late-stage venture capital firms are sitting out the current GenAI rush. VC Paul Madera was quoted as saying, “We are seeing a massive experimentation period here that can give you the head fake that [a startup] has a real business.” Another sign that the bubble may not last forever.

• In another bit of possible foreshadowing: GenAI is starting to get a tiny bit of a Scarlet Letter feel. Two examples I spotted yesterday:

A second from Y Combinator co-founder Paul Graham:

• Tesla FSD is getting better. But is it getting better enough? A well-known Tesla fan (and presumed investor) proudly posted a 45-minute video of error-free Tesla driving, asking rhetorically, “Watch this video of my Tesla performing two rideshare pick ups and drop offs with zero interventions for 45 minutes and explain to me why it's impossible to scale that up to 450 minutes?” When another Tesla fan asked me about it, I took the challenge and articulated three concerns:

§

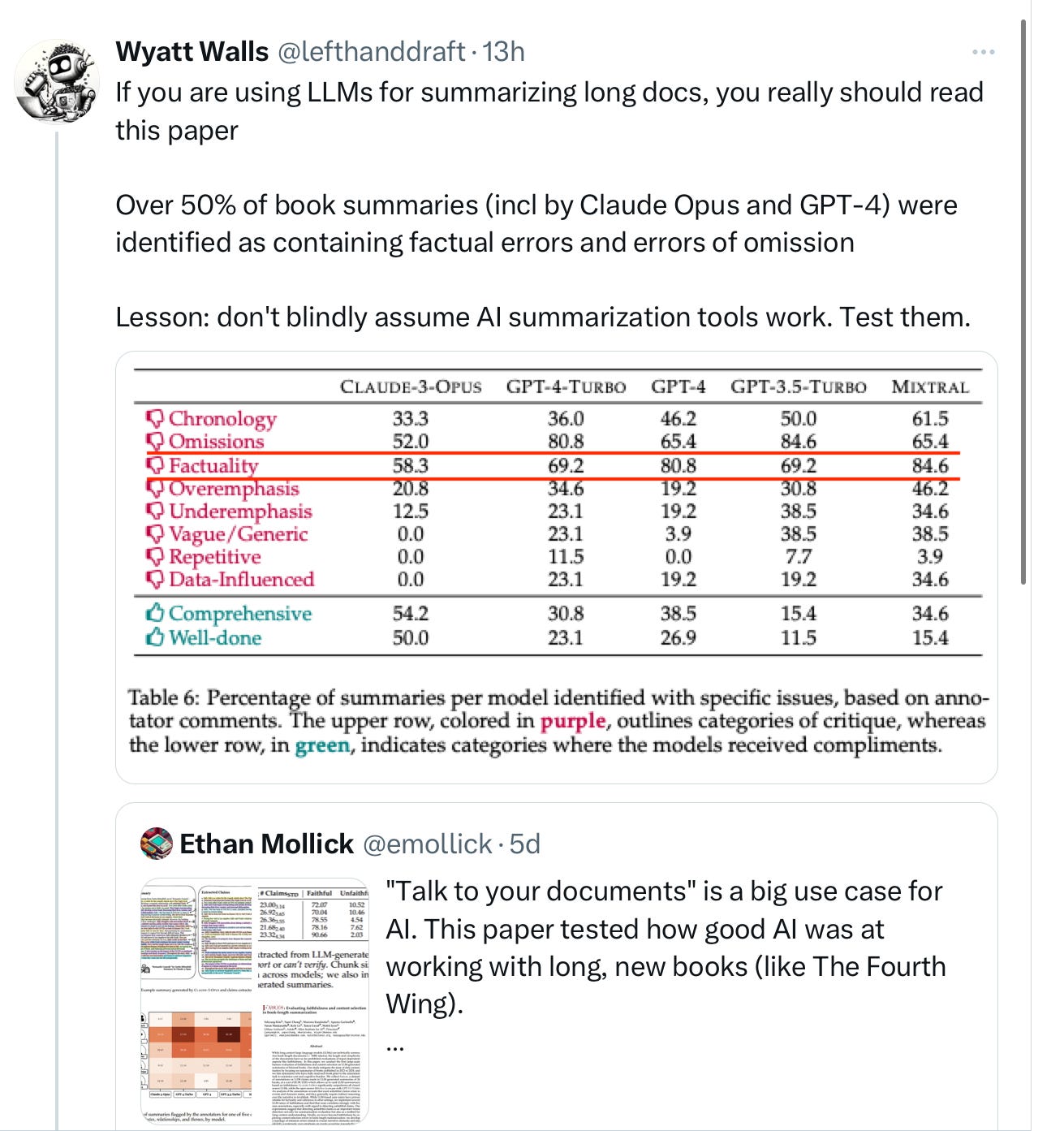

Meanwhile, Wyatt Walls just pointed out some gruesome facts about LLMs and summarization:

§

Bonus track: Politico Tech interviewed me this morning on their podcast:

The new article on AI policy that we discussed will be out tomorrow, at Politico. (Some of the ideas there draw from the thinking and research for my new book Taming Silicon Valley, which MIT Press will publish in September.)

Gary Marcus wants us all, collectively, to figure out how we get to a thriving, AI-positive world.

We are constructing a Real Time/Real World experiment to discover just how destructive stochastic parrots spewing word salad, joined with the Argumentum ad Populum Fallacy, are.

Re the "scarlet letter": This has become the new normal in science fiction magazines over the last year. The use of AI in SF art or storytelling is toxic. The highest-reputation, best-known, best-paying magazines won't accept AI submissions, with language like this quote from Asimov's: "Statement on the Use of “AI” writing tools such as ChatGPT: We will not consider any submissions written, developed, or assisted by these tools. Attempting to submit these works may result in being banned from submitting works in the future." And if they accidentally run an AI-generated cover, SF presses have been known to _withdraw it and apologize._