24 Seriously Embarrassing Hours for AI

All the recent goodwill and enthusiasm could evaporate fast

By my count, the following things have come to light in the last 24 hours or so.

Turns out Tesla staged their famous 2016 driverless car demo, with the famous tagline “The person in the driver’s seat is only there for legal reasons. He is not doing anything. The car is driving itself.”[1] What they showed, it seems, was aspirational, not real: not a single, unedited run taken by a single car. Roughly $100 billion in investment went in, partly on the strength of that demo and the excitement it generated, partly on Musk’s say-so; in the subsequent six years, no car has yet achieved what Elon Musk promised would soon arrive, an intervention-free drive, without human assistance, from LA to NYC. (And highway miles are the easy part; nobody even pretends anymore to be close to doing that off the Interstate.) Nobody is treating this whole episode like Theranos (the official company legal line is that failure is not fraud), but I expect John Carreyrou might find the whole thing interesting.

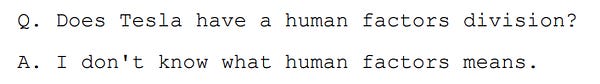

We also discovered from court testimony this week that some reasonably high level employees working on driverless cars were apparently unaware of human factors engineering (an absolute necessity if humans are in the loop). This tweet (and several others by the same author, Mahmood Hikmet, in the last couple days) blew me away, and not in a good way. Honestly, rereading it makes my stomach churn.

OpenAI turns out to have been using sweatshops behind the scenes. You might think that ChatGPT is just a regular old massive neural network that soaks up a massive amount of training data from the web, but you’d only be partly correct. There is in fact a massive, massively trained large model behind the scenes, but it’s accompanied by a massive amount of human labor, built to filter out bad stuff. A bunch of that work was done by poorly paid labor in Kenya, paid less than $2/hour to evaluate (e.g.) graphic descriptions of sexual situations involving children and animals that I prefer not to describe in detail. Billy Perrigo’s exposé at Time is a must-read.

Riley Goodside, one of the people who best knows what large language models can and can’t do, put Claude, the latest large model, to the test; the focus of this model is on alignment. You can read his detailed comparison for yourself, but one of the things that popped out to me is that the system still quickly lands in the land of hallucination that has so haunted ChatGPT.

CNET became the first casualty of the recently fashionable tendency to put too much faith in ChatGPT. Without making a big deal of it, they started posting ChatGPT-written stories. Mistakes were made. A lot of them. Oops.

The coup de grâce? The musician Nick Cave got to hear ChatGPT’s riffs on his music. If you can believe it, he was even more scathing than I am:

Fake demos, hallucination, arrogance and ignorance around basic human factors engineering, crappy songwriting, misleading news stories, and sweatshops.

None of this is a good look.

§

I wrote a little thread about the sweatshop stuff in particular:

Our choices here matter.

I hope we will make better choices.

Gary Marcus is a scientist, best-selling author, and entrepreneur. His most recent book, Rebooting AI, co-authored with Ernest Davis, Professor of Computer Science at New York University, is one of Forbes’s 7 Must Read Books in AI. You can also listen to him on The Ezra Klein Show.

1. Apparently the NYT mentioned this before in a documentary on Tesla that I had meant to watch; for whatever reason, it’s only really getting talked about now.

Regarding the data labeling "sweatshop": the headlines and lede in the story emphasize the pay level. But as you read the article, it becomes clear that the real issue is the horrifying nature of the work. Even if the workers were paid 100 times more or if the work was done in the US, it is psychologically punishing. We need to find a better way to prevent toxic behavior of AI systems than creating large labeled data sets of horror.

Wow, this sentence jumped out at me:

"We also discovered from court testimony this week that some reasonably high level employees working on driverless cars were apparently unaware of human factors engineering (an absolute necessity if humans are in the loop)."

That sentence pretty much describes the big picture of our technological civilization. On every front it seems that engineers fail to take into account the human factor.

Try asking such "experts" this question.

Do you think that human beings can successfully manage ever more, ever larger powers, delivered at an ever faster rate, without limit?

The entire knowledge explosion being driven by the "experts" is built upon a failure to address that question with intellectual honesty.

Really bad engineering. It's like designing a car that can go 500mph, and forgetting that close to no one can keep a car on the road at that speed.

Set your sights higher, Marcus. Don't content yourself with debunking only the AI industry.