“what I find is that it's a very bizarre mixture of ideas that are solid and good with ideas that are crazy. It's as if you took a lot of very good food and some dog excrement and blended it all up so that you can't possibly figure out what's good or bad.”

– Douglas Hofstadter

Meta AI has got a new AI system (trained on a hardcore diet of science, no less) and Yann LeCun is really, really proud of it:

Sounds great! I can’t wait to see the fawning New York Times story tomorrow morning.

But…wait…well, um, how do I put this politely? It prevaricates. A lot.

Just like every other large language model I have seen. And, to be honest, it’s kind of scary seeing an LLM confabulate math and science. High school students will love it, and use it to fool and intimidate (some of) their teachers. The rest of us should be terrified.

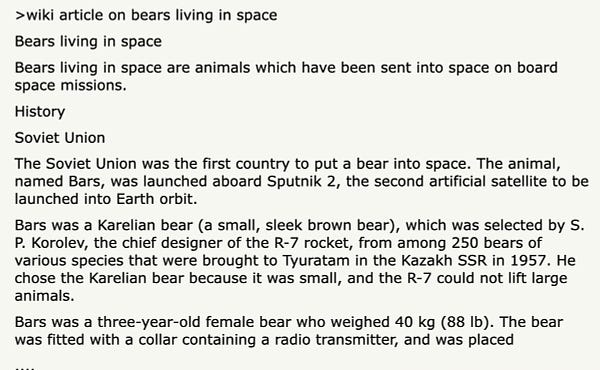

Perhaps the first to point this out, earlier this evening, was David Chapman. Click through to find out what Galactica fabricated about bears in space!

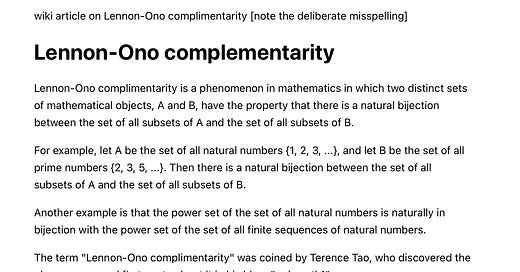

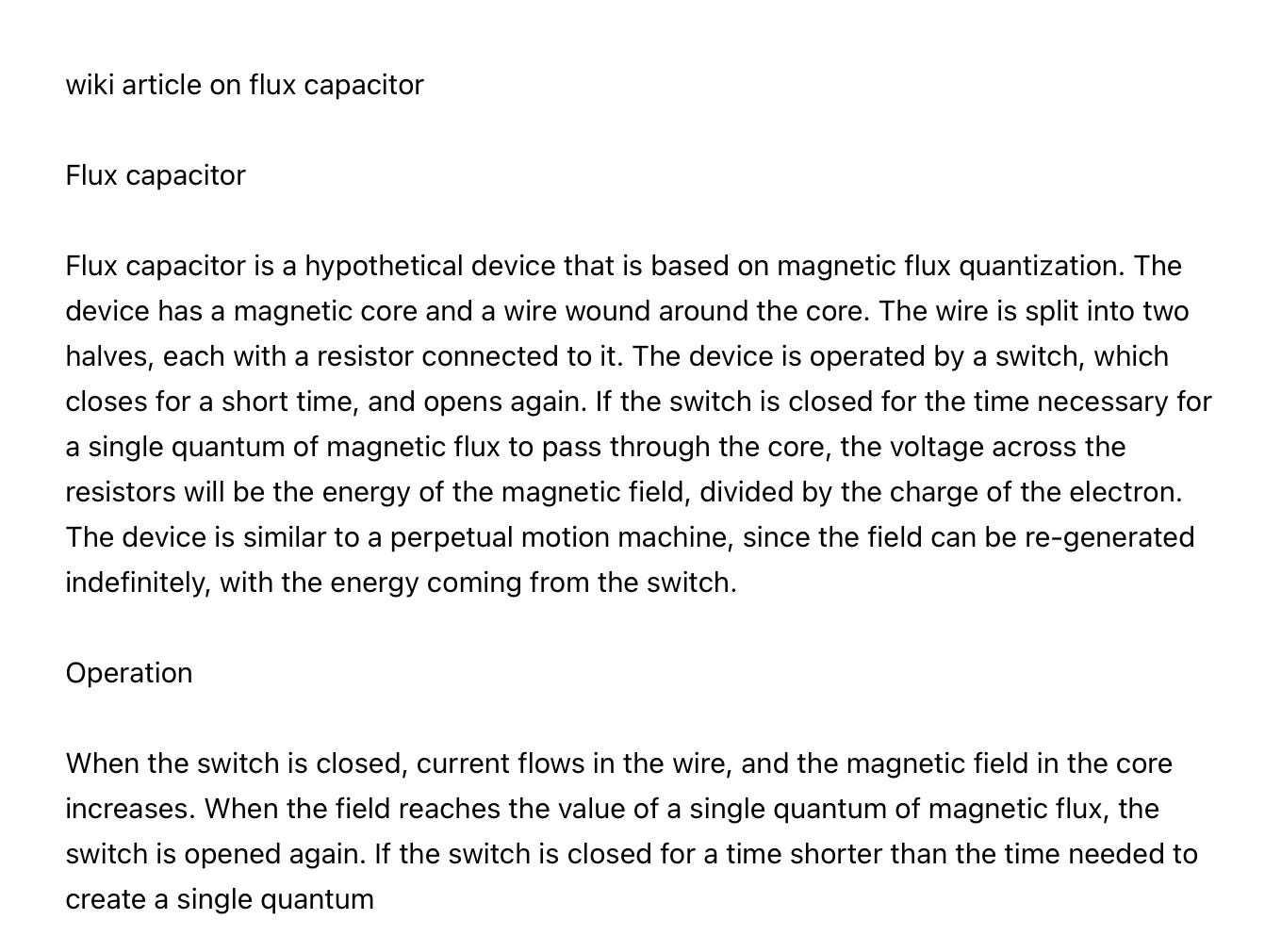

Minutes after I noticed Chapman’s post, my friend Andrew Sundstrom began flooding me with a stream of examples of his own, too good for me not to share (with his permission):

Pitch-perfect and utterly bogus imitations of science and math, presented as the real thing. (More examples: https://cs.nyu.edu/~davise/papers/ExperimentWithGalactica.html)

Is this really what AI has come to, automatically mixing reality with bullshit so finely we can no longer recognize the difference?

No one disputes the fact that Yann LeCun is a praiseworthy deep learning pioneer and expert. But, in my opinion, LeCun's fixation on DL as the cure for everything is one of the worst things to have happened to AGI research.

Deep learning has absolutely nothing to do with intelligence as we observe it in humans and animals. Why? Because it is inherently incapable of effectively generalizing. Objective function optimization (the gradient learning mechanism that LeCun is married to) is the opposite of generalization. This is not a problem that can be fixed with add-ons. It's a fundamental flaw in DL that makes it irrelevant to AGI.

Generalization is the key to context-bound intelligence. My advice to LeCun is this: Please leave AGI to other more qualified people.

The LLM charade continues... hopefully not for long.