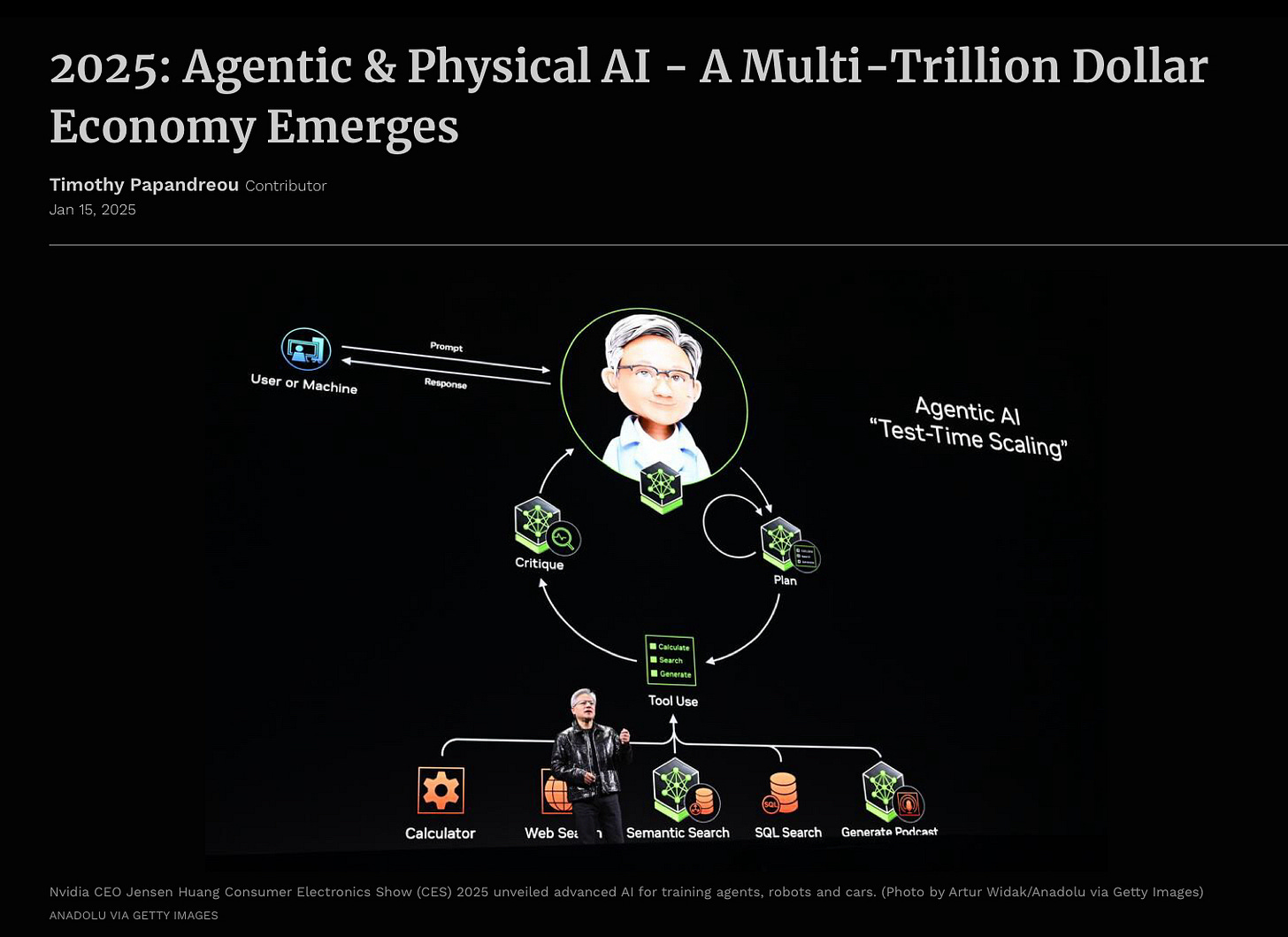

Agents are practically all that anyone in AI can talk about these days, and the hype is absolutely huge. Headlines like these are everywhere:

and

The funny thing is, this time I think the hype is right — at least in the long term.

I do genuinely think we will all have our own AI agents, and companies will have armies of them. And they will be worth trillions, since eventually (no time soon) they will do a huge fraction of all human knowledge work, and maybe physical labor too.

But not this year (or next, or the one after that, and probably not this decade, except in narrow use cases). All that we will have this year are demos.

And those demos won’t work all that reliably.

One of the first demos, a very minimal agent released yesterday, was OpenAI’s “task” system, which lets you schedule ChatGPT actions, a far cry from being able to do literally anything you might ask.

The thing about scheduling is that it needs to work reliably. And it won’t.

Already, in fact, we are hearing reports that these agents are “extremely brittle”, fouled up by the pesky details of reality. Here’s one of the first reports, h/t

:§

We can safely expect many, many more reports like these throughout the year, akin to the endless and still ongoing reports of hallucinations over the last couple years. That is in part because you can’t build reliable agents unless you can solve the hallucination problem, and there is still no principled trustworthy answer to that problem.

More broadly, you can’t build agents unless you have common sense, sound reasoning, a tight grounding in reality, and systems that reliably do what you ask of them, with enough theory of mind to infer your intentions.

If that’s what you want, generative AI just isn’t the ticket, because those are exactly the sticking points that have stymied it over and over again.

§

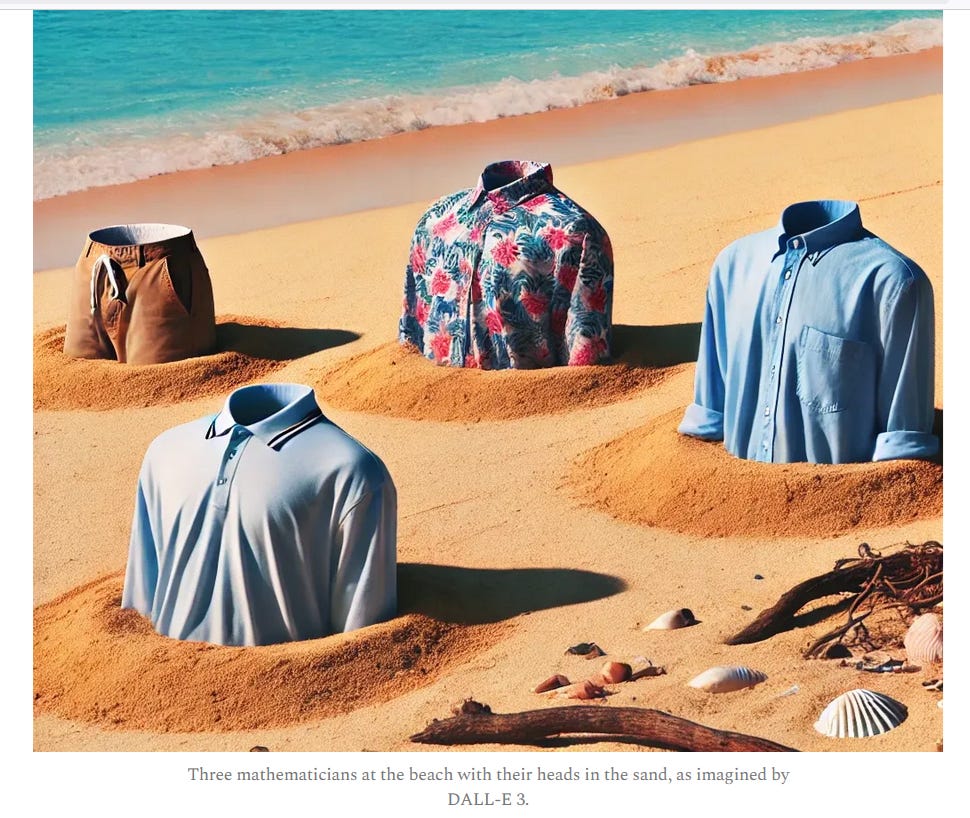

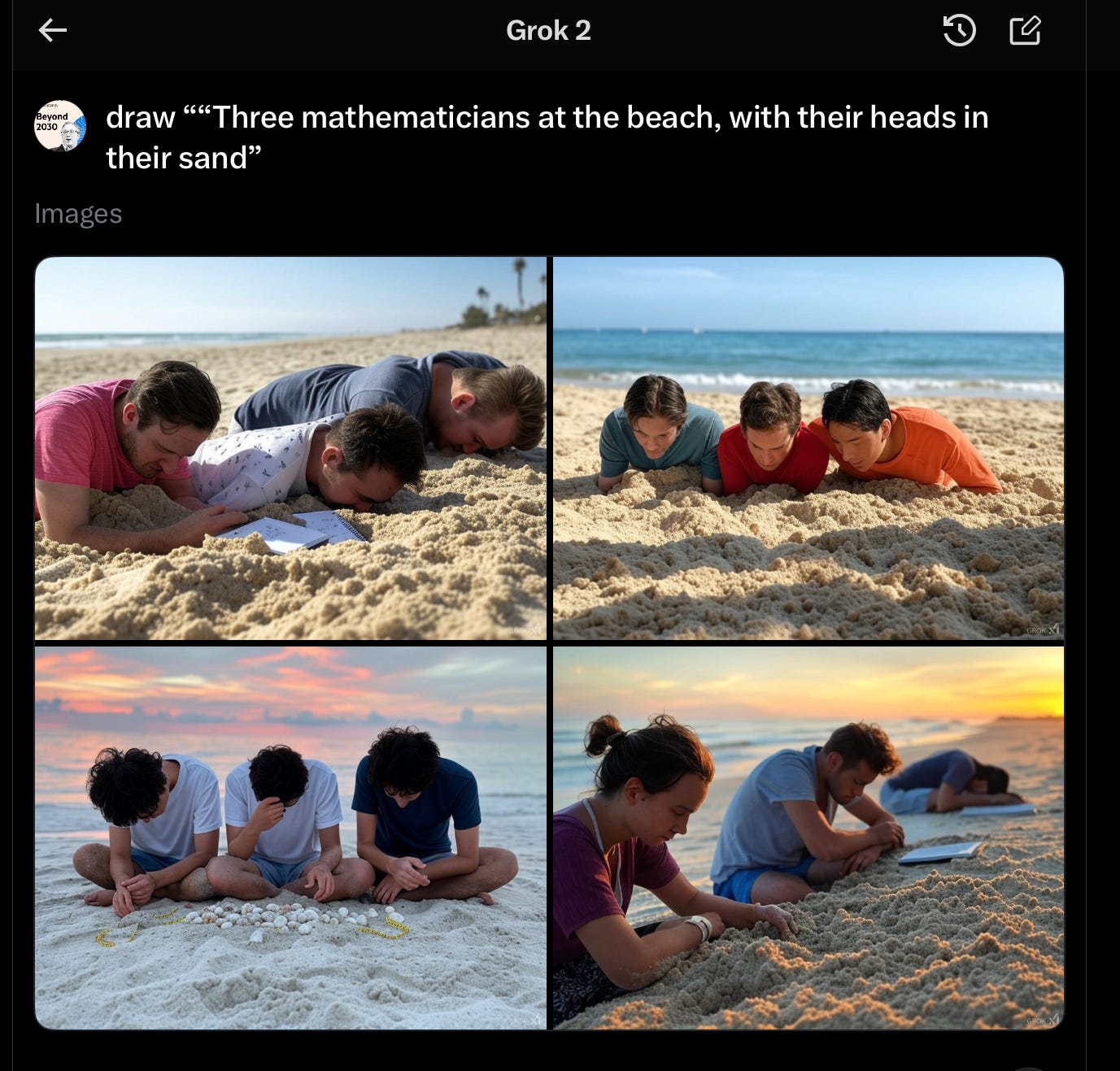

Perhaps no picture has ever captured those sticking points more than this one that Ernest Davis sent me this morning, a Dall-E image that just isn’t quite what most of us would have expected¹ from the prompt:

If that image, created by what you might call a drawing agent, isn’t a metaphor for the field of AI circa 2025, I don’t know what is.

Gary Marcus is a scientist, author, and entrepreneur who has almost literally gone blue in the face trying to warn people that LLMs are not the AIs we are looking for.

GenAI is always stochastic; some efforts at this prompt will work, some will not. Some prompts will make similar errors (e.g. wrong number of people, or unexpected beheadings), some won’t. The same will be true of AI agents: some prompts will work some of the time, and some of the time they will give strange results. It will be difficult to know in advance what to expect. Your mileage will vary.

"Nvidia's biggest customers delaying orders of latest AI racks, The Information reports"

https://finance.yahoo.com/news/nvidias-biggest-customers-delaying-orders-153930803.html

The report is about hardware problems, but an easily falsifiable prediction is that the vast overprovisioning of redundant AI training datacenters worldwide will peak in 2025, similar to how mass-production factories were overbuilt in the 1920s and Internet-related infrastructure was overbuilt in the 1990s.

This will not end well, and the upcoming crash will be dubbed the "AI Bubble".

The other problem with agents, besides hallucinations, is **interoperability**. This is now the domain of communication, not just computing, and standards and protocols matter, as in the 7-layer OSI model. We need an "OSI model" for layers 5-6-7. There are developments there for sure, but the companies will need to collaborate a la IEEE rather than compete winner-take-all, as is typical in computing.