Perhaps no week of AI drama will ever match the week in which Sam got fired and rehired, but the writers for the AI reality series we are all watching just don’t quit.

For one thing, the bad press about Sam Altman and OpenAI, who once seemingly could do no wrong, just keeps coming.

• The Wall Street Journal had a long discussion of Altman’s financial holdings and possible conflicts of interest [“The Opaque Investment Empire Making OpenAI’s Sam Altman Rich: Many companies backed by CEO do business with the ChatGPT maker and benefit from the AI boom driven by the blockbuster startup, raising questions of conflicts”]

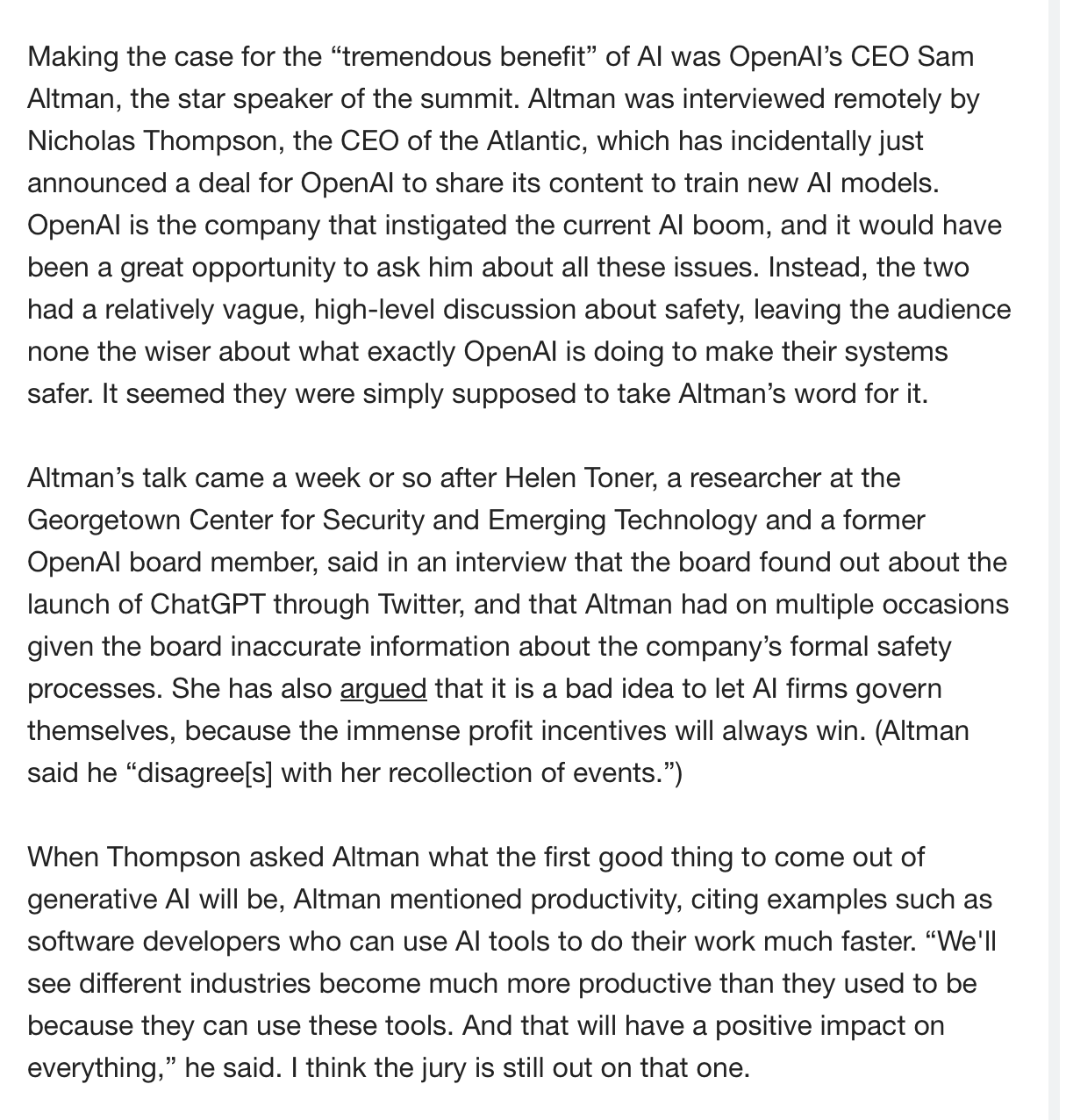

• Melissa Heikkilä at Technology Review more or less panned Altman’s recent fireside chat at AI for Good.

• Sam himself let slip, as part of his rather unconvincing rebuttal of Helen Toner’s allegations, that OpenAI had finished training GPT-4 by November 2022; when I shared this on Twitter, internet sleuths reminded me that OpenAI had in fact shown GPT-4 to Bill Gates in August 2022. Which means (it suddenly connected for me) that it has been almost two years since there’s been a bona fide GPT-4-sized breakthrough, despite the constant boasts of exponential progress, with no real solution to hallucinations, unreliability, poor reasoning, or shaky alignment, even though the field has invested more than $50B in efforts to power beyond GPT-4.

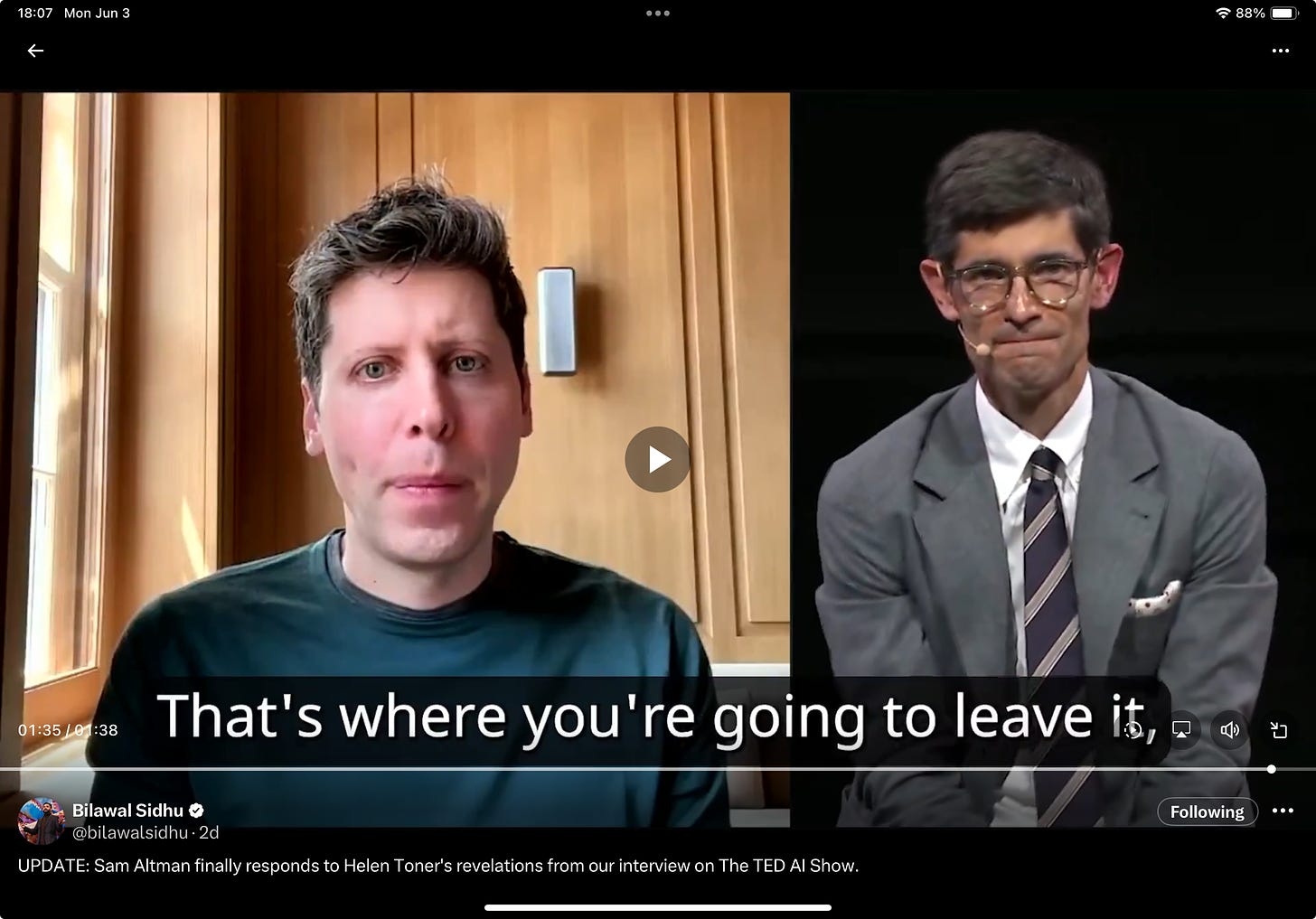

• Speaking of that rebuttal, I recommend watching his frowny nondenial for yourself. Yes, GPT-4 had been trained, but did the board know it was going to be released as a product?

• For bonus points, watch his eyes when he discusses Scarlett Johansson. As one person on X put it, “What I hear is someone that has a hard time with the truth.”

• In a separate Wall Street Journal piece, “The AI Revolution Is Already Losing Steam,” Christopher Mims (who quoted me) echoed much of what I have been arguing here, writing that “The pace of innovation in AI is slowing, its usefulness is limited, and the cost of running it remains exorbitant.”

• Meanwhile, Paris Marx echoed my own feelings about Kara Swisher’s apparent lack of objectivity around Altman and went much further. As he summarized it on Instagram, “When Sam Altman was ousted as CEO, Kara Swisher pushed a story that framed him as the victim of a board captured by AI doomers. But as more detail has emerged about Altman’s lies and ‘toxic’ conduct, it’s become clear she was a conduit for his narrative.”

§

All that said, not all the news was about OpenAI.

There was a secondary plot, too, as the brawl between Musk and LeCun escalated. I haven’t followed all of it, but in the breaking-gossip department, Yann LeCun just pushed Elon Musk to the point of unfollowing him.

Who could have seen that coming? 🤷‍♂️

Tune in next week (or maybe just tomorrow) for more in As the AI World Turns.

Gary Marcus wishes AI these days were more about research than money, politics, and ego.

Brian Merchant, author of Blood in the Machine, a book about the Luddites, wrote an interesting piece about how AI can be shitty and still take over jobs: it doesn't have to be good; the bosses only need to believe, correctly or incorrectly, that it is good enough. Graphic design and creative writing being replaced by GenAI are examples. The result is shitty, but it's a lot cheaper. He draws interesting comparisons with the rapid-growth phase of the Industrial Revolution. https://www.bloodinthemachine.com/p/understanding-the-real-threat-generative

In short, there will be productivity gains, but apart from agility, we pay for them in quality and get a 'cheap' product as a result. The outcome of GenAI adoption will be that humanity goes 'cheap'. That sounds like a worthwhile insight.

I teach an introduction to artificial intelligence course to non-technical Lehigh students and to CPAs in a continuing-education program, and I always start by asking, "What is your definition of intelligence?" For humans, it is definitely not a direct jump from data to intelligence, which is what all GPT systems do. Human intelligence has a middle step, going from data to information to intelligence, which involves much more analysis, mostly of the meaning, trustworthiness, and accuracy of the data. I truly believe no generative language model can do this without constant human involvement, and that generative language models as the basis for AI will be obsolete within a few years as new approaches based on neuron processing become available to create information from data. There are a number of examples where this is starting to happen, at startups and inside Google, that I would love your thoughts on.