AI risks, short-term and long—and what we need to do about them

Finding common ground with Geoff Hinton

Geoffrey Hinton and I are not known for getting along, but I sent him a word of appreciation on Monday when he spoke up about AI risk after stepping down from Google.

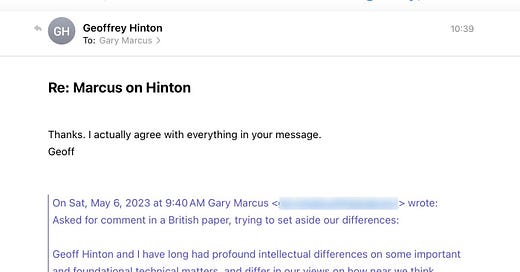

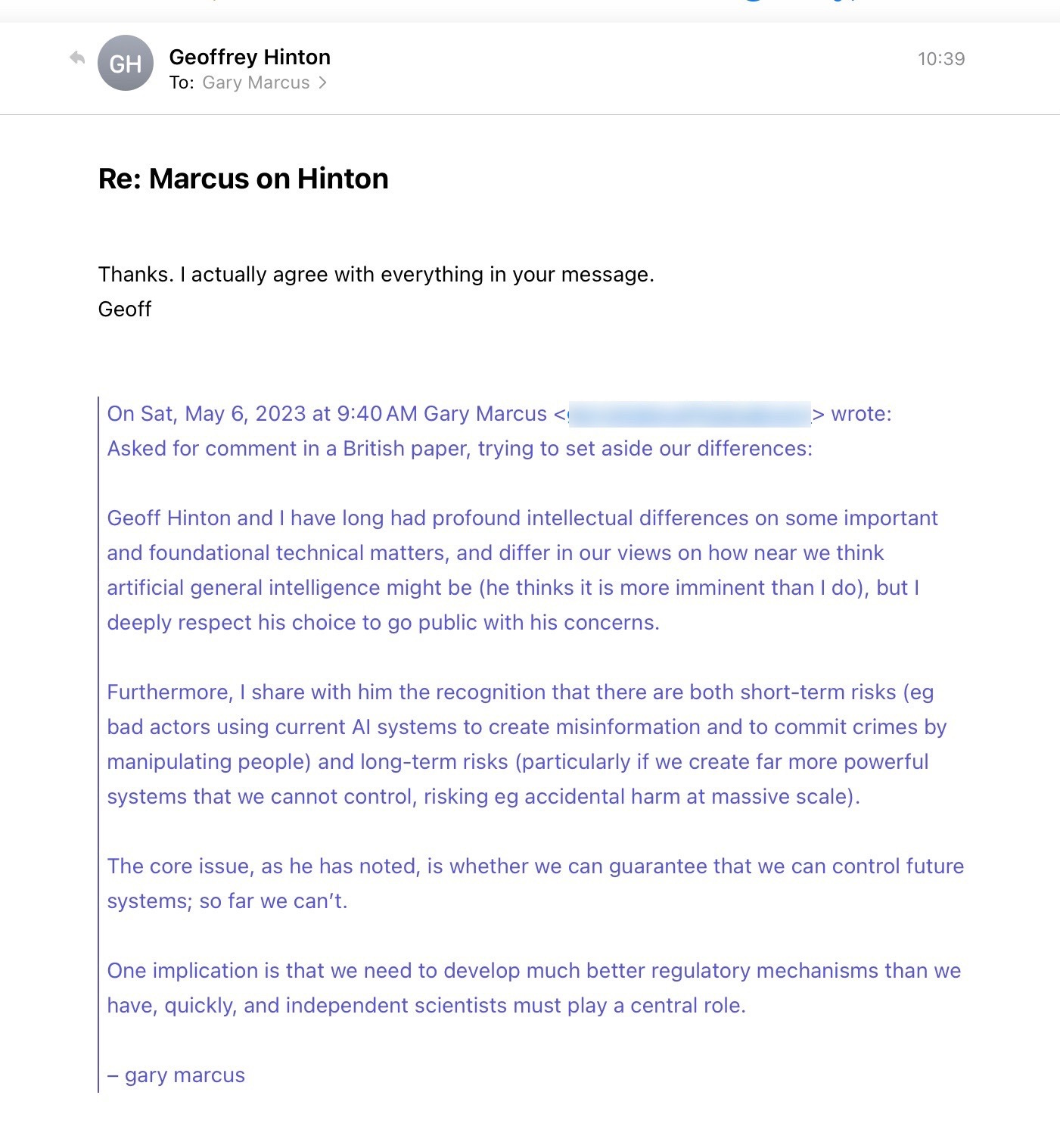

A few days later, The Daily Mail asked me to comment on Hinton's decision to step away from Google and what it meant. I compiled my thoughts, trying to set aside our differences and emphasizing that in many important ways I felt Hinton and I had converged.

And then … I passed them along to Hinton.

Here's his reply, with my comments underneath. As you will see, we still differ in our views about the technical details, but we have very much converged on a picture in which there is serious risk, both short-term and long-term, and an equally serious need to have independent scientists at the table:

Gary Marcus (@garymarcus) is a scientist, bestselling author, and entrepreneur, deeply concerned about current AI but really hoping that we might do better. He is the co-author of *Rebooting AI* and host of the podcast *Humans versus Machines*.

The fact that important scientists with divergent views share deep concern about where AI is headed is both concerning… and inspiring. Thank you both for continuing to speak up publicly.

Bad actors are much more dangerous, leading to an internet filled with uncertainty about the origins of the information we consume. Presumably software will be developed to distinguish human from nonhuman origins, but the free market being what it is, such software may be priced at levels only well-off people can afford. The result: a trustworthy, reliable internet for some and a junked-up internet for the rest of us. A two-tier internet.