Are social media and coordinated misinformation campaigns fomenting extremism?

Probably, yes.

Some disconcerting data. Mainly about anti-semitism, as it happens, but likely not unique.

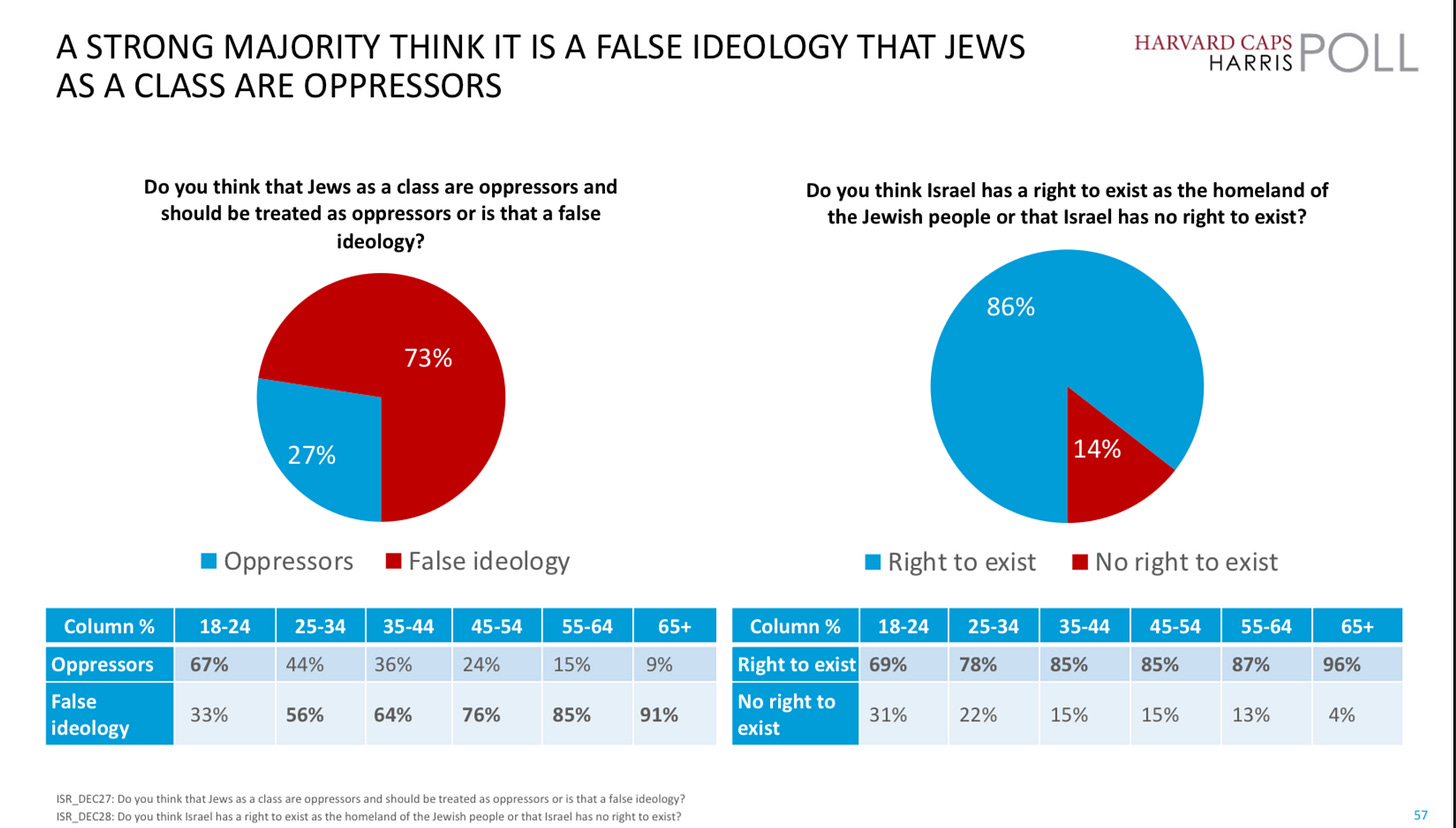

From the December Harvard CAPS poll, we see a huge trend towards a large number of (not all) younger people seeing Jews as oppressors (18-24 year-olds column in left panel below).

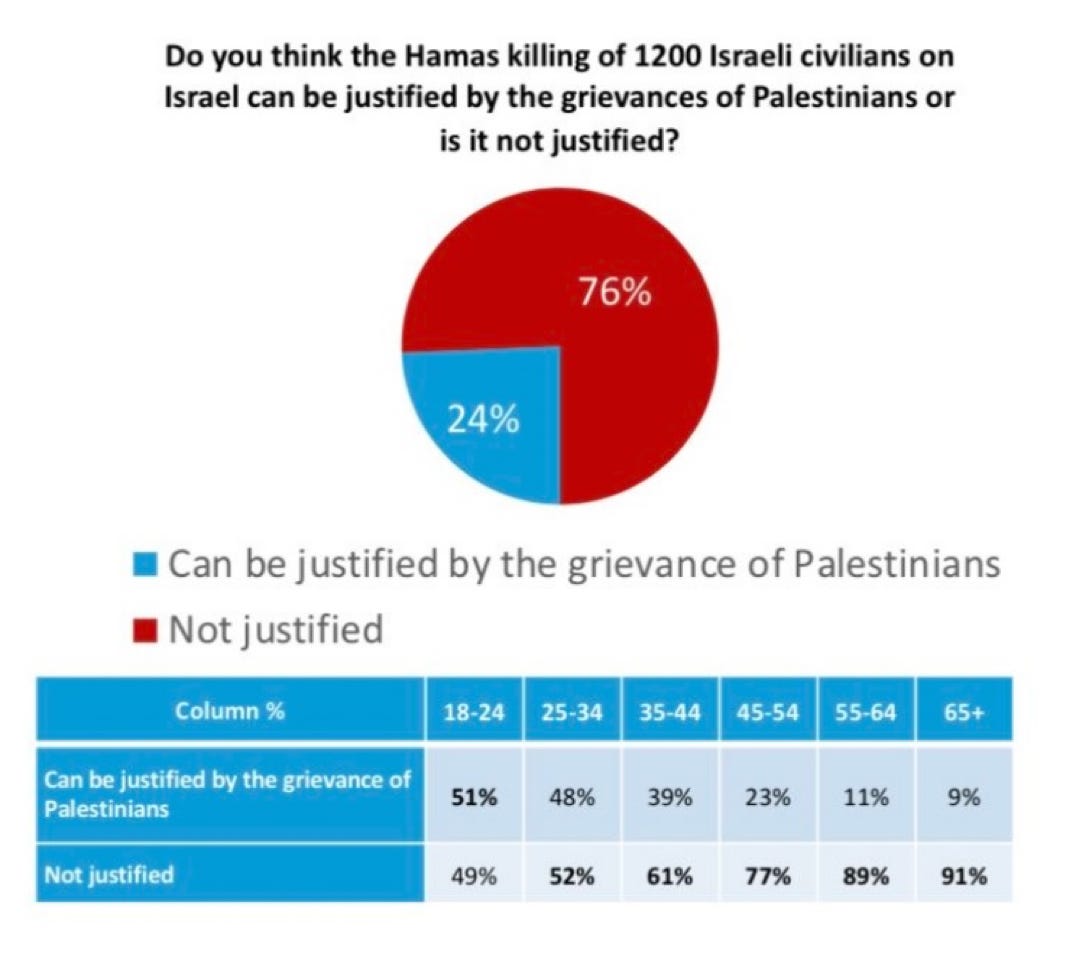

A related poll by the same Harvard CAPS consortium earlier in the year, reported on October 19, showed that younger people believe, far more than older people (who use X and TikTok less actively), that the massacre of Jews on October 7, which took place before the Gaza invasion, was justifiable:

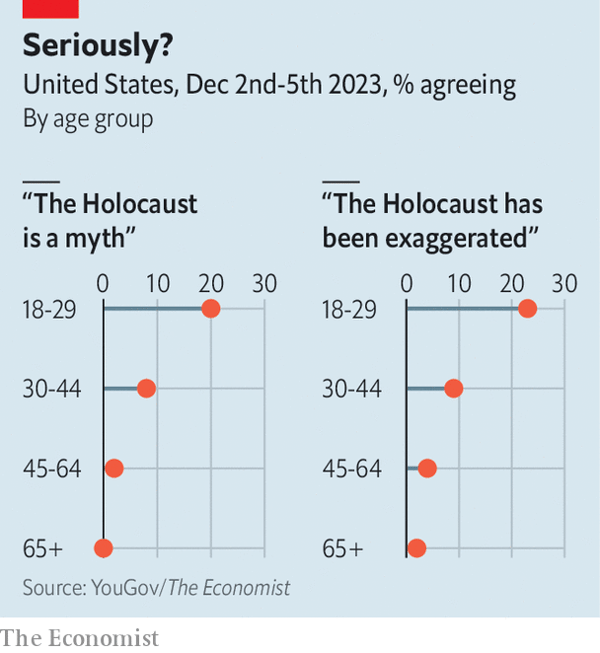

This one, from The Economist, shows the same age trend:

§

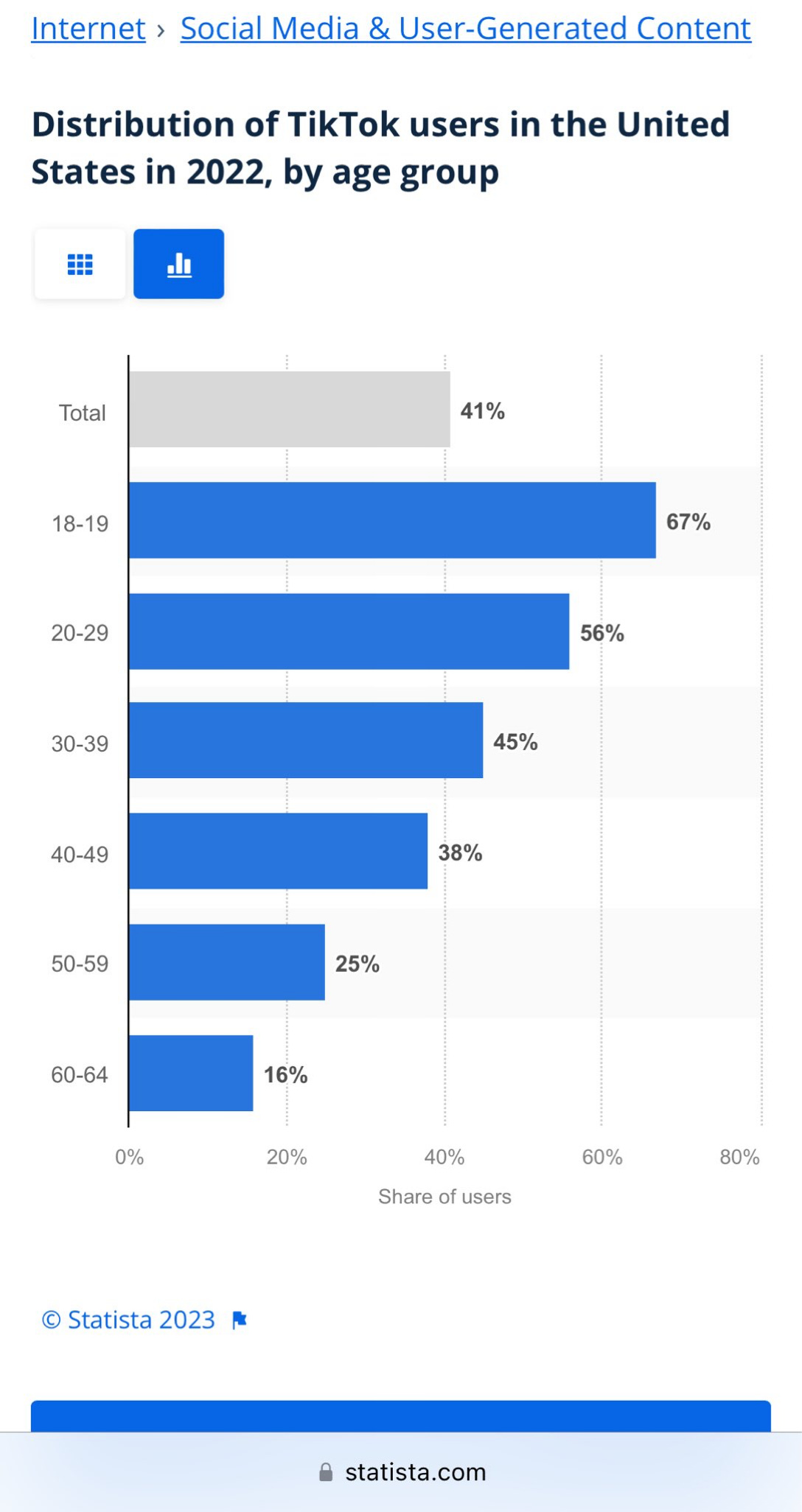

At least in the early part of the conflict, TikTok posts overwhelmingly sided against Israel:

News site Axios reported this week that since October 16, there have been 210,000 posts using the hashtag #StandwithPalestine and 17,000 using the hashtag #StandwithIsrael throughout the globe. Citing TikTok’s data, the news site stated that 87 percent of the audience makeup for #StandwithPalestine posts is under the age of 35, compared to 66% of the audience makeup for #StandwithIsrael posts. There are over 1 billion TikTok users worldwide.

A December 14 article in the Washington Post by Will Oremus reports that “Bigots use AI to make Nazi memes on 4chan. Verified users post them on X.” and that “AI-generated Nazi memes thrive on Musk’s X despite claims of crackdown.”

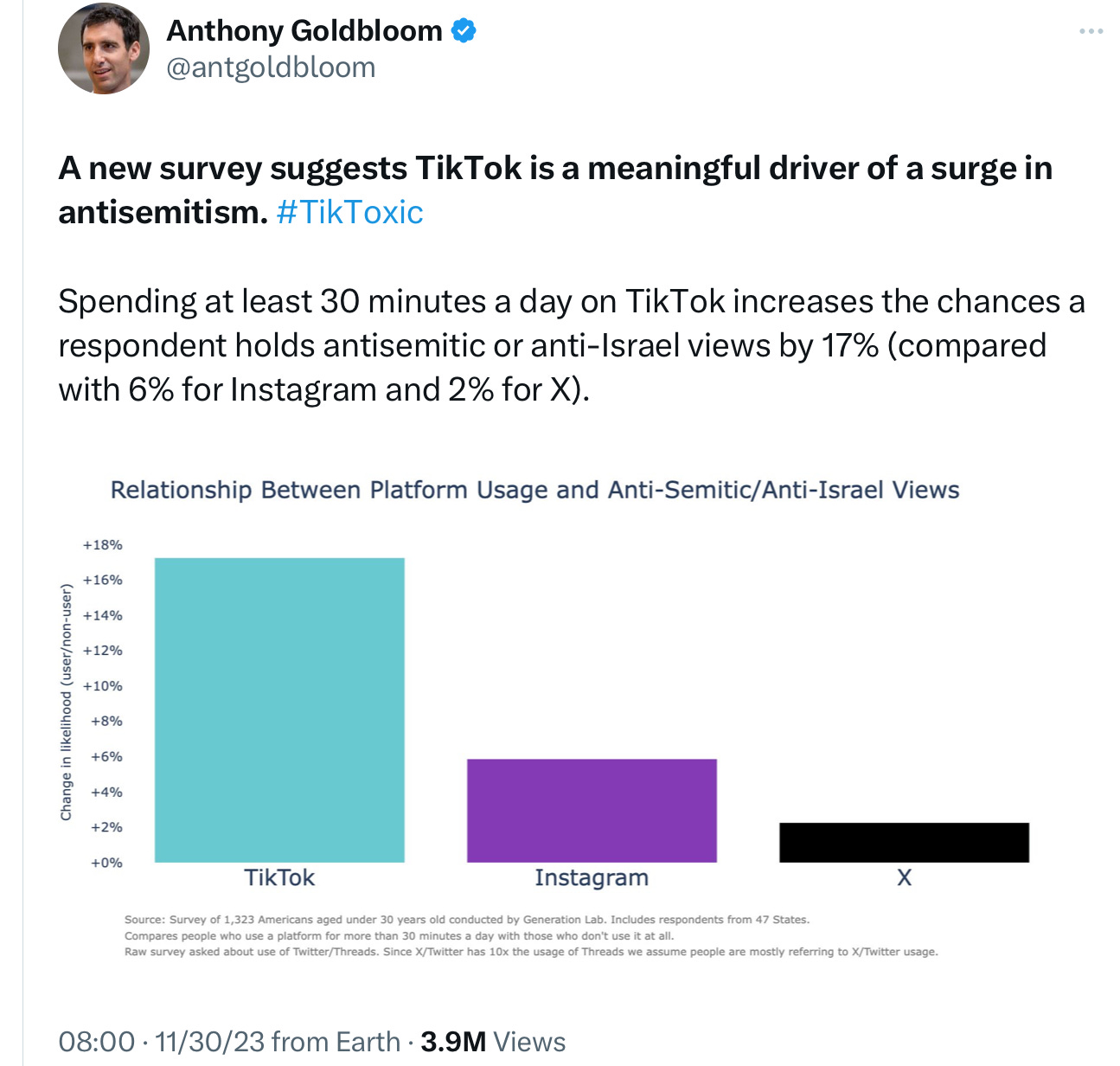

A survey by Generation Lab, publicized by Kaggle cofounder Anthony Goldbloom, points to TikTok as particularly influential; the study is of course merely observational, and not a causally controlled experiment, but is still disconcerting, especially in conjunction with the rest of the data in this essay:

§

How do all the data on age, from Harvard’s CAPS and the Economist, showing sharply different values and perceptions in 18-24 year-olds, line up with social media use?

Frighteningly well.

Consistent with all this, a Times story earlier this week focusing on a poll of Biden’s approach to Gaza reported that “Those who identify as regular users of TikTok were the most adamant in their criticism”. One would really like to see a follow-up survey on views around anti-semitism, separating TikTok users from non-users. How do youths who use TikTok (or use it regularly) differ in their views from those who don’t? (And the same for people of different age groups.) But prima facie the data really don’t look great.

Causality is hard to prove, but the circumstantial evidence is alarming.

§

The thought has crossed my mind that some of these social media posts may be deliberately manufactured by foreign powers such as Russia, which appears to be agitating for Hamas, as part of a classic active measures ploy to destabilize the United States:

The Russian government, which presumably backs Iran, which presumably backs Hamas, may be tearing the United States apart, as part of one of its most successful active measures campaigns in history.

It is not inconceivable that AI-generated propaganda could be significantly intensifying the scale and impact of such a campaign.

§

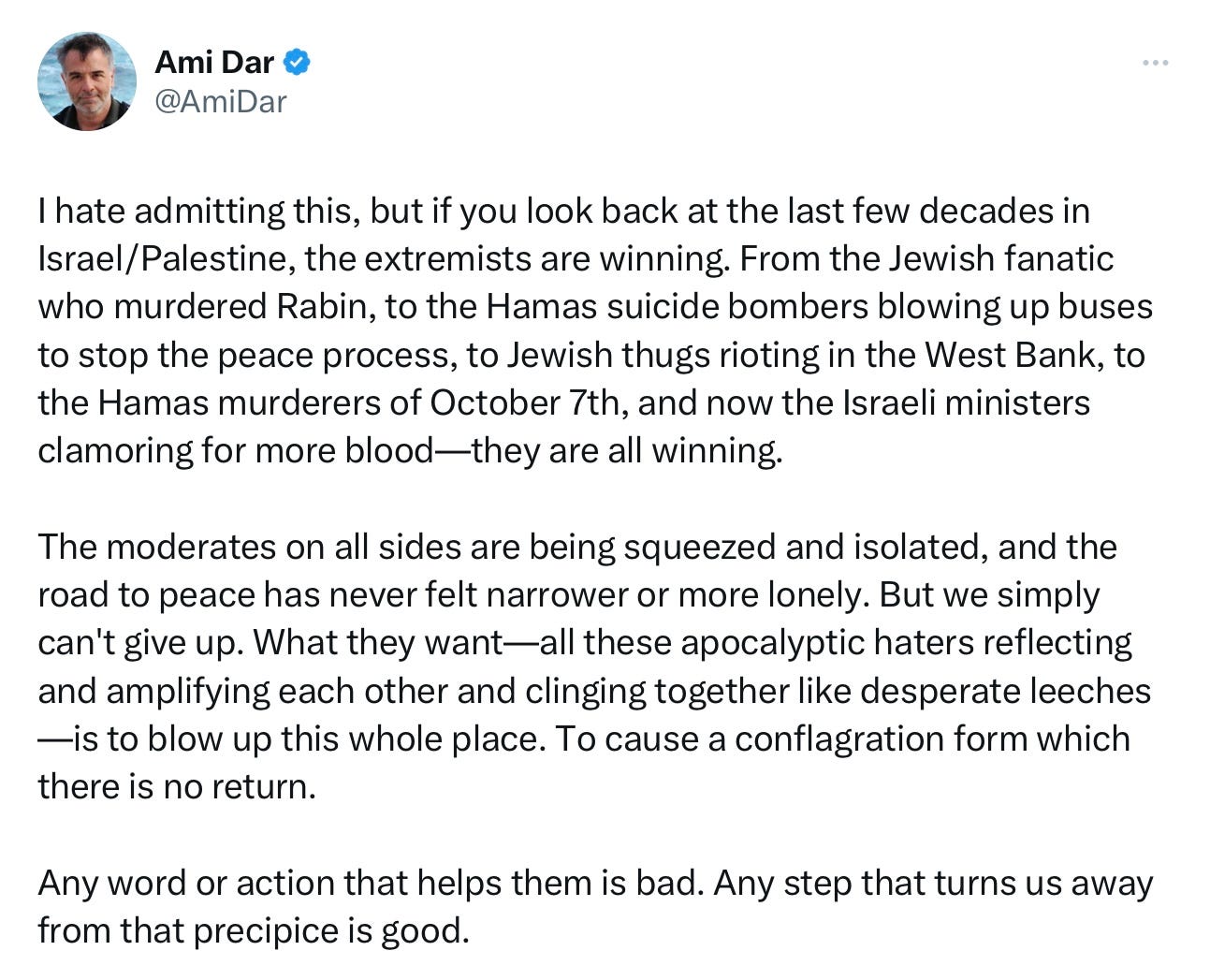

Whether or not foreign agents per se are driving the rise in antisemitism, a rise in extremism – on both sides – is helping no one.

Some of the reports on TikTok are of course true; there have been atrocities and bad behavior on both sides. What social media does is weaponize those atrocities and bad behaviors to amplify outrage, by presenting them without context and history. And the most outrage-inducing incidents, the most graphic and gory and moving, presumably wind up with the most views.

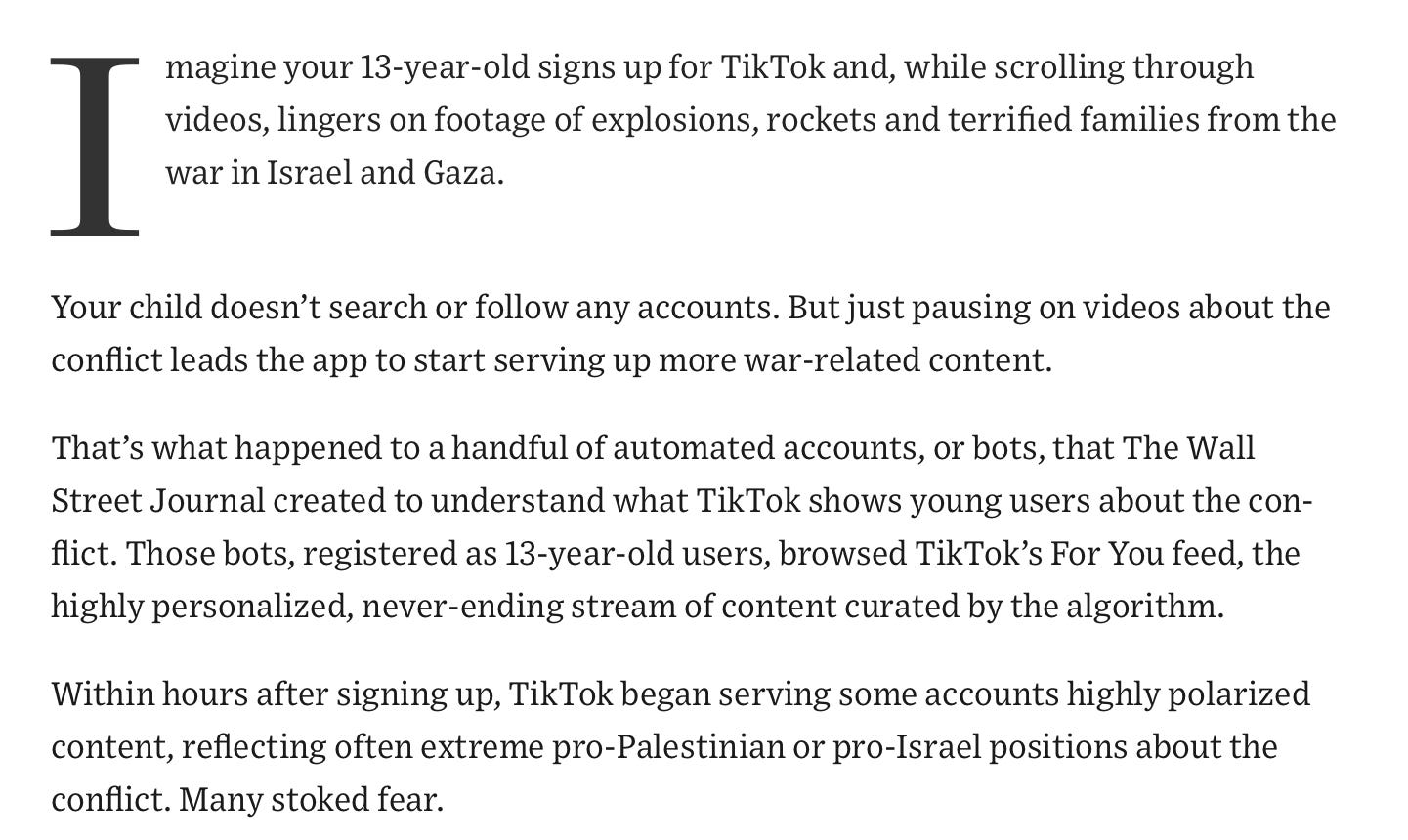

The viewer ends up seeing a tiny slice of the world, the most horrible behaviors from whatever side they are outraged by, and so the outrage escalates. Stereotypes are amplified, by exploiting a human cognitive bias towards vivid data over systematic data. The Wall Street Journal illustrated this graphically today, in an experiment they did with bots:

All in all, the WSJ tested 8 bots; the pro-Palestinian videos massively outnumbered the pro-Israel videos, 2,851 to 699 (1,270 conflict-related videos were neutral). More than that, polarization was fast:

“Some of the accounts quickly fell into so-called rabbit holes, where half or more of the videos served to them were related to the war. On one account, the first conflict-related video came up as the 58th video TikTok served. After lingering on several videos like it, the account was soon inundated with videos of protests, suffering children and descriptions of death.”

TikTok has no interest in showing anyone a balanced or statistically representative view of the world. (I don’t happen to have the data but TikTok is probably making Jews angrier at Palestinians, too; the amplification of hate goes in all directions.)

The algorithm may be fine when it is directed at dancing bears, but when directed at the daily news, it is likely doing serious damage to our ability to co-exist as humans.

§

Needless to say, this is not what we need now. I give the last words to a voice of reason, Ami Dar:

Switching off social media would be a start.

Gary Marcus is deeply concerned about what social media is doing to our society.

It looks like social media is trying to perform some of its tricks in your comments thread here, Gary. You poked the bear!

Some of what you touch on, specifically AI powered disinformation and how the Russians will use it to weaken the US, are explored in detail here. Gary, you seem to be one of the exceptions; but I don't think people have really grasped the potential for LLM AI content to be weaponized against the US on a large scale. But I'm pretty sure everyone in America will "get it" by the end of the 2024 elections. https://technoskeptic.substack.com/p/is-ai-an-opportunity-for-writers