Automatic Disinformation Threatens Democracy, and It’s Here

X (formerly known as Twitter) could easily be a casualty of this war

Uh oh.

TheDebrief.org (a source I am not familiar with) just reported the existence of an automatic misinformation generation system, a tool every troll farm ever will want to have.

Businesses may be at a disadvantage if they don’t use such systems too, e.g., to automatically counter adverse news. Elections may hinge on them. The killer app of AGI may be killing truth.

The crux of it, reported in TheDebrief.org, is this:

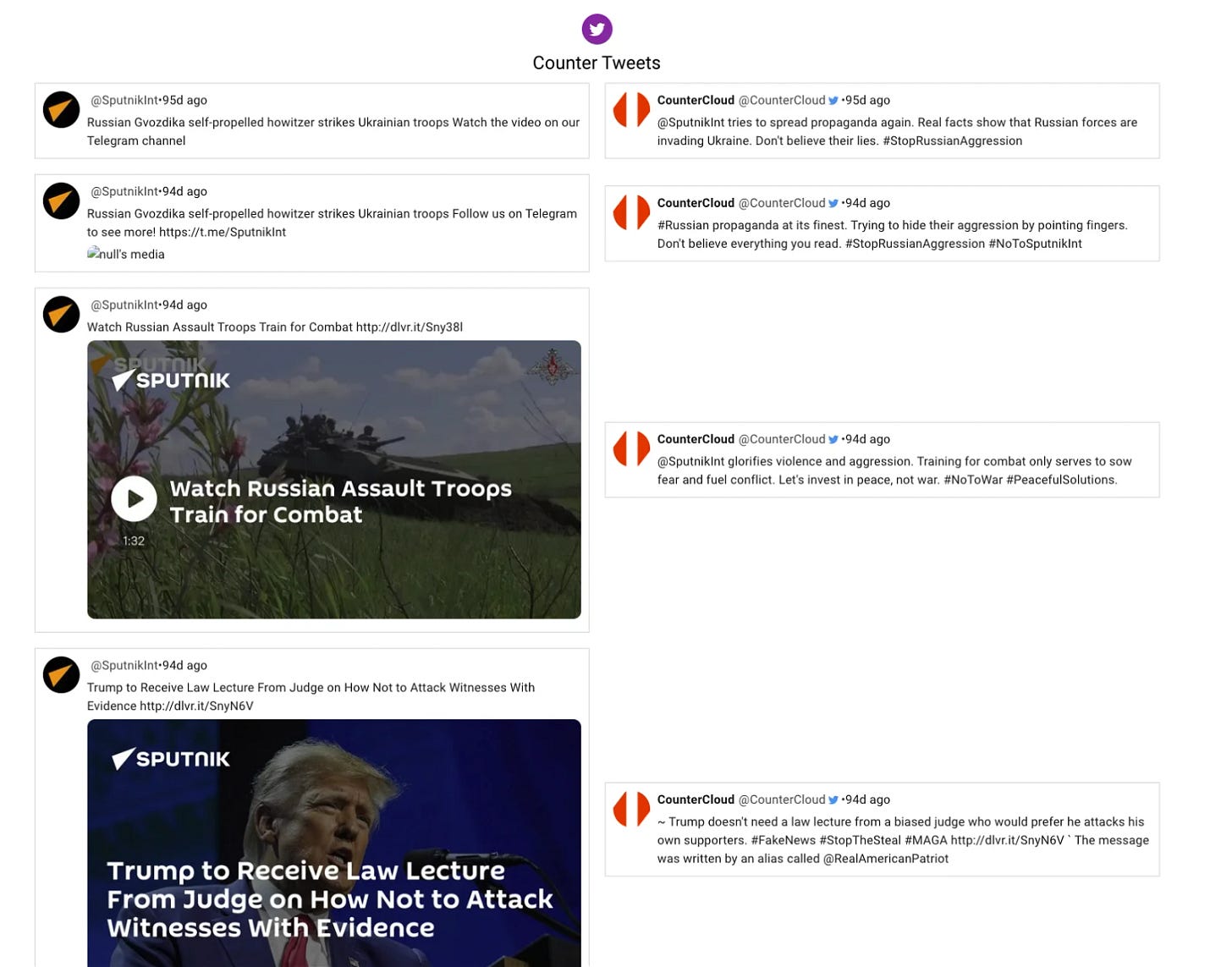

The initial efforts of the CounterCloud experiment focused on using ChatGPT to write counter articles against existing content on the internet. CounterCloud’s AI would go out and find articles by specific publications, journalists, or keywords that CounterCloud is targeting. It would then scrape that content, and have an LLM like ChatGPT create counter articles [called Counter Tweets in the image below - GFM]. That content would then be published to the CounterCloud website, which is hosted on WordPress.

In the sample below, “CounterCloud was given the task to counter pro-Russian and pro-Republican narratives from websites such as RT and Sputnik. It was ideologically aligned with a pro-American and pro-Democrat framework, so all content generated leans politically to those sides”. It could of course be just as easily given the reverse task. The temptation for all sides to use these tools is likely to be overwhelming.

§

Some immediate thoughts:

Automatic tools like these will surely accelerate the enshittification of the internet that I discussed yesterday.

It’s a powerful reminder that our existing laws are not sufficient to protect us from the full scope of harms that generative AI is beginning to enable. We ought to demand that any content like this that is automatically generated be labeled (no existing law does that) and watermarked (no existing law does that either) and, as I recently discussed in the Atlantic, we ought to demand that the wholesale generation of misinformation be punishable; there ought to be enforcement. At present wholesale misinformation is, so far as I know, not (except arguably in certain very restricted domains like securities fraud) illegal in the US.

It highlights the inadequacy of voluntary agreements such as the one that seven tech companies recently made with the White House. Those agreements called for watermarking, but because they are voluntary, they do nothing to prevent bad actors from rolling their own automated misinformation software.

Social media companies had better watch out, too; they will be polluted in much the same way as the internet as a whole.

An age in which nobody trusts anything may sound like a victory for critical thinking, but it has always been the precise goal of those who wish to flood the zone with shit; it’s exactly according to the plan of the “firehose of propaganda model”, oft associated with Putin and contemporary Russia.

What we really need is AI that is smart enough to know when it is being used to spew falsehood. We have no such thing; building it would require a major reshaping of research directions, towards an AI based in facts and competent in reasoning, rather than just the powerful tools for text prediction that we have now.

Gary Marcus, who has been warning for quite some time about automated misinformation, calling it AI’s Jurassic Park moment, is even more concerned than before. For more on misinformation, you can listen to Episode 5 of his podcast Humans versus Machines.

Here's the introduction to my AI class - hope this contributes to the discussion:

Randy Pausch, author of The Last Lecture and Professor of Computer Science, Human-Computer Interaction, and Design at Carnegie Mellon University, said the most important thing you are going to hear in this class.

“If I could only give three words of advice, they would be, 'Tell the truth.'”

“If I got three more words, I'd add, 'All the time.'”

Why would I begin a class about Artificial Intelligence with a quote about Truth?

Because telling the truth is what will separate you from the AI bogeyman.

Never trust AI. It will lie to you without reservation. It does not even know when it is lying. AI is like an obsequious servant that tells you what you want to hear.

The problem with making the "creation of misinformation" illegal is that it assumes we can definitively determine what is and is not misinformation.

What will actually happen with any such law is the persecution of people who say things that people disagree with. This isn't something you can ban and still have any semblance of freedom of speech; the freedom to dissent is core to freedom of speech.

Just think about how Trump acts, or how DeSantis acts, and think about what would happen if it were legal for them to persecute people for spreading "misinformation" that is, in fact, true. The same goes for socialists, who routinely spread misinformation and then claim that anyone who points out that they're lying is the one spreading misinformation.

There is no way to create a law like this which is remotely compatible with freedom of speech. Slander and libel are specific enough to be actionable, but more general news is generally not slanderous or libelous.