BREAKING: LLM “reasoning” continues to be deeply flawed

A new review underscores the breadth of the problem, and shows that close to a trillion dollars hasn’t changed that

As you may know, I have been harping on reasoning as a core challenge for deep learning for well over a decade, at least since a December 2012 New Yorker article:

“Realistically, deep learning is only part of the larger challenge of building intelligent machines. Such techniques lack ways of representing causal relationships (such as between diseases and their symptoms), and are likely to face challenges in acquiring abstract ideas … they have no obvious ways of performing logical inferences, and they are also still a long way from integrating abstract knowledge”

By now many, many others have pounded on the same point.

Silicon Valley’s response has always been to dismiss us. We got this covered, the Valley CEOs will tell you. Pay no attention to Gary or any of the other academics, from Subbarao Kambhampati to Judea Pearl to Ernest Davis to Ken Forbus to Melanie Mitchell to Yann LeCun to Francesca Rossi, and many others (including more recently Ilya Sutskever), who are also skeptical. Ignore the big AAAI survey that said that LLMs won’t get us to AGI. Surely that Apple study must be biased too. Give us money. Lots of money. Lots and lots of money. Scale Scale Scale. AGI is coming next year!

Well, AGI still hasn’t come (even though they keep issuing the same promises, year after year). LLMs still hallucinate and continue to make boneheaded errors.

And reasoning is still one of the core issues.

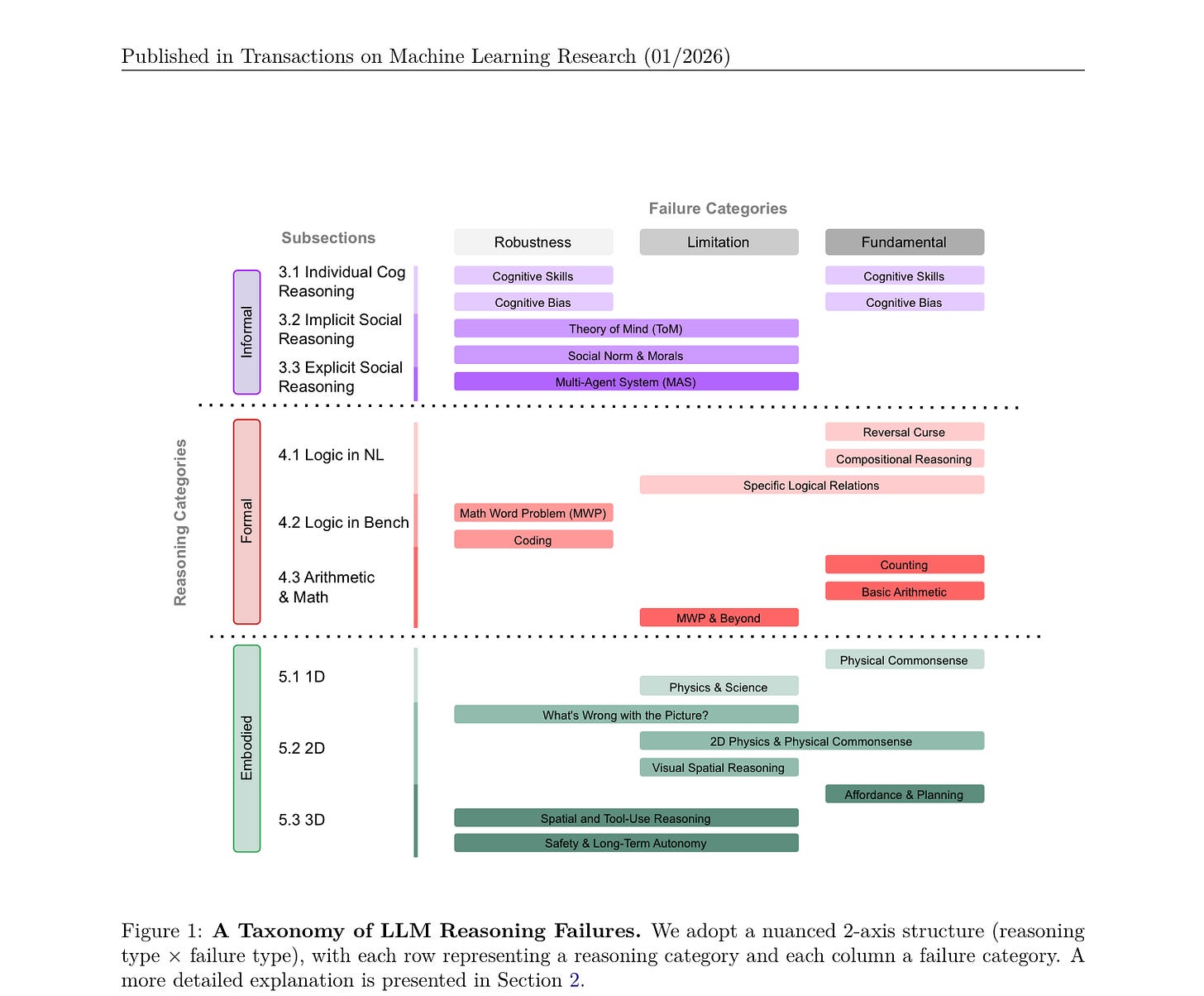

How serious an issue? A new review from Caltech and Stanford, called Large Language Model Reasoning Failures, shows that the latest deep learning systems (even the ones marketed as “reasoning” systems) still have major problems with reasoning.

Lots and lots of problems, everywhere they looked.

The paper is a thorough taxonomy/review, with an excellent bibliography.

If you want a full idea of how far we have left to go (and, implicitly, why LLMs aren’t the right answer), read it. (Disclaimer: one of the paper’s authors, Noah Goodman, worked with me at my first startup, Geometric Intelligence, which was acquired by Uber.)

Silicon Valley can do one of two things. It can continue to dismiss critics and criticism, praying for magical solutions, or it can face reality and start focusing on alternatives to LLMs.

🤷‍♂️

"We don't pray since we're gods but, yeah, magical solutions are coming soon. Like, real soon!"

-- The Soggy Bottom Boys

I'm going to stick my neck out here and say that mainstream tech people will just keep ignoring any paper that doesn't align with the magical thinking that LLMs can reason.