Breaking: Marcus weighs in (mostly) for LeCun

LeCun’s ugly departure from Meta - and why I mostly sympathize with him (but nonetheless doubt he will succeed at his new gig)

Yann LeCun is not a savory character; but he is certainly a character. For the last few days the tech world has been gossiping about the saga of his departure from Meta, in which every aspect of his character, negative and positive, from his arrogance and certitude to his commitment to science, has been on full display. You can get a glimpse of this in the excerpt from his interview with the FT, below.

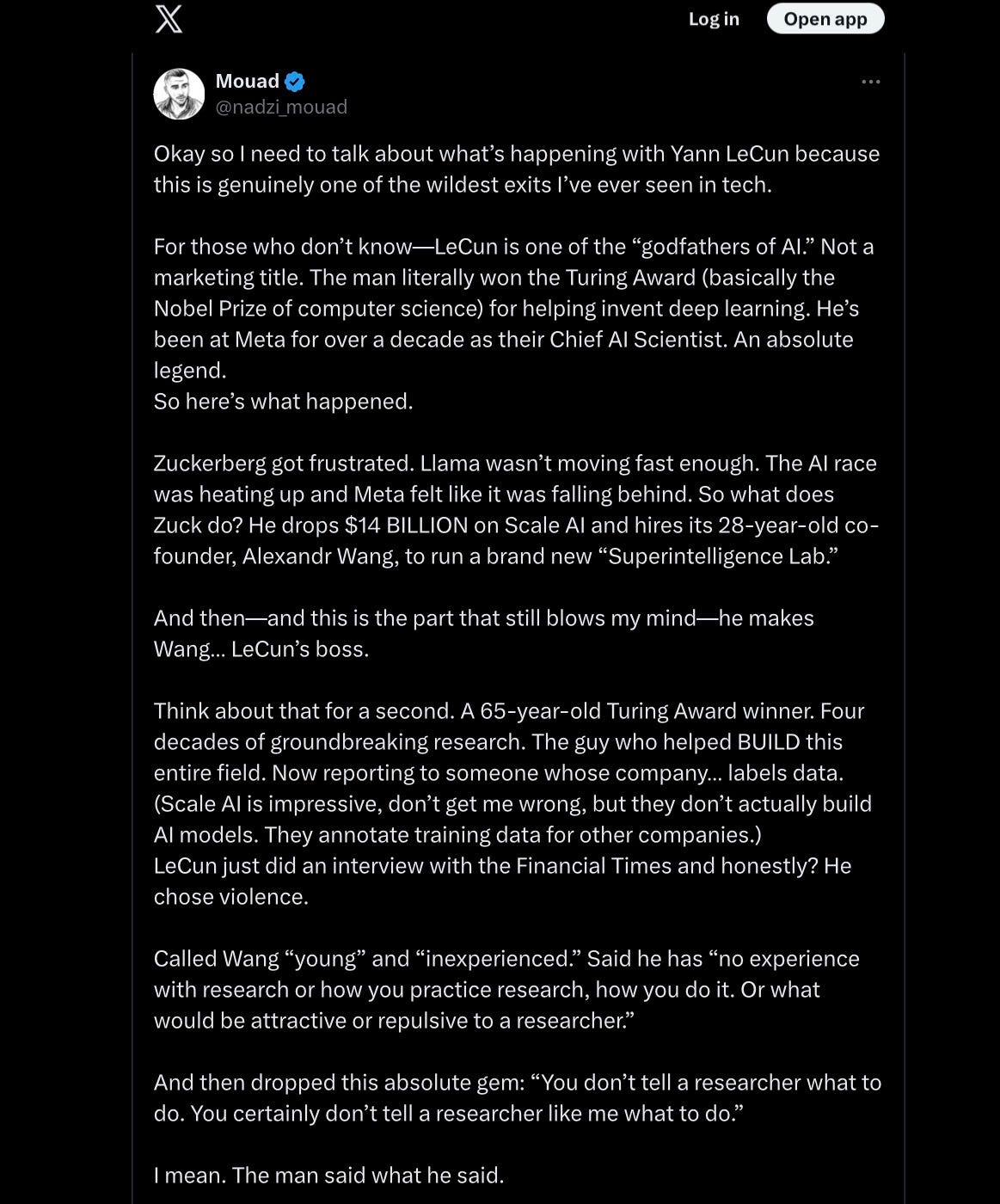

A long tweet below sums up some of the back story, mostly taking LeCun’s side. The funny thing is that (with a huge technical asterisk, discussed below) I, a longstanding critic of LeCun, am basically on LeCun’s side in the fracas.

More power to LeCun for standing his ground. Zuckerberg doesn’t know more about AI than LeCun does, and Wang doesn’t either. Furthermore, what LeCun does best is research. He may not be as original as he pretends to be, but he (mostly) has good intellectual taste, and someone like Wang, known mainly for a somewhat “sordid” data collection company rather than for any particular technical vision, has no business telling LeCun what to think.

And it goes without saying that I too have huge doubts about LLMs. At this point so do literally hundreds of other researchers, even prominent machine learning insiders like Sutskever and Sutton. By sidelining LeCun, Zuck and Wang made it impossible for him to stay. LeCun was right to depart, and, as an accomplished scientist, justified in wanting to pursue his own vision.

And, also to the good, LeCun is very interested in world models, which is something I too have often stressed (sometimes under the term cognitive models) for many years, especially in my 2020 article “The Next Decade in AI.”

So I’m glad that LeCun’s new company will be investigating a new approach. That said, I doubt that it will succeed, for two reasons.

§

The first reason is technical. LeCun talks about world models, but (because of what appears to be an ego-related mental block against classical symbolic AI, which he has always set himself against) I don’t think LeCun actually understands what a world model needs to be.

A world model (if my 2020 article is correct) needs to be full of explicit, structured, directly retrievable knowledge about time, space, causality, people, places, objects, events, and so on. You need all that for formal reasoning. (In my 2020 paper, I give a detailed example from Doug Lenat.) And you need all that to avoid hallucinations.

Alas, LeCun, who knows neural networks but refuses to engage in discussion about what classical AI brings to the table, doesn’t really see that. My own view, which I stand by, is that we need “neurosymbolic” contributions from both traditions if we are to get to trustworthy AI.

So what LeCun has wound up with for “world models” is just another opaque, uninterpretable neural network that doesn’t lend itself to reasoning. It’s a genuine and praiseworthy effort to have a system represent the world, over and above mere statistics of how language gets used on the web, but it will still likely fall short. I don’t expect it to solve many of the problems that have been plaguing LLMs.

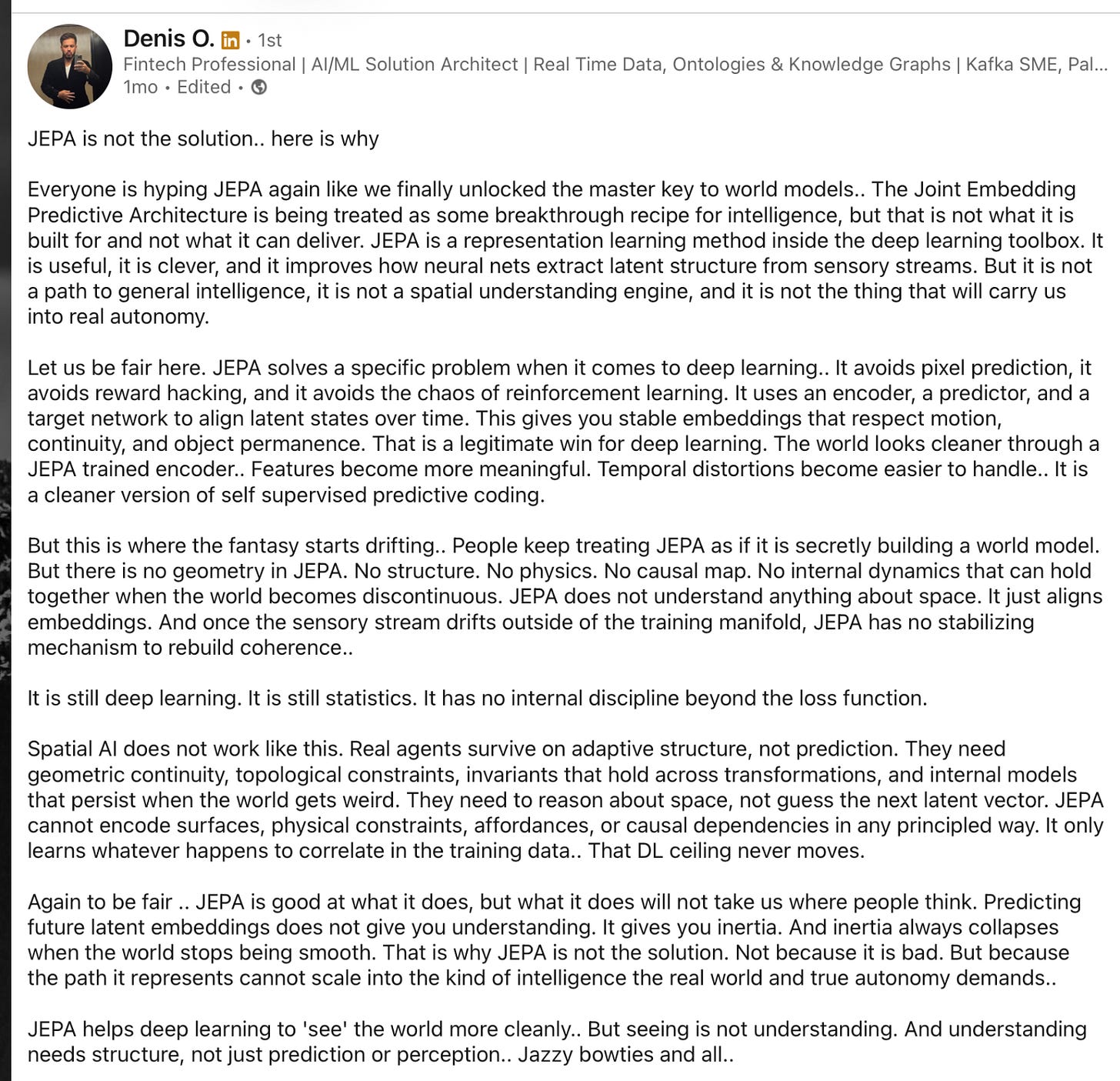

Indeed, LeCun has already been working on his new approach, JEPA (Joint Embedding Predictive Architecture), with what appears to be a reasonably large team (by academic standards) at Meta for the last few years, with not a whole lot to show for it: just a handful of papers that don’t seem earth-shaking. He has the right name for a thing we need (“world model”) but an inadequate implementation.

A smart though technical post on LinkedIn from Denis O. a month ago, excerpted below, stated the situation extremely well. If you don’t follow the technical jargon, you can just skim, and focus especially on the part about what JEPA is, and is not:

“It is useful, it is clever, and it improves how neural nets extract latent structure from sensory streams. But it is not a path to general intelligence, it is not a spatial understanding engine, and it is not the thing that will carry us into real autonomy…. People keep treating JEPA as if it is secretly building a world model. But there is no geometry in JEPA. No structure. No physics. No causal map. No internal dynamics that can hold together when the world becomes discontinuous. JEPA does not understand anything about space. … once the sensory stream drifts outside of the training manifold, JEPA has no stabilizing mechanism to rebuild coherence.”

Denis O.’s closing summary absolutely nails it: “JEPA helps deep learning to ‘see’ the world more cleanly… But seeing is not understanding. And understanding needs structure, not just prediction or perception.” So it’s not really a model of the world.

That’s the technical problem. Then there is a management problem. LeCun is extraordinarily poor at acknowledging other people’s work, verging on, if not crossing over fully into, intellectual dishonesty. He has systematically ignored, and in some cases belittled, many people who preceded him, rarely if ever acknowledging most of them, a pattern that goes back to the early days of his career in the late 1980s.

I wrote a whole essay about this in November, listing nearly a dozen scholars who have gotten the LeCun treatment (Jürgen Schmidhuber, David Ha, Kunihiko Fukushima, Wei Zhang, Herb Simon, John McCarthy, Pat Hayes, Ernest Davis, Fei-Fei Li, Emily Bender, and myself), and promptly received various emails about three others who have been similarly mistreated: Alexander Waibel (a CMU professor whose TDNN preceded and directly prefigured LeCun’s most famous work), Les Atlas (who crystallized the term convolutional for neural networks before LeCun did), and LeCun’s fellow Turing Award winner Judea Pearl, who was talking about causality for many years before LeCun was. If LeCun pulls the same stuff in his new company, morale will be deathly low.

Still, for all that, LeCun’s new company is trying to explore something genuinely new, and that’s what we in the field of AI desperately need. Even if LeCun isn’t doing world models the right way, he is certainly trying. And for that I salute him.
