Breaking: OpenAI's efforts at pure scaling have hit a wall

Sam Altman, November 2024

The clearest evidence for this comes from a reading of recent events, and from Altman’s own words today. Or really from an event that has not happened, akin to Sherlock Holmes’ dog that didn’t bark.

The event that has not happened is the launch of GPT-5, which many people have been eagerly expecting for nearly two years, and which has been under development presumably since August of 2022, when Altman first showed GPT-4 to Bill Gates.

As I have noted before, OpenAI has every reason in the world to build GPT-5, given all the competition trying to do the same, and enormous resources (measured in the billions) with which to build it.

But internally, according to December reporting by Deepa Seetharaman at the Wall Street Journal, the project to build it has been going on for quite some time while facing mounting bills and inadequate results; according to WSJ’s headline, the project, called Orion, was “Behind Schedule and Crazy Expensive”.

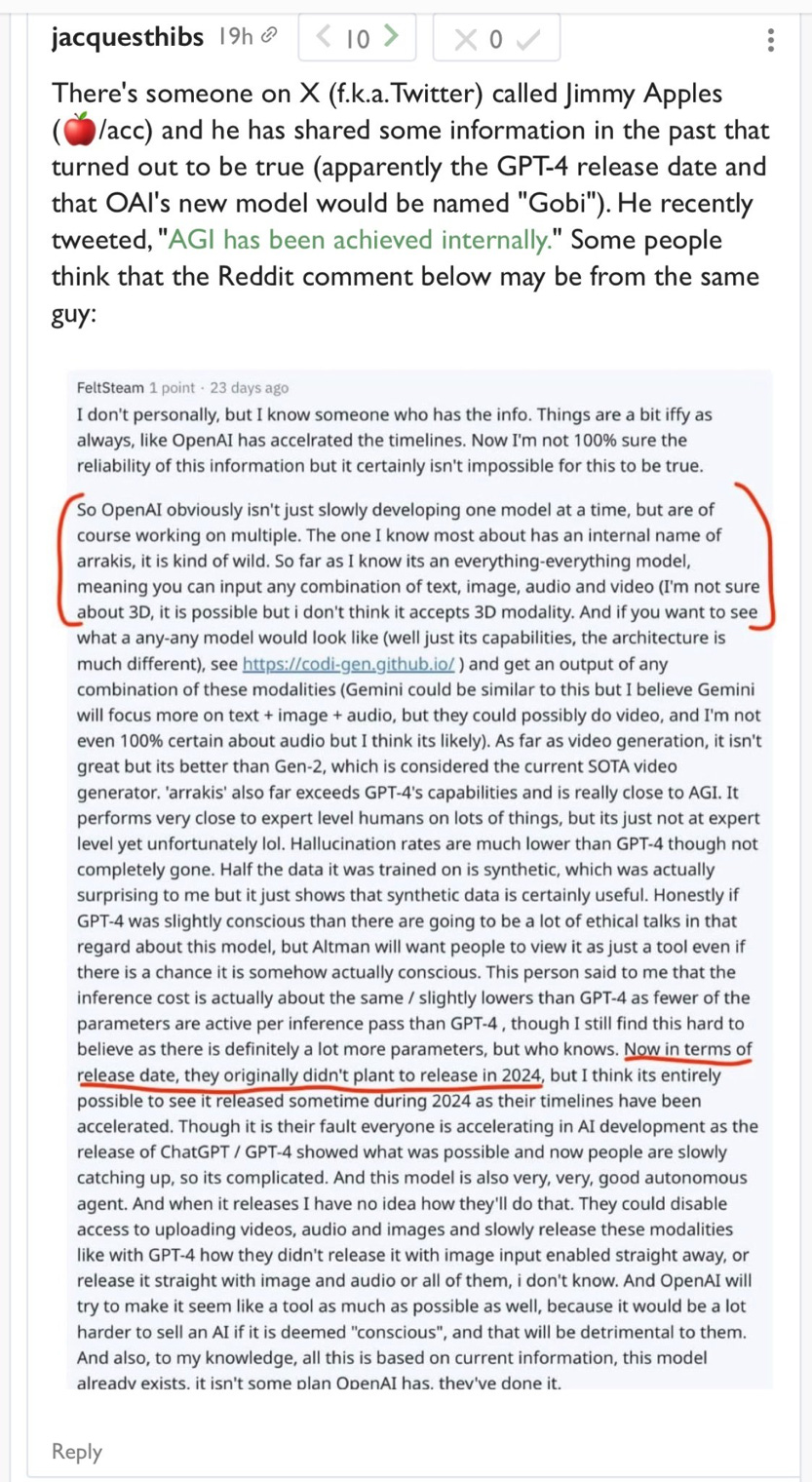

Nonetheless, for years, Altman regularly dropped cryptic comments implying progress, joking on Reddit in 2023 that AGI had been achieved internally, and writing on his own blog as recently as a month or so ago that “We are now confident we know how to build AGI as we have traditionally understood it.”

Fans have written whole essays trying to decipher his comments, like this one from September 2023 (underlining is not mine; feel free to skim):

§

All this time, it turns out, Altman was bluffing.

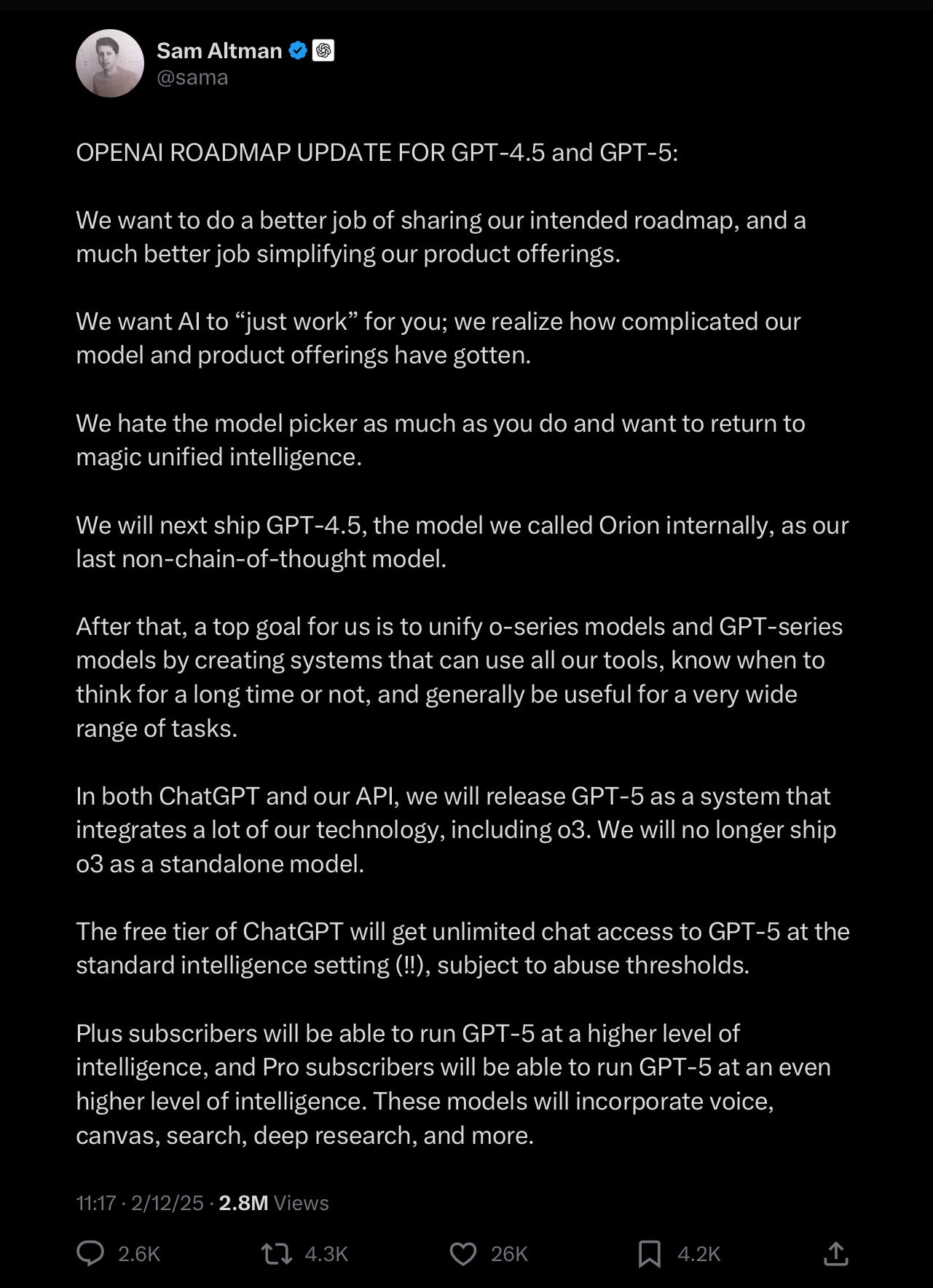

As of today, the jig is up. Orion has been officially downgraded - to GPT 4.5, by Altman himself.

From this post, we can infer a few things.

First, Orion – OpenAI’s last great effort at pure scaling – has failed. We can infer that they want to ship it, now, because they can get no further with it. But we can also infer that they don’t want to call it GPT-5 — presumably because nobody would take it seriously as AGI. If they called Orion GPT-5, reactions would likely converge for many on “is that all there is?”. OpenAI’s mystique might well take a serious hit. The company’s future rests on them doing something truly revolutionary, and Orion turns out not to be that.

Second, pure scaling will no longer be the means of attack. Instead, OpenAI will be throwing the kitchen sink at future efforts to build GPT-5, including “test-time-compute”, a new approach that involves longer, more expensive inference times rather than the “constant time inference” of GPT-4-like models, as well as (I suspect) massive amounts of synthetic data, which appears to work better in semi-closed domains like math and coding than in the open-ended real world.

If Altman knew how to build AGI, he would build it. Instead, he rechristened Orion as GPT 4.5 rather than 5, which likely means that despite major bills, Orion never met the expectations so-called scaling laws had implied.

Instead, all we are getting is another entry in the GPT 4.x sweepstakes.

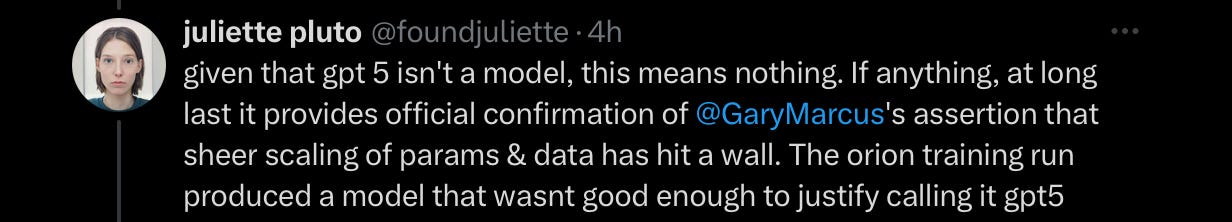

A security and privacy researcher who works for Google DeepMind (but who spoke for herself, not the company) spelled it out:

§

As I have always said, AI will go on; the death of pure scaling is not the death of AI. In all likelihood AGI will some day arrive, with or without LLMs at the core, and likely with all kinds of other gadgets included. Neurosymbolic AI, which I have long championed, may turn out to be part of the mix.

But the myth that you could predict an AI system’s performance simply based on how much data and how many parameters you use — which motivated a half trillion dollar industry — is dead.

The truth is that nobody, not Altman and not anyone else, has yet figured out how to get to AGI. Scaling is useful, but it is not now and never was the whole answer.

Gary Marcus hopes you will someday reread his 2022 essay Deep Learning is Hitting A Wall with an open mind.

This was indeed predictable, but the scaling narrative has taken up a lot of oxygen. Combined with the efficiency developments now commercially available (DeepSeek, Qwen), there are downward price pressures that do not bode well. Unfortunately, though, the scaling narrative keeps asking us to double down on the approach, potentially at the cost of what really makes AI reliable. It’s about time we asked hard questions about the US innovation ecosystem:

https://open.substack.com/pub/mohakshah/p/the-deepseek-saga-are-we-taking-the?r=224koz&utm_medium=ios

Altman had the nerve to tell high schoolers, more or less, that there's no point in "outrunning AI tools"; his advice was to start using the tools, and he basically told students to ask themselves how to use AI to help them do things "faster." Translation: subscribe to our overhyped, overpriced products, give us your data and money, and don't bother learning beyond prompting. Look, these tools are quite powerful, but they are also heavily flawed, with many limitations that won't readily be "solved," in many cases not foreseeably, at least not with current LLM frameworks. These tech bros just wanna make society reliant on their substandard software and control us with their platforms and data. Welcome to the new oligarchy.