Caricaturing Noam Chomsky

Four attacks on Chomsky’s recent op-ed, and why their punches don’t quite land

When I was in grad school, in the early 1990s, a popular sport was “jump on Noam Chomsky”. He gave a series of lectures every year on linguistics and the mind. I went, and so did hundreds of other people. And every week, a bunch of folks would stand up and take cracks at Chomsky, convinced that if they could stump one of the smartest people in the world, as if it would prove that they, instead, were the smartest person in the world. None of them ever get very far, but Chomsky was implacable, patiently responding and dismantling all comers. The sport never ceased to entertain.

40 years on, and not much has changed. Chomsky co-wrote a New York Times op-ed the other day, and everyone is out there once again to prove they are smarter than he is, in the smuggest possible language they can muster.

UW linguist Emily Bender lead the brigade, with a kind of sneering condescension she often uses:

But when one reads on, to the rest of her thread, there just isn’t that much there. She complains that Chomsky dwells too much on grammaticality (true, but kind of besides the point) and says he is too focused on innateness, writing that “the whole debate about whether or not humans have an innate universal grammar is just completely beside the point here” but gives no argument whatsoever, of any form, to make her point. (Just in case you didn’t get that point that Bender is positive that she’s smarter than Chomsky, she ends her thread by encouraging readers to read a recent profile of her in New York magazine, “So, read this, not that”.) And of course Bender doesn’t bother to acknowledge that she actually agrees that LLMs (in her terms “stochastic parrots” are a lousy model of language, because the statistically parrot their inputs. Nor is she gracious enough to acknowledge that Chomsky first made that point in the early 1960s, before the author of stochastic parrots was born.

Then there’s Scott Aaronson, computer scientist best known for his work on quantum computation. He’s too joined the jump on Chomsky sweepstakes, accusing Chomsky (and others who have criticized large language models) of being “self-certain, hostile, and smug” in an essay that is, well, self-certain, hostile and smug— filled with passages like “To my astonishment and delight, even many of the anti-LLM AI experts are refusing to defend Chomsky’s attack-piece”

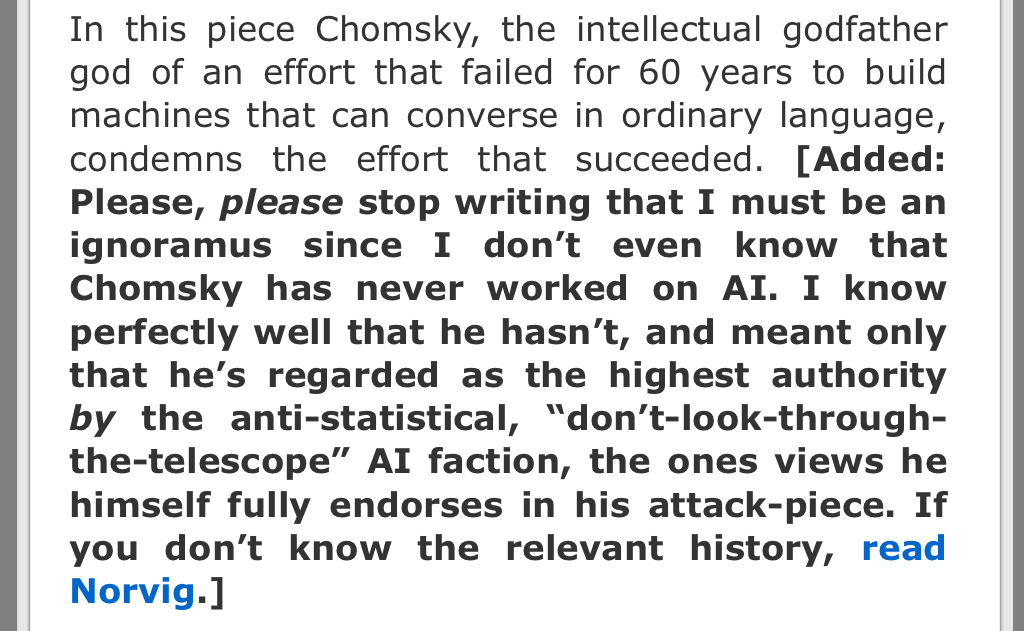

Alas there is again very little substantial content, beyond the sneering. Aaronson’s biggest error, now corrected, sort of, in bold, is in assuming that Chomsky has spent his life in some sort of failed effort to build AI, which kind of entirely misses the point of Chomsky’s piece (which says in so many words that we need to study the mind first before we try to make AI) and also utterly misrepresents Chomsky’s career. Frankly, I would be embarrassed to have to publish a correction (the part in bold) like this:

It’s a wild swing and a miss. Chomsky has spent his career trying to understand how humans acquire language, not “building machines” to try to do the same.

If Aaronson had bothered to ask, I am pretty sure Chomsky would have said, roughly, “since the ways in which humans acquire language remain a mystery, all this AI engineering is missing a source of insight that is likely to be essential, so it’s premature. (Or at least that’s pretty much what he said at the recent AGIdebate I hosted.) Aaronson’s condescension and erroneous characterization doesn’t change any of that.

Another swing and a miss, slightly better but still unconvincing, comes from the noted neuroscientist/machine learning expert (and leaders of the famous NeurIPS conference) Terry Sejnowski, in an email to a leading machine learning email list. Sejnowski affecting a different but still unmistakeable flavor of condescension, “I have always been impressed with Chomsky's ability to use plausible arguments to make his case even when they were fallacious”.

To his credit Sejnowski correctly picked on a weak point in the oped: Chomsky’s ChatGPT examples falling apples and gravity were anecdotal and insufficiently nuanced. But the email group quickly tore Sejnowski apart; his own examples were equally anecdotal. (As discussed below, Sejnowski’s retort — “If you ask a nonsense question, you get a nonsense answer... LLMs mirror the intelligence of the prompt” — doesn’t actually hold water.)

Neither Chomsky nor Sejnowski grappled enough with a critical reality: ChatGPT is wildly stochastic and unreliable; so single examples prove little. Still, even though Chomsky’s argument surely could have used considerably more nuance (see my article with Ernest Davis on how not to evaluate GPT), his overall point is correct: LLMs don’t reliably understand the world. And they certainly haven’t taught as anything whatsoever about why the world is at it is, rather than some other way. Ditto for the human mind.

But wait, there’s more. Machine learning prof Tom Dietterich joined in, trying to persuade the crowd that ChatGPT has some form of comprehension in some deep sense (which both Chomsky and I seriously doubt).

ChatGPT’s errors reveal that its “understanding” of the world is not systematic but rather consists of patches of competence separated by regions of incompetence and incoherence. ChatCPT would be much stronger if it could fill in the gaps between those patches by appealing to general causal models. This raises two questions: (a) how could a system learn such causal models and (b) how could we test a system to determine whether it had succeeded.

I chimed in, doubting the “regions of competence notion”, in a stochastic and unreliable system that sometimes has closely related text to draw on and sometimes doesn’t:

If a broken clock were correct twice a day, would we give it credit for patches of understanding of time? If n-gram model [a simple statistical tool to which nobody in their right mind would attribute comprehension] produced a sequence that was 80% grammatical, would we attribute to an underlying understanding of grammar?

At this point, Machine learning superstar Geoff Hinton joined in the fray, too, in the very same machine learning email list:

A former student of mine, James Martens, came up with the following way of demonstrating chatGPT's lack of understanding. He asked it how many legs the rear left side of a cat has.

It said 4.

I asked a learning disabled young adult the same question. He used the index finger and thumb of both hands pointing downwards to represent the legs on the two sides of the cat and said 4.

He has problems understanding some sentences, but he gets by quite well in the world and people are often surprised to learn that he has a disability.

Do you really want to use the fact that he misunderstood this question to say that he has no understanding at all?

Are you really happy with using the fact that chatGPT sometimes misunderstands to claim that it never understands?

Geoff

To which I replied at much greater length, because the issues are both subtle and critical:

Geoff, Terry (mentioned below) and others,

You raise an important question.

Of course learning disabled people can understand some things and not others. Just as some computer scientists understand computer science and not psychology, etc. (and vice versa; unfortunately a lot of psychologists have never written a line of code, and that often undermines their work).

That said your remark was itself a deflection away from my own questions, which I will reprint here, since you omitted them.

If a broken clock were correct twice a day, would we give it credit for patches of understanding of time? If n-gram model produced a sequence that was 80% grammatical, would we attribute to an underlying understanding of grammar?

The point there (salient to every good cognitive psychologist) is that you can’t infer underlying psychology and internal representations directly from behavior.

A broken clock is behaviorally correct (occasionally) but it doesn’t have a functioning internal representation of time. An n-gram model, for high-n, can produce fluent prose, but not have any underlying understanding or representations of what it is saying, succeding to the extent that it does by piggybacking onto a corpus of speech produced by humans that talk about a world that is largely regular.

Psychology is hard. Almost any “correct” behavior can be created in a multiplicity of ways; that’s why (cognitive) psychologists who are interested in underlying representations so often look to errors, and tests of generalization.

In the case of LLMs, it’s clear that even when they produce a correct output, they rarely if ever deribe the same abstractions that a human would, or that a symbolic machine might use (perhaps preprogrammed) in a similar circumstance.

Minerva, for example, is trained on an immense amount of data, and ostensibly captures two-digit arithmetic, but it fails altogether on 4-digit multiplication, The parsimonious explanation is that it is doing a kind of pattern recognition over stored examples (with 2-digit cases more densely sampled than 4-digit cases)—rather than genuinely understanding what multiplication is about.

The same goes for essentially everything an LLMs talks about; there is a degree of generalization to similar examples, but distribution shift is hard (the crux of my own work going back to 1998), and nearly any generalization can be easily broken.

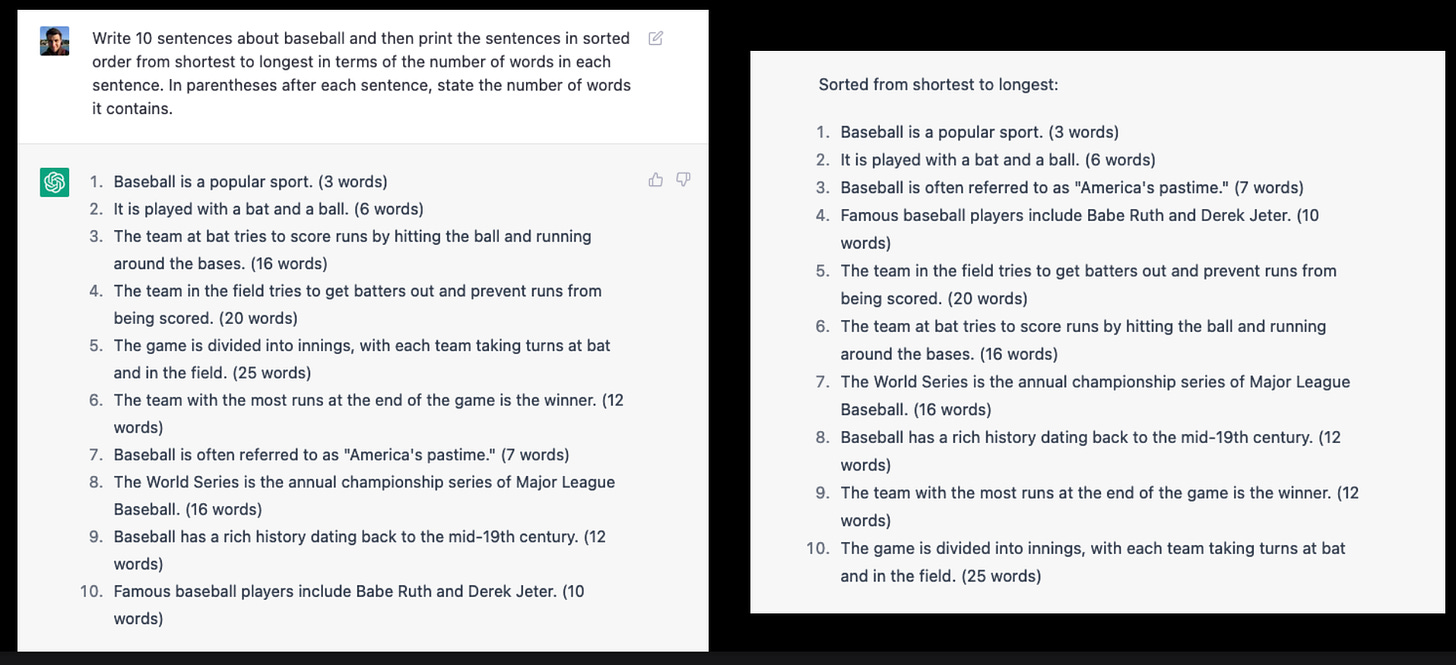

As a last example, consider the following, where it initially sort of seems like ChatGPT has understood both counting and sorting in the context of complex query—which would be truly impressive—but on inspection it gets the details wrong, because it is relying on similarity, and not actually inducing the abstractions that define counting or sorting.

This example by the way also speaks against what Terry erroneously alleged yesterday (“If you ask a nonsense question, you get a nonsense answer... LLMs mirror the intelligence of the prompt”). The request is perfectly clear, not a nonsensical question in any way. The prompt is perfectly sensible; the system just isn’t up to the job.

§

Hinton hasn’t replied yet, and I sort of doubt he will. The reality is that what I said, and what Chomsky said, is correct: the system really isn’t up to the job.

The oped wasn’t perfect. It was overwritten, with needlessly inflammatory language (eg needlessly editorializing adjectives like lumbering to describe large language models). And it could have been more careful about the use of anecdotal data in evaluating AI systems; the arguments around common sense could have been more sharply drawn. There wasn’t much contact with relevant empirical literature.. It could have been a little more effusive about the positive applications of LLMs (probably warned more about the negative applications). And there wasn’t an enormous amount there that was genuinely new.

But there is no real argument against Chomsky’s overall take: these systems are useful technology but remain a long way from true artificial intelligence, and they are even further from telling us anything useful about the function of the human mind.

By my scorecard, (counting the trilateral attack by three machine learning experts as a single joint tag team round) the score overall is Chomsky 4, Challengers 0.

Just like old times.

Gary Marcus (@garymarcus), scientist, bestselling author, and entrepreneur, is a skeptic about current AI but genuinely wants to see the best AI possible for the world—and still holds a tiny bit of optimism. Sign up to his Substack (free!), and listen to him on Ezra Klein. His most recent book, co-authored with Ernest Davis, Rebooting AI, is one of Forbes’s 7 Must Read Books in AI. Watch for his new podcast, Humans versus Machines, this Spring.

Update: true to form, Noam Chomsky, loyal reader of this Substack, emailed me moments after it was posted, writing in part. “ If someone comes along with a physical theory that describes things that happen and things that can't possibly happen and can't make any distinction among them, it's no contribution to physics, understanding, theory, anything. That's LLMs. The high tech plagiarism works as well, or badly, for "languages" that humans cannot acquire (except maybe as puzzles) as for those they can. Therefore they are telling us nothing about language, cognition, acquisition, anything.

Furthermore, since this is a matter of principle, irremediable, if they improve their performance for language it only reveals more clearly their fundamental and irremediable flaws, since by the same token they will improve their performance for impossible systems.” For elaboration., see my earlier essay Chomsky and GPT-3,

I was eating popcorn, minding my own business, and watching these four swings as they happen in real time and some more (e.g. Christopher Manning's take), and realized that these takes are all, to varying degrees, "self-certain, hostile, smug," --- and with little substance. And it's sad to see that the supposed elite ranks of academia on the forefront of our technology have abdicated their duties of civil debate on ideas, and resorted to thinly veiled ad hominem attacks (e.g. Aaronson's piece) to one-up each other in a never-ending status game.

I respect Bender's work a lot, so it's disappointing to see her take that offers less substance than an impeachment of NYT's credibility. Same with Sejnowski's and Aaronson's take -- their tones changed remarkably, from civil to hostile, when it comes to everything Noam Chomsky.

There is a reason that Envy is one of the seven cardinal vices.

Granted, I don't agree with Chomsky on a number of things, but he is always willing to engage in civil debates of ideas, and tries to offer substance rather than dog whistles. That NYT OpEd is too writerly, no doubt, but the substance is there and it's up to the debunkers to come up with worthy counterarguments.

Hats off to good ol' Noam -- still slaying it at 94, and hope there will be many more!

Lets be honest about these attacks. The current crop of chat bots are part of a multi-billion industry trying desperately to find a use for their investment. People have bought into the hype, including academics, and now even the slightest criticism makes them hysterical.