Chaos and tension at OpenAI

Safety seems to be taking a back seat

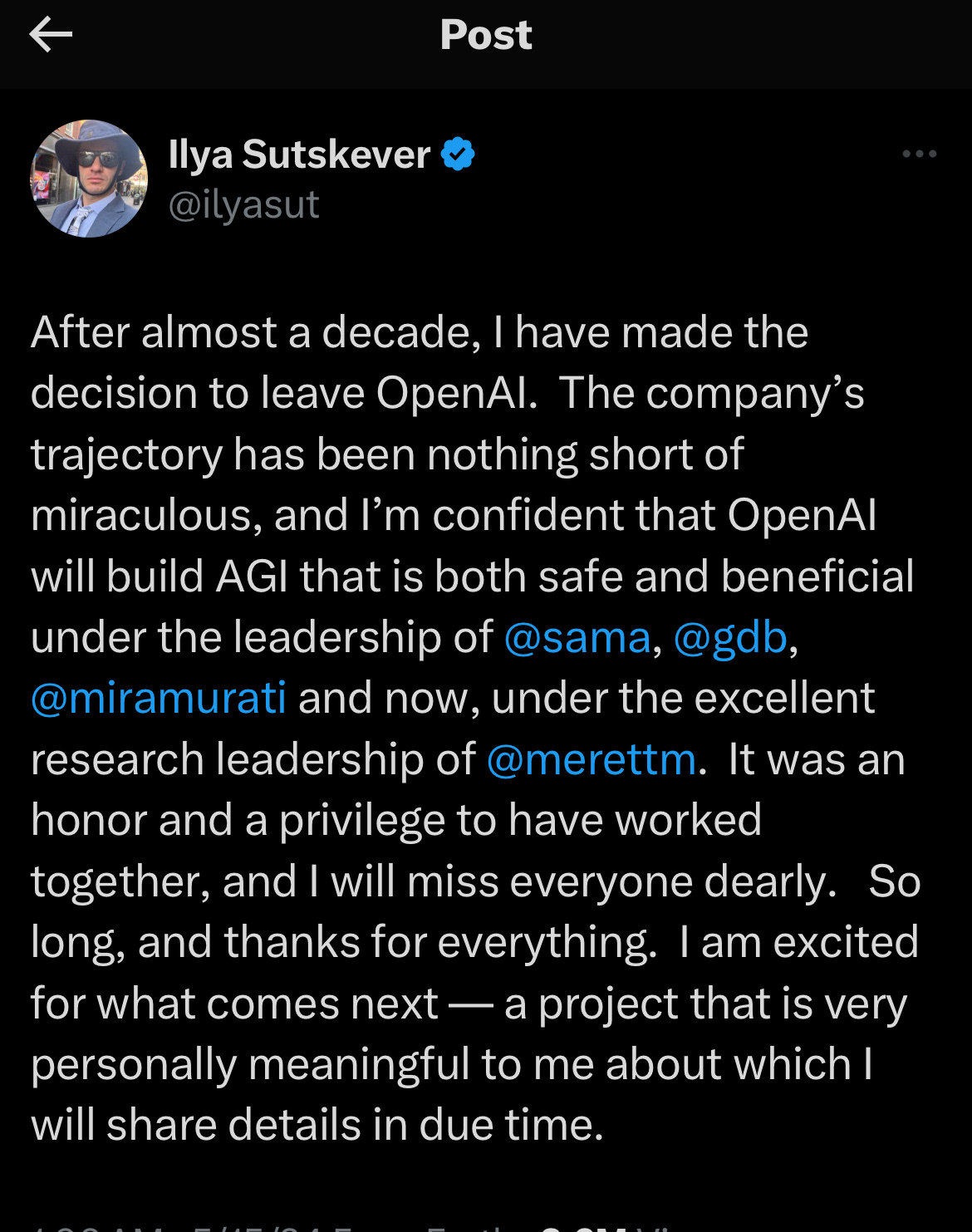

Whatever Ilya saw, he is leaving. I don’t think it’s about a scary new algorithm per se (as many people have speculated) – I suspect it is about an attitude: the company’s attitude towards AI safety.

The company was built with the socially responsible goal of benefiting humanity; AI safety was always presumed to be central to that mission. As Elon Musk noted in his lawsuit against OpenAI, that mission seems to be in the rear-view mirror. But it’s not just Ilya who is leaving.

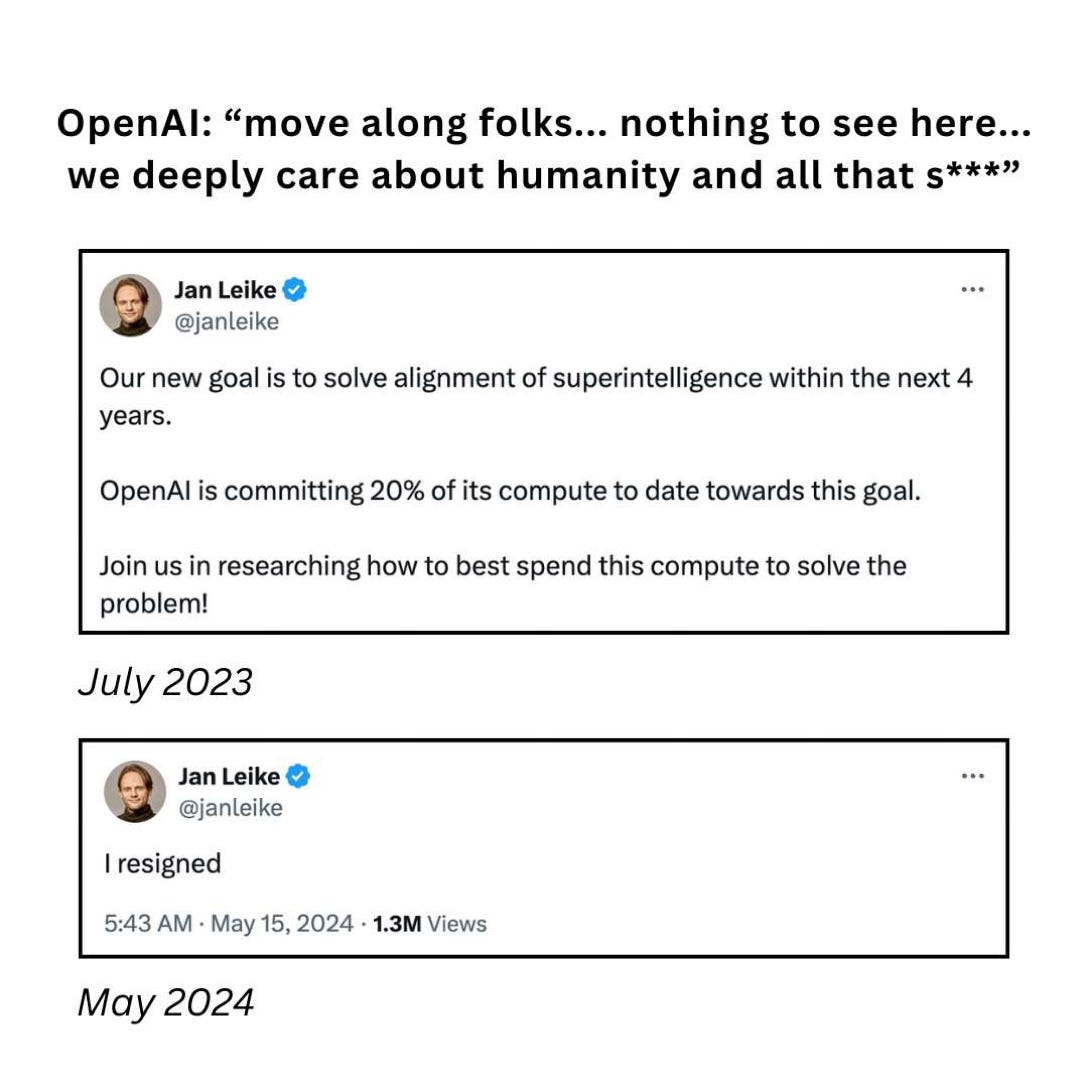

As noted up top, Jan Leike, a leader in the OpenAI “alignment” mission just quit, too.

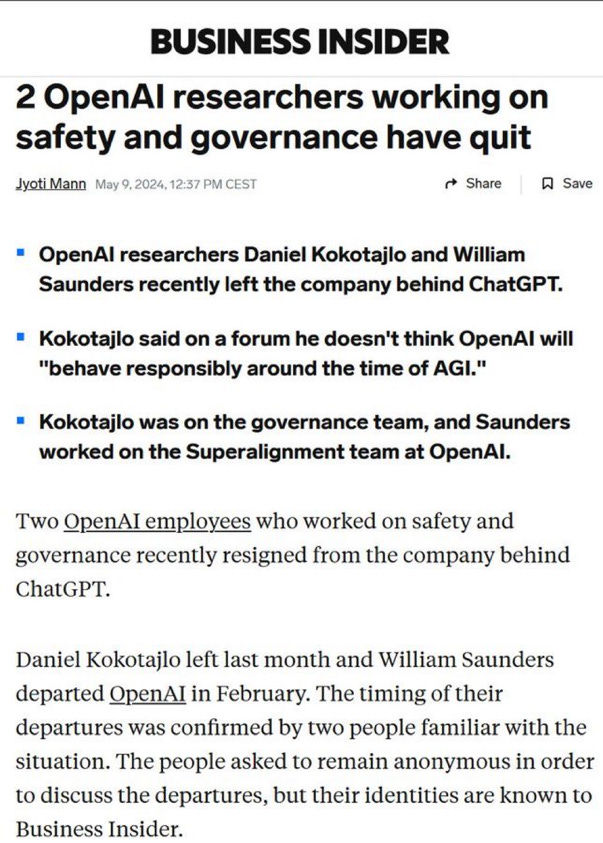

Two others left in recent months as well, as reported last week:

People don’t leave companies with $86 billion valuations for nothing. Four key resignations thus far in 2024 is not a good sign.

So many questions.

Should the public be worried?

Will the (new) board at OpenAI take note? Will they do anything to address the situation?

Will OpenAI’s status as a nonprofit remain in good standing?

Will this help Elon Musk’s case?

Does Sam care? Is this what he wanted?

Is OpenAI’s push to commercialization coming at the expense of AI safety?

My friends in Washington should look into this.

Gary Marcus will return later today to discuss breaking AI-related news that will soon be announced in Washington.

So many questions!

(A) Should the public be worried? -- The public should be worried (in particular) about any AI system that is deployed at scale, so whatever the major AI labs such as OpenAI are doing should be at the top of this list. All contemporary AI (including the major LLMs such as ChatGPT) is at best only minimally aligned with human values. BY DEFINITION, a minimally-aligned AI system will inexorably inflict societal harm (in parallel with any benefits) when deployed. Therefore a minimally-aligned AI system that is deployed at global scale (such as ChatGPT) will necessarily inflict societal harm at global scale (although it's difficult to predict in advance exactly what form that harm will take, we will doubtless find out gradually over the next 10-20 years). The only good news in this regard is that contemporary LLMs are simply too dumb by themselves to represent a significant x-risk.

(B) Will the (new) board at OpenAI take note? -- Internally, for sure; these are not stupid people.

(C) Will they do anything to address the situation? -- Only if they perceive it to be a problem relative to their (highly rationalised) objectives. It may take external pressure, such as robust legislation, to significantly temper their (and thus the company's) behaviour (ditto re the other AI labs, of course).

(D) Will OpenAI’s status as a nonprofit remain in good standing? -- In my assessment, OpenAI is already firmly under the control of profit-motivated interests. It's entirely possible, however, that key people within the company, even board members, have not quite worked this out yet.

(E) Will [this] help Elon Musk’s case? -- Quite possibly.

(F) Does Sam care? -- I believe he cares deeply. I also believe HE believes he's doing the right thing, which (when Kool-Aid is involved) is not necessarily the same thing as actually doing the right thing.

(G) Is this what he wanted? -- I suspect not, but HE needs to work this out (ditto re the rest of the board).

(H) Is OpenAI’s push to commercialization coming at the expense of AI safety? -- 10,000%.

One has to look long and hard with a magnifying glass to find examples of a profitable business willing to sacrifice revenue for the sake of safety. Ford Pinto, anyone?