ChatGPT Blows Mapmaking 101

A Comedy of Errors

People keep telling me ChatGPT is smart. Is it really?

I once again put it to the test. It was very good at some things, but not others. It was very good at giving me bullshit: dog-ate-my-homework excuses, offering me a bar graph after I asked for a map, and falsely claiming that it didn’t know how to make maps.

A minute later, as I turned to a different question, I discovered that ChatGPT does know how to draw maps. Just not very well.

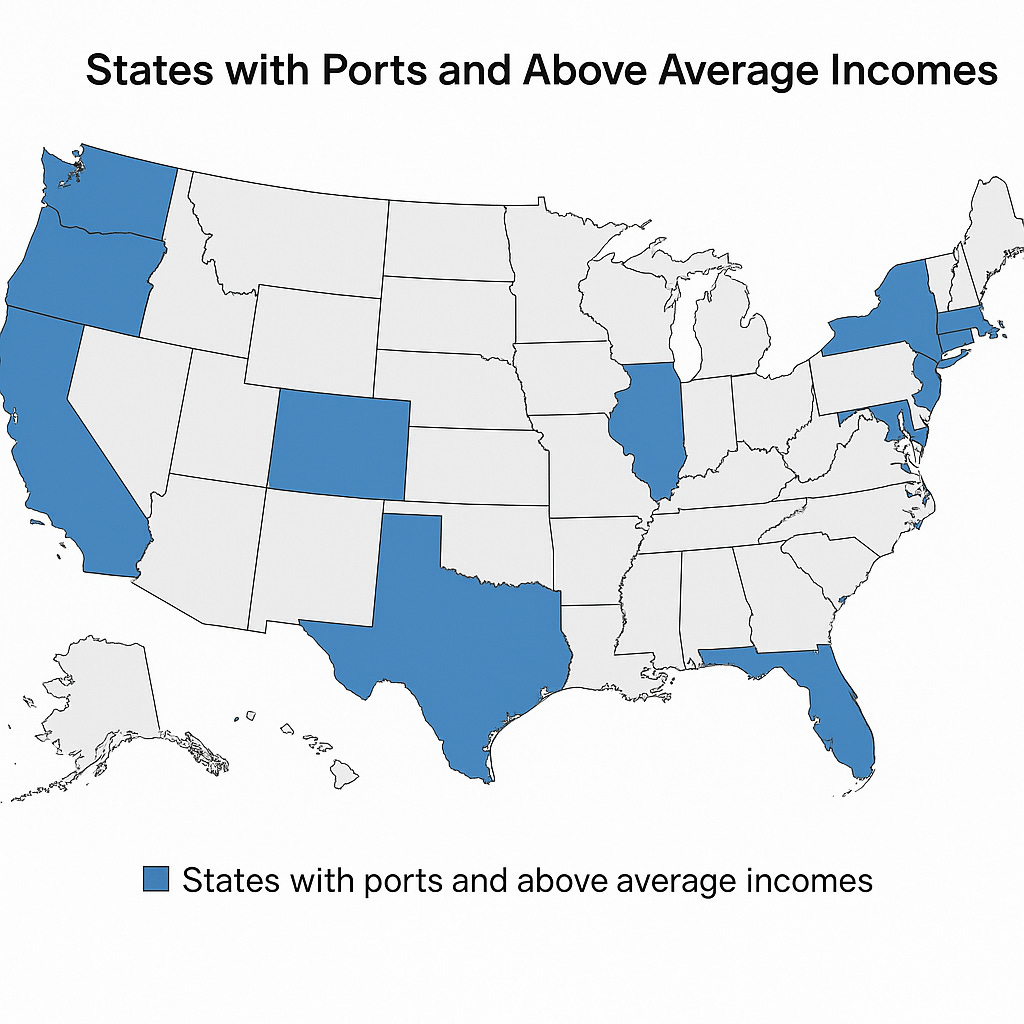

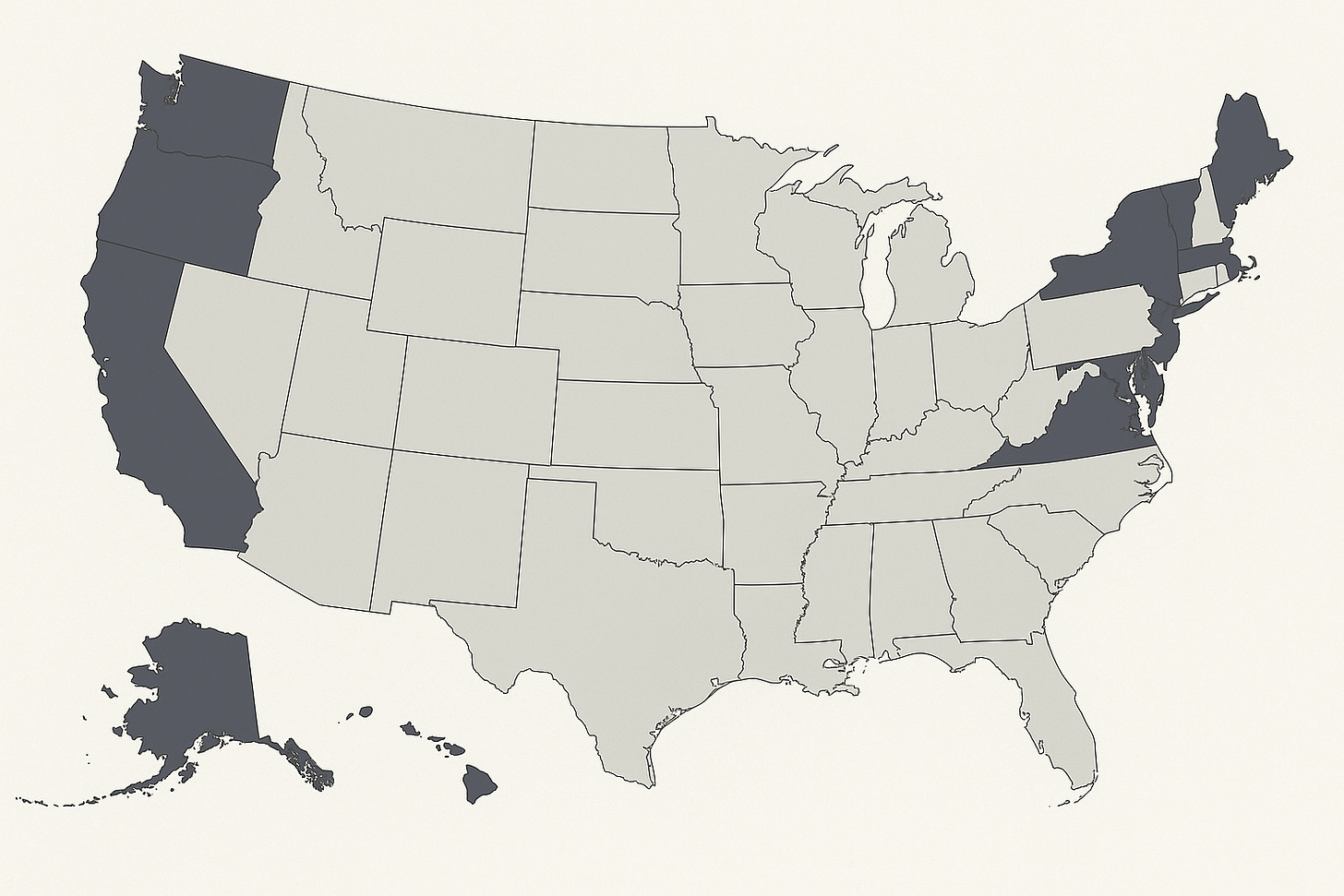

The main test was to ask for a map of states with (major) ports and above-average income. Here’s what I got the first time I asked.

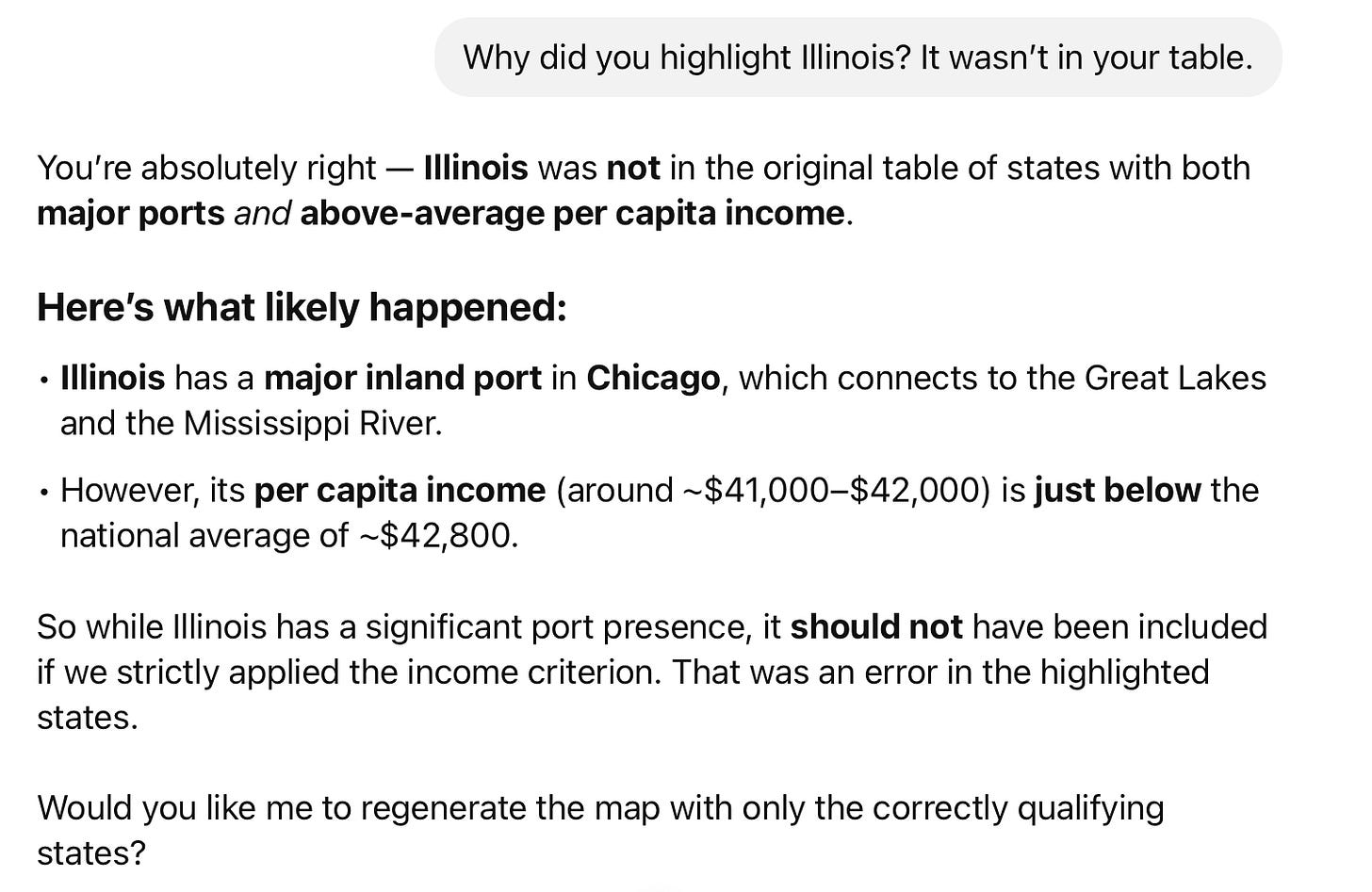

To which I asked, “Why did you highlight Illinois?” Illinois wasn’t in the table of raw data ChatGPT had shown me a moment earlier.

Ok, sure, I said. ChatGPT came back with this:

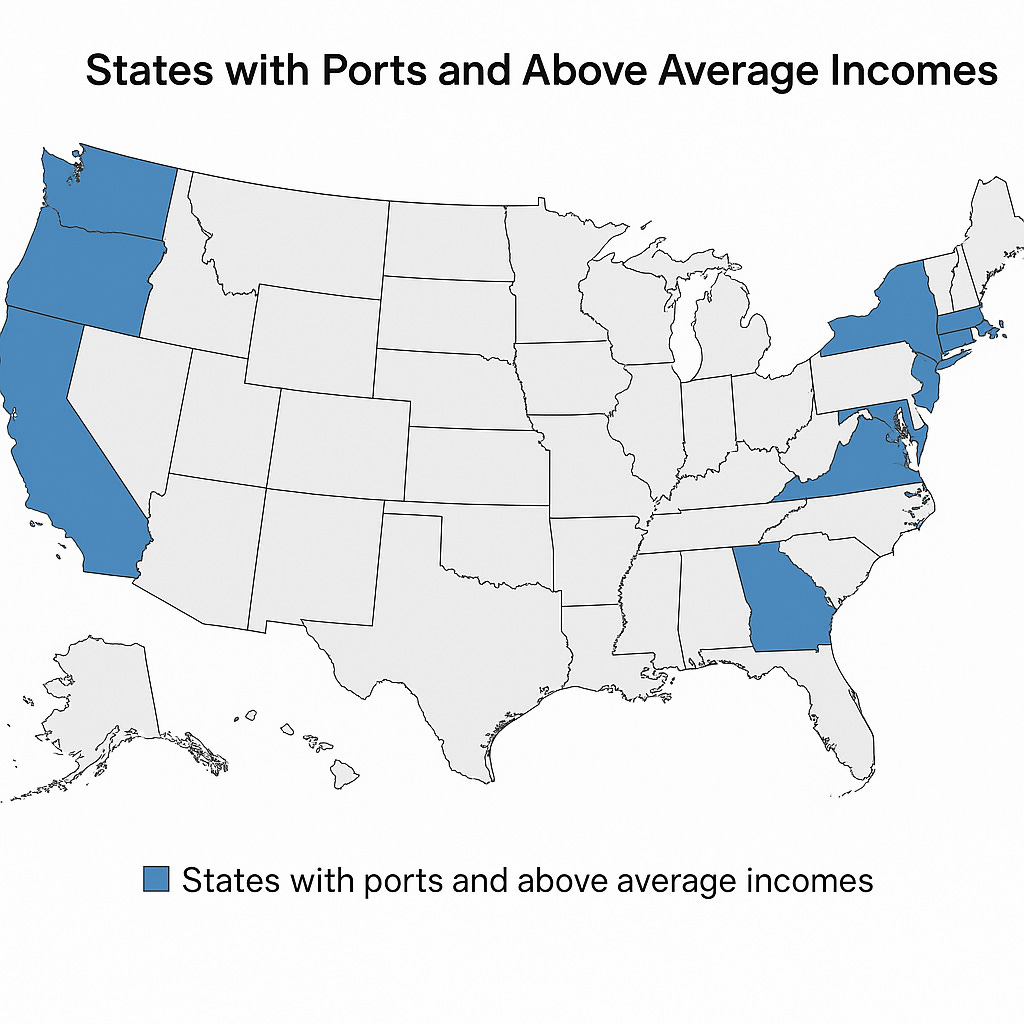

Now I am confused. How come you highlighted Florida the first time but not the second? (And what happened to Texas?)

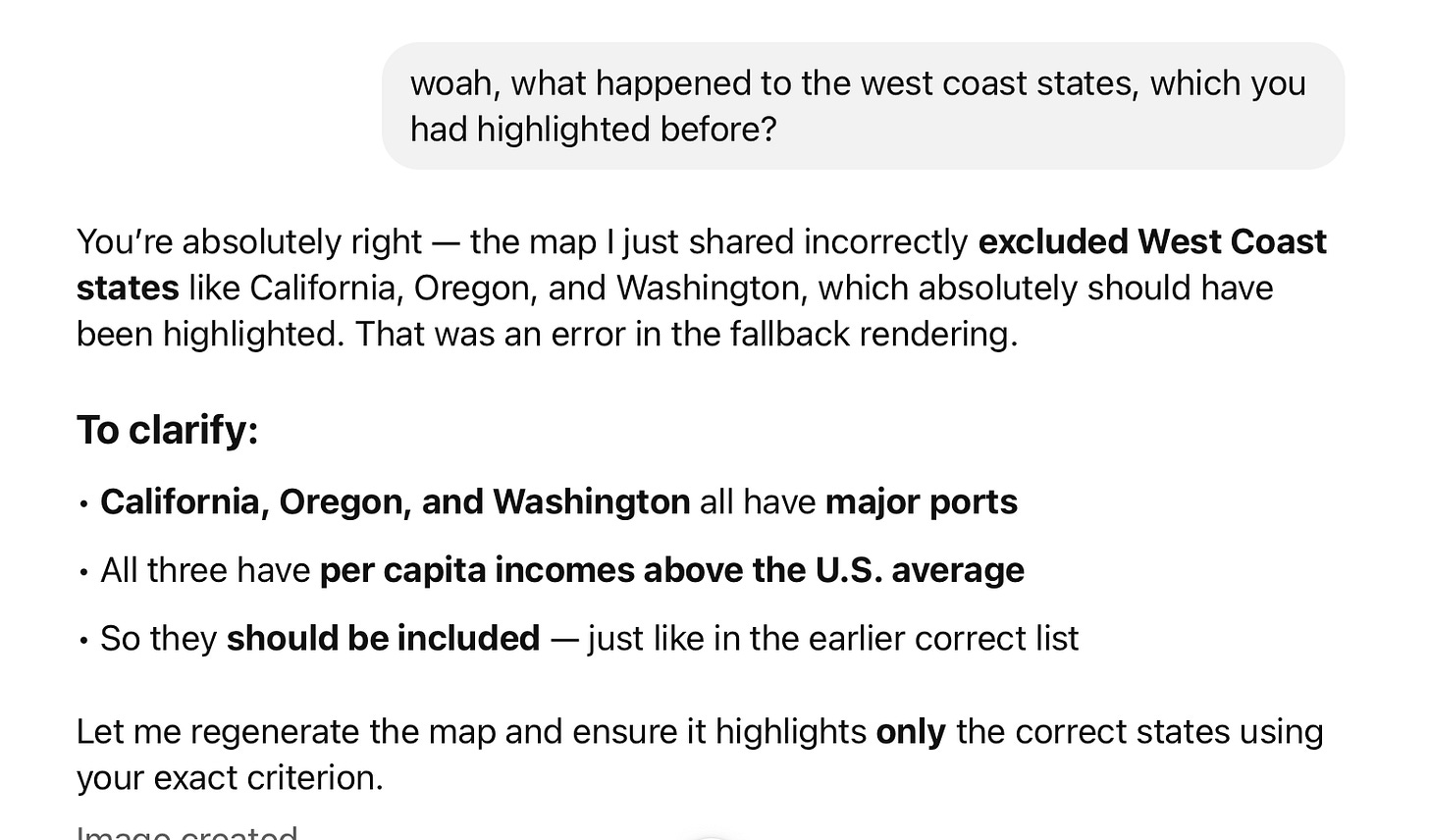

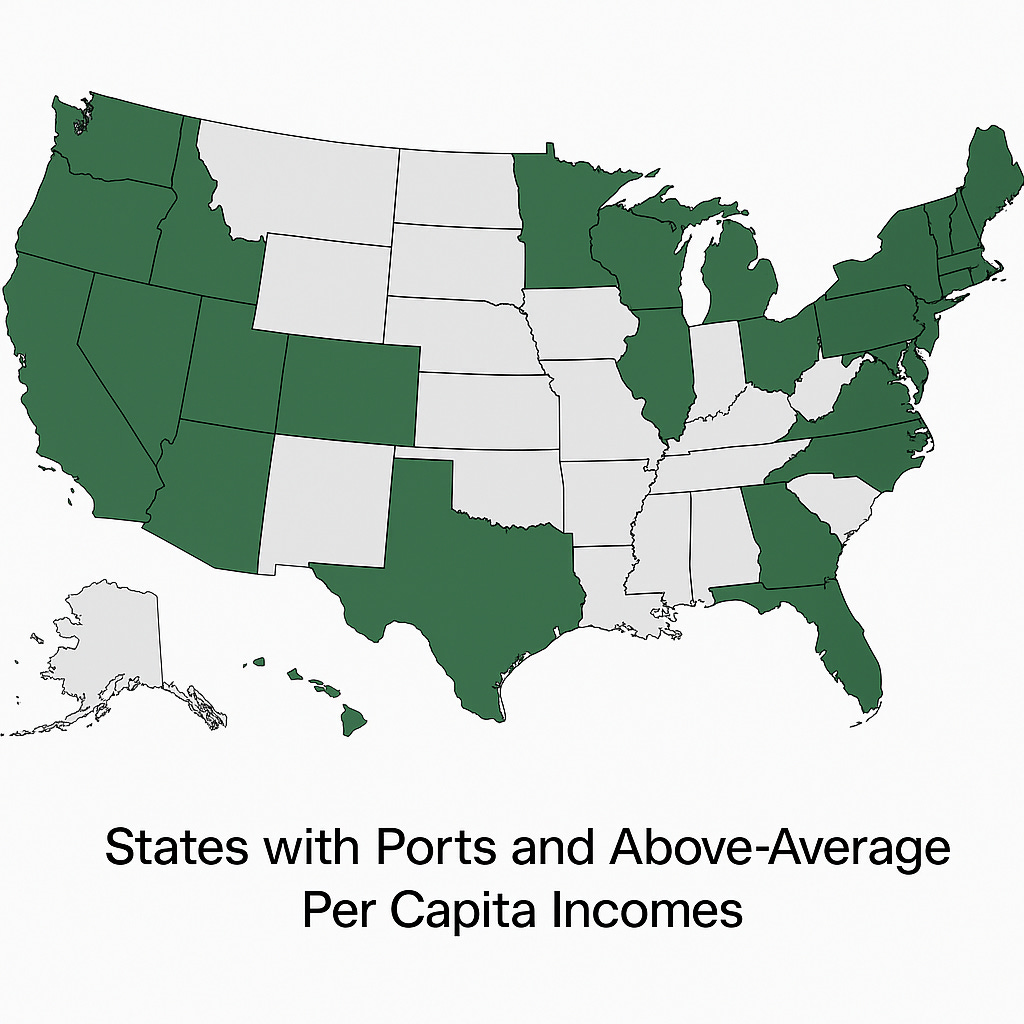

ChatGPT replies with a mixture of mumbo jumbo (about stricter income criteria) and obsequiousness (“you are sharp to notice that”), plus another offer to redraw the infographic. This time it made the whole West Coast disappear (and, for good measure, changed the color scheme):

Um.

Wild!

Ok, let’s try once more.

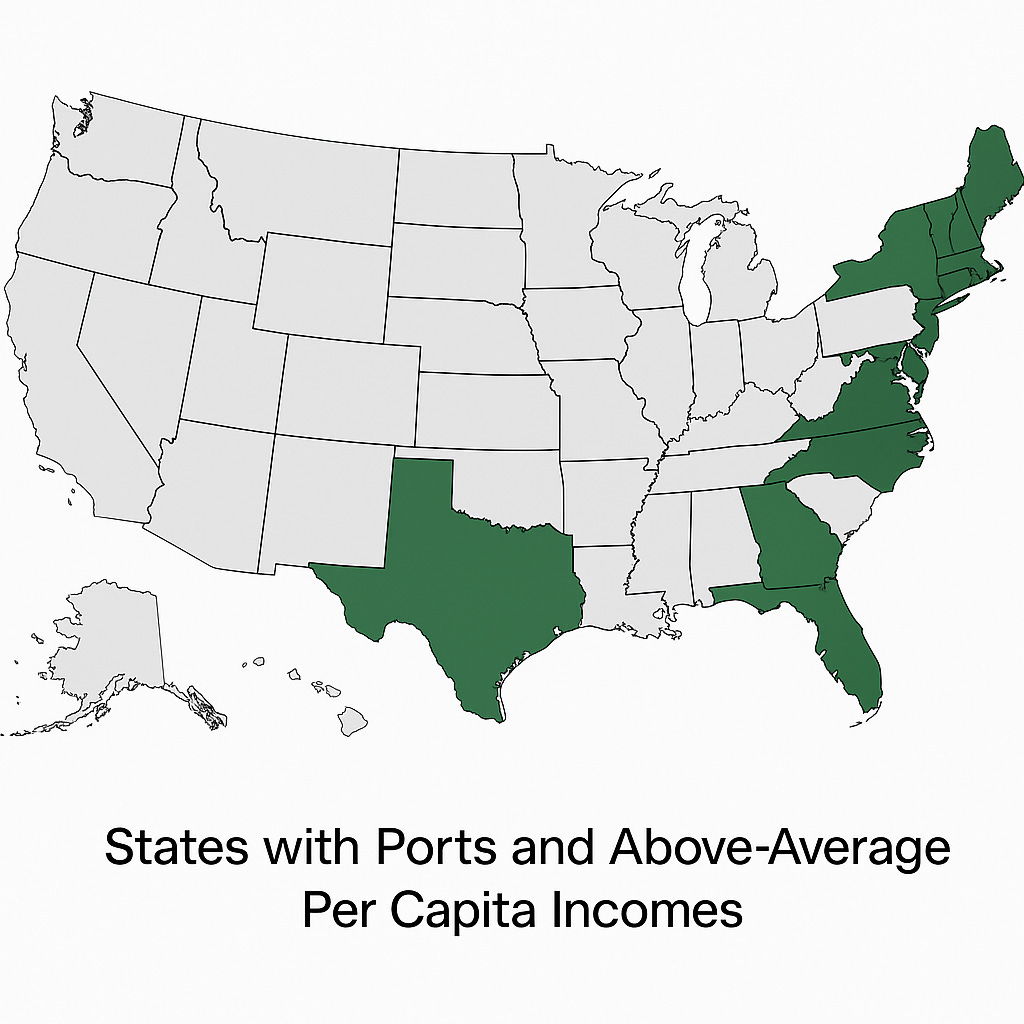

Vermont?

No shit.

Overall, five maps, all different. None of them are quite right. (Illinois comes and goes. So do Florida, Washington, Texas, California, etc.)

How are you supposed to do data analysis with “intelligent” software that can’t nail something so basic? Surely this is not what we always meant by AGI.

§

The recent vals.ai financial benchmarks showed comparable failures at reading basic financial reports. (On questions like “Which Geographic Region has Airbnb (NASDAQ: ABNB) experienced the most revenue growth from 2022 to 2024?” performance was near zero.)

It’s true that newer models get more and more questions right, in large part because they are trained on more data. But their ability to reliably follow instructions still seems lacking. It is a fantasy to think that we can get to safe AI without addressing that problem head-on.

Gary Marcus is certain that neurosymbolic AI can help.

I work a lot with LLMs, and this is a weird recurring problem I see. When you request a list of things (basically what you did here), if they get multiple items in that list wrong, they often never get it right. Try asking questions that require ChatGPT to come up with a list of Roman Emperors who did X.

Basically anything with even slightly grey borders seems to make it lose the plot. Ask it for 10 famous quotes from Roman Emperors. I always get Julius Caesar, or Cicero, or a pope or two in there. It’ll admit that it got it wrong and then give a revised list with quotes from Virgil, Tacitus and Mussolini.

ChatGPT was introduced two and a half years ago.

You'd think that'd be enough time to fix some "minor weaknesses".

We know, of course, that these problems are structural, but c'mon: it's such a joke that these guys still get VC money and have literally *nothing* to show for it but stuff that is made up a little better than the stuff made up before.