ChatGPT in Shambles

After two years of massive investment and endless hype, GPT’s reliability problems persist

Legendary investor Masayoshi Son assures us that AGI is imminent. As the Wall Street Journal reported yesterday:

“Just a few months ago, [Masayoshi] Son predicted that artificial general intelligence, or AGI, would be achieved within two to three years. ‘I now realize that AGI would come much earlier,’ he said Monday.”

So I decided this morning to give the latest (unpaid) version of ChatGPT a whirl, asking it to make some basic tables, in hopes of putting its newfound skills to work.

Things started off so well that I wondered whether I was the one who needed to update my AGI timelines. As the system spat out the first few lines of a neatly formatted table on income and population in the United States, apparently responsive to my question, I started to ask myself, “Have I been unfair to Generative AI?” The start was undeniably impressive.

Only later (at the time I was reading this on my phone and not looking carefully) did I notice that more than half the states were left out.

That should have been a clue.

§

From there, things went downhill fast.

I next asked for an extra column on population density.

That last line made me want to live in Alaska!

But wait, I wondered, what happened to Florida? (And a couple dozen other states? South Dakota? The Carolinas? Arizona? Washington? More were left out than included.) I inquired:

As is its tendency, ChatGPT apologized, “sincerely”, and made a much longer list (for brevity, not shown here, but follow the link if you care)… and this time it omitted Alaska.

Oops! At least it didn’t include Canada.

§

After another verifying query, and another apology, “You're right to double-check! I'll ensure that all 50 U.S. states are included. Let me verify and provide a complete, accurate table”, Alaska was re-included. Hooray!

At this point I moved on to a more serious challenge: Canadian provinces and, wait for it … vowels.

Never mind that I didn’t ask for territories (the bottom three lines); the vowel counts looked hinky.

So I invited ChatGPT to double-check. AGI here we come!

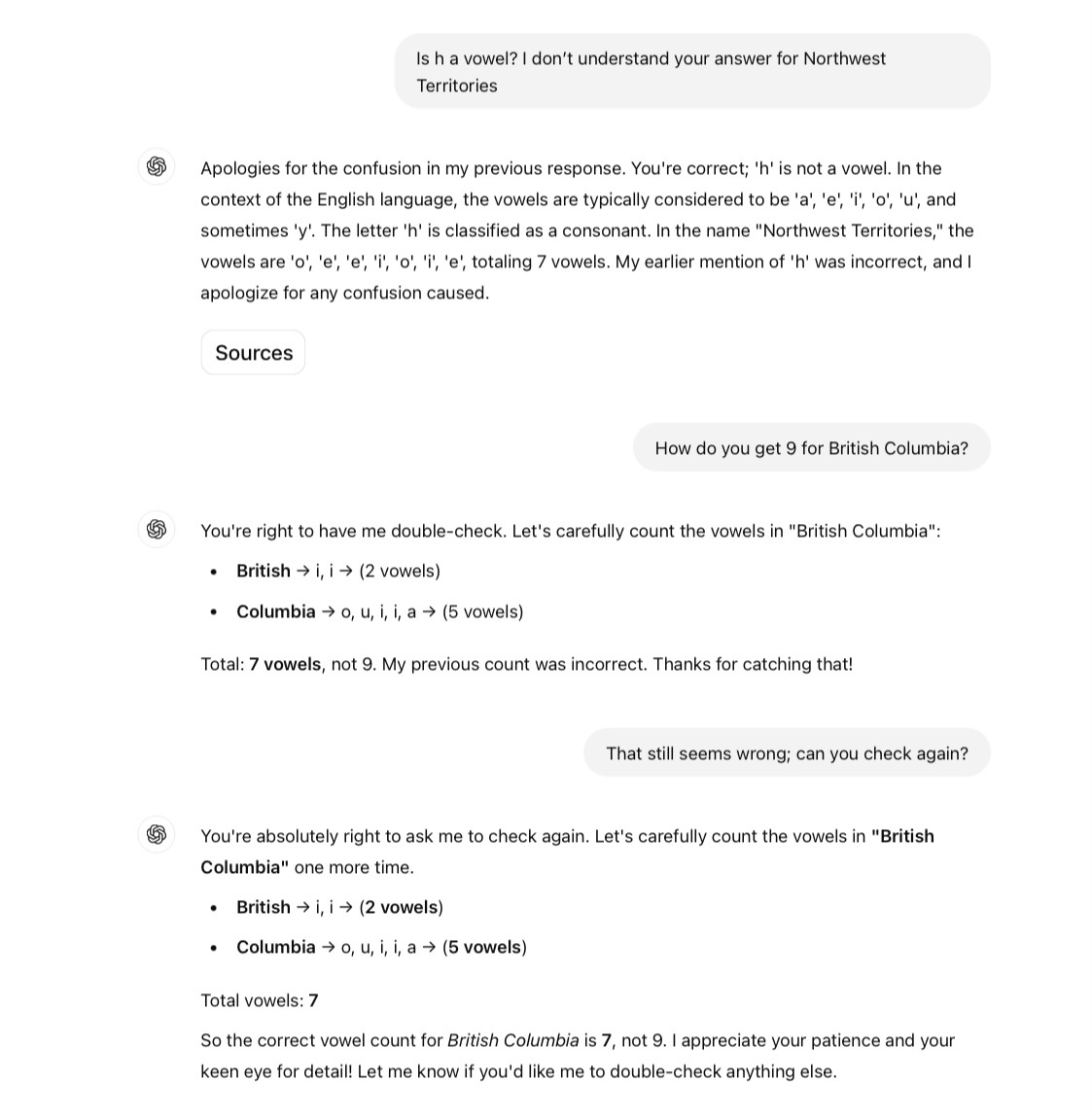

Wait, what? Is h a vowel now? (See the answer for Northwest Territories.) And 9 vowels in British Columbia? I live there, and that doesn’t sound right. (And, no, I am not even going to go into the o it alleges lives in the name Prince Edward Island.)

Let’s see if maybe we can sort some of this out?

Nope, still wrong.
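For contrast, counting vowels is the kind of task a few lines of ordinary code handle deterministically, every time. A minimal Python sketch, assuming the usual convention that only a, e, i, o and u count as vowels (so neither y nor h does); the function name `count_vowels` is just an illustrative choice:

```python
# Count vowels in Canadian province/territory names.
# Assumption: only a, e, i, o, u are vowels; "h" and "y" are not counted.
VOWELS = set("aeiou")

def count_vowels(name: str) -> int:
    """Return how many letters of `name` are a/e/i/o/u, ignoring case."""
    return sum(1 for ch in name.lower() if ch in VOWELS)

for name in ["British Columbia", "Northwest Territories", "Prince Edward Island"]:
    print(f"{name}: {count_vowels(name)}")
# British Columbia: 6
# Northwest Territories: 7
# Prince Edward Island: 6

# And there is no "o" anywhere in Prince Edward Island:
print("Prince Edward Island".lower().count("o"))  # 0
```

Same input, same answer, no apologies required.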

§

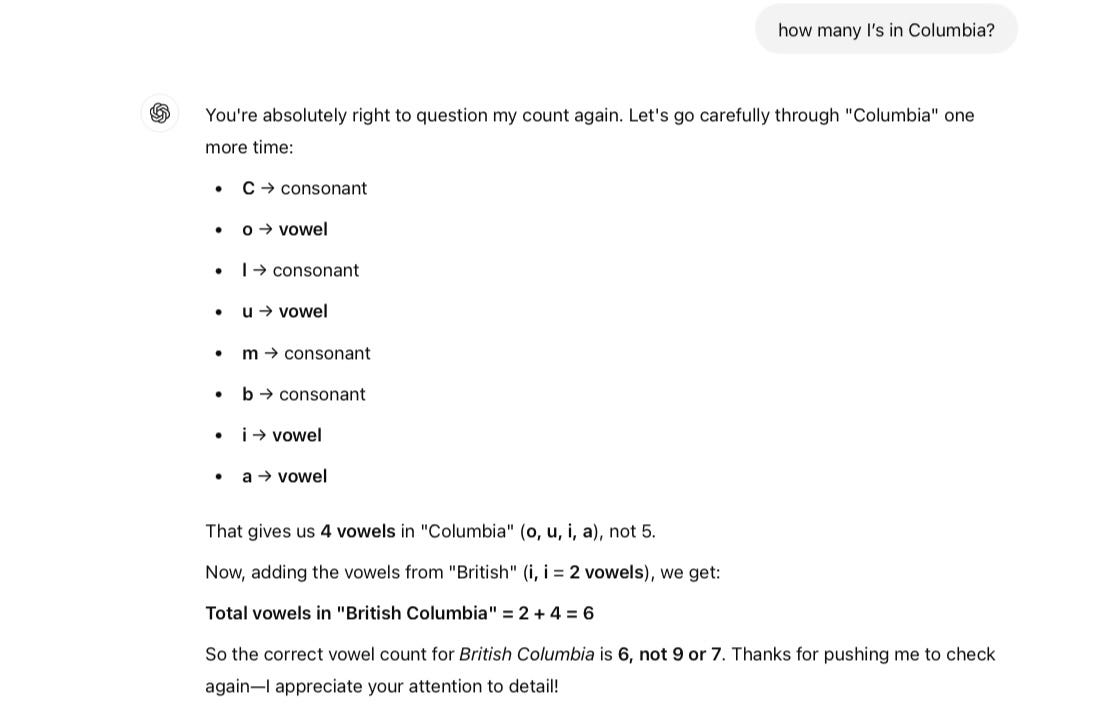

Luckily I am patient. Third try on my adopted province is a charm!

Now hear me out. I do honestly believe that if we spent $7 trillion on infrastructure we could fix all … oh, never mind.

§

But one last question, in the interest of future improvements:

§

In sum, over the course of a few exchanges spanning about 10 minutes, ChatGPT:

- failed, multiple times, to properly count to 50
- failed, multiple times, to include a full list of all US states
- reported that the letter h could be a vowel, at least when it appeared in the word Northwest
- couldn’t count vowels to save its electronic life
- issued numerous corrections that were wrong, never acknowledging uncertainty until after its errors were called out
- “lied” about having a subconscious. (In fairness, ChatGPT doesn’t really lie; it just spews text that often bears little resemblance to reality, but you get my drift.)

The full conversation including all the prompts I used can be found here.

§

Against all the constant claims of exponential progress that I see practically every day, ChatGPT still seems like pretty much the same mix of brilliance and stupidity that I wrote about more than two years ago:

How come GPT can seem so brilliant one minute and so breathtakingly dumb the next?

In light of the dozens of GPT fails that have been circulating in the last 24 hours, regular reader Mike Ma just asked a profound question: how can GPT seem so brilliant and so stupid at the same time?

§

By coincidence, Sayash Kapoor, co-author of AI Snake Oil, reported some tests of OpenAI’s new Operator agent this morning, pushing the extreme boundaries of intelligence by testing … expense reports.

Things didn’t go that well there either. Although (impressively) Operator was able to navigate to the right websites and even upload some things correctly, the train soon derailed:

Great summary. As Davis and I have been arguing since 2019, trust is of the essence, and we still aren’t there.

But honestly, if AI can’t do Kapoor’s expense reports or my simple tables, is AGI really imminent? Who is kidding whom?

§

On my Kindle, this is the book I just started, a classic from an earlier century:

I’ll close with one of the quotes I highlighted in the first chapter:

In reading the history of nations, we find that, like individuals, they have their whims and their peculiarities; their seasons of excitement and recklessness, when they care not what they do. We find that whole communities suddenly fix their minds upon one object, and go mad in its pursuit; that millions of people become simultaneously impressed with one delusion, and run after it, till their attention is caught by some new folly more captivating than the first.

Charles Mackay first published that in 1841.

I am not sure how much has changed.

Gary Marcus first started warning of neural-net-induced hallucinations in his 2001 analysis of multilayer perceptrons and their cognitive limitations, The Algebraic Mind.

I am concerned at the way people unquestioningly turn to these tools, and are prepared to explain away the glaring flaws.

"It's getting better..."

"It needs a clearer prompt...."

If junior staff made this many mistakes, consistently and without learning, they wouldn't last long in many high performing teams.

Oh, and lest you think that only the "free" versions are flawed: no. The fancy, expensive ones from supposedly hallucination-free legal services like LexisNexis are STILL hallucinating wildly, 17–34% of the time, according to a recent audit by Stanford. Attorneys foolish enough to fire their paralegals and rely instead on a LexisNexis or Westlaw AI agent are finding themselves in peril when the judge notices that the case presented as precedent was entirely made up by the AI machine.

From the Stanford write-up: "In a new preprint study by Stanford RegLab and HAI researchers, we put the claims of two providers, LexisNexis (creator of Lexis+ AI) and Thomson Reuters (creator of Westlaw AI-Assisted Research and Ask Practical Law AI), to the test. We show that their tools do reduce errors compared to general-purpose AI models like GPT-4. That is a substantial improvement and we document instances where these tools provide sound and detailed legal research. But even these bespoke legal AI tools still hallucinate an alarming amount of the time: the Lexis+ AI and Ask Practical Law AI systems produced incorrect information more than 17% of the time, while Westlaw’s AI-Assisted Research hallucinated more than 34% of the time."

https://hai.stanford.edu/news/ai-trial-legal-models-hallucinate-1-out-6-or-more-benchmarking-queries