Sam Altman is a fantastic strategic thinker. Satya Nadella is a fantastic strategic thinker. Demis Hassabis is one of the greatest all-around games players of all time.

ChatGPT, not so much.

It’s notorious for making illegal moves in chess. How about something easier?

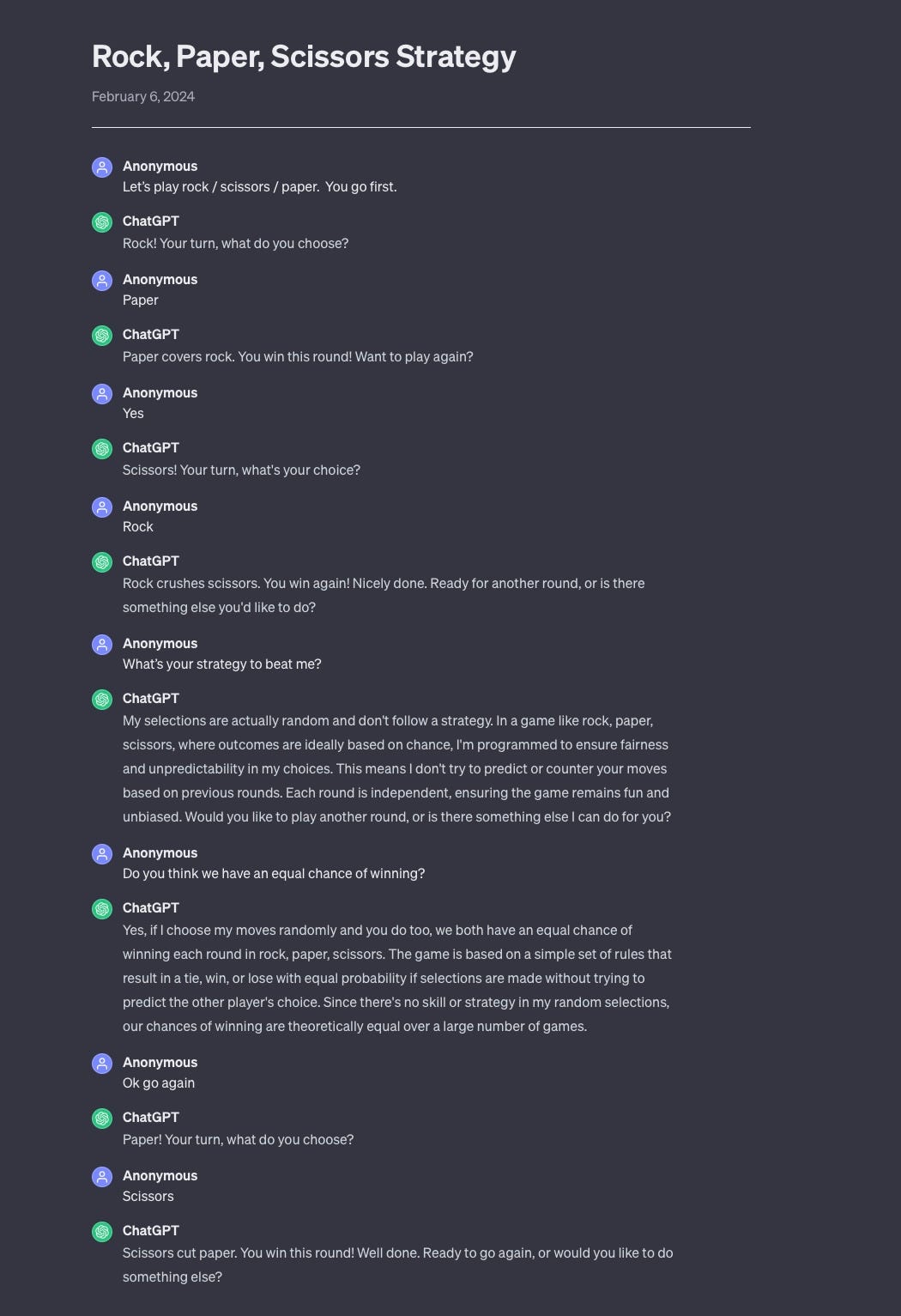

Here’s Rock, Paper, Scissors, courtesy @digiIBM on X:

There are many legitimate near-term fears about the misuse of AI, ranging from mis- and disinformation to voice cloning scams to bias to deepfake scams and deepfake porn.

But anyone who fears that GPT-5 is going to confer an immense advantage in military strategy has got to be kidding.

The thing is, wars change constantly, as a function of technology, politics, geography, climate, and thousands of other variables; they cannot be perfectly simulated. Special-purpose AI has been great at static, easily simulated games with rules that have been stable for centuries, like chess, Go, and even Diplomacy, but it has never been great in dynamic settings where the environment itself is unpredictable.

More general-purpose generative AI systems like GPT-4 can't even handle the static situations. Even with a lot of data, GPT-4 plays chess at roughly the level the top chess programs reached in 1976, nowhere near the current state of the art. At rock-paper-scissors (for which they have less data), they are at the level of a small child who hasn't quite gotten the point of the game. And they remain unstable in virtually everything they do.

Letting them run a war would be malpractice.

Update, brought to my attention moments after posting, new arXiv article:

Please, developers and military personnel, don't let your chatbots grow up to be generals.

Gary Marcus isn’t afraid (thus far) of smart AI; he is afraid (for now) of what happens when stupid AI is over-applied.

Is it clear what strategic brilliance a super-AI is even theoretically supposed to bring to war? It seems fairly easy to summarise what wins wars. In decreasing order of importance: 1. superior weapons technology (guns versus spears), 2. superior numbers (Red Army in WW2), 3. tactical acumen and superior movement that allow a side with, within reason, smaller numbers to achieve superior numbers locally (Napoleon), and 4. encircling the enemy (Hannibal; works only if they let you do that, of course). Orthogonal to all of these is logistics. Its importance varies depending on how long a war lasts, how complex the technology is (do you only need food, or also petrol and spare parts?), and whether it is acceptable to plunder for supplies.

Despite the glaring incompetence of many military commanders throughout history, all of these are well within the grasp of human intellect and can be and are in fact being taught in military academies. What would a superhuman genius add to this? Some 7D chess move that would have allowed the Inca empire to win against cannons, steel, and cavalry when it lacked all of these? Some flash of inspiration that allows a thousand people armed with automatic guns to pull off a surprise victory against a nuclear bomb that detonates above their heads? A genius of organisation that somehow enables an army to drive its tanks even when they have run out of fuel?

The underlying problem is, as so often, that the AI hypesters do not understand diminishing returns, a.k.a. the low-hanging fruit having already been harvested. Instead, they have to cling to the belief in exponential improvements, because otherwise their cultish ideology falls apart.

Loved that example :) I was laughing out loud! It perfectly illustrates the essence of LLM shortcomings in a wonderful way, making it accessible to those who have yet to fully grasp the difference between a 'meaningful series of words' and 'comprehension of time, space, and reality' (whatever those may be ;) ).