Disturbing and misleading efforts to defame Helen Toner through misdirection

OpenAI’s new board just made its loyalties clear. We should all be worried.

Let it not be said that Sam Altman doesn’t have his admirers. At least two proxies have gone after Helen Toner: one highbrow (an essay in The Economist by a pair of prominent authors, both on OpenAI’s board), one lowbrow (a post on X that got around 200,000 views).

Both read to me as deeply misleading, verging on defamatory. The lowbrow attack comes from an anonymous poster; in my view it is wrong on every point, but it is not worth wasting a lot of time on. In brief, before I get to the counterattack by the Board, which is far more worrying:

The X post seems to object to Toner’s claim that the board heard about ChatGPT via Twitter, on the grounds that a predecessor (the GPT-3.5 API) was already publicly available. The 3.5 API (geared towards programmers as a backend tool) was indeed available beforehand, but it was not, of course, the consumer-directed product, with gimmicks like word-by-word typing that give a false impression of humanness, that ultimately went viral. It is the latter, not the former, that led the late Dan Dennett to write an essay about the perils of counterfeit humans, arguing that “Companies using AI to generate fake people are committing an immoral act of vandalism, and should be held liable.” The Board should have been apprised that this was in the works.

The thread’s author claims never to have heard Sam say that he had no financial interest in the company, and argues that as a board member he had a right to a salary. But Sam did in fact tell the Senate, under oath, more or less that he had no substantive financial interest: “I get paid enough for health insurance. I have no equity in OpenAI.” The poster missed something that millions of others heard distinctly on live television; that’s not Toner’s fault.

The thread accuses Toner of “outright lying”, pointing to a real (but misunderstood) tension between Toner saying that a “lot of people didn’t want the company to fall apart, us included” and Toner’s reported statement that “the board’s mission was to ensure that the company creates artificial intelligence that ‘benefits all of humanity,’ and if the company was destroyed, she said, that could be consistent with its mission.” Here the author of the thread misses a key difference between what the Board preferred to do and what the Board was prepared to do, per its charter, in a worst-case circumstance. The fact that the Board agreed in November (when it did not see an absolute safety issue of the sort that would require the end of the company) to dissolve itself and restore Altman as CEO, rather than let the company dissolve once virtually all the employees threatened to leave, shows that its preference for keeping the company was genuine, and that it acted in good faith.

The post on X was not in good faith. Then again, I am not so sure about the Board’s essay in The Economist, either.

§

The highbrow attack, in The Economist, comes from two members of the current board: Bret Taylor (Chair of the current Board) and Larry Summers (perhaps best known as a former President of Harvard), both part of the crew that replaced Helen Toner and Tasha McCauley.

Some of what the essay says is true (e.g., “OpenAI has held discussions with government officials around the world on numerous issues”), but the core of the essay is misdirection (whether deliberate or accidental, I can’t say).

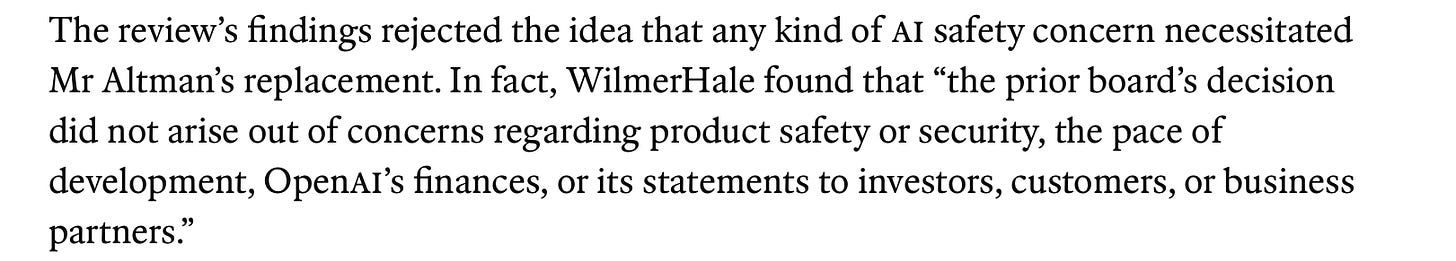

Fundamentally, the essay attacks a strawman, viz. that the firing of Altman was somehow precipitated by an immediate safety concern, thereby diverting attention from the board’s actual, stated concern (candor). The board never said that it was motivated by an immediate safety issue.

Taylor and Summers refer to the WilmerHale review, but leave the most important part out. Here’s what they do say, addressed to the safety bogeyman:

Here’s the thing: the (old) board never said that the firing of Sam was directly about safety; it said it was about candor (“not consistently candid”).

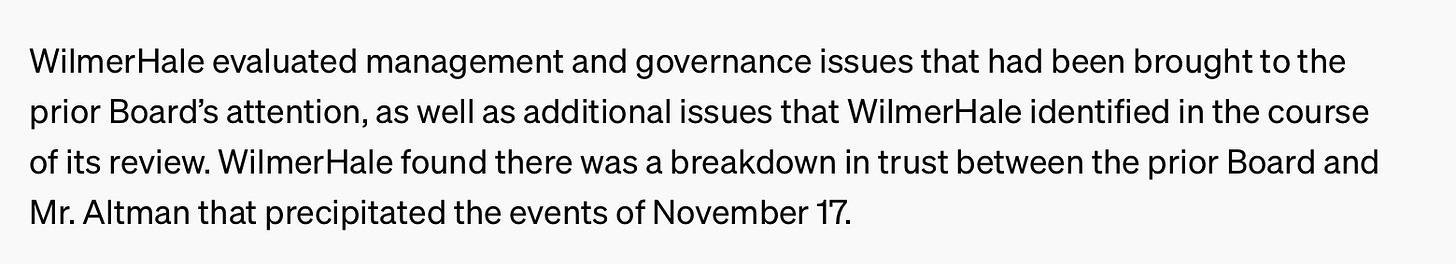

And the WilmerHale report appears to bear that out, per OpenAI’s own web page:

This is exactly in line with what Toner said, but nowhere do Taylor and Summers acknowledge it. (Because Taylor and Summers worked closely with WilmerHale, they certainly were aware of the breakdown in trust.)

Given that OpenAI put this line about a “breakdown in trust” (but not the full report) on its own website, and given that OpenAI did not go so far as to say that the breakdown in trust was purely in the Board’s imagination, we can safely conclude that Sam Altman’s actions played at least some role in that breakdown.

§

To think about the red herring of safety versus trust in a different way, recall what Toner said in recent days:

For years, Sam had made it really difficult for the board to do that job [of following the non-profit mission for humanity] by, you know, withholding information, misrepresenting things that were happening at the company, in some cases outright lying to the board.

I can’t share all the examples, but to give an example of the kind of thing that I’m talking about, it’s things like when ChatGPT came out in November 2022, the board was not informed in advance about that. We learned about ChatGPT on Twitter.

On multiple occasions he gave us inaccurate information about the small number of formal safety processes that the company did have in place, meaning that it was basically impossible to know how well those safety processes were working or what might need to change.

I wrote this paper which has been, you know, way overplayed in the press. The problem was that after the paper came out Sam started lying to other board members in order to push me off the board. So it was another example … that just like really damaged our ability to trust him.

All of that was, in the first instance, about trust, not safety. She didn’t say that GPT-4 was going to kill us all, or that the much-ballyhooed new algorithm Q* was some raging force that could not be controlled. Instead, she used words like “lying”, “withholding”, “misrepresenting”, “not informed”, “inaccurate information”, and “damaged … trust.”

Taylor and Summers talked a good game, but completely failed to engage with the substance of what Toner said, in favor of a distracting, invented argument. Toner didn’t say the board fired Sam for (immediate) safety violations; she said they fired Sam because they could no longer trust him. That seems to be accurate.

§

Tellingly, Taylor and Summers never use the word “candor” in the op-ed and don’t address trust at all; the only time they use a variant of the word “trust” is in a discussion of the trustworthiness of the software, not the trustworthiness of the leadership.

The degree to which they diverted attention from the core issue that led to Sam’s firing is genuinely disturbing, given that the board is our last and only line of defense if Sam chooses, for economic reasons, to do something genuinely dangerous.

And that’s no joke. In Sam’s own words, spoken to the US Senate, “My worst fears are that we cause significant, we, the field, the technology, the industry cause significant harm to the world.”

The Board’s job is to make sure that doesn’t happen — not to cover Sam’s ass.

§

Unfortunately, today’s letter makes quite clear where their allegiances lie.

§

It would be one thing if they were on the board of a for-profit, issuing deceptive, butt-covering statements to maximize profits; we all know that drill.

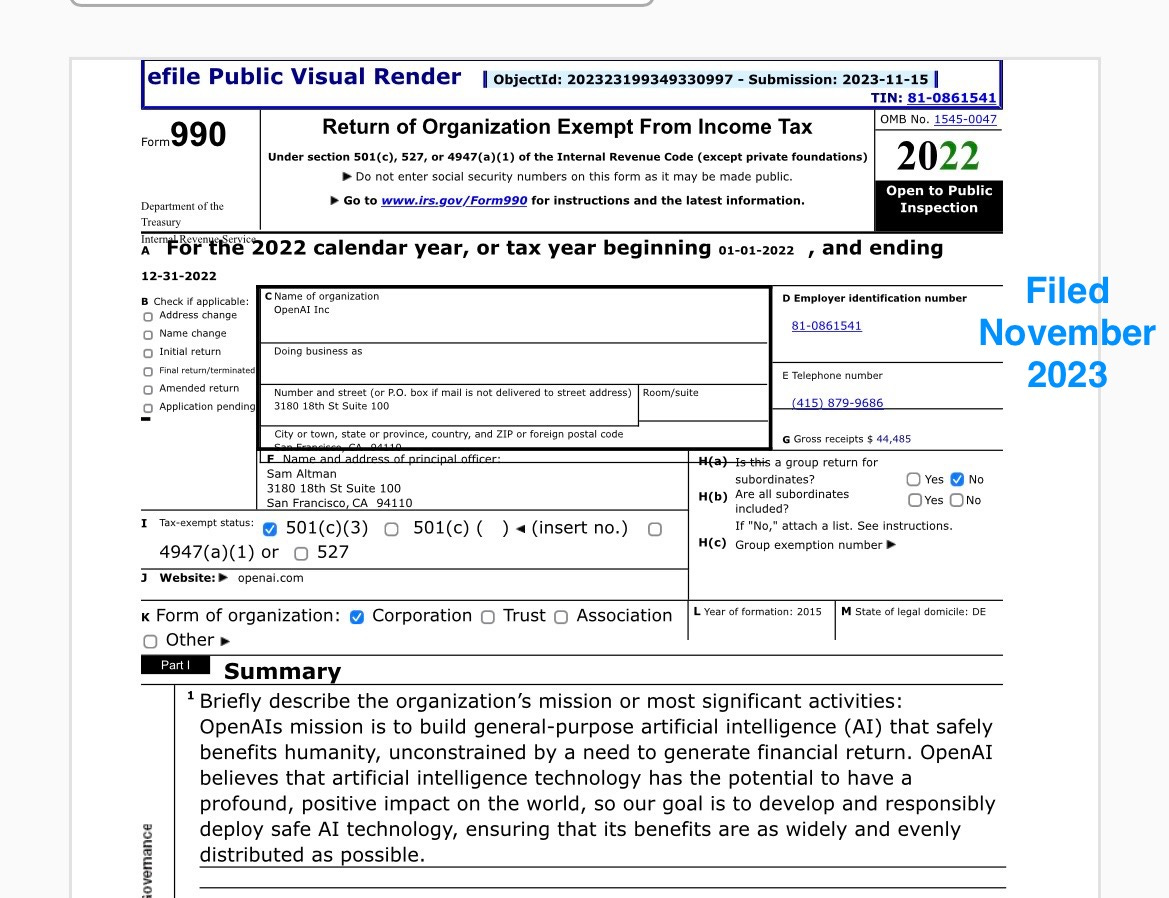

But OpenAI has been and continues to be a California-registered 501(c)(3) nonprofit, pledged not to profits but to humanity, and still receiving tax-exempt status on the basis of its promise to deploy AI safely:

§

Of course, there is a connection between safety and candor, and an obvious one at that: if Altman can’t be trusted, safety could become a serious issue.

The failure of two members of the new board (including Taylor, who chairs that Board) to draw that connection, coupled with their misdirection and unfair attack on Toner, is absolutely terrifying.

§

If OpenAI does eventually develop deeply risky technology, it is incumbent on the nonprofit’s board to address that, and if the board can’t trust Altman, I don’t see how it can do its job.

But I also don’t see how they can do their job of upholding the nonprofit’s pledged safety- and humanity-focused mission if they don’t even recognize that Altman’s apparent issues of trustworthiness are relevant to their job.

As far as I can tell, Toner and the earlier board acted in good faith. The misleading new op-ed has made me lose confidence in the new board.

§

And it is time to put the nonprofit ruse to an end. OpenAI does not behave like a nonprofit and has not for a long time. If they cannot assemble a board that respects the legal filings they made, and cannot behave in keeping with their oft-repeated promises, they must dissolve the nonprofit, exactly as the organization Public Citizen has argued.

Gary Marcus just wants OpenAI to do what it promised.
