Eric Schmidt’s Risky Bet on AI and Climate Change

Is AI so valuable to society that we should place this bet?

In a brief aside in a recent interview, Eric Schmidt argued that, despite AI’s rapacious energy demands, he would rather bet on AI solving the problem than try to constrain AI:

In the two-minute clip, Schmidt concludes: “My own opinion is that we’re not going to hit the climate goals anyway because we are not organized to do it and yes the needs in this area [AI] will be a problem. But I’d rather bet on AI solving the problem than constraining it.”

Schmidt, needless to say, has a large stake in companies building AI, and I think we need to take those potential conflicts of interest seriously.

More broadly, I think we must ask whether the benefit to society of AI actually warrants taking that bet. I find his argument problematic:

One key assumption Schmidt makes is that AI will eventually be worth essentially arbitrary environmental costs. For now, this seems very premature; I don’t see anything like a tight argument for it. Almost every putative virtue of AI (aside from making a small number of people rich) has been promissory. We have been told that AI will “solve physics” (per Sam Altman) and make major breakthroughs in medicine, battery technology, and so on, but so far none of that has materialized. All of the major potential virtues are quite speculative. (Some of the drawbacks, by contrast, which depend far less on reliable, tight causal reasoning, are already here to stay: misinformation, nonconsensual deepfake porn, and deepfake kidnapping scams.) The case for techno-utopia, especially with current technology (which struggles with reasoning in open-ended domains, particularly in rare situations with small amounts of specific data), is so far very abstract and vague. Maybe AI will transform society for the better, but it’s hard to put a probability on it (or a timeline). All of this seems a very weak premise for placing such a massive bet.

The case that AI will do serious harm to the environment if we continue on the current path is actually much stronger. The only potent trick anyone seems to have is scaling, and the more scaling the big tech companies do, the more power they will consume. Microsoft wants to turn the Three Mile Island nuclear plant back on and take its complete power output. Coal plants that were slated to be shut down may come back online. If GPT-5 isn’t magic, people will try for GPT-6 and risk even more harm to the environment, multiplying the current harm (which is difficult to calculate conclusively, since so little information is disclosed) by many orders of magnitude.

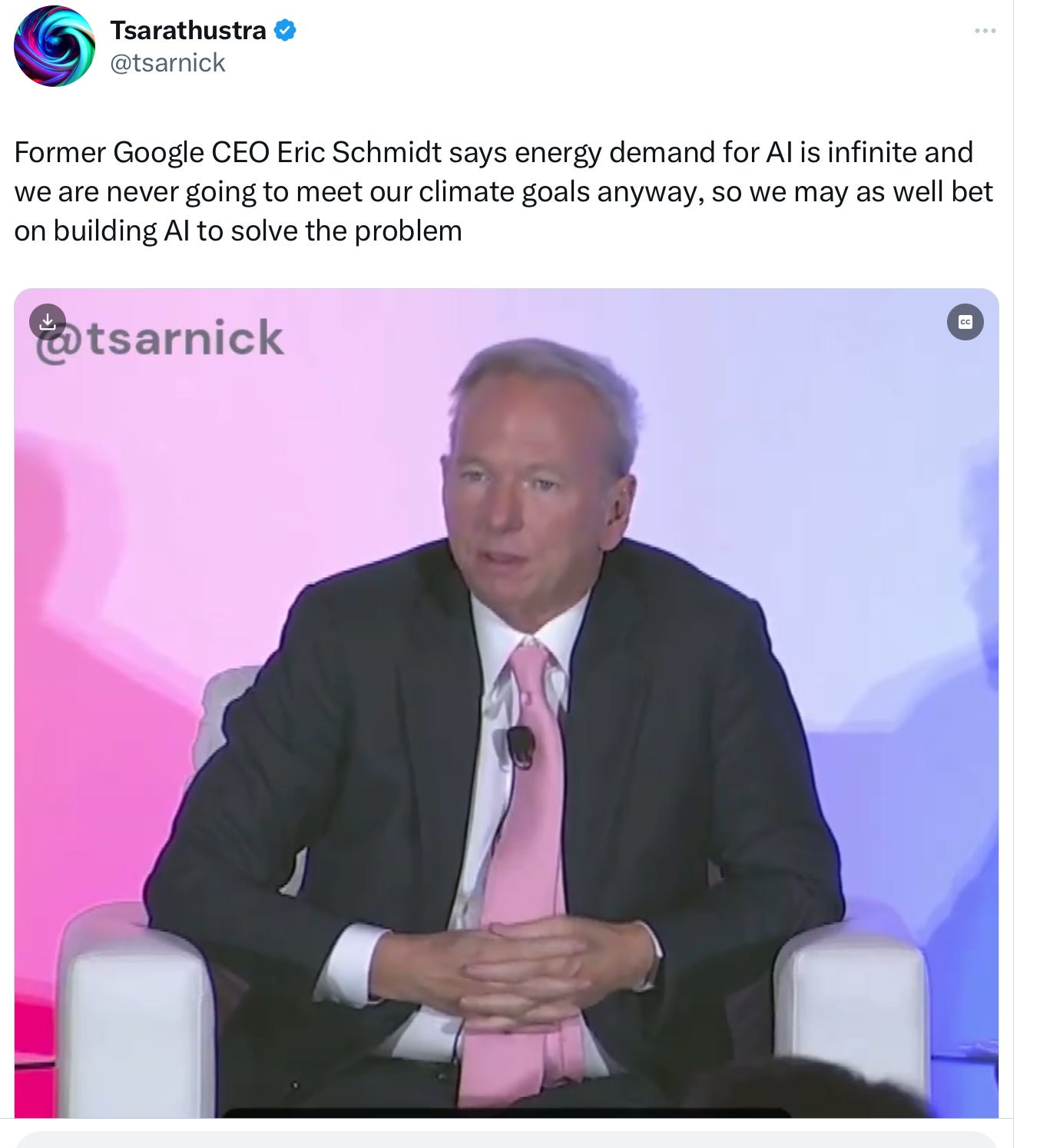

In the clip, Schmidt runs together many different forms of AI. GenAI, which is consuming all the power, probably isn’t going to do a whole lot to address climate change when it can’t even reason reliably about river-crossing problems. Other, more domain-specific and specialized forms of AI might help, but those aren’t the ones getting the massive funding, or doing the massive harm. Machine learning pioneer Thomas Dietterich’s comment on Schmidt’s bet captures this well:

LLMs are not the AI we need to address climate change; they are the AI we would use if we wanted to risk serious harm to the climate. Clarifying the difference between the two is critical.

§

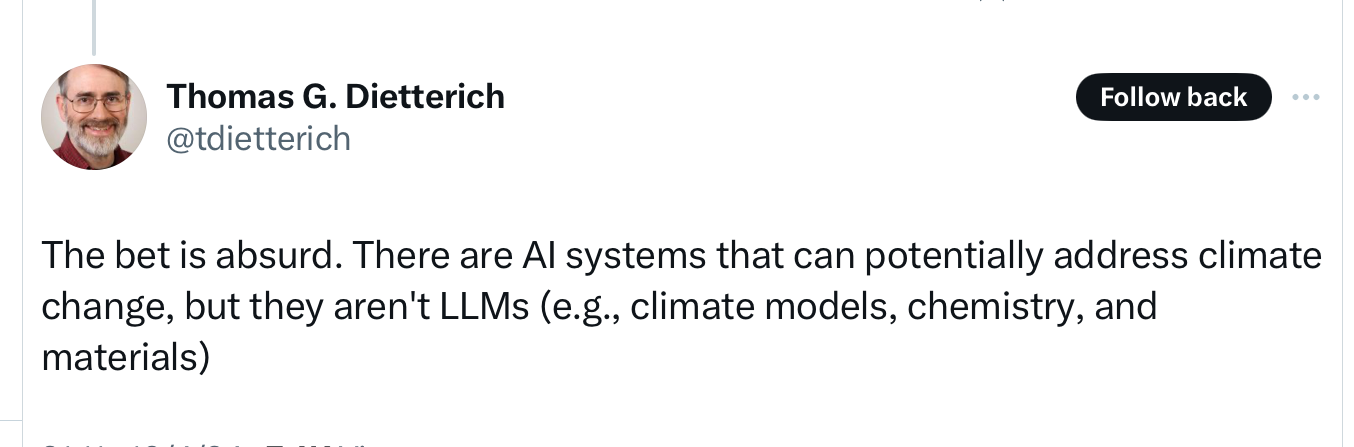

Another take on X:

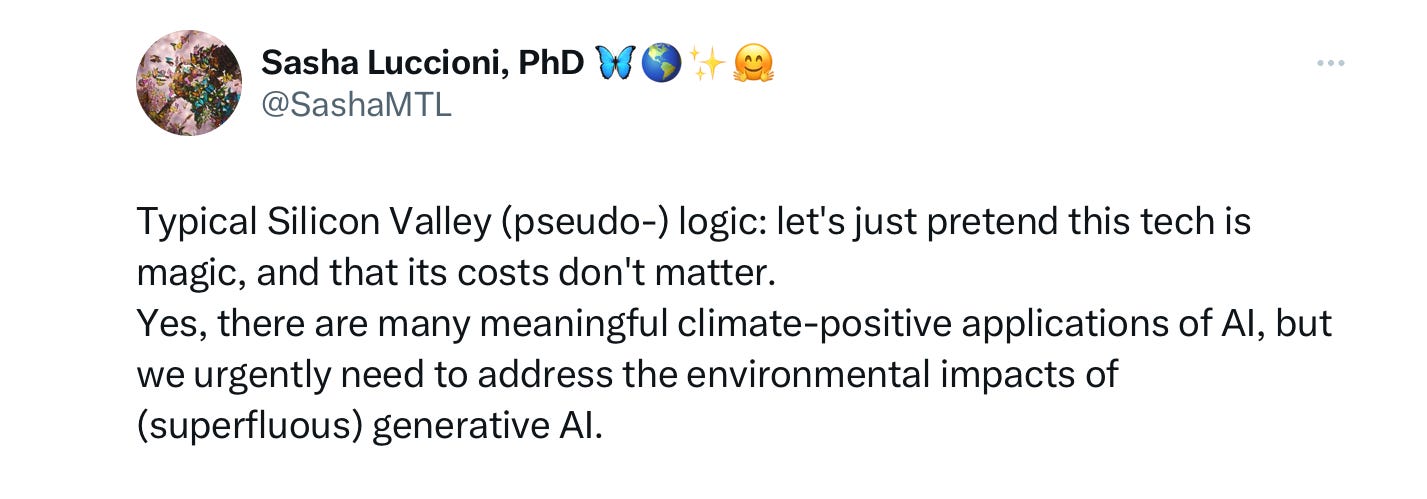

Or in the words of Sasha Luccioni, an AI researcher who has looked extensively at AI’s environmental costs:

§

Ultimately, we can’t, or at least shouldn’t, place massive bets like these without transparency and accountability. We need independent scientists asking whether the best technologies (rather than simply the ones most conveniently at hand) would be used, what the odds of success are, and what the costs of any major bet would be, so that we can make a careful decision about whether it is worth it. It shouldn’t be up to a few billionaires, and profit-driven companies with unelected leaders shouldn’t decide on their own for all of us. Society has to have a vote here.

§

For the love of all that is beautiful on this planet, I hope that we think extremely carefully before going literally all in with the Earth on an unproven technology.

Chatbots and movie-synthesis machines (perhaps the most energetically costly systems) are fun, maybe even amazing, but I don’t think we should be risking our planet for them.

Diverting funding from them to more focused efforts around special-purpose AI for addressing climate change might make more sense.

Gary Marcus, scientist, author, and entrepreneur, is the author of Taming Silicon Valley, and co-author, with Ernest Davis, of Rebooting AI.
