Gary Marcus vs Sam Altman on GPT-o1 aka Strawberry

You might be surprised

Bear with me. I will get to Strawberry in a moment.

But first let’s rewind. Remember how last November I said that Sam Altman was turning into Gary Marcus, a few days before he got fired?

Not a joke, that really happened; here’s an excerpt.

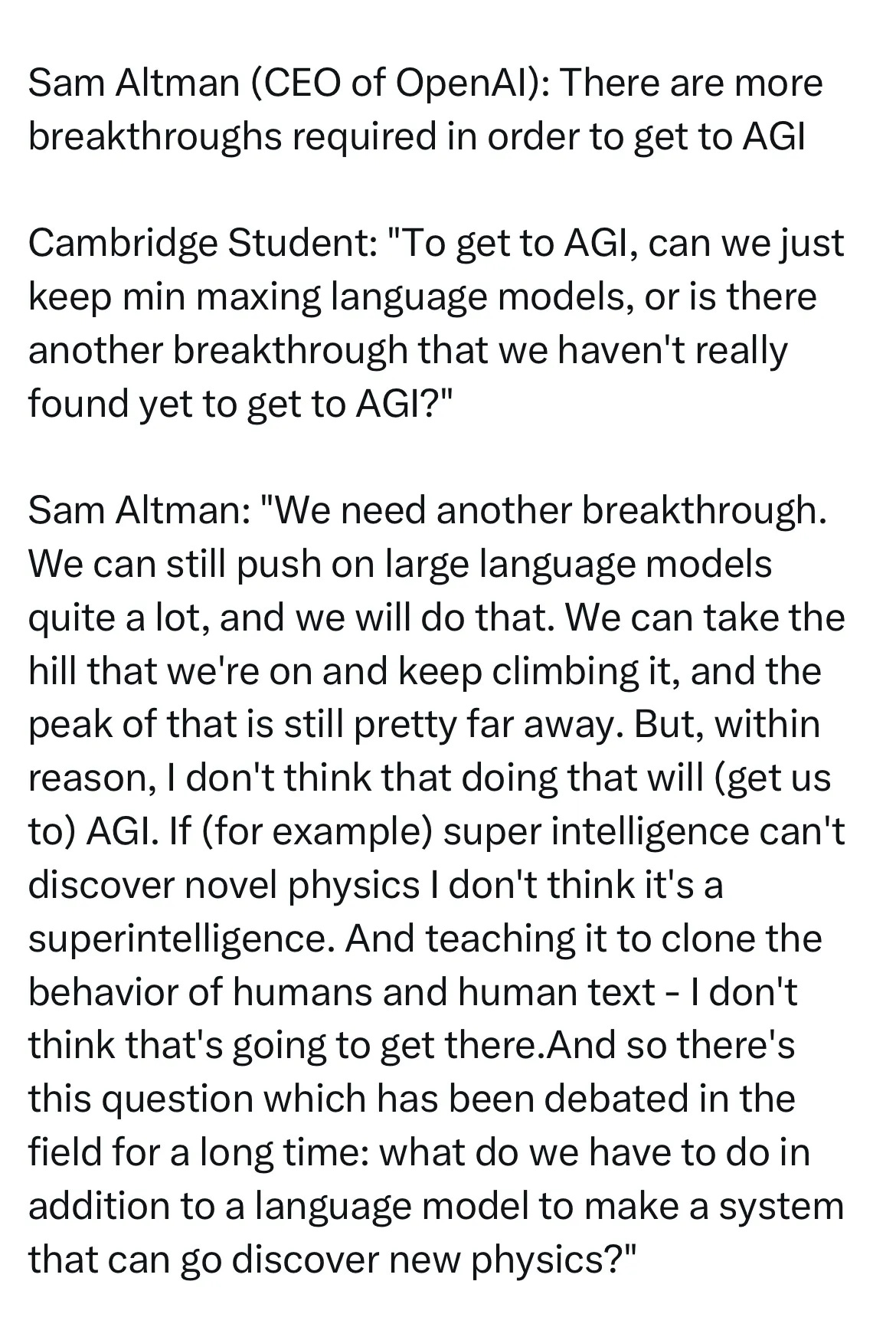

Altman had, much to my surprise, just echoed my longstanding position that current techniques alone would not be enough to get to AGI.

Everyone forgot about that essay a few days later, in the Great Firing and Rehiring drama that followed.

§

Fast forward to today. I promise it will all come together in a moment.


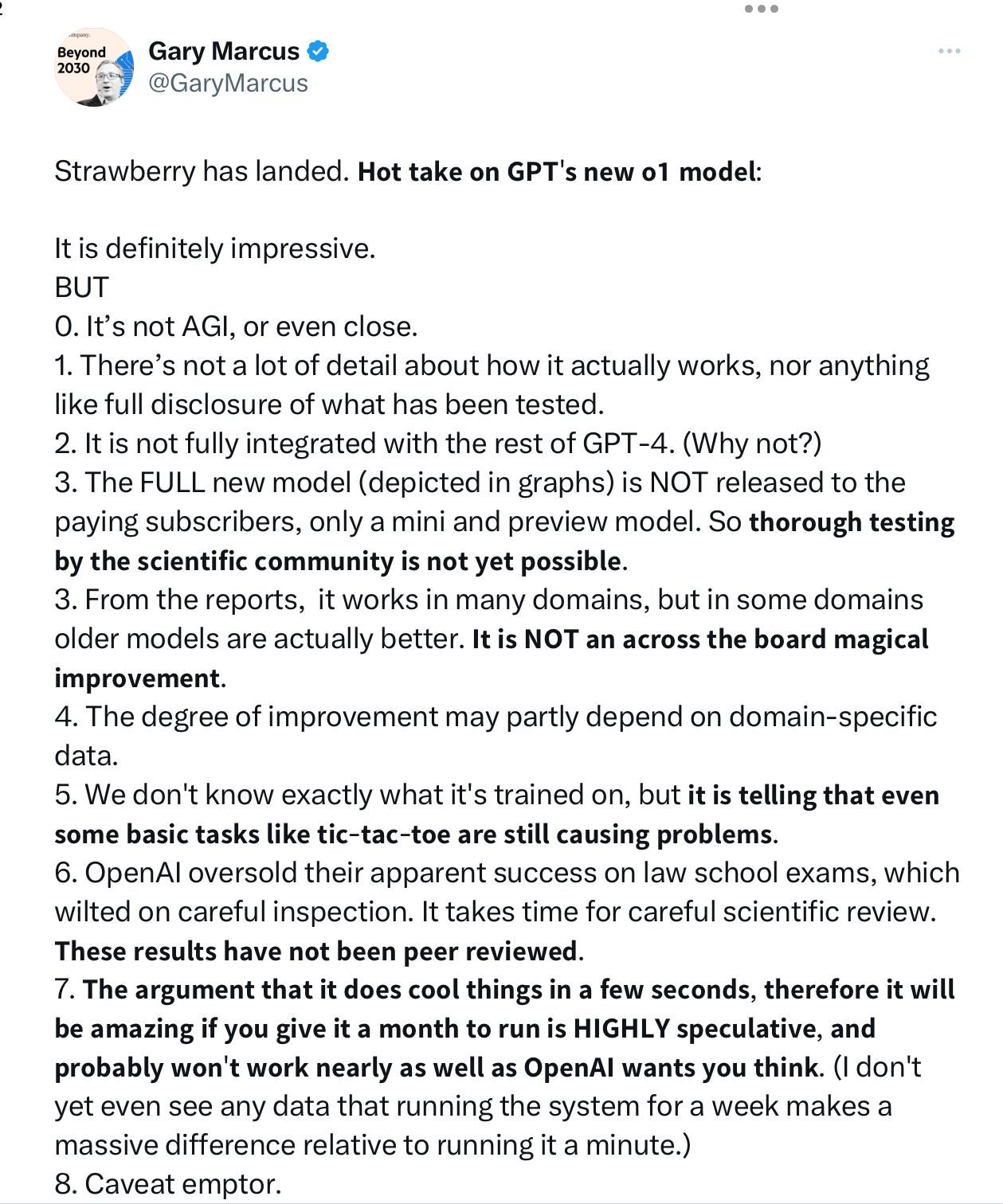

OpenAI’s latest, GPT o1, code named Strawberry, came out. In the midst of an insanely packed day, I rushed out a hot take on X, with my usual cautions about how LLMs aren’t really AGI:

(An additional point I should have made is that the new system appears to depend heavily on synthetic data, and that such data may be easier to produce in some domains (such as those in which o1 is most successful, like some aspects of math) than others.)

Fanboys, as usual, called me a hater.

§

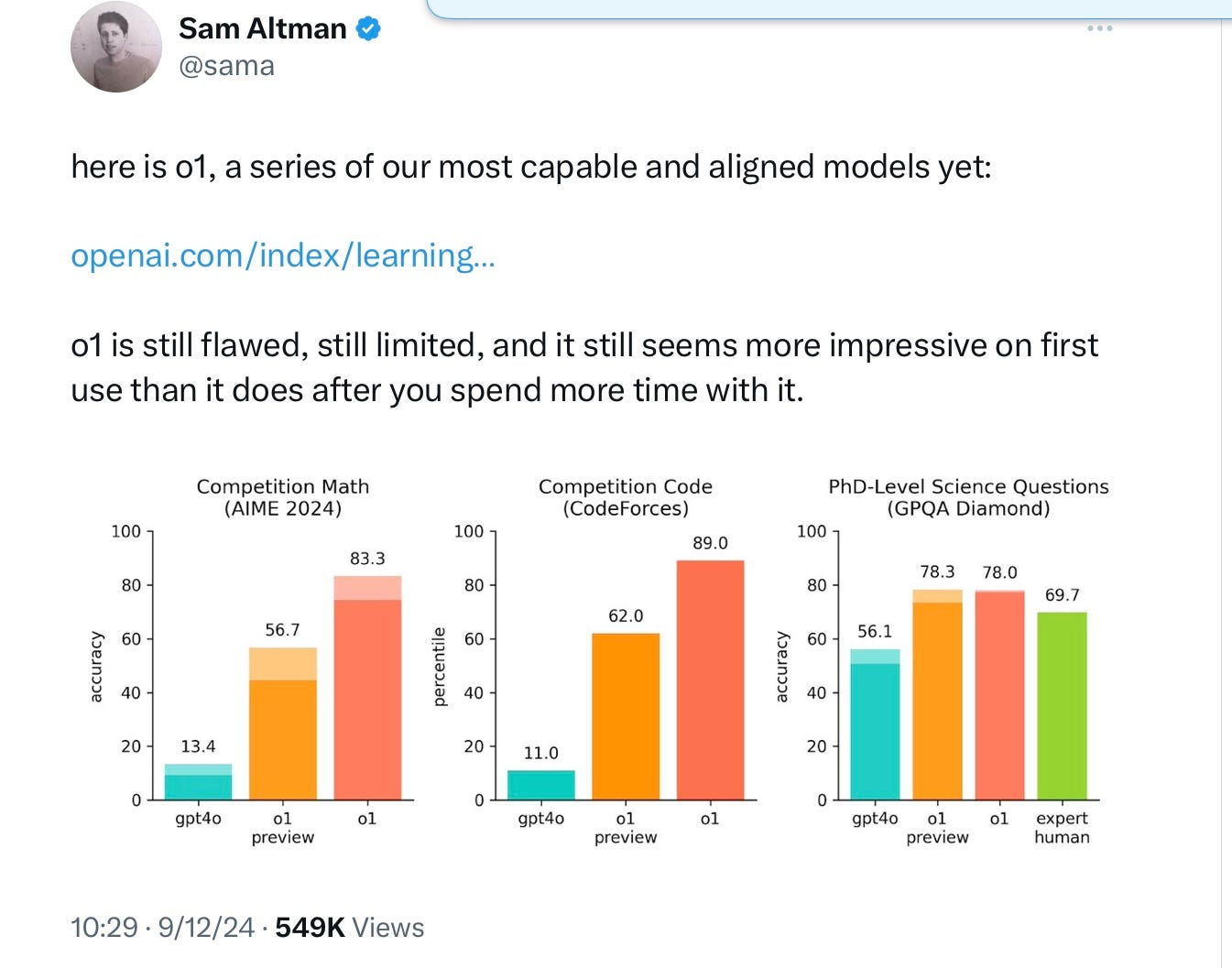

What I didn’t know, as I scurried to get out my thoughts, was that Sam (who has had much more time to play with 4o than I have) had put out his thoughts, too, at roughly the same time:

“Still flawed, still limited, seem more impressive on first use”. Almost exactly what I predicted we would see with GPT-4, back on Christmas Day 2022.

The more things change, the more they remain the same.

Even Sam is starting to see it.

Gary Marcus continues to dream of a day in which AI research doesn’t center almost entirely around LLMs.

Remind me, we need this, why?

I am VERY curious about whether they have started to incorporate symbolic reasoning or some remnant of good old fashioned AI here. In fact, I thought of you when reading the Verge coverage. "The model hallucinates less" hardly raises the bar. https://www.theverge.com/2024/9/12/24242439/openai-o1-model-reasoning-strawberry-chatgpt