Gaslighting and reality in AI

Some things that have and haven’t changed over the last few years

In the fall of 2019, GPT-2 had started to grow popular, and I started writing about it: a whole thread of examples of how easily it could be provoked into saying dopey things, and how superficial its understanding of reality was.

Yann LeCun tried, at that time, to minimize my objections, saying they were just about math, in machines that weren’t trained to do math, and that nothing deeper was amiss:

I said the problem wasn’t math per se, but that large language models didn’t represent robust world models of how events unfold over time; I stand by this 100% even today.

Yann LeCun battled me at every step of the journey, most notably in this rather bellicose and somewhat condescending “rear-guard battle” tweet, in which he said that all my concerns had long since been addressed.

What’s changed since 2019?

I haven’t changed. I still often point out the dopey things that GPT-2’s grandchildren say. And I still attribute those problems to a lack of world models that represent the ways in which things unfold over time. I still see this as the central flaw in large language models.

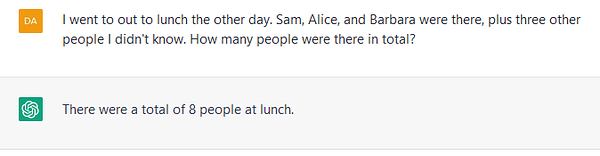

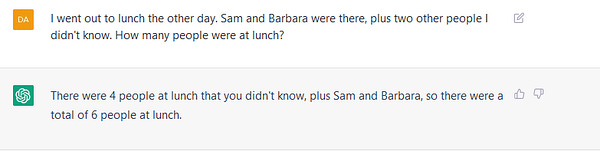

And GPT hasn’t really changed, either. Its output is more fluent, and lots of individual mistakes have been addressed. But more than three years on, even after a new update a few days ago focused on math, it’s still having a lot of problems with easy word problems like this:

and this

§

You know who has changed? Yann LeCun. LeCun is no longer accusing me of fighting a rearguard battle when it comes to large language models.

Instead, he’s up there with me (never citing me, of course), making the exact same points I was making in 2019, as if he never disagreed.

Oh, one other change, too: a bunch of other people have taken notice of what LeCun is up to, and how he has retrenched, without a shred of acknowledgement, as if Oceania had always been at war with Eurasia. After the tweet above, one person DM’d me this morning, kidding, “Be honest. Did you hack his account to write that? lol”.

Another (someone I don’t know) wrote a long thread about LeCun’s constant gaslighting, which starts like this:

My favorite is Step 6, “Never agree with the aforementioned critics but start mimicking their approach.”

To sum up, Yann LeCun may have changed, but I haven’t. My goalposts haven’t shifted; I still want A(G)I that knows what the f___ it’s talking about, and GPT still doesn’t.

Maybe things will be different in another three years.

Gary Marcus (@garymarcus), scientist, bestselling author, and entrepreneur, is a skeptic about current AI but genuinely wants to see the best AI possible for the world—and still holds a tiny bit of optimism. Sign up to his Substack (free!), and listen to him on Ezra Klein. His most recent book, co-authored with Ernest Davis, Rebooting AI, is one of Forbes’s 7 Must Read Books in AI.

Epic summary, Gary, and lots of gold nuggets! I, for one, am glad that your views are finally being heard, despite all the gaslighting over the last 3 or 4 years and the wishful thinking that is still rampant now. Please keep speaking up and bringing us more realistic and clear-headed views on AI, because we need them to make real progress.

I have been *stunned* at LeCun, perhaps naively. Through pure chance, I encountered theorists who made me extremely skeptical of the relationship between these types of agents and what was historically meant by "AI" and now by "AGI"; later, working with ML made me even more skeptical. To see people I once considered opponents in this debate silently switching sides has been shocking to me, but probably shouldn't have been (any more than seeing founders trumpet their current fixations and enterprises before later pretending they never bought the hype is).

Love your work, of course!