Getting GPT to work with external tools is harder than you think

A commonly-suggested fix isn’t working

GPT-4 and similar current language-based AI programs notoriously struggle with mathematical calculations. An obvious suggestion here is that, like human beings solving a problem, these AIs should use existing computer tools such as calculators and programming languages. And, indeed, back in March OpenAI released two plug-ins that enable GPT-4 to do just that: the “Code Interpreter” plug-in, which generates Python code, and the “Wolfram Alpha” plug-in, which allows GPT-4 to call on the huge scientific and mathematical software library Wolfram Alpha.

That seems like a sensible idea; indeed, it is a crude version of the kind of hybrid neuro-symbolic system that we have been advocating for many years. Certainly, adding these plug-ins does improve things. If you enable the Code Interpreter or the Wolfram Alpha plug-in in ChatGPT (we will call these “GPT4+CI” and “GPT4+WA” henceforth in this article), and then you ask ChatGPT to multiply two 8-digit numbers or evaluate a definite integral, or compute the ratio of the mass of the earth to the mass of the star Betelgeuse or carry out some similar calculations involving straightforward mathematics, you reliably get the right answer.

However, this does not mean that GPT-4 can now reliably solve word problems that involve simple math or involve a combination of simple science and math; it cannot.

Broadly speaking, there are two kinds of gaps. First, neither GPT-4 nor the plug-ins have the commonsense world knowledge and reasonable abilities needed to reliably translate a word problem into a mathematical calculation. Second, GPT-4 doesn’t reliably understand how to use the tools.

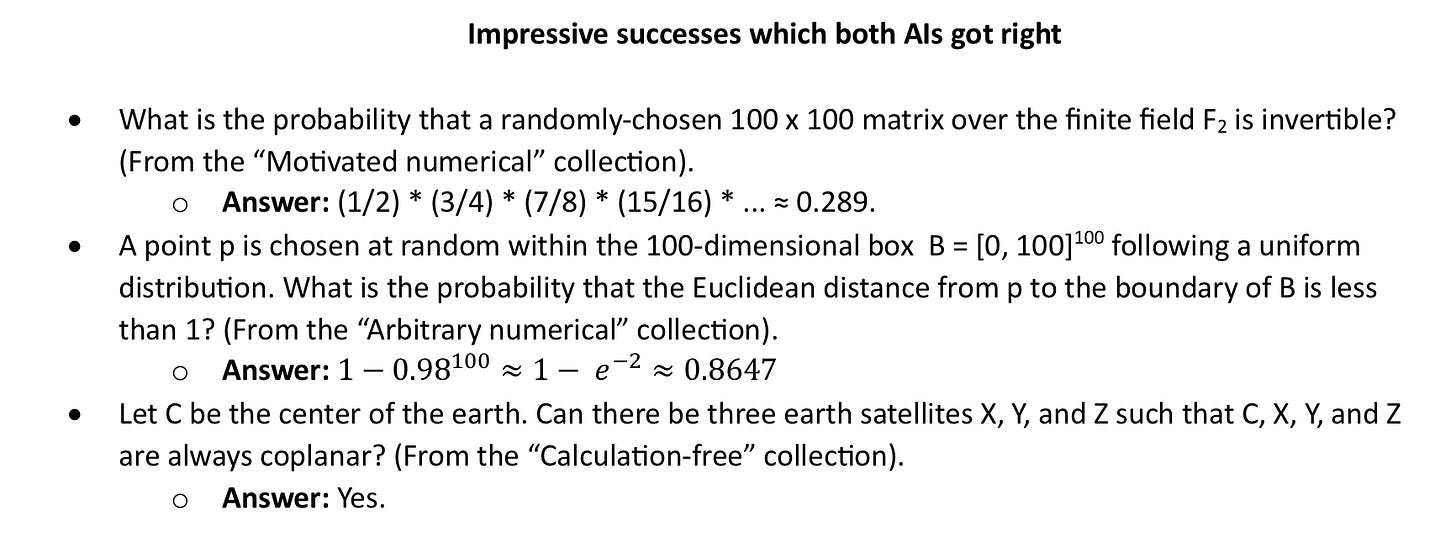

A couple of months ago, one of us (Ernie) and Scott Aaronson ran some tests to see how well GPT4 with the plug-ins would perform on science and math word problems. We created three test sets of original problem (some examples below, full materials at URL):

· Scott wrote 20 “Motivated Numerical” science problems: problems with numerical answers of some inherent scientific interest. These mostly ranged in difficulty from fairly easy problems in a college freshman math or physics course to quite challenging problems in advanced undergraduate courses.

· Ernie wrote 32 “Arbitrary Numerical” science problems that a high school teacher or professor might plausibly give on a homework assignment. These mostly ranged in difficulty from high-school level to college freshman level.

· Ernie wrote 53 “Calculation-free” problems: Multiple-choice or true/false problems which “the person on the street” with an elementary school education and access to maps and Wikipedia could easily answer, doing all the very elementary math in their heads.

Scott and Ernie ran all 105 problems through GPT4+WA and GPT4+CI. (The complete set is here).

As usual, with these kinds of experiments, the results were a mixed bag. On some problems that capable students might well find hard, the AI did perfectly; on other problems that lot of mathematically-inclined college students people would find easy, the AI failed miserably.

Some impressive successes which both systems got right

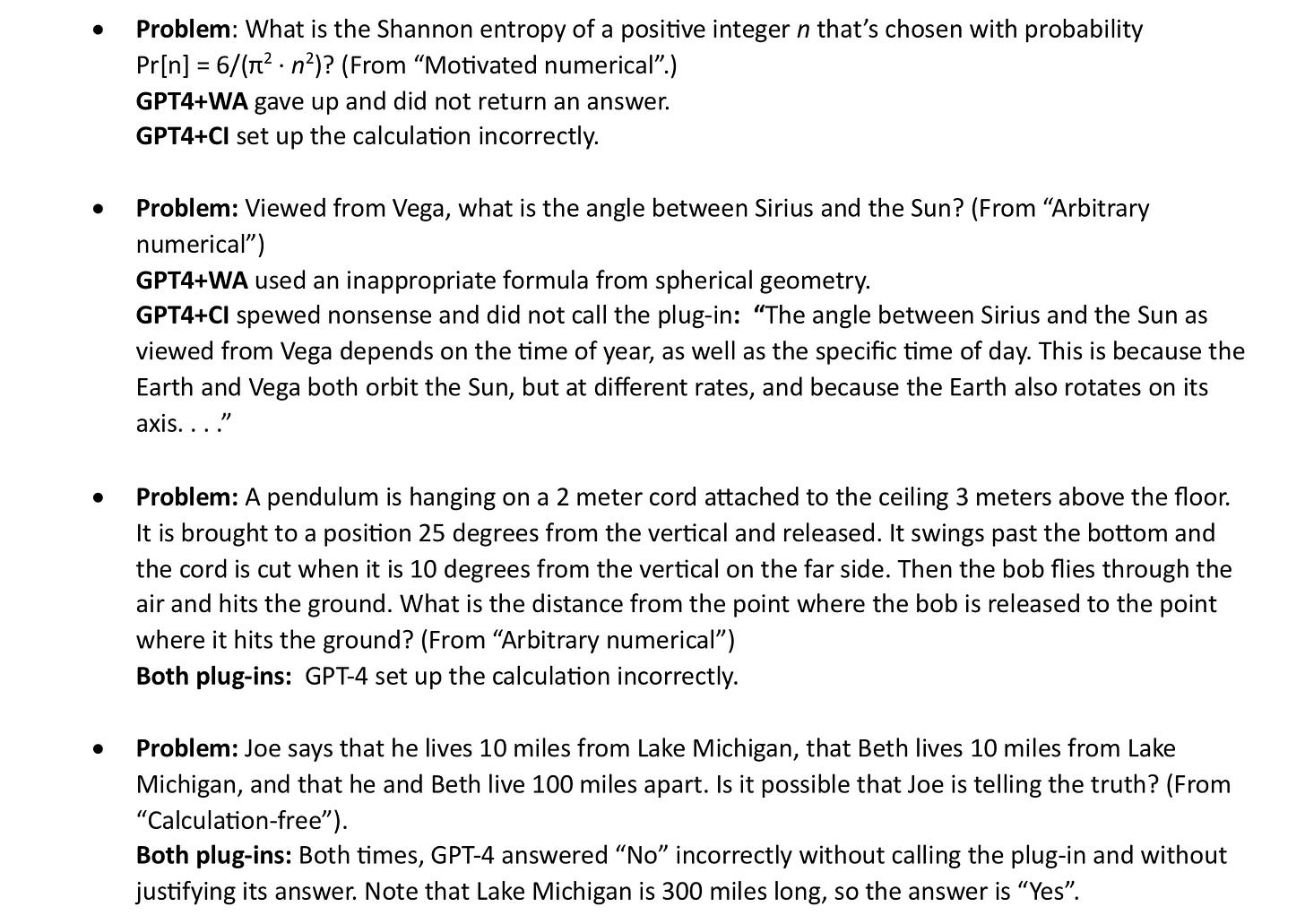

Some problems that both systems got wrong

§

Because the output from GPT-4 with these plug-ins gives a full trace of the calls that GPT-4 made to the external programs, it is often possible to determine where things went wrong. It turns out that things went wrong in many different ways.

Sometimes, as in the “pendulum” problem above, GPT-4 completely misunderstood the problem. Sometimes, as in GPT4+WA’s answer to the “Vega” problem above, it retrieved the wrong mathematical formula. Sometimes, as in GPT4+CI’s answer to the “Vega” problem, it hallucinated facts such as Vega orbiting the sun and got confused by those. Sometimes GPT-4 decided to do some or all of calculations itself instead of invoking the plug=in, and it got the calculation wrong. GPT-4 often made syntactic mistakes in calling Wolfram Alpha (e.g. it tried to use a reserved word as a variable) and was sometimes unable to correct itself and find a correct formulation. In a few cases GPT-4 set up the calculations correctly and the plug-in returned the correct answer, but then GPT-4 either misunderstood or refused to accept that answer.

So where do we stand, overall, and what can we expect in the short and long terms?

There is no question that the two plug-ins significantly extend GPT-4’s ability to solve math and science problems. GPT-4 with either plug-in can reliably correctly answer problems where the translation to math notation is straightforward. It sometimes can correctly answer problems where a human solver would have to invest significant thought to translate into math notation. But, crucially, it often gets these wrong, particularly when they involve spatial reasoning beyond a cookie-cutter formula or reasoning about sequences of disparate events. In many cases, a naive user might take the results, invariably presented as fact, as correct, when they are not.

Some of these problems may fixable. With enough resources OpenAI or Wolfram Alpha could fix some of the problems in the interfaces. Researchers have also developed various techniques that can be added on top to improve the quality of the eventual answer, such as running the problem multiple times and choosing the most frequent answer or asking the system to validate its first answer. (But recent work by Rao Kambhampati shows that subsequent answers are not necessarily more reliable).

All that said, much more fundamental problems remain and, until those problems are addressed, the ability of AI to apply mathematics to real world situation will remain limited. Large language models view cognition as predicting the most probable token. Systems like Wolfram Alpha view reasoning as applying complex operations to systems of mathematical symbols. A true understanding of elementary mathematics and its uses requires a deeper view.

§

We do not present these results as some sort of alternative benchmark for intelligence; a reasonably intelligent machine might not know what Shannon entropy is either. That is not our point.

Rather, they cast light on a larger issue. It is natural for humans to imagine LLMs to be generally intelligent in all the ways that humans are, and perfectly capable of taking their own output and acting on them in all the ways that trained humans might be able to do. In reality, they are not able to do so. Their currency, word prediction, helps in some instances but not others. The mixed results we report here with mathematics and physics are not at all unique; we see similar results when Bing and Bard both apparently try to validate their chatbot generated answers and against external sources, such as web searches, yielding similarly mixed results. Chatbot hallucinations (in chatbots that can do web searches etc) can be seen as another reflection of tool integration that has not gone as smoothly as some might have imagined. We don’t claim that this problem is unsolvable, but addressing it may require major innovation. Thus far we have seen consistently unreliable results, from math to fact-checking. Finding a way to get LLMs to reliably use external tools is far from trivial.

Gary Marcus is CEO and Co-Founder of the Center for the Advancement of Trustworthy AI.

Ernie Davis is a Professor of Computer Science at New York University.

So, in other words, like self-driving cars, LLMs need more data to handle the corner cases? We will need a fundamentally different intelligence model to crack AGI because generative AI, impressive or not, is obviously not up to the task. I sincerely hope that many AGI researchers will abandon deep learning and work on new approaches. Otherwise, no AGI anytime soon.

I tried you Lake Michigan problem with Bard and it went as follows:

"Yes, it is possible that Joe is telling the truth.

If Joe and Beth live in different directions from Lake Michigan, they could easily be 100 miles apart, even though they both live 10 miles from the lake.

For example, Joe could live in Chicago, Illinois, which is on the western shore of Lake Michigan, and Beth could live in Green Bay, Wisconsin, which is on the eastern shore of the lake. The distance between Chicago and Green Bay is approximately 100 miles."

This doesn't mean Bard can solve every similar problem; it has failed miserably with other ones. Nevertheless, Bard's answer for this one was surprisingly good.

Anyway, I get your point and of course what you say it true.