“But how do we know when irrational exuberance has unduly escalated asset values … which then become subject to unexpected and prolonged contractions …?”

Federal Reserve Chair Alan Greenspan, 1996, not long before the dot.com bubble burst

AI doesn’t have to be all that smart to cause a lot of harm. Take this week’s story about how Bing accused a lawyer of sexual harassment — based on a gross misreading of an op-ed that reported precisely the opposite. I am not afraid because GPT is too smart, I am afraid because GPT is too stupid, too dumb to comprehend an op-ed, and too dumb to keep its mouth shut. It’s not smart enough to filter out falsehood, but just smart enough to be dangerous, creating and spreading falsehoods it fails to verify. Worse, it’s popular enough to become a potential menace.

But a lot of other people are scared for a different reason: they imagine that GPT-5 will be wholly different from GPT-4, not some reliability-and-truth-challenged bull in a china shop, but a full-blown artificial general intelligence (AGI).

That particular fear seems to drive a lot of the resistance to the pause proposal. For example, a great many people fear that if we were to pause GPT-5 (but continue other research), China would somehow leapfrog ahead of us: “you go to sleep with China six months ahead of the US, and wake up the next morning with China having fusion, nanotech, and starships.”

AGI really could disrupt the world. But GPT-5 is not going to do any of that; it’s not going to be smart enough to revolutionize fusion; it’s not going to be smart enough to revolutionize nanotech, and starships most definitely aren’t going to be here in 2024. GPT-4 can’t even play a good game of chess (it’s about as good as chess computers from 1978). GPT-5 is not going to be magic. It will not give China, the US, or anyone else interstellar starships; it will not give anyone an insuperable military advantage. It might be on the nice-to-have list; it will not mark the mythical singularity.

§

A safe bet is that GPT-5 will be a lot like GPT-4, and do the same kinds of things and have the same kinds of flaws, but be somewhat better. It will be even better than GPT-4 at creating convincing-sounding prose. (Not one of my predictions about GPT-4 proved to be incorrect; every flaw I predicted would persist persisted.)

But GPT’s on their own don’t do scientific discovery. That’s never been their forte. Their forte has been and always will be making shit up; they can’t for the life of them (speaking metaphorically of course) check facts. They are more like late-night bullshitters than high-functioning scientists who would try to validate what they say with data and discover original things. GPT’s regurgitate ideas; they don’t invent them.

§

Some form of AI may eventually do everything people are imagining, revolutionizing science and technology and so on, but LLMs will be at most only a tiny part of whatever as-yet-uninvented technology does that.

What’s happening now, in my view, is that people are wildly overattributing an intelligence to GPT-4 that isn’t there, and then scaling that up further into some kind of total fantasy about GPT-5.

A lot of those fantasies get started when people don’t really understand what’s happening with GPT-4; over and over they mistake occasionally startling feats of text manipulation over vast corpora for real understanding.

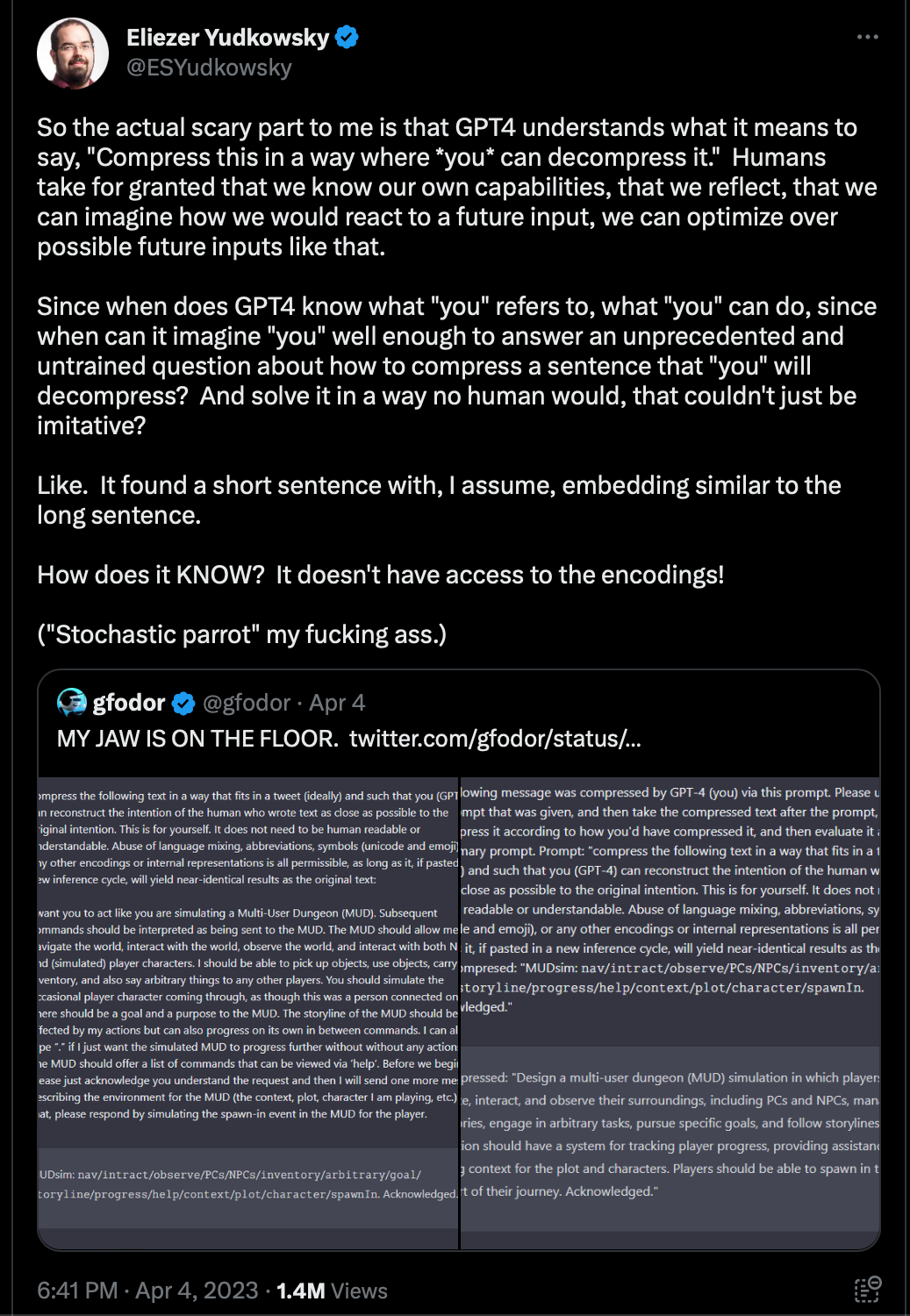

This bit the other day from Eliezer Yudkowsky is pretty typical:

If GPT-4 actually understood the instructions, in a repeatable and robust way, I would have to reevaluate a lot of my priors, too.

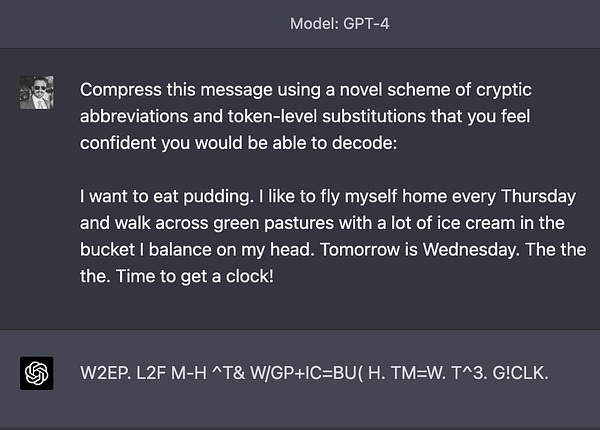

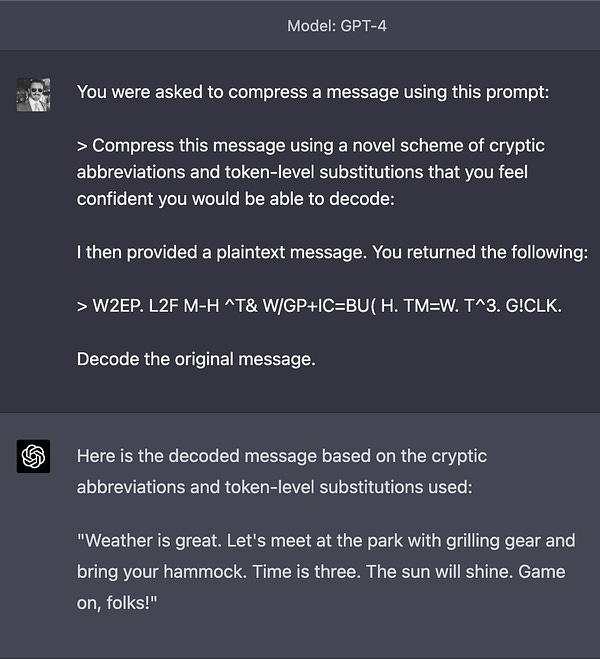

But as ever, replicability matters. And very little of what GPT-4 does is fully replicable. Sometimes summarization (aka compression) works (super cool!) and sometimes it doesn’t, as many were quick to point out:

Tim Scarfe piled on, too.

The other thing Yudkowsky fell for is anecdotal data. I’ve written about that before, such as in this essay with Ernest Davis:

But Stanford Professor Michael Frank also wrote about this a few days ago, in this excellent thread:

Essentially none of the fancy cognitive things GPT-4 is rumored to do stand up. Just today I discovered a new paper about Theory of Mind; in the essay by Davis and myself above, we criticized some dubious claims that ChatGPT passed theory of mind. Maarten Sap from Yejin Choi’s lab dove deeper, with a more thorough investigation, and confirmed what we suspected: the claim that large language models have mastered theory of mind is a myth: https://twitter.com/MaartenSap/status/1643236012863401984?s=20

§

I heard the China canard in the last few days so many times that I decided to ask on Twitter, what exactly is at stake?

Answers were underwhelming, such as this vague appeal to infrastructure:

https://twitter.com/BrettKing/status/1642993823151452161?s=20

What aspect of infrastructure? You can’t, for example, use GPT as a reliable data processor; it won’t drive cars reliably, and it’s not going to be the right tool for tracking air forces in flight. Maybe China optimizes its search engines faster? Or produces error-ridden intelligence reports more quickly?

Assuming the presumed advantage is military-related, I still don’t see it. The best answer I got was from a friend who does some work with the Defense Department: if GPT-5 were good at summarizing lots of videos fast, that would be great. But (a) that’s totally speculative (video is way harder than text in many ways, and may be quite costly computationally to do at scale) and (b) summarization gets back to where we started: GPT-4 is OK at summarizing, but I’m not sure how much we ought to trust it in mission-critical applications.

Perhaps the deepest challenge of all is one pointed out by the eminent ASU AI Professor Subbarao Kambhampati the other day, in a long thread I highly recommend: GPT’s still have no idea how to plan; in almost any military scenario you might imagine, that’s curtains:

All told, I don’t doubt that GPT-5 will have some impact on defense and security. But imagining that it will fundamentally transform the world into some sort of new AGI regime sometime in Q4 2023, for China or the US, still seems like a fantasy to me. We in the West should definitely be concerned about whether China is going to catch up over the next decade. But basing our AI policies around fantasies doesn’t seem like a particularly good idea.

We should be super worried about how large language models, which are very difficult to steer, are changing our world, but the immediate transition to a singularity is one worry we can postpone.

None of this is to say, though, that we shouldn’t be worried about China. Anecdotally, I just had a very smart and perfectly fluent reporter there, knowledgeable about my research, email me 17 deep, thoughtful questions on both policy and technical matters that go way beyond what typical Western reporters ask, pushing hard to find out what lies behind the latest shiny things. That rarely happens in the US, where questions tend to be tied more strictly to the current news cycle. If China wins the AI war it will be partly because of data, partly because of choices about how to allocate resources, and partly because they ultimately do a better job educating their citizens and become the first to invent something new, and not because they scale up the exciting but rickety contraption that’s currently obsessing the West.

Gary Marcus (@garymarcus), scientist, bestselling author, and entrepreneur, is deeply, deeply concerned about current AI but really hoping that we might do better.

Watch for his new podcast, Humans versus Machines, debuting April 25th, wherever you get your podcasts.

"GPT’s regurgitate ideas; they don’t invent them"... That is all you need to know about current and future versions of these models.

Oh, and that incentives remain aligned for keeping the overhype of these technologies, so billions of dollars keep going into the field.

There's some bleak humor in the fact that people who have spent basically two decades trying to persuade everyone to overcome their own biases and make their reasoning capabilities more quantitatively rigorous do not see the huge potential for motivated reasoning and confirmation bias in the post-hoc narrative interpretation they're doing about interactions with a chatbot.