Hot take: GPT 4.5 is mostly a nothing burger. GPT 5 is still a fantasy.

• Scaling data and compute is not a physical law, and pretty much everything I have told you was true.

• All the bullshit about GPT-5 we listened to for the last couple years: not so true.

• AI influencers like Tyler Cowen will blame the users, but the results just aren’t what they had hoped for.

And by the way, Nvidia dropped 8.48% today, and is down 13% over the last 3 months.

§

Seems like it was only yesterday that I was predicting that GPT 4.5 would be a nothing burger and that the pure scaling of LLMs (adding more data and compute) was hitting a wall.

So now GPT 4.5 has dropped, and (breaking news) I was right. Hallucinations didn’t disappear today, and neither did stupid errors. Grok 3 didn’t fundamentally change things, and GPT 4.5, which was probably supposed to be GPT 5, didn’t either.

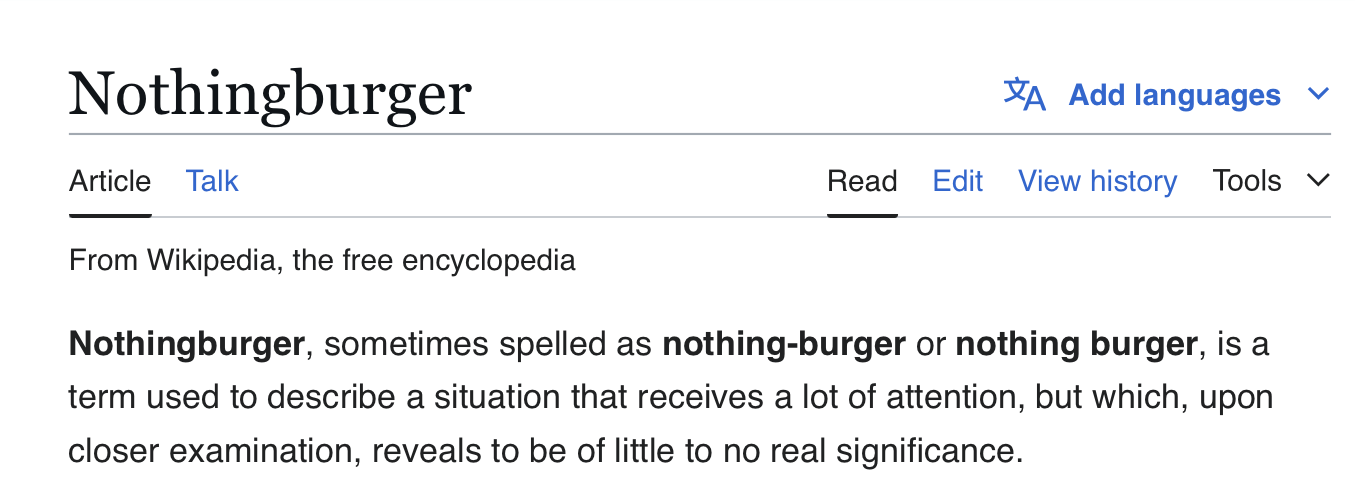

On some measures, it’s barely better than the last version of Claude:

And, for the first time I have ever seen, one respected AI forecaster was so underwhelmed that he pushed back his projection for when AGI would arrive:

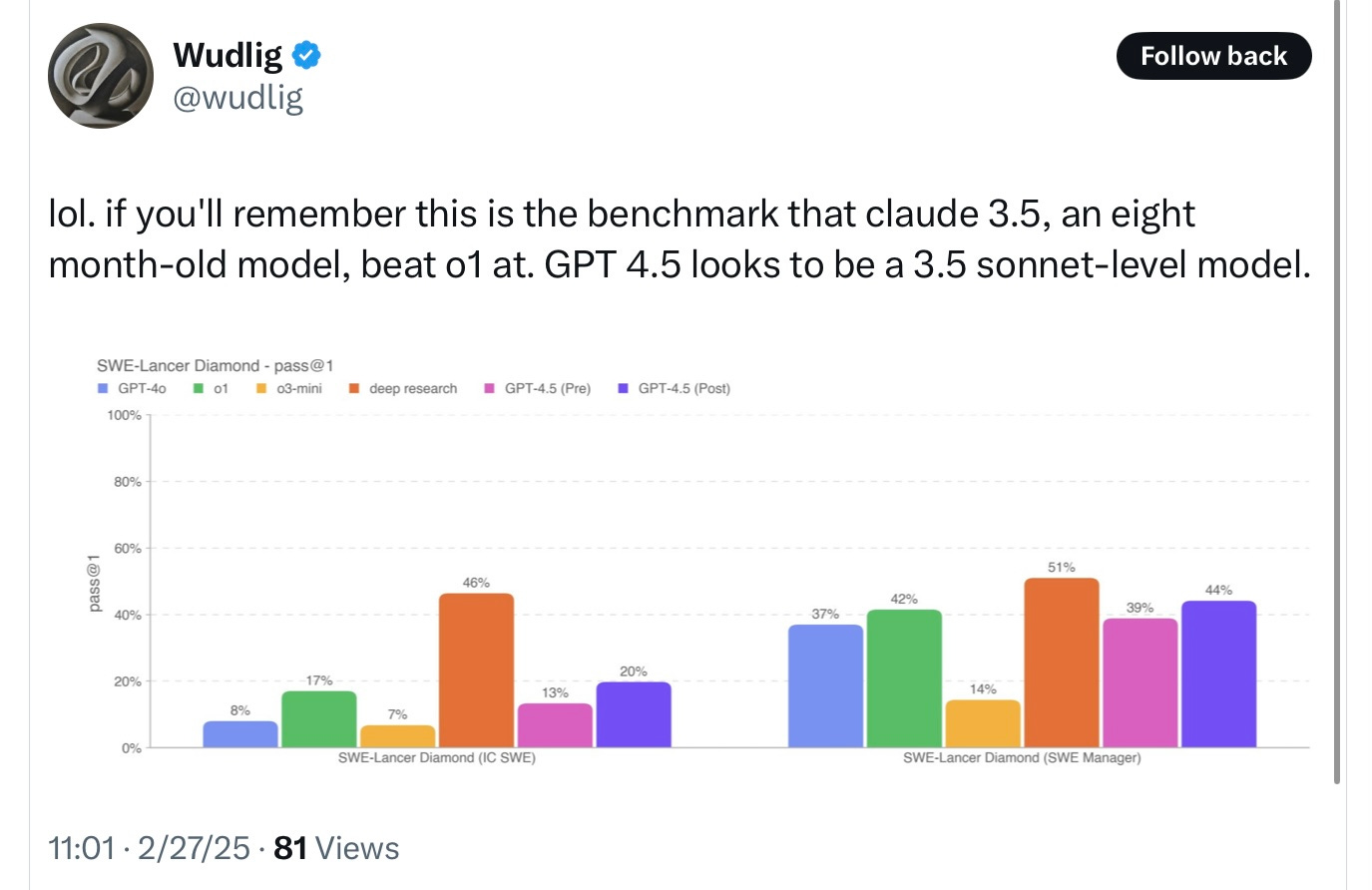

Even Altman himself was uncharacteristically tepid in his product announcement. In lieu of his usual AGI hype (“we now know how to get to AGI”, “AGI is gonna be wild”, etc.), Altman announced GPT-4.5 in a long and somewhat defensive tweet, acknowledging the expense of massively scaled models, without mentioning AGI at all:

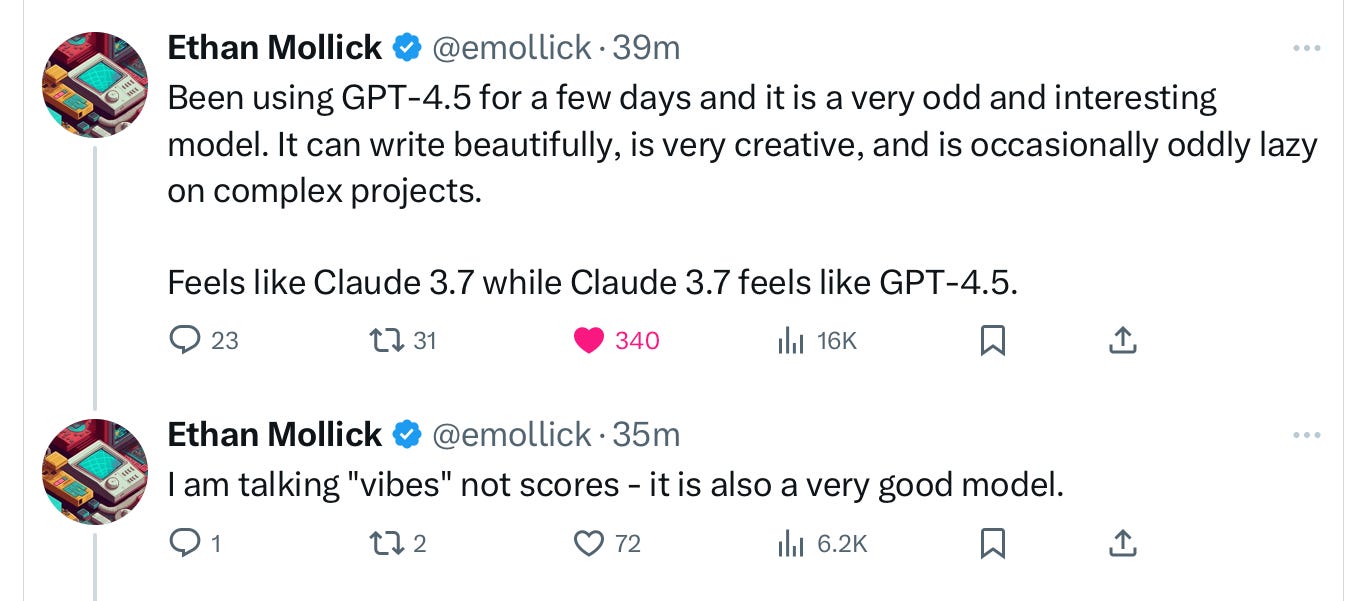

The ever-optimistic Ethan Mollick (who unlike me was given a sneak peek) was more restrained than usual, too, calling it odd and interesting and full of fine “vibes” without at all suggesting GPT 4.5 was transformational:

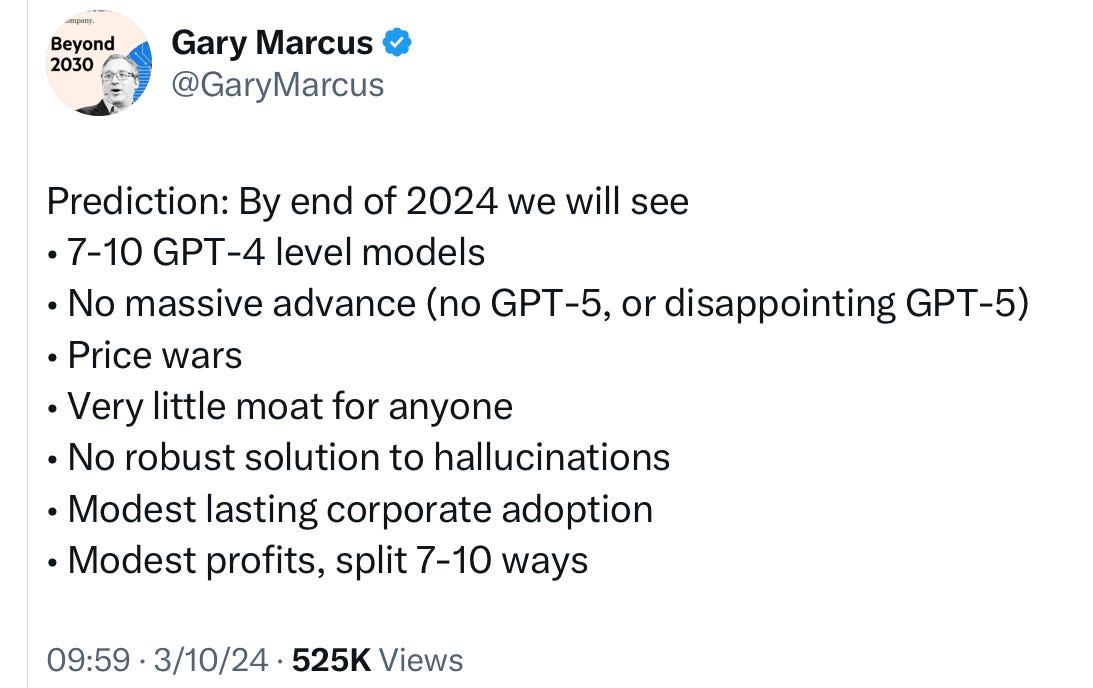

2024 Predictions continue to hold strong

What I said almost a year ago still holds true, especially when coupled with the equally underwhelming performance of the similarly massive Grok 3:

Half a trillion dollars later, there is still no viable business model, profits are modest at best for everyone except Nvidia and some consulting firms, there’s still basically no moat, and still no GPT-5. Any reasonable person with a scientific mind would say, “scaling was a hypothesis, we invested roughly 2x the Apollo Project in inflation-adjusted dollars in it, and we still don’t have that much to show for it.”

Or, as Ariana Grande might have said if she were in the AI business, “Thank U, Next / scaling wasn’t a match.”

Gary Marcus was right all along that pure scaling would eventually hit a wall, or at least reach a point of massively diminishing returns.

I love the cope here from Altman and Mollick. "It's a different kind of intelligence", "there's a magic to it I haven't felt before", "I am talking 'vibes' not scores".

Yes gentlemen, this is what bias feels like from the inside.

Funny how both Claude and ChatGPT are asking me to “save money” by paying up front instead of month by month….