Hot take on Google’s Gemini 3

Still no AGI, but it may nonetheless represent serious threats both to OpenAI and Nvidia

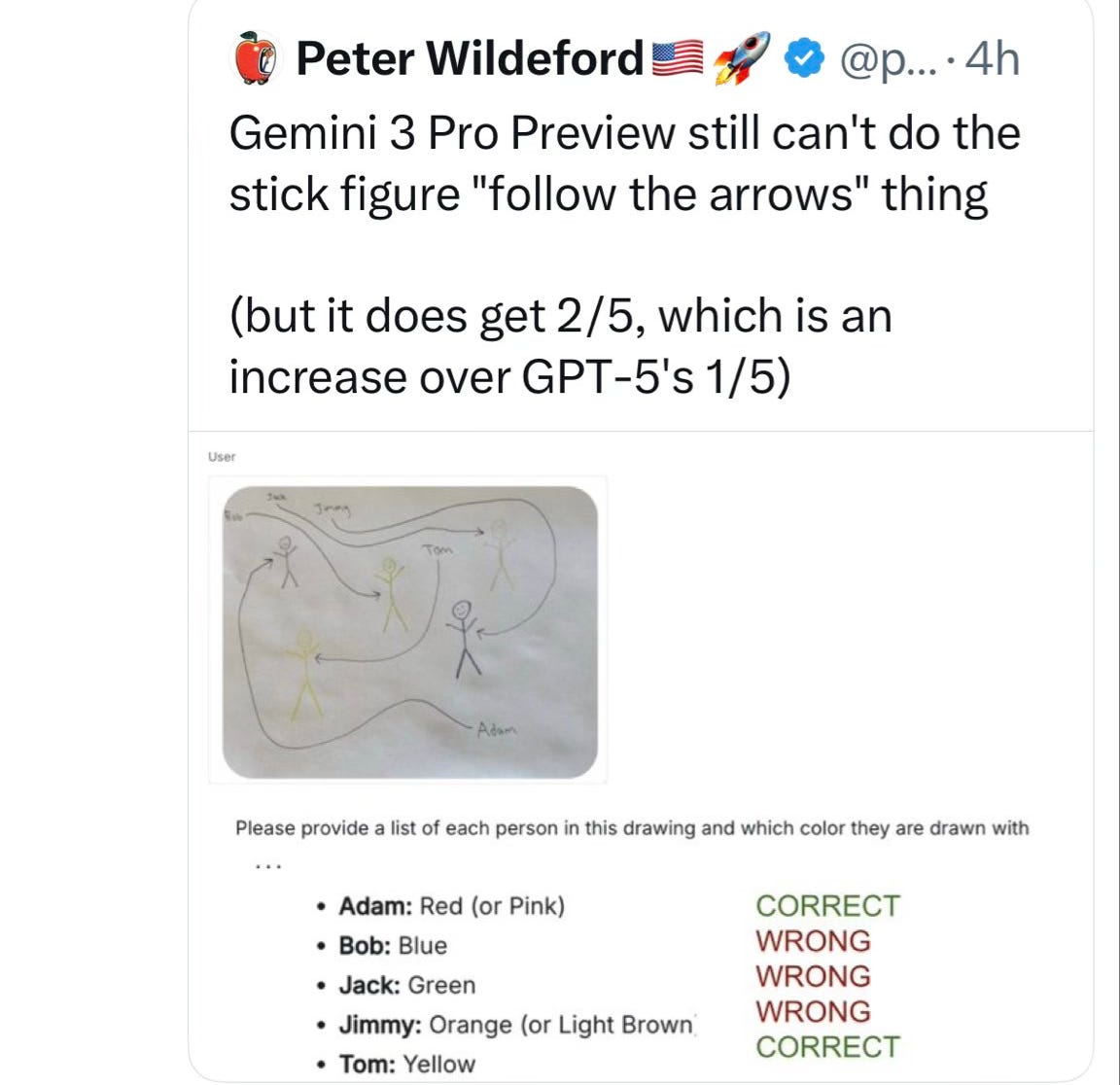

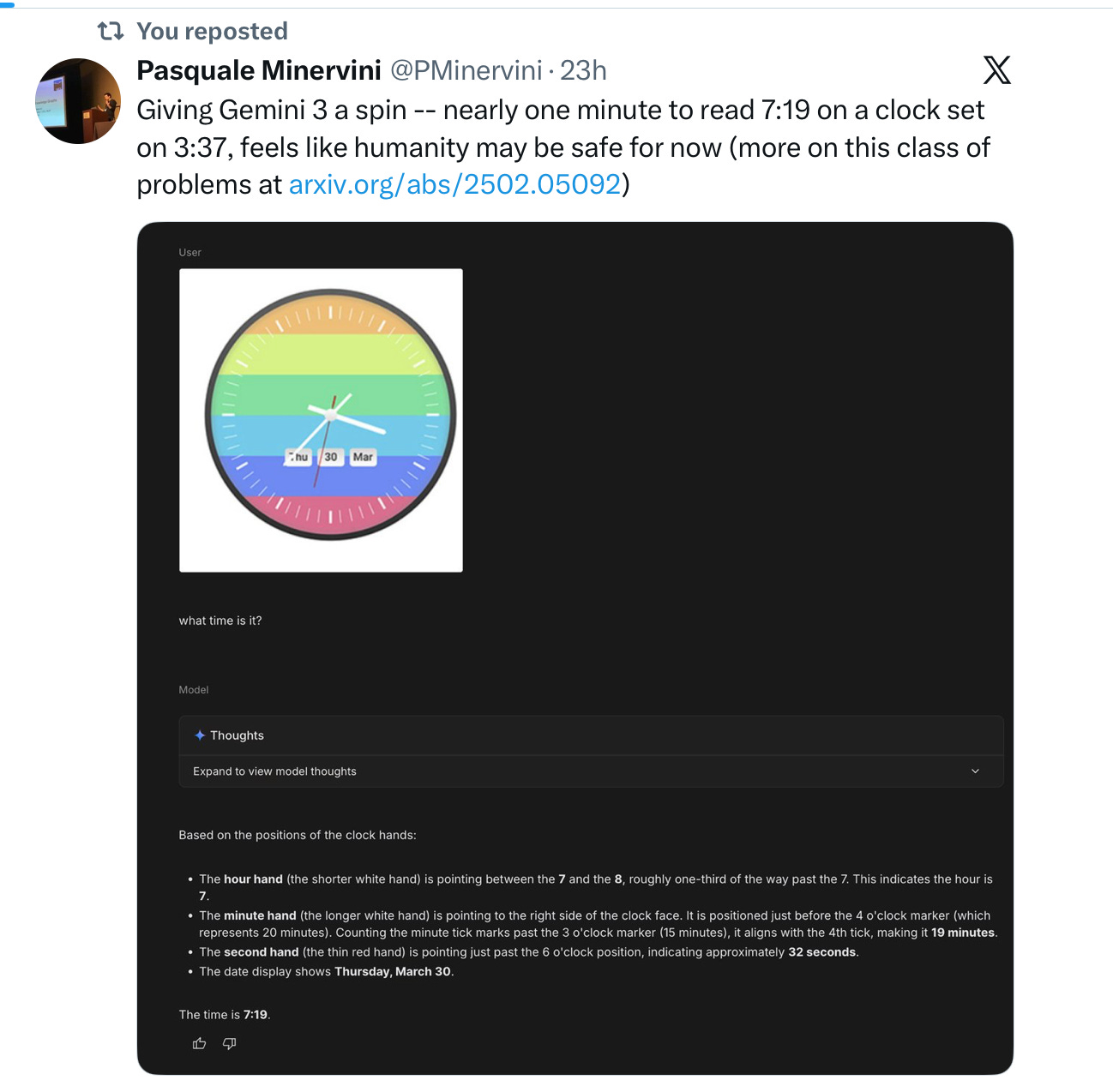

Yesterday Google announced Gemini 3, its big new state-of-the-art model, which on most measures is better than any other model out there. Still, the usual reports of silly errors and embarrassing results trickled out within hours.

No surprise there. Here’s my brief hot take:

It’s a great model, as far as LLMs go, topping most benchmarks, but it’s certainly not AGI. It’s haunted by the same kinds of problems that all earlier models have had. Hallucinations and unreliability persist. Visual and physical reasoning are still a mess.

In short, scaling isn’t getting us to AGI.

OpenAI has basically squandered the technical lead it once had; Google has caught up. What happens to OpenAI if Google undercuts OpenAI on price?

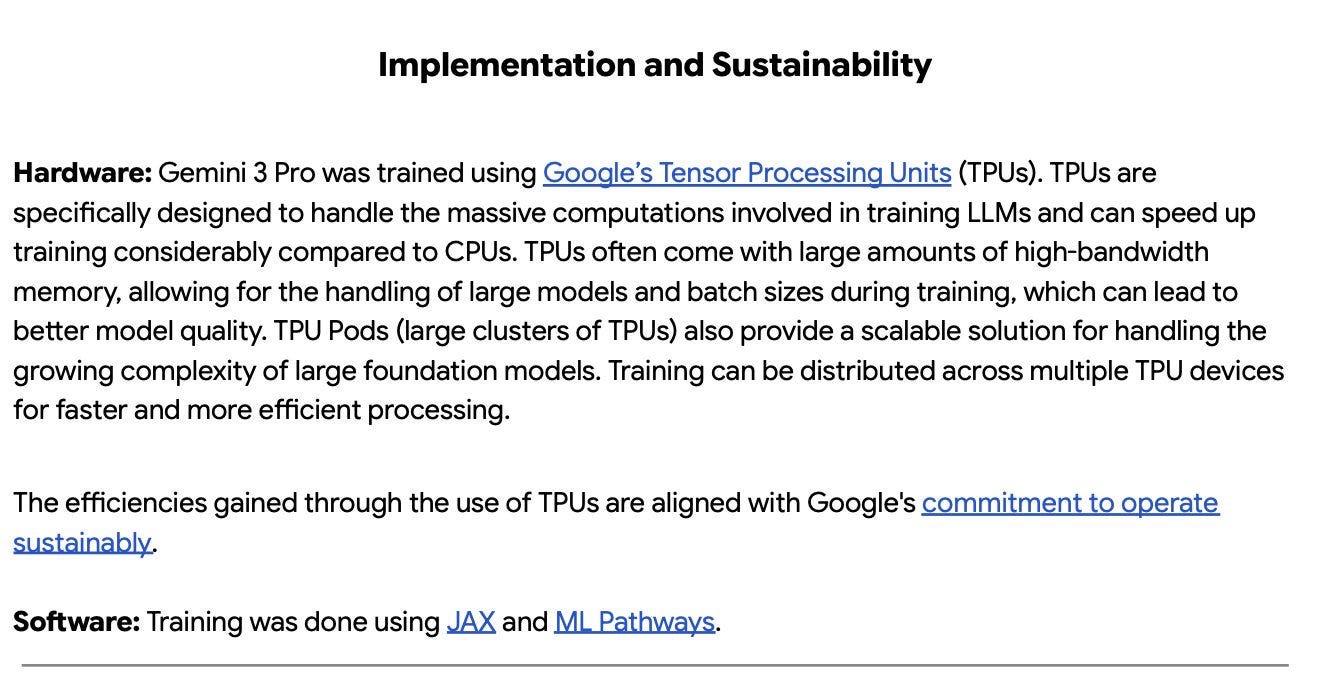

But the biggest news was buried in the methods: Google got better results than its competitors without using Nvidia GPUs, relying solely on its own TPUs.

If Google were to make those TPUs commercially available at scale and reasonable price, Nvidia’s dominance would end, price wars would begin, and compute would become a commodity. That would be huge.

Postscript on a separate topic: AI pioneer Jürgen Schmidhuber seconded and extended the concerns about Yann LeCun’s integrity that I expressed here yesterday.

The LLM race is reminiscent of the “race” between horses on a merry-go-round.

There is no solution without a solution to Feigenbaum's (and Minsky's) third problem. None of Hinton, LeCun, Pichai, or Altman proposes one.

It boggles the mind to think this problem could be solved by statistics. In fact, the entire AI debacle is due to not knowing how to solve it. No free lunch.

AI_Knowledge_Origins_175986... 1980

https://stacks.stanford.edu/file/druid:cn981xh0967/cn981xh0967.pdf

First is the problem of knowledge representation. How shall the knowledge of the field be represented as data structures in the memory of the computer, so that they can be conveniently accessed for problem-solving?

Second is the problem of knowledge utilization. How can this knowledge be used in problem solving? Essentially, this is the question of the design of the inference engine. What designs for the inference engine are available?

Third, and most important, is the question of knowledge acquisition. How is it possible to acquire the knowledge so important for problem-solving automatically or at least semi-automatically, in a way in which the computer facilitates the transfer of expertise from humans (from practitioners or from their texts or their data) to the symbolic data structures that constitute the knowledge representation in the machine?

Knowledge acquisition is a long-standing problem of Artificial Intelligence. For a long time it was cloaked under the word "learning". Now we are able to be more precise about the problem of machine learning; and with this increased precision has come a new term, "knowledge acquisition research".

This is the most important of the central problems of Artificial Intelligence research. The reason is simple: to enhance the performance of AI's programs, knowledge is power. The power does not reside in the inference procedure.

It was solved 25 years ago. National security was the reason for stealth. Epistemic disaster is the reason to build immutable knowledge.

http://intellisophic.net/2025/09/12/the-fundamental-innovation-orthogonal-corpus-indexing-oci/