Information pollution reaches new heights

AI is making shit up, and that made-up stuff is trending on X

Anyone who has been reading me for a while knows that I have been genuinely frightened for years by what generative AI might do to the information ecosphere. Among many other essays were my December 10, 2022 essay on AI’s Jurassic Park moment (“New systems like chatGPT are enormously entertaining, and even mind-boggling, but also unreliable, and potentially dangerous.”), an essay in The Atlantic (“Bad actors could seize on large language models to engineer falsehoods at unprecedented scale.”), and my warning that Google should worry about polluting its own well (“How sewers of lies could spell the end of web search”).

And of course Renee DiResta to her credit saw it all long before I did:

In the old days, though, we were just speculating.

Things have changed. And as the old joke goes, I’ve got some good news, and some bad news.

§

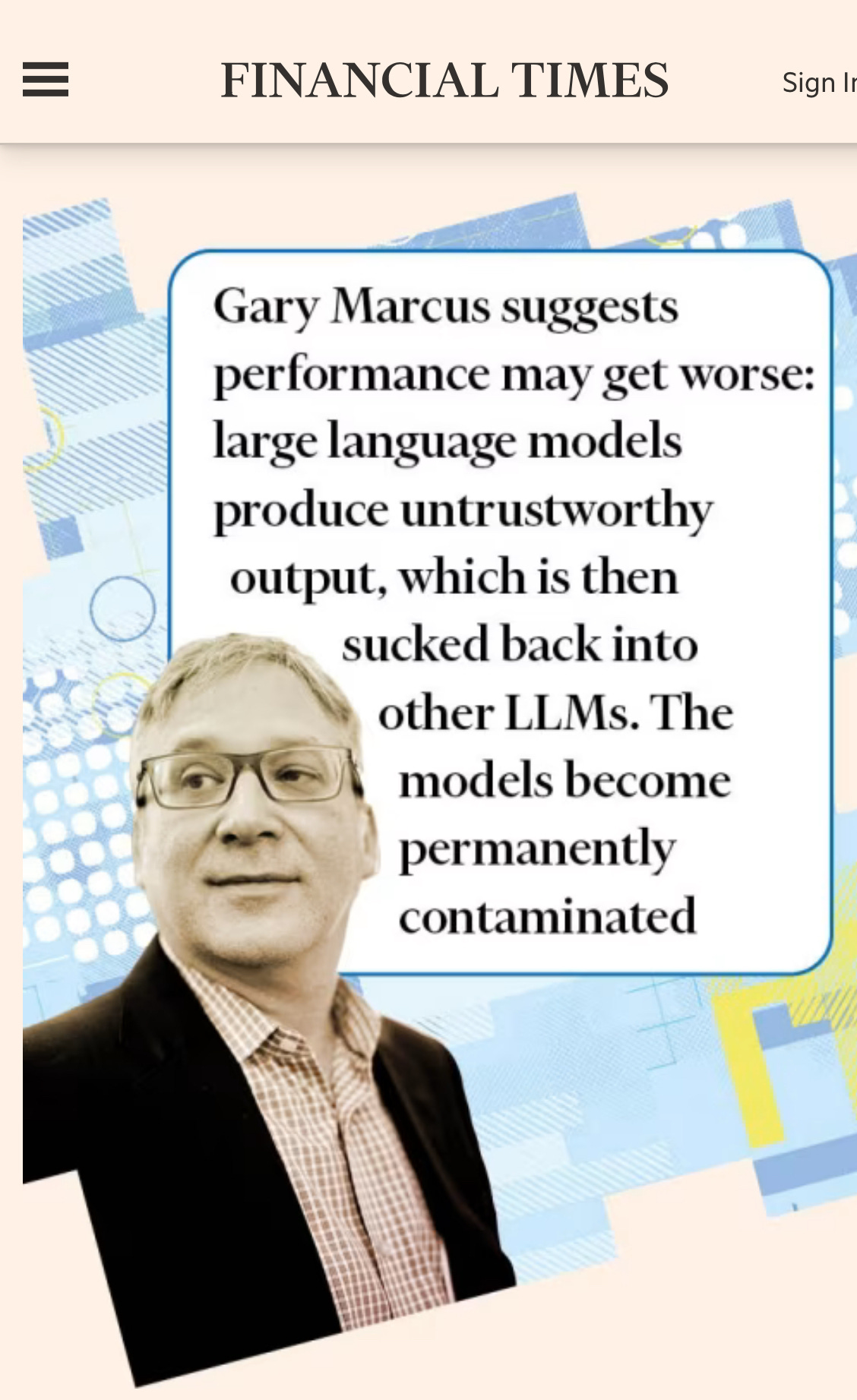

The good news is that word is finally getting out more broadly. I was totally chuffed, for example, to see a quote from me this morning in the FT, done up in fancy style. My mom will love it:

Glad someone is listening.

§

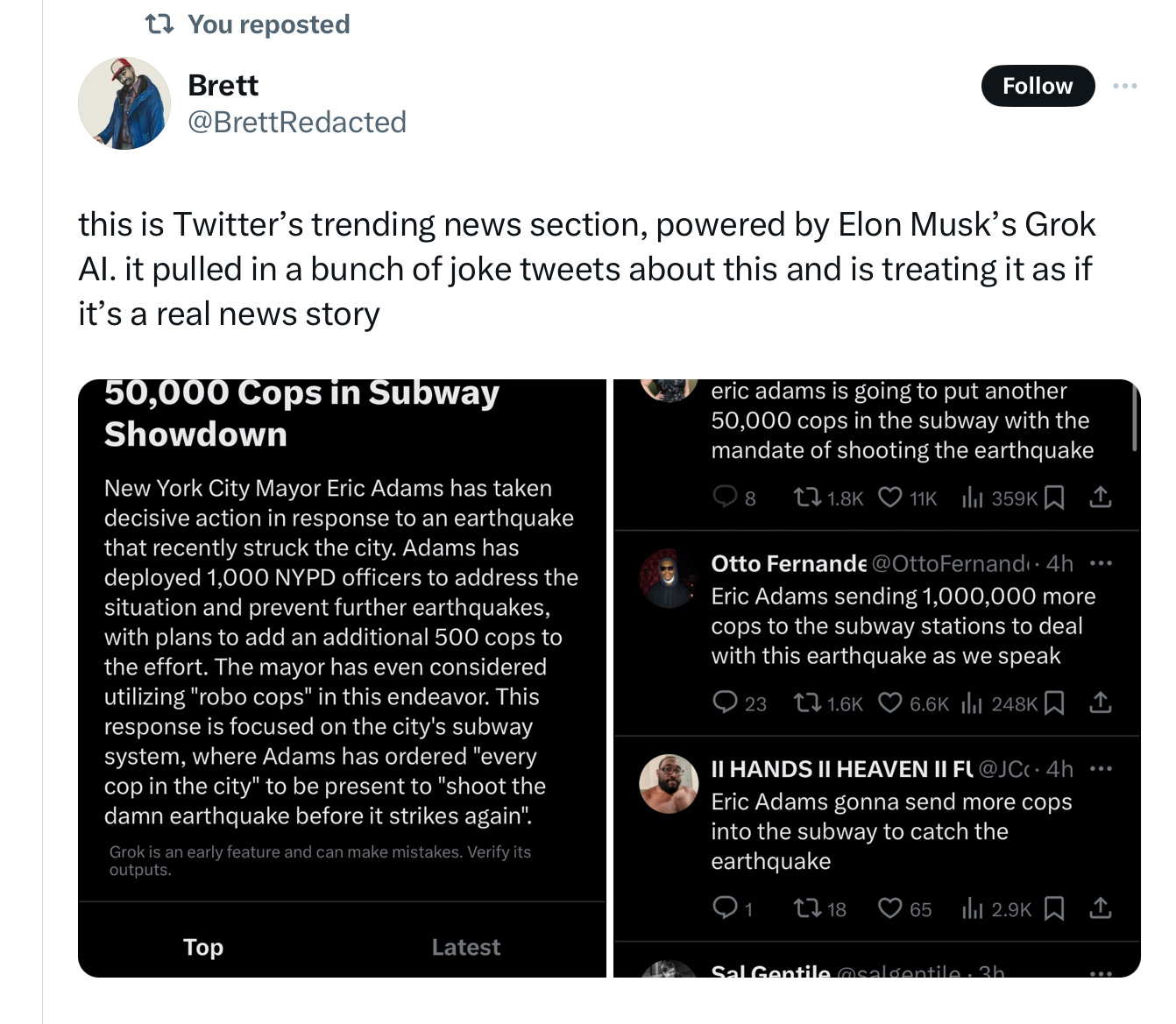

The bad news, which outweighs the good, is that the problem is quickly getting worse. Here’s an example from yesterday

and another from Thursday:

I am old enough to remember when Meta’s AI guru Yann LeCun said on Twitter (in late 2022) that AI-generated misinformation would never get traction. Clearly he was wrong.

§

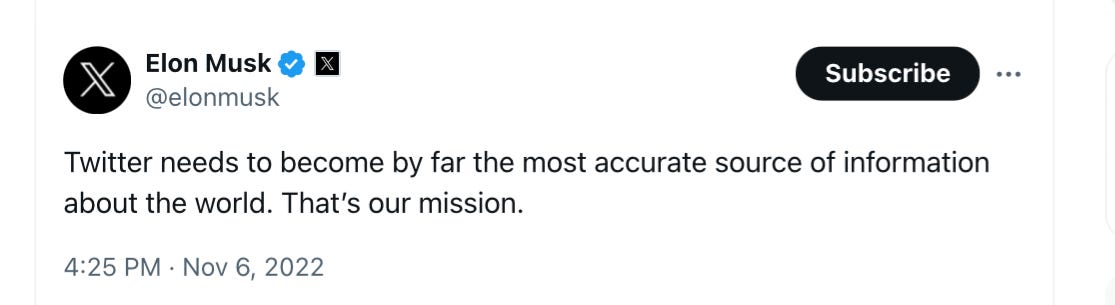

Shortly after Musk took over X (then Twitter), he claimed that he wanted Twitter to be the most trustworthy source of information on the web.

Ironic that his own chatbot, Grok, is making the problem worse. Meanwhile X itself is promoting nonsense.

§

I have said it before, and I will say it again. If we don’t get a handle on this problem, fast, nobody will believe anything.

Bluntly, we risk falling directly into Putin’s hands:

§

On Tuesday (or so) I have an essay coming out in Politico, about what the US Congress should do to make sure that we get to a good, rather than bad, place with AI. A bunch of the discussion is around transparency. One of the (many) suggestions, almost the least we could ask for, is that we should require all AI-generated content to be labeled. Mixing bogus chatbot stories in with the rest is a recipe for disaster, both for humans and for LLMs. It’s a downward spiral waiting to happen.

Senator Schumer, please take note. We need to move on this, fast.

Gary Marcus doesn’t know how to say it more clearly: fast, cheap, automated misinformation left unchecked threatens to undermine trust, and democracy itself.

Equally important, however, is that we need to do something that should always have been done for software but was not, because the benefits were deemed more valuable than the drawbacks: establish responsibility. If a faulty part in a car fails and causes a severe accident or the death of the driver or a passenger, the car company can be held liable even if it wasn’t aware of the problem in advance. Software companies have escaped this scrutiny and liability for various reasons. However, we are now reaching the modern point on that slippery slope, since these GenAI systems are in fact software. The companies racing to release what are clearly incomplete technologies should be held liable for any damage those technologies do. Yes, I realize their systems are unpredictable, but by the same token the systems should not be released until the companies find better ways of predicting how they come up with answers, how they go about creating narratives, etc. This notion of a free pass, with society left to deal with the fallout, is nuts. So many people are so caught up in the “idea” of the benefits, because they got an answer to a question in well-written English, that they ignore the core issues here. The net benefits do not outweigh the net drawbacks once we view this on a time scale longer than a month, and people need to understand that 😉

I find it difficult to believe that people who didn't see the need to regulate against Fox News or British newspapers propagating falsehoods will see the need to regulate against LLM services propagating falsehoods. The generations currently in charge (and I don't just mean politicians but also journalists, owners of news media, and millions of voters) are completely unfamiliar with seriously bad times and see everything as a game without real stakes, as about maximising clicks or getting one over on the other side ('liberal tears') rather than ensuring good material outcomes.

Things will have to get much worse, I fear, perhaps to the degree of a global economic crisis on the level of 1929 and another world war, before the insight is entrenched again for two or three generations that making sound decisions based on good information is important for our collective and individual welfare.