LLMs aren’t very bright. Why are so many people fooled?

How Generative AI preys on human cognitive vulnerability

For a long time I have been baffled about why so many people take LLMs so seriously, when it is obvious, from the boneheaded errors, hallucinations, and utter inability to fact-check their own work, that the cognitive capacities of LLMs are severely limited.

Overnight, two friends, Magda Borowik and Kobi Leins, sent me a fantastic analysis (from last year, as it happens) of this very phenomenon, by a software engineer/website designer/lucid author named Baldur Bjarnason, called “The LLMentalist Effect: how chat-based Large Language Models replicate the mechanisms of a psychic’s con.”

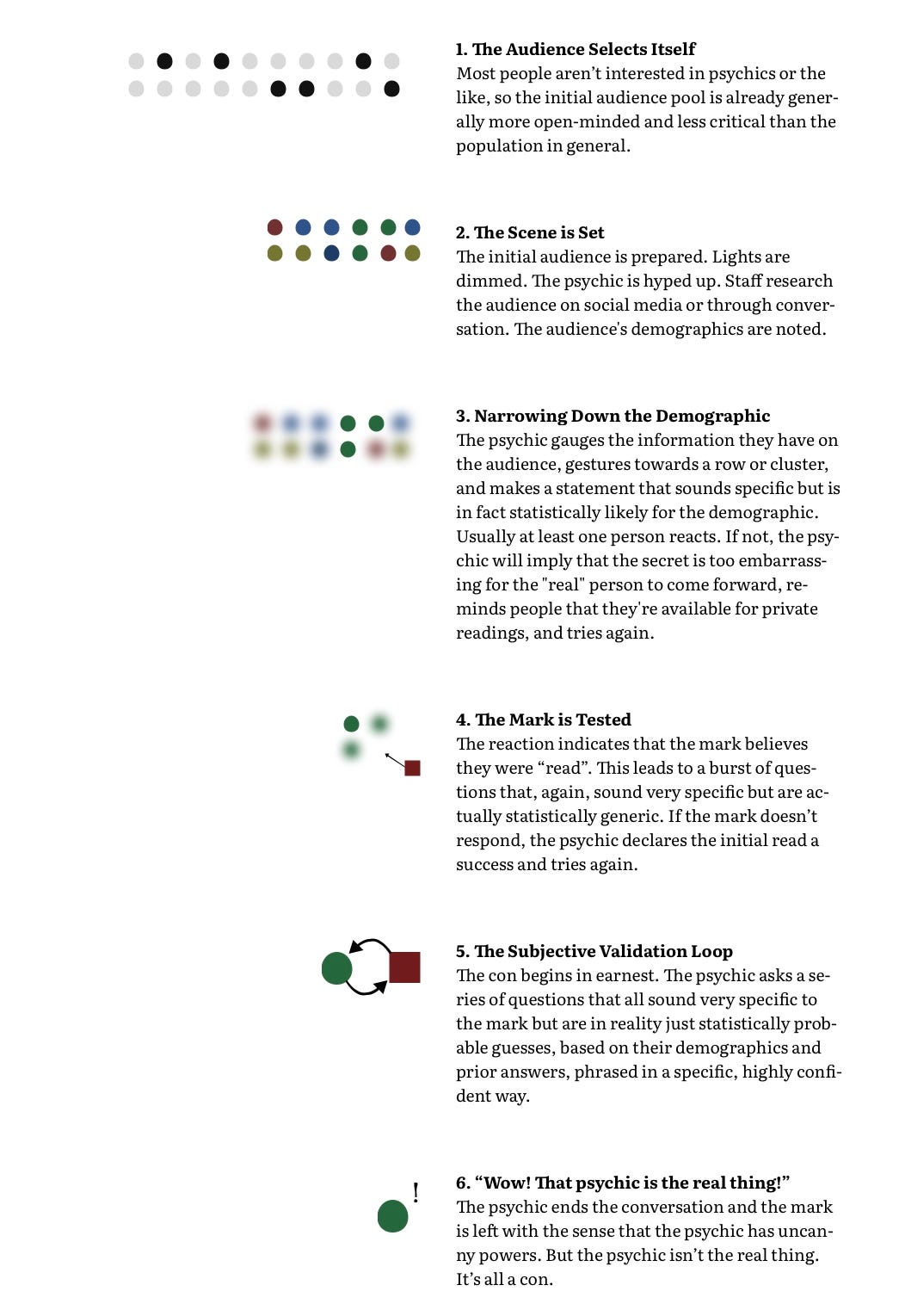

Bjarnason’s essay compares the methodology of mentalists (aka mind-readers: entertainers and con artists who pretend to read minds) with what happens in LLMs. The essence is distilled in a pair of summary tables.

The first, as he puts it, lays out “the psychic’s con”.

The second summary, deeply parallel with the first, outlines the process by which an unthinking LLM (without meaning to do so) cons many of its users. He calls it the LLMentalist Effect:

Both damning and correct. People who believe in LLMs have played themselves.

The whole thing is worth reading, not just for the elaboration of the above but also for the further parallels with the tech industry as a whole. Check it out.

Gary Marcus thinks the whole world would be different if so many people weren’t so easily taken by the parlor tricks of generative AI.

An amazingly damning analysis that rings so true to life.

Believers will never critically analyze their beliefs.

Oh, this one is really good. Beautiful.