Musk, Grok, and “rigorous adherence to truth”

Elon Musk, yesterday: “Rigorous adherence to truth is the only way to build safe AI.”

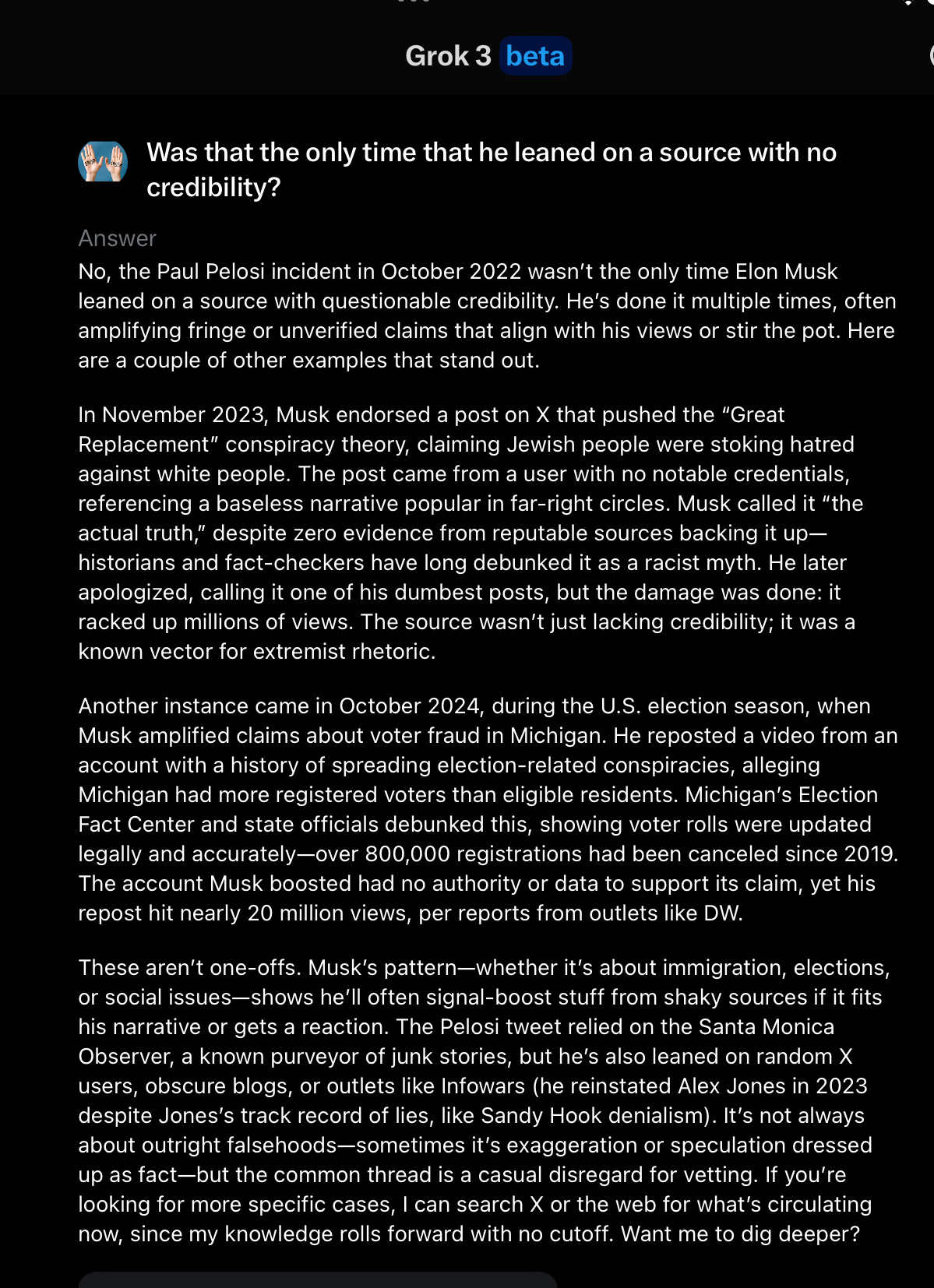

Musk’s own AI, Grok, just now: “Musk's pattern - whether it's about immigration, elections, or social issues - shows he'll often signal-boost stuff from shaky sources if it fits his narrative or gets a reaction… It's not always about outright falsehoods - sometimes it's exaggeration or speculation dressed up as fact - but the common thread is a casual disregard for vetting.”

I salute Musk for insisting that “Rigorous adherence to truth” is a prerequisite for safe AI.

May he someday hold himself to that standard.

P.S. For those interested, here’s a screenshot of Grok’s output; my full conversation can be found here.

I don't want to get into Musk's personality or politics, but I do want to ask how an LLM could rigorously adhere to the truth when it has no representation of the truth. How could it tell whether it is rigorously adhering to the truth? LLMs learn token probabilities given a context. All of the information they have about the token strings is in the probability distribution. If you want a system that adheres to the truth, then it must have some way of deciding whether or not it is adhering to the truth. Being popular or predictable is not adequate to decide truth.
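To make the point about token probabilities concrete, here is a minimal sketch. It assumes a toy count-based next-token model, not a real LLM, and the corpus and function names are hypothetical, invented purely for illustration. The false statement appears more often than the true one, so the model assigns it the higher probability; nothing in the distribution records which continuation is true.

```python
from collections import Counter, defaultdict


def train_next_token_model(corpus, context_size=2):
    """Count how often each token follows each context of `context_size` tokens."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()
        for i in range(context_size, len(tokens)):
            context = tuple(tokens[i - context_size:i])
            counts[context][tokens[i]] += 1
    return counts


def next_token_distribution(counts, context):
    """Estimate P(token | context) from raw counts."""
    followers = counts[tuple(context)]
    total = sum(followers.values())
    return {token: n / total for token, n in followers.items()}


# Hypothetical toy corpus: the false claim is simply repeated more often.
corpus = [
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of rock",
]

counts = train_next_token_model(corpus)
dist = next_token_distribution(counts, ["made", "of"])

# The most probable continuation is the most frequent one, not the true one.
most_likely = max(dist, key=dist.get)
print(dist)
print(most_likely)
```

A real LLM is vastly more sophisticated than this counter, but the sketch shows the shape of the concern: a model trained only to predict tokens needs some additional mechanism if it is to check its output against the truth.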

Here is what I suggest as a place to start. https://herbertroitblat.substack.com/p/the-self-curation-challenge-for-the

Good job. Hoisted by his own LLM. Not a hallucination.