“No one person should be trusted here.”

The irony of OpenAI’s unusual structure

§

Brilliant analysis this morning by Jeremy Kahn in Fortune.

If OpenAI winds up blowing up the world, it will merit endless rereading. Summarizing, mostly in Kahn’s own words:

“OpenAI’s structure was designed to enable OpenAI to raise the tens or even hundreds of billions of dollars it would need to succeed in its mission of building artificial general intelligence (AGI), the kind of AI that is as smart or smarter than people at most cognitive tasks, while at the same time preventing capitalist forces, and in particular a single big tech giant, from controlling AGI”

“Altman … struck the deal—for just $1 billion initially—with Nadella in 2019. From that moment on, the structure was basically a time bomb. By turning to a single corporate entity, Microsoft, for the majority of the cash and computing power OpenAI needed to achieve its mission, it was essentially handing control to Microsoft, even if that control wasn’t codified in any formal governance mechanism.”

If Altman returns and the board resigns as now seems likely, “it will prove that Altman’s structure failed—OpenAI was not able to both raise billions of dollars from a big tech corporation while somehow remaining free from that corporation’s control.”

“It would be the ultimate irony if the flaws of the very structure Altman designed wind up saving his job as CEO and allowing him to outmaneuver the board that he established to safeguard AGI”

§

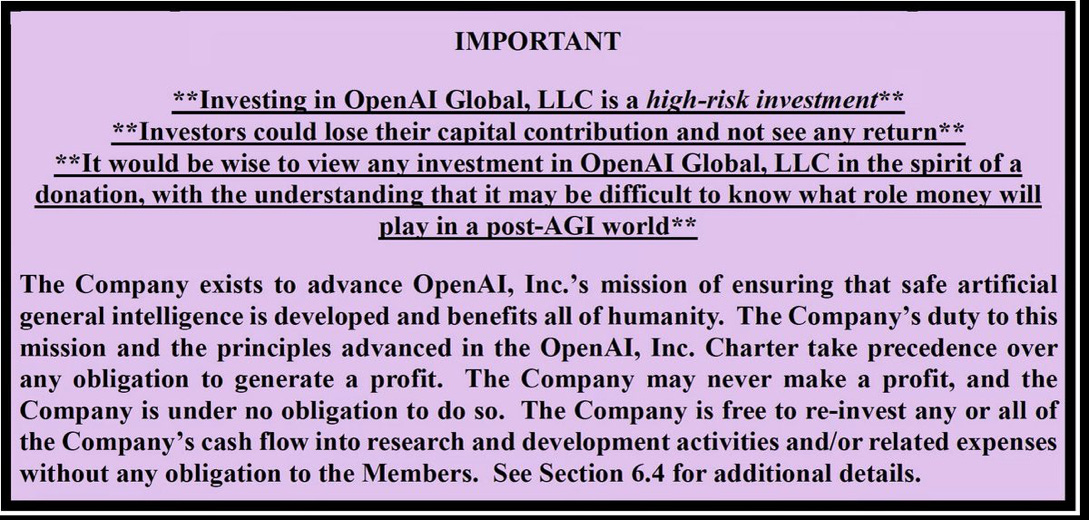

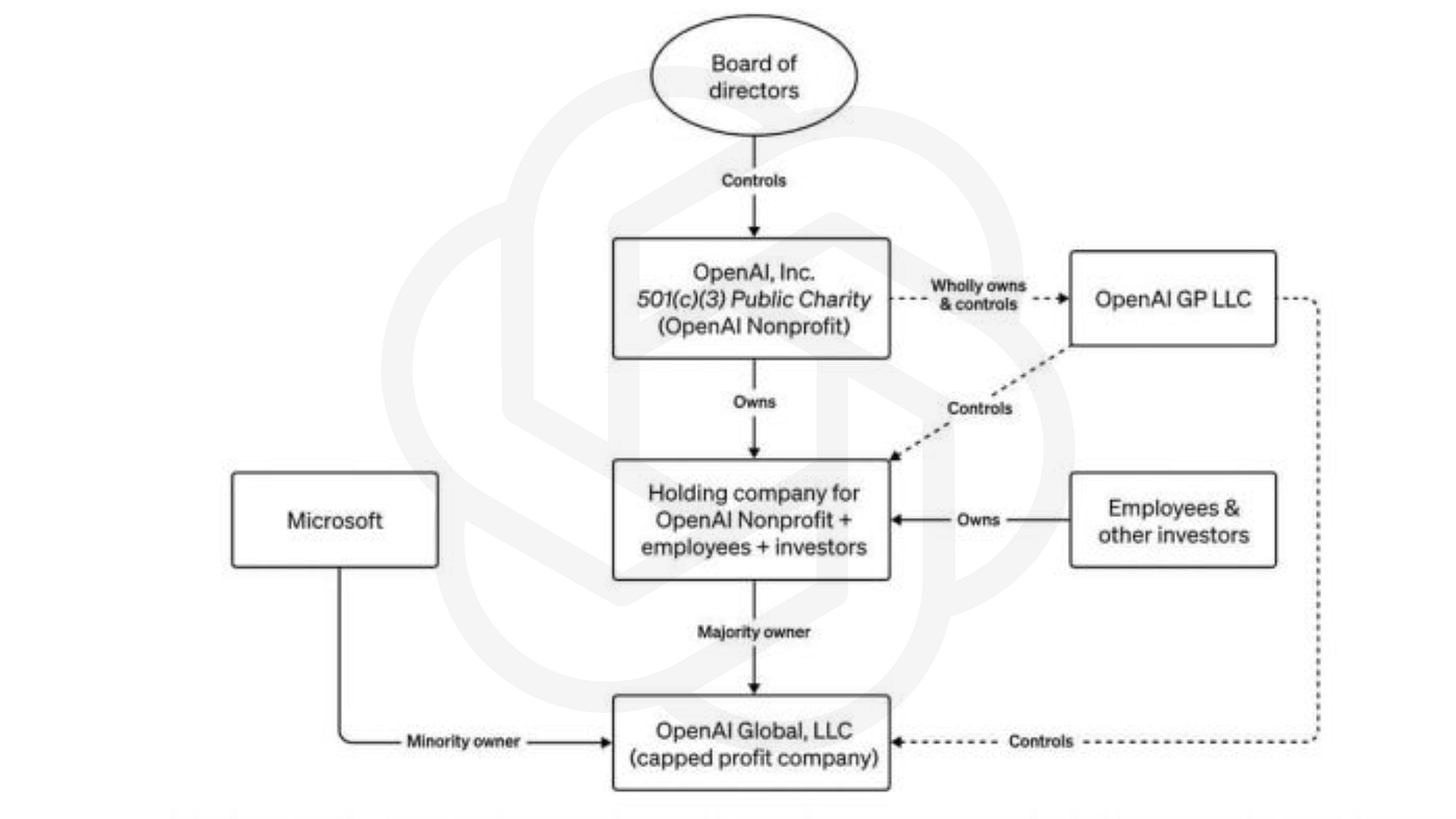

The spirit of the original arrangement was that everything the for-profit did was supposed to be in the service of the non-profit. A board with no financial interest was supposed to look out for humanity. According to a prospectus that was shared online, “The Company exists to advance OpenAI, Inc’s mission of ensuring that safe artificial general intelligence is developed and benefits all of humanity”.

Ostensibly the structure of the organization guarantees this:

In reality, things have gone quite differently. When the nonprofit Board felt it was prudent (for undisclosed reasons) to remove Altman, in keeping with the goals of the nonprofit it was trying to represent and at the risk of devastating the company's commercial potential, those with a stake in the nominally subordinate for-profit (both employees and investors) quickly set to work to push out the board and undo its decisions.

All signs are that those financially interested stakeholders will quickly emerge victorious. (Arguably, they already have.)

The tail thus appears to have wagged the dog—potentially imperiling the original mission, if there was any substance at all to the Board’s concerns.

If you think that OpenAI has a shot, eventually, at AGI, none of this bodes particularly well.

§

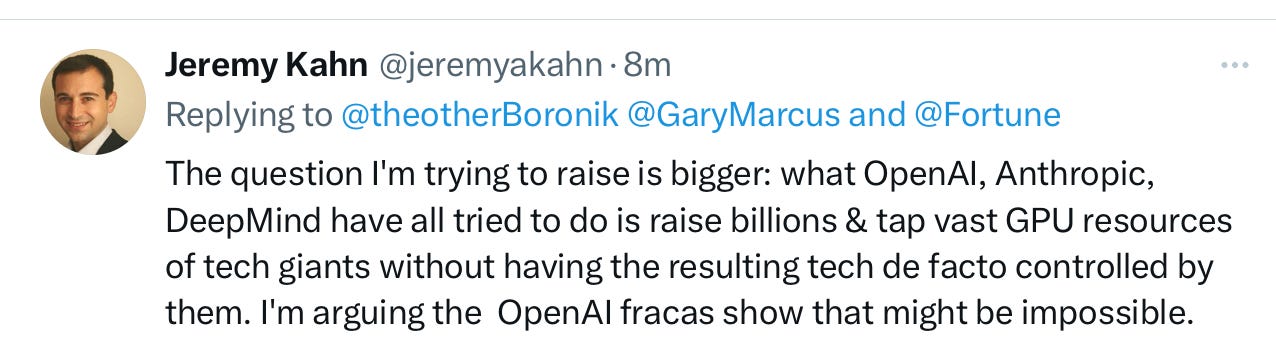

Let me close with a terrifying thought that Kahn posted on X just as I was wrapping this up:

Three orgs filled with brilliant minds tried to create AI independently of the tech giants, and all three have been subverted.

Gary Marcus feels sick to his stomach.

I realize that everyone is focused on corporate politics right now, but I have a few issues with this:

"OpenAI’s structure was designed to enable OpenAI to raise the tens or even hundreds of billions of dollars it would need to succeed in its mission of building artificial general intelligence (AGI), the kind of AI that is as smart or smarter than people at most cognitive tasks, while at the same time preventing capitalist forces, and in particular a single big tech giant, from controlling AGI"

I don't understand the logic of raising "tens or even hundreds of billions of dollars it would need to succeed in its mission of building artificial general intelligence (AGI)".

First, OpenAI has no clue how intelligence works. Heck, ChatGPT is the opposite of intelligence. It's an automated regurgitator of texts that were generated by the only intelligence in the system: millions of human beings that went through the process of existing and learning in the real world. They also had to learn how to speak, read and write, something that ChatGPT can never do.

Second, if one has no idea how intelligence works, how does one know that solving it will require tens of billions of dollars? A small spider with fewer than 100,000 neurons can spin a sophisticated web in the dark. How does OpenAI or anyone else propose to emulate the amazing intelligence of a spider with such a small brain? And if one has no idea how to do spider-level intelligence, how does one propose to achieve human-level intelligence?

I have other objections but these two will do for now.

Only really smart people could be dumb enough to think they could buck the golden rule: he who has the gold makes the rules. If you're completely dependent on your commercial partner for survival, it's the commercial partner who is in the driver's seat, no matter how "clever" a governance structure you set up.