Scaling is over, the bubble may be deflating, LLMs still can’t reason, and you can’t trust Sam

New evidence that confirms so much of what I have been saying

Some brief but important updates that very much support the themes of this newsletter:

“Model and data size scaling are over.” Confirming the core of what I foresaw in “Deep Learning is Hitting a Wall” 3 years ago, Andrei Burkov wrote today on X, “If today's disappointing release of Llama 4 tells us something, it's that even 30 trillion training tokens and 2 trillion parameters don't make your non-reasoning model better than smaller reasoning models. Model and data size scaling are over.”

“Occasional correct final answers provided by LLMs often result from pattern recognition or heuristic shortcuts rather than genuine mathematical reasoning.” A new study on math from Mahdavi et al. converges on similar conclusions, supporting what Davis and I wrote yesterday about LLMs struggling with mathematical reasoning: “Our study reveals that current LLMs fall significantly short of solving challenging Olympiad-level problems and frequently fail to distinguish correct mathematical reasoning from clearly flawed solutions. We also found that occasional correct final answers provided by LLMs often result from pattern recognition or heuristic shortcuts rather than genuine mathematical reasoning. These findings underscore the substantial gap between LLM performance and human expertise…”

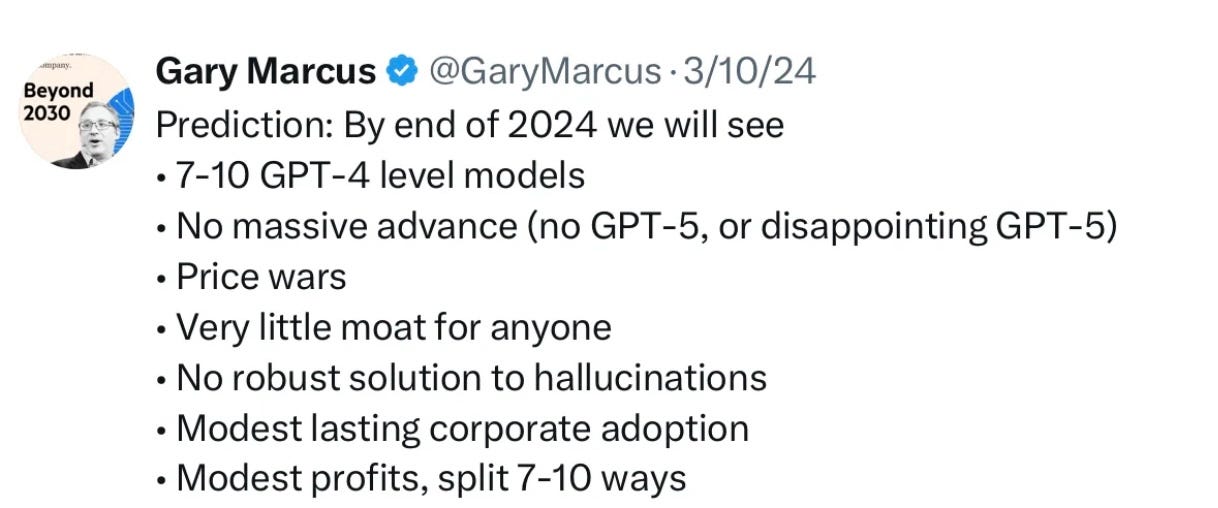

Generative AI may indeed be turning out to be a dud, financially, and the bubble might finally be deflating. Nvidia is down by a third so far in 2025, far more than the stock market itself. Meta’s woes with Llama 4 further confirm my March 2024 predictions: that getting to a GPT-5 level would be hard, and that we would wind up with many companies with similar models, essentially no moat, a price war, and profits that are modest at best. That is indeed exactly where we are.

Sam Altman does in fact appear to have been fired in 2023 for lying, exactly as I speculated at the time in my essay “not consistently candid”. (Not a popular suggestion back then; hordes of people on X opposed it, and you may recall that Kara Swisher blocked me for it.) Check out “The Secrets and Misdirection Behind Sam Altman’s Firing From OpenAI,” the WSJ excerpt from Keach Hagey’s new book, which makes this very clear.

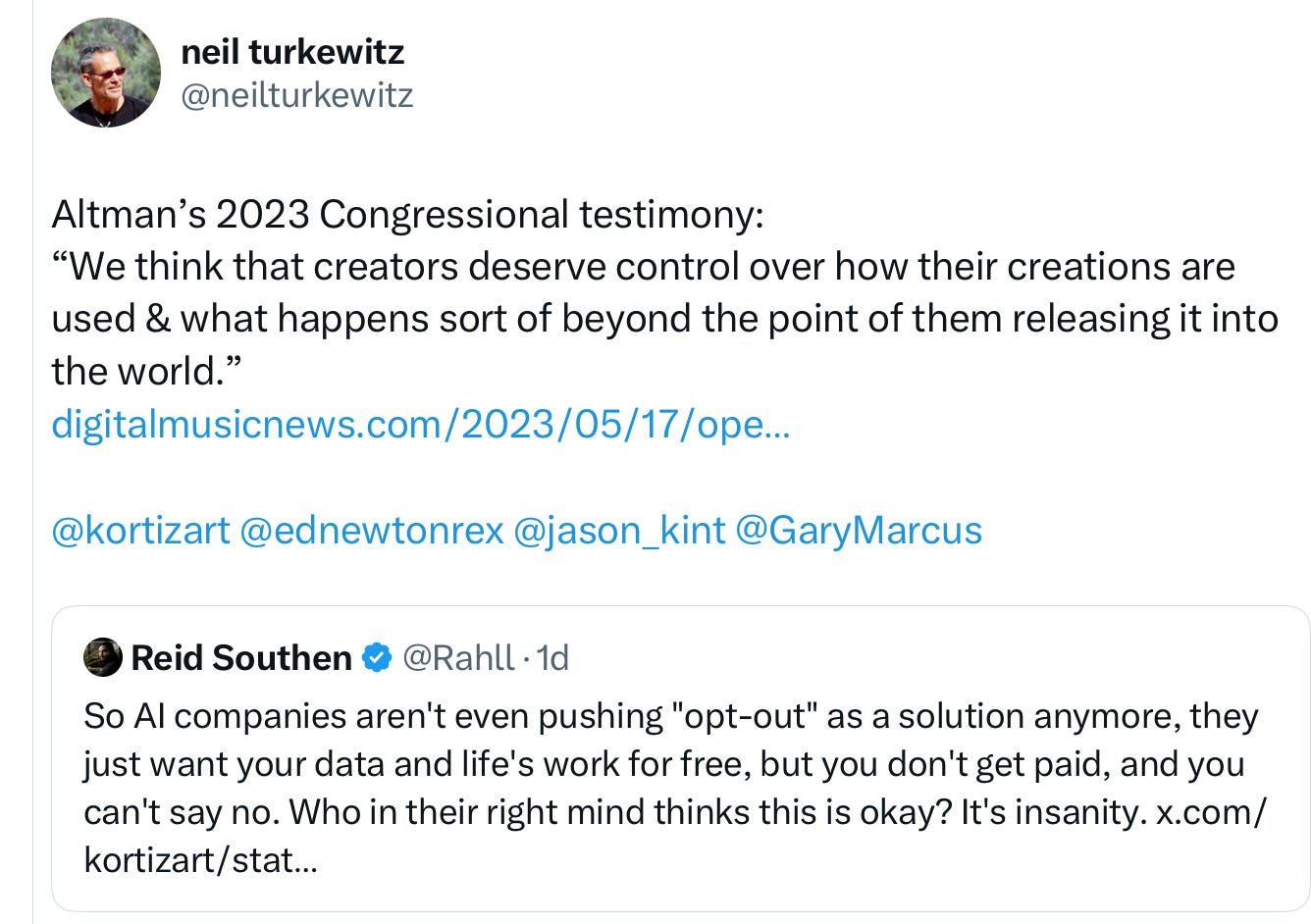

Sam Altman has continued to walk back important stuff he said when I sat next to him in the Senate:

Speaking of Sam Altman’s never-to-be-trusted commitments, wasn’t OpenAI supposed to be a nonprofit? Consider signing this open letter (I did): https://ab501.com/letter.

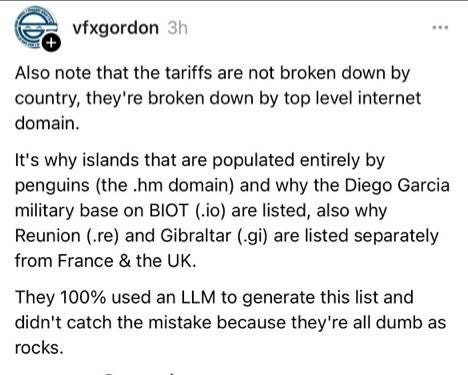

LLMs and tariffs. Remember how a few days ago I said the tariff plan was probably partly written by an LLM? Wondering why the penguins on small islands got hit with tariffs?

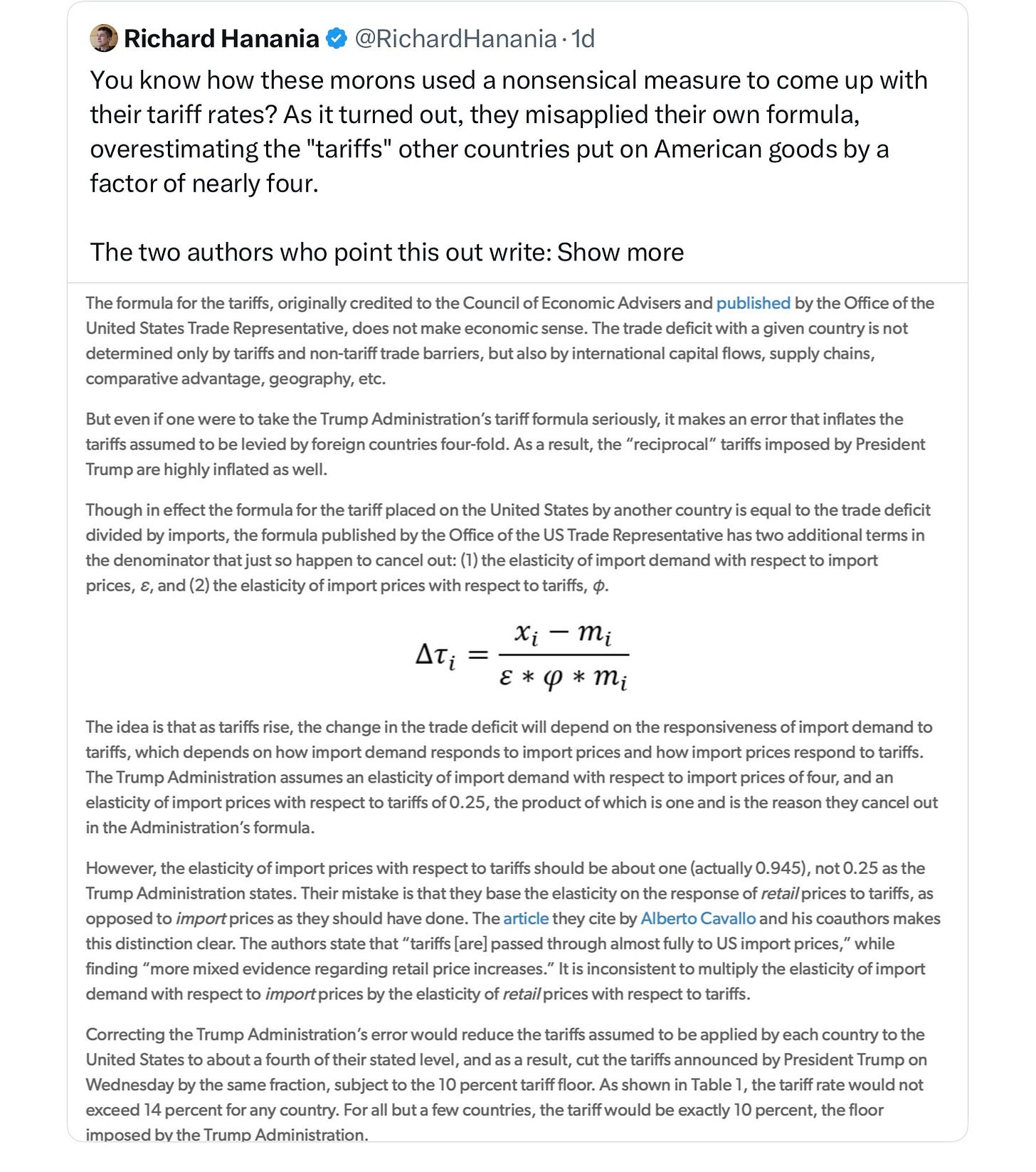

Speaking of “dumb as rocks” (and see my recent essay on Trump’s apparent cognitive decline), Trump’s crew devastated the global economy based in part on what they got from an LLM, and they didn’t even do their math right:

§

There you have it, folks. America is (for now, still) a free country. In an age of near-zero accountability, pundits like Tyler Cowen are free to go around shouting (without a shred of evidence) that I am “always wrong”, but reality very much appears to be otherwise.

Scaling has run out (like I said it would); models still don’t reason reliably (as anticipated in What to Expect When You’re Expecting GPT-4); the financial bubble may be bursting; there still ain’t no GPT-5; Sam Altman can’t be trusted; an overreliance on unreliable LLMs (another longstanding theme here and in Taming Silicon Valley) has indeed gotten the world into deep doodoo. Every one of my 25 predictions for 2025 still seems to be on track.

LLMs are not the way. We definitely need something better.

Gary Marcus is looking for serious investors who would be interested in developing alternative, more reliable approaches.