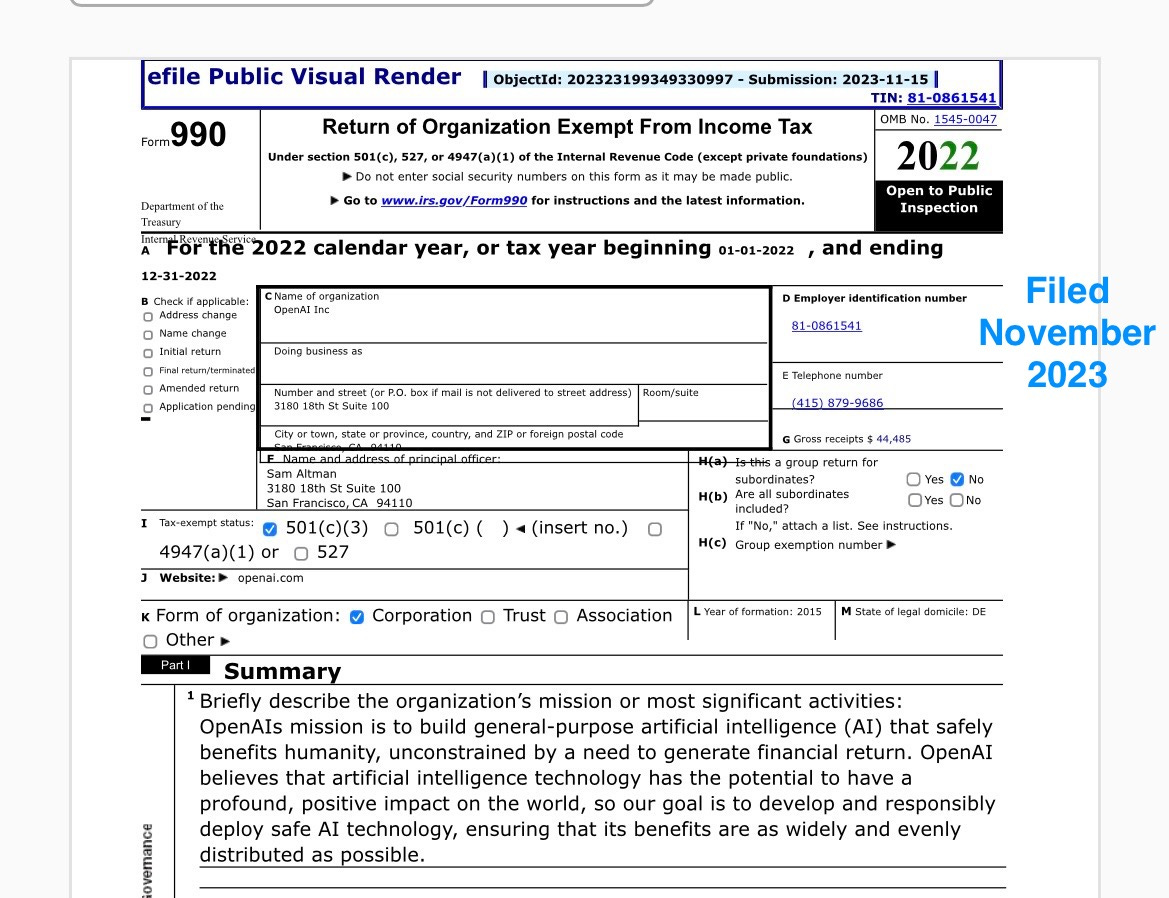

As recently as November 2023, OpenAI promised, in their filing as a nonprofit exempt from income tax, to make AI that “benefits humanity … unconstrained by a need to generate financial return”.

The first step towards that should be a question about products: are the products we are making benefiting humanity?

Instead, they appear to be focused precisely on financial return, and appear almost indifferent to some of the ways in which their products have already hurt large numbers of people (artists, writers, voiceover actors, etc.).

Furthermore, OpenAI apparently hasn’t even fulfilled their own promise to devote 20% of their resources to AI safety. (And many of their safety-focused researchers just left, perhaps not coincidentally.)

By comparison, Google DeepMind devotes much of its energy to projects like AlphaFold that have clear potential to help humanity. I don’t see OpenAI doing much of that.

If you want to know which team I am rooting for, and why, there you have it.

§

As I just wrote on X, the real issue isn’t whether OpenAI would win in court; it’s what happens to all of us if a company with a track record of cutting ethical corners winds up first to AGI.

Gary Marcus hopes that the most ethical company wins. And that we don’t leave our collective future entirely to self-regulation.

I haven't noticed much in the way of ethical behaviour from Google; rather the reverse if anything. Their AI project may currently be less bad than OpenAI, but I expect that it will regress to the Google norm in time.

They are hanging themselves with their own words!! Impressive investigation, Gary. I tip my hat. Much respect. I hope this decreases the haze that these companies seem to cause in people. #beautiful

- This needs to be everywhere