Scientists, please don’t let your chatbots grow up to be co-authors

Five reasons why including ChatGPT in your list of authors is a bad idea

Things have gotten out of control. Take this tweet:

It’s smug, it’s arrogant, it’s a sign of a world to come. And, oh, by the way, it’s … wrong.

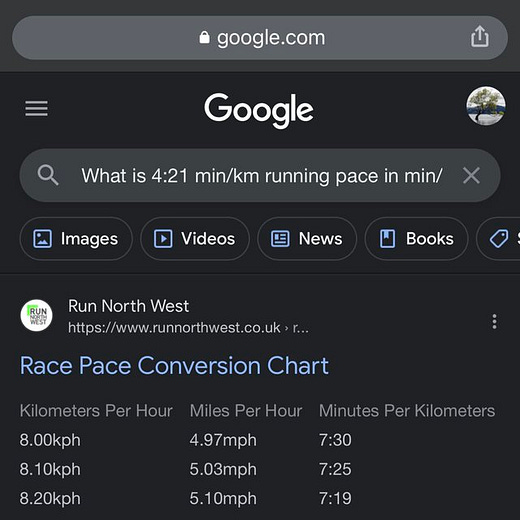

ChatGPT hasn’t done the conversion correctly (answer below), but the will to believe in this great new tool, full of precision (down to the second!) but lacking in reliability, is so strong that the author didn’t bother to check. And that’s the problem. Statistical word prediction is no substitute for real math, but more than a few people have assumed that it is. (As it happens, a 4-minute-21-second kilometer works out to a 7-minute mile; if you ask Google nicely, it will actually give you the right answer.)
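For anyone who wants to check the arithmetic rather than trust a chatbot, here is a minimal Python sketch of the conversion. It assumes the international mile of exactly 1.609344 km, and the function name `km_pace_to_mile_pace` is purely illustrative. A 4:21 kilometer is 261 seconds; multiplying by 1.609344 gives about 420.04 seconds, which is almost exactly 7:00 per mile.

```python
# Minimal check of the pace conversion discussed above.
# Assumption: one international mile is exactly 1.609344 km.
KM_PER_MILE = 1.609344


def km_pace_to_mile_pace(minutes: int, seconds: int) -> tuple[int, float]:
    """Convert a pace per kilometer into a pace per mile.

    Returns (whole minutes, remaining seconds) per mile.
    """
    seconds_per_km = minutes * 60 + seconds
    # Covering a mile takes 1.609344 times as long as covering a kilometer.
    seconds_per_mile = seconds_per_km * KM_PER_MILE
    whole_minutes = int(seconds_per_mile // 60)
    remaining_seconds = seconds_per_mile - whole_minutes * 60
    return whole_minutes, remaining_seconds


mins, secs = km_pace_to_mile_pace(4, 21)
print(f"4:21 per km is about {mins}:{secs:04.1f} per mile")
```

Running it prints a pace of 7:00.0 per mile; the leftover is a few hundredths of a second, well below anything a runner's watch would show.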

The worst thing about ChatGPT’s close-but-no-cigar answer is not that it’s wrong. It’s that it seems so convincing. So convincing that it didn’t even occur to the Tweeter to doubt it.

We are going to see a lot of this: misuses of ChatGPT in which people trust it, and even trumpet it, when it’s flat-out wrong.

§

Wanna know what’s worse than hype? People are starting to treat ChatGPT as if it were a bona fide, well-credentialed scientific collaborator.

Yesterday’s trend was hyping ChatGPT’s alleged Google-killing abilities. Today’s trend is listing ChatGPT as a co-author.

According to Semantic Scholar, 6-week-old ChatGPT already has two publications, 12 co-authors (all of whom agreed to this prank) and one citation.

I sincerely hope this trend won’t last.

Here are five reasons why:

1. ChatGPT is a tool, not a person. At best, it’s more like a spell-checker, a grammar-checker, or a stats package than it is like a scientist. It is not contributing genuine ideas, nor coming up with carefully controlled experimental designs, nor summing up prior literature in thoughtful ways. You wouldn’t make your spell-checker or your spreadsheet a co-author. (What you might do is cite, in your list of references, a special-purpose resource that was important for your work, such as the SPM software package for brain-imaging analysis; you wouldn’t make SPM a co-author.)

2. Co-authorship is defined differently in different fields, but it generally means making a substantive scientific contribution. ChatGPT simply cannot reason well enough to do this; as I have documented in previous essays, it has demonstrably misunderstood physics, biology, psychology, medicine, and so forth. Given ChatGPT’s current level of scientific understanding, assigning authorship to it is disrespectful to the scientific process itself.

3. ChatGPT has proven itself to be both unreliable and untruthful. It makes boneheaded arithmetical errors, invents fake biographical details, bungles word problems, defines non-existent scientific phenomena, stumbles over unit conversions, and on and on. If you don’t know that, you are not up on the AI literature; if you do know it and list ChatGPT as a co-author anyway, you show that you just don’t care, which is even worse. Declaring it a co-author says you are more interested in being on trend than in being on target.

4. You wouldn’t trust a calculator that is right only 75% of the time. If you blatantly advertise that you are excited about “writing” your paper with a tool that has a shoddy track record, years before it has matured, why should I trust you?

5. Scientific writing is in part about imparting truth clearly to others. If you treat ChatGPT as a sentient being when it clearly is not, you mislead the public into thinking it is sentient; rather than communicating good science, you are hyping bullshit. Just stop.

Friends don’t let friends co-author with ChatGPT.

