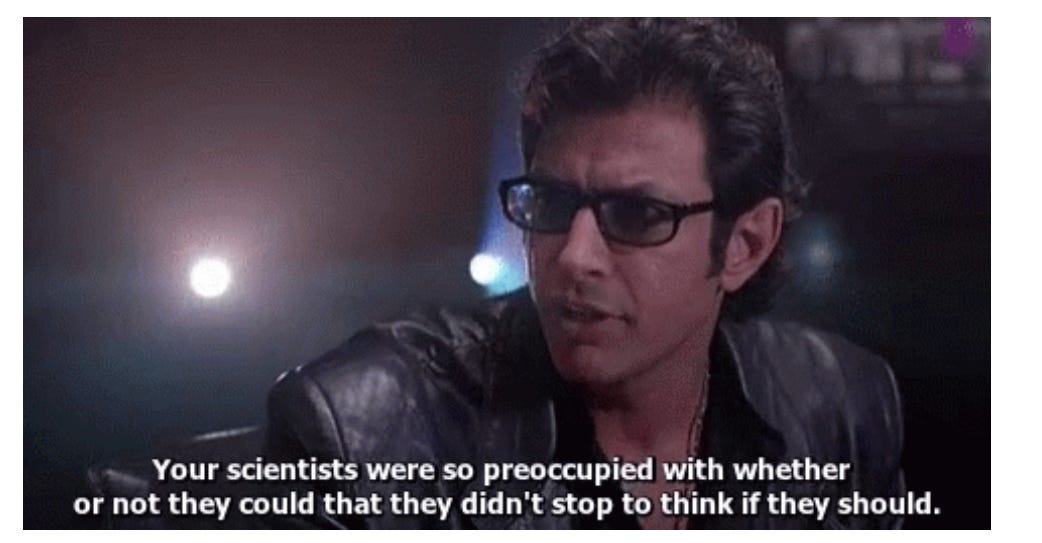

Sentient AI: For the love of Darwin, let’s Stop to Think if We Should

A very short post on why a new paper is making me nervous

If I could make one change to Rebooting AI, the 2019 book I co-wrote with Ernie Davis, it might well be to boldface this passage:

AI, like any technology, is subject to the risk of unintended consequences, quite possibly more so, and the wider we open Pandora’s box, the more risk we assume. We see few risks in the current regime, but fewer reasons to tempt fate by blithely assuming that anything that we might invent can be dealt with.

My reason for mentioning this now is that an all-star cast of philosophers and AI researchers has just posted a paper on arXiv that not only considers the criteria for building conscious machines, but also provides “some tentative sketches” for how to build one.

Honestly, is that a good idea?

We can’t even control LLMs. Do we really want to open another, perhaps even riskier box?

We all know what Michael Crichton would be writing about today.

Gary Marcus doesn’t, contrary to a common belief, hate AI; he just wants a world in which AI is net positive. Is that too much to ask?

I'm not worried about a conscious AI because, regardless of the many claims, I don't think anyone knows what consciousness is. I am, however, very worried about an AGI falling into the wrong hands. An AGI will behave according to its conditioning, i.e., its motivations. It will not want to do anything outside its given motivations. And its motivations will be conditioned into it by its trainers and teachers.

What bugs me about the paper: all the indicators of consciousness could be implemented in some silly toy world made of a 2D matrix of integers, and yet nobody would dare hypothesize that such a simple computer program is conscious.

That seems to further support the idea that complexity and/or substrate are key. Perhaps the heuristic of "if it's really smart and self-aware, it's probably conscious" is sufficient for preventing suffering.