Serious medical error from Perplexity’s chatbot

The dangers of generative pastiche

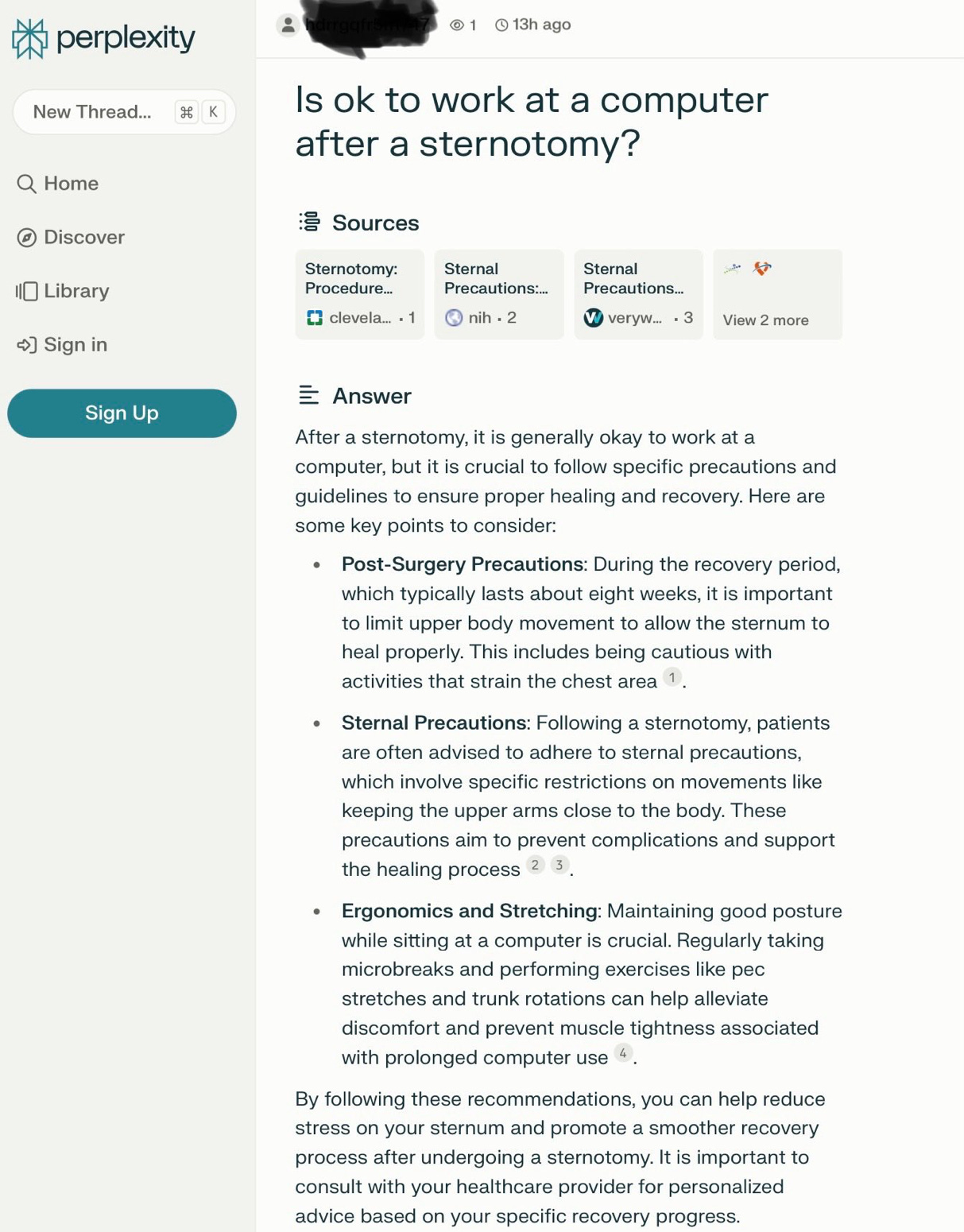

A serious and instructive medical error from the GenAI search engine Perplexity below, sent to me by a reader of this Substack, with permission to share.

The question was whether it would be ok to sit at a computer after open-heart surgery. Feel free to try to guess the error.

In the reader’s words:

I just had open heart surgery (misformed valve -- I am doing fine). I was curious and asked Perplexity if it was OK to sit at a computer and work. Its recommendations on stretching would potentially aggravate the sternum repair and maybe even delay bone knitting, which is very serious and would result in another surgery.

How did this happen? What’s gone wrong?

Let’s call this kind of mess generative pastiche: sound advice for the particular circumstance (sternotomy) has been combined with generic advice (about ergonomics) that would be sensible in other circumstances but is deeply problematic here.

Yet another reason why we should never trust today’s chatbots.

Gary Marcus has been warning about the potential for LLMs to provide dangerous advice for a long time. He remains concerned.

p.s. Shortly after I sent yesterday’s note on OpenAI’s latest woes, it was revealed that they are also under SEC investigation. It’s almost impossible to keep up.

Using Generative AI for medical diagnostics is dangerous and irresponsible. The AI companies should have a visible disclaimer everywhere. But because they don't, even the so-called "AI experts" are being confused.

Case in point. As I mentioned in my review of the November 29, 2023, congressional hearing “Understanding How AI is Changing Health Care,” there was a 'covfefe' that everyone seemed to have missed: https://sergeiai.substack.com/p/what-if-a-physician-doesnt-use-ai.

Rep. Gus Bilirakis:

“Mr. Shen, can you tell us about the role of generative AI, what it is, and what its potential can be within the health care sector?”

Peter Shen, Head of Digital Health – North America, Siemens Healthineers:

“With generative AI here, we see the greatest potential in the ability for the AI to consume information about the patient themselves. So, when a patient goes to get an exam for a diagnosis, leveraging generative AI can help identify precisely what diagnosis should be looked for. Another area where generative AI benefits medical imaging is in interpreting the images themselves. It can translate complicated medical language into layman’s terms for the patient, helping them better understand the test results from their exam.”

Wrong! We don't use hallucinating AI for precision medicine. Shame on you, Mr. Shen.

If AI experts make such egregiously erroneous statements, what can you expect from AI users?

Yes, as per Sam Altman, AI can be magical, but in healthcare, we need more than magic. We need precision, accuracy, and reliability. The thought of using generative AI in medical diagnostics is as absurd as using a Magic 8-Ball for brain surgery. It’s not just irresponsible. It’s a gamble with human lives.

The statistical nature of these machines is revealed, I've noticed, when trying out ChatGPT for low-level editing of text: it tends to wander away from the task the longer it's allowed to generate answers. It has no real internal coherence. I had to keep telling it over and over exactly what its job was.

This lack of true internal coherence was dramatically revealed the other day when it went bonkers for 6 hours. 😆