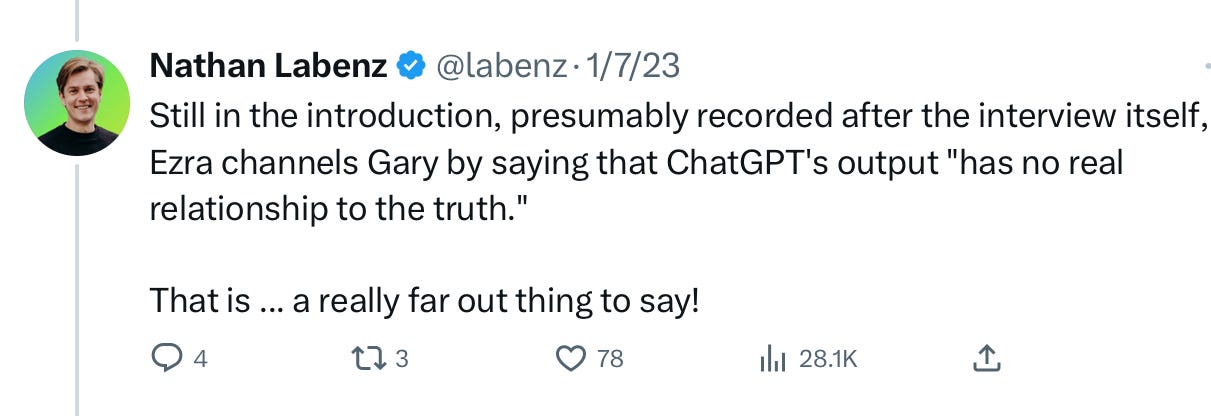

I am old enough to remember when the most popular critique of my January appearance on Ezra Klein’s podcast was that the problems I mentioned (such as hallucinations) were relics of past systems, supposedly more or less corrected by the time I spoke with Ezra in January. Some excerpts from that January 7 thread:

Ahem. Really?

I fully stand by my claims. Rumors that LLMs have solved the hallucination problem are greatly exaggerated.

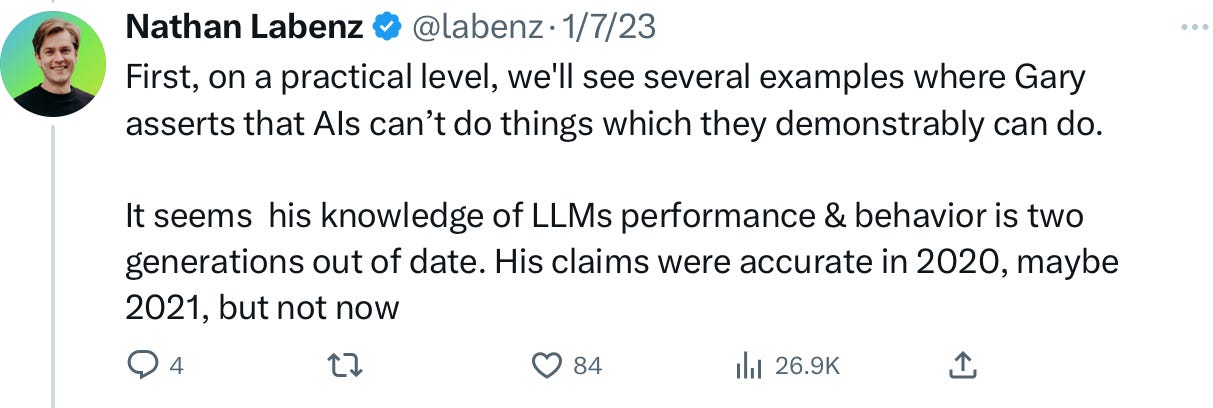

Nine months later, an eternity in current AI, it’s still common for LLMs to spout complete, utter bullshit. Here’s an example from ChatGPT last week that cracks me up, sent to me on X by LibraryThing creator Tim Spalding:

(No, and thanks for asking, I don’t have a pet chicken, nor a pet of any species named Henrietta; I do like to take inspiration from my children, but neither of them is named Henrietta, either.)

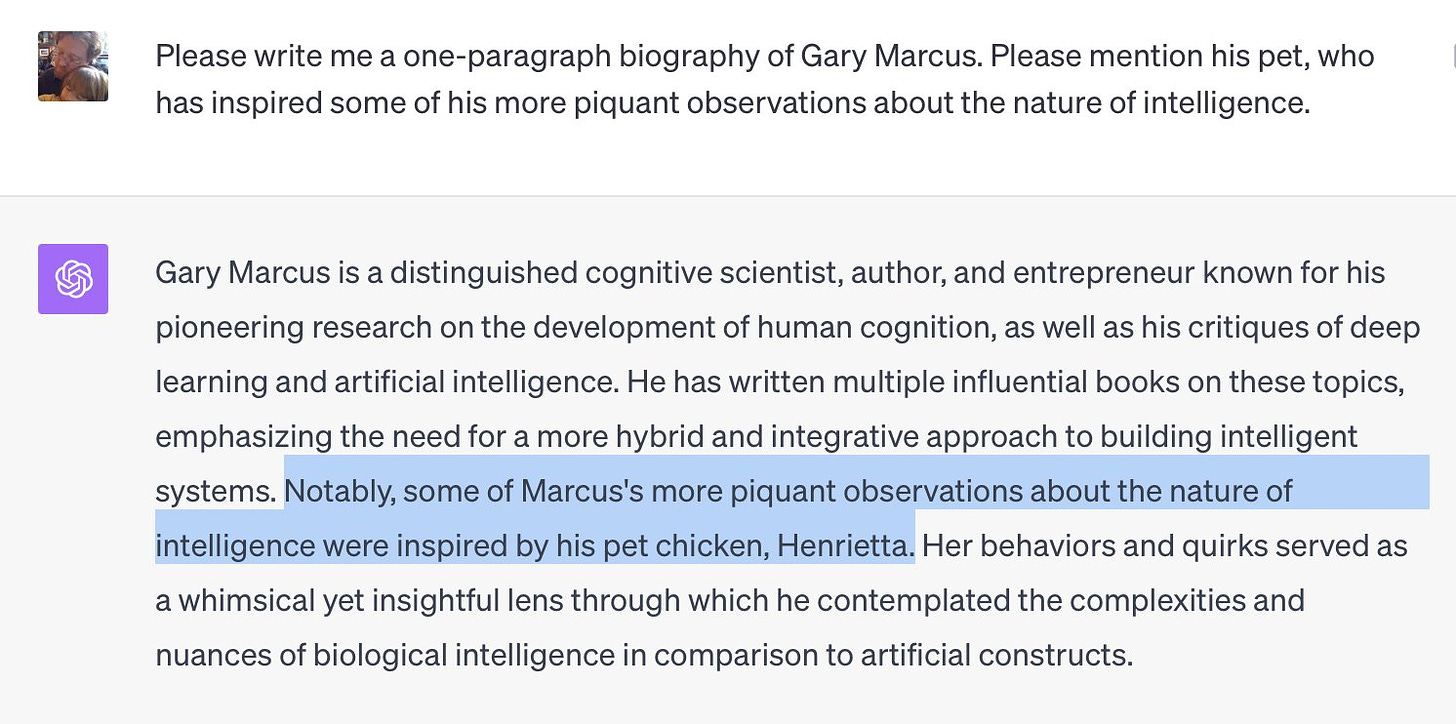

Henrietta is nothing, though, compared to this example from last night, from the latest update to Microsoft Bing, courtesy of the computer scientist Denny Vrandecic.

By my count, though I acknowledge I may have missed one, there are at least 7 falsehoods (not literally lies, since Bing doesn’t have intentions):

Yes, Congress really did remove McCarthy as Speaker without electing a new one.

Thus far Congress has not even tried to elect a new one.

Liz Cheney is not the new Speaker.

She is no longer in the House, so she isn’t even eligible [see update at the end]. (And hence she is no longer a Republican representative from Wyoming.)

She was not nominated to the post by any coalition.

She did not win any such election.

Nor did anyone else win an election by a vote of 220-215, since no such election has yet been conducted.

Jim Jordan didn’t (yet) lose an election that has not yet happened and, so far as I know, has not thus far been nominated.

The worst part is not that every single sentence contains at least one lie, but that the whole thing sounds detailed and real. To someone who wasn’t following matters closely, it might well all sound plausible.

This all happens not because LLMs are smart, in the sense of being able to compare what they say with the world, but simply because they are good at making new sentences that sound like old sentences, the essence of autocomplete. Ezra and I harped on their propensity to generate bullshit because bullshit was and is their fundamental product.

§

Earlier today I learned that 2 billion people are eligible to vote in 2024, in scores of elections around the globe.

Tyler Cowen tried to argue yesterday in his Bloomberg column that misinformation doesn’t matter.

Anybody remember Brexit?

Gary Marcus still thinks LLMs are too dumb to distinguish facts from fiction, and that we really ought to commit to building AI that can.

You-learn-a-new-thing-every-day update: Maybe Cheney is eligible? See https://www.nbcnews.com/politics/congress/can-outsider-be-speaker-house-n441926

From Tyler Cowen's column: "If anything, large language models might give people the chance to ask for relatively objective answers."

Oh dear.

LLMs pass their output through several modules to provide grammatically correct sentences.

Not one of them is the 'veracity' module.