Give me one reason to stay here

And I'll turn right back around

– Tracy Chapman

The best meme on X yesterday featured Idris Elba, coughing and nearly choking in utter you-have-got-to-be-kidding-me disbelief.

I watched the video (sorry, I can only embed a still here) about 10 times and could not stop laughing. It is pretty obvious to most that, in asking for $7 trillion, Sam Altman has jumped the shark.

§

Here are seven reasons why we should gather together to tell the aspiring young CEO that the world does not—and should not—revolve around him:

Energy and Climate: $7 trillion worth of AI infrastructure used to full capacity would use (in precise technical terms) a metric shit ton of energy. And let’s not kid ourselves about it all being “renewable”. Someone on X compared the (current) needs of GenAI to the power consumption of the entire nation of Germany. My guess is that this is actually a very conservative estimate going forward, particularly if models continue the trend of becoming bigger and more costly to train. Already there is pressure from GenAI to keep coal plants running. Needless to say (oh no, I guess I do have to say it), using that much energy is not something that should be taken lightly. So far GenAI has been mostly promise rather than delivery. No one has yet made the case that it is actually worth tearing up the globe.

Natural Resources: Extending the point above, ML researcher Sasha Luccioni told this to VentureBeat’s Sharon Goldman:

Full story at VentureBeat.

You should really read the whole story, but here’s a further excerpt:

For comparison, in September 2023 Fortune reported that AI tools fueled a 34% spike in Microsoft’s water consumption; Meta’s Llama 2 model reportedly guzzled twice as much water as Llama 1; and a 2023 study found that OpenAI’s GPT-3 training consumed 700,000 liters of water.

OpenAI has never disclosed those numbers for GPT-4; I can scarcely imagine what they will be for GPT-5.

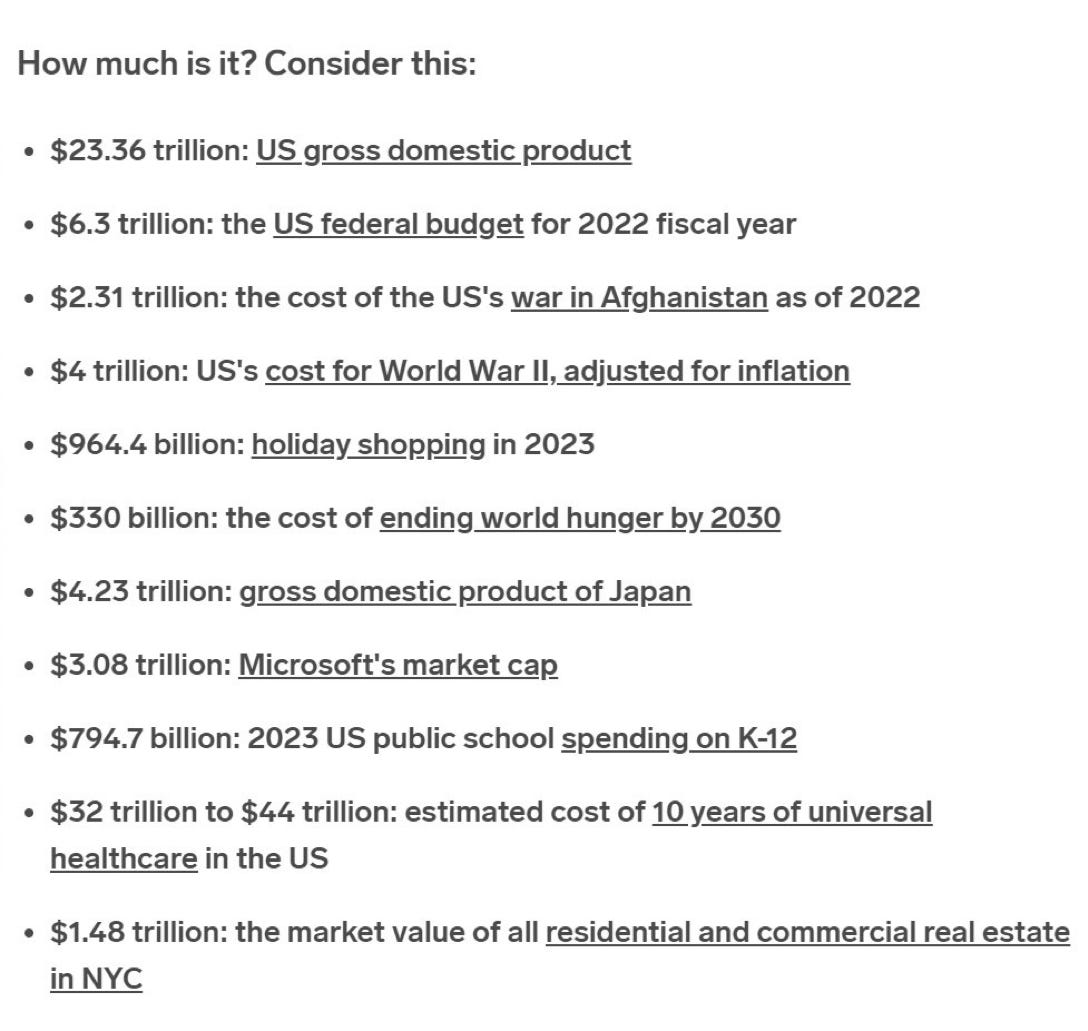

Economic resources: $7 trillion is almost ten times what the US spends on schools in a year and, per the chart below, 21 times the cost of ending world hunger. Money is not infinite, and there are inevitably opportunity costs. I know the fantasy here is that generative AI will make the whole pie vastly bigger, but as far as I can tell, running it is so costly that OpenAI hasn’t yet even turned a profit. Making even a tenth of the $7T investment, and giving it all to a guy who has never even exited one of his own companies, all on promise? Who is kidding whom? As one commentator put it on X just now, “Imagine all the frontier ideas we could say ‘Yes’ to with $7 trillion! One of the reasons we can't do so right now is that all those resources are getting sunk into chatbots.”

Financial risk: A project that costs $7 trillion is inevitably going to be seen as too big to fail. Any bailout could easily shatter the world’s economy. Even if AI doesn’t kill all or many of us directly, as some people fear, the collapse of a $7 trillion project could trigger a devastating worldwide financial depression, far worse than the subprime mortgage crisis of 2007–2008. The burst housing bubble that is dragging China down now should be seen as a warning of what can happen when too much infrastructure is built too fast. But this could be far worse.

Human intellectual capital: OpenAI has argued that they literally can’t build what they are building without an exemption from copyright that would crush artists, musicians, writers, and other creators.

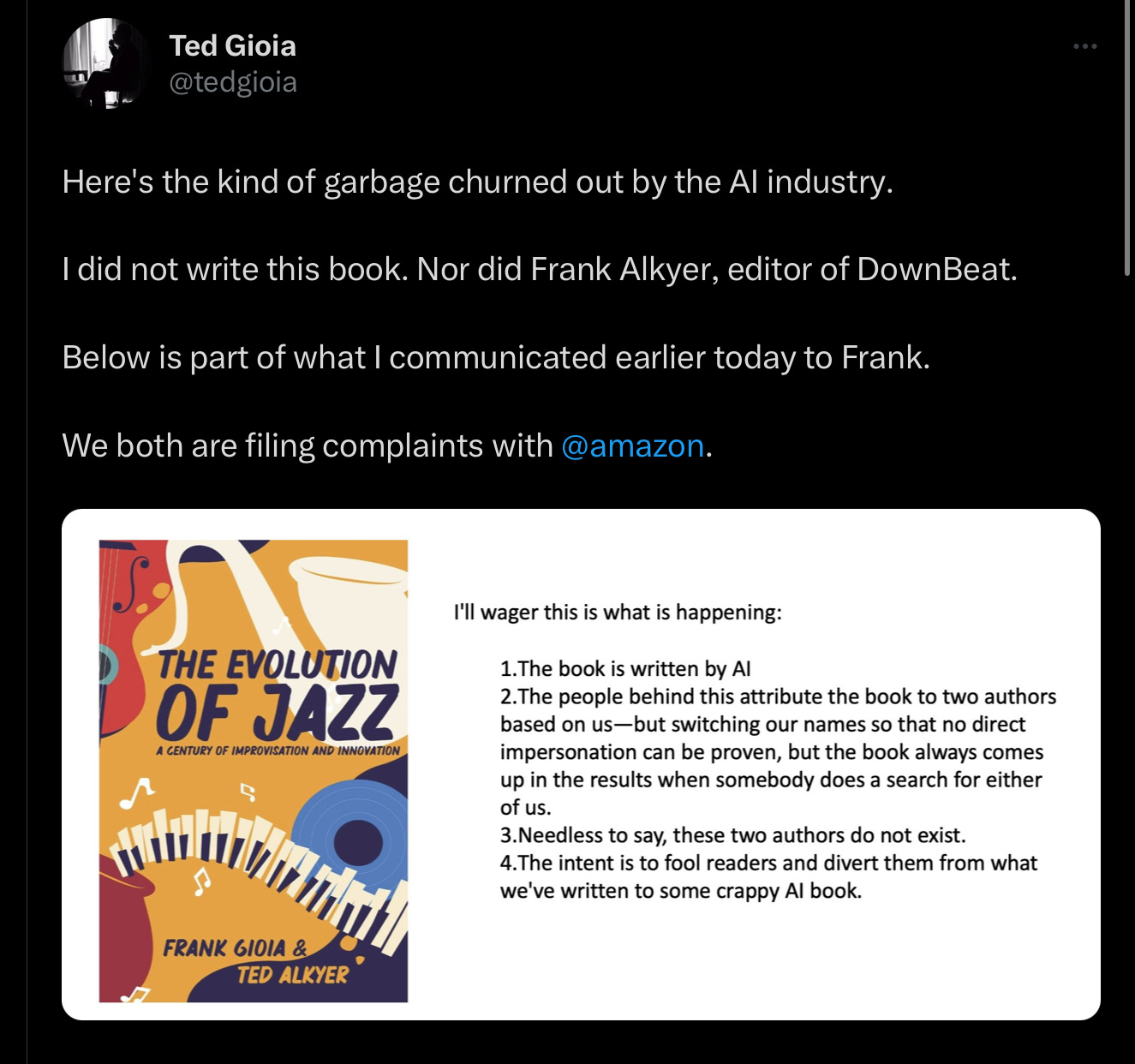

Negative externalities: OpenAI is absorbing essentially none of them, whether the cost of dis- and misinformation, cybercrime, or, most recently, fake books, a scam that has hurt at least three authors I know over the last two months. The latest report, this time from the noted music writer Ted Gioia, came yesterday, regarding a fake book by a “Frank” Gioia:

It is bad enough that large language models are making this kind of stuff easy now; it is worse that companies like OpenAI seem content to let society bear all the costs, like some careless industrial plant belching out toxic chemicals back in the day. Even war is not out of the question. As Sharon Goldman noted yesterday, “shortages of rare earth minerals such as gallium and germanium have even helped inflame the global chip war with China”. If the battles over resources become acute enough, actual war could ensue. And, let’s be honest, folks, the “alignment problem” isn’t remotely solved. Many of the leaders in AI have signed a letter saying this technology could kill us all. Why are we in such a big rush?

§

Speaking of not being in a rush, Phil Libin, who has spent years both as CEO and venture capitalist, emailed me another excellent point:

Such an investment is premature simply because we don’t yet know what kind of specialized chips, and specialized assembly lines, we’ll actually need. The field is developing too quickly and the real use cases haven’t been proven yet. We should get to something real first, then scale it. It’s a good thing Sony didn’t convince the world to dump $7T ($1.33T in 1977 dollars) into Betamax factories.

Let’s not by any means abandon AI research altogether; I do still see great hope if we can get to more trustworthy forms of AI. But for now we should be focusing primarily on safety and innovative new approaches, rather than rushing to scale up existing, problematic architectures with unfathomable and unpredictable consequences.

After all, as Libin points out, “a colossal flop of a $7T … project could set back legitimate AI development for another decade.”

Libin continues, “WorldCom … bur[ied] a few billion $ into fiber wayyy before anyone needed it [and] it precipitated a pretty big scandal and economic shock. This is … 1000x larger.”

If you want to go fast, fine; if you want to go insanely fast, you risk screwing the pooch, and possibly the entire field of AI along with it. Even so-called effective accelerationists should wonder whether Sam is playing his cards correctly.

§

We should all say “No” to reckless AI expansionism — unless and until tech leaders can show persuasively that the technology is mature enough that such expansion would demonstrably yield enormous net benefit.

Taking a real chance at wrecking both society and the environment on little more than vague speculation makes no sense.

Gary Marcus has grown tired of the narcissism of Silicon Valley.
