Should we worry more about short-term AI risk or long-term AI risk?

We can’t just act as if nothing is happening here. And we can’t act like we have infinite time to decide what to do, either.

On holiday, but concerned enough to write. A few quick notes:

In The Atlantic earlier today, I wrote about the immense near-term risks of mass-produced misinformation: “It will be an uphill, ongoing move-and-countermove arms race from here… If we don’t start fighting now, democracy may well be overwhelmed by misinformation and consequent polarization—and perhaps quite soon. The 2024 elections could be unlike anything we have seen before.”

On Sam Harris’ podcast Making Sense last week, I had an intense and fascinating discussion with Berkeley Professor Stuart Russell about both short-term and long-term risks in AI, one that left me a little shaken. In some ways I play the moderate to Stuart Russell’s alarmism. But I’m not that moderate. The disorganized release of Sydney has left me significantly less confident in the AI industry’s competence and eagerness to police itself. Of course, I view ChatGPT as only dimly related to artificial general intelligence, but I do see it as a dress rehearsal. And, by any reasonable measure, that dress rehearsal went badly. Russell and I discuss all of this. To me the only solution to the long-term risk issue is to build machines with consensus human values, but we are a long way from knowing how to do that. Russell (who shares my minority view that machines ought to have values they can reason over) wants machines to learn those values from data, without innate constraint, and I am worried about where insufficiently constrained learning might lead. Some said it was the AI podcast of the year; I do think it is worth your time.

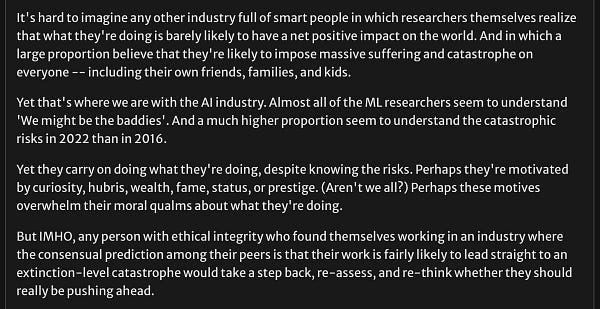

Katja Grace, lead researcher at AI Impacts, recently surveyed 559 machine learning researchers on how worried they are about really serious, truly bad outcomes for humanity stemming from AI. A lot of machine learning researchers are worried. And yet most people carry on as if they weren’t. Psychologist Geoffrey Miller just pointed out the contradiction in no uncertain terms; it’s worth pondering what he had to say:

What’s more scary? @KatjaGrace’s study showing how many ML researchers are forecasting serious levels of AI risk, or the fact that so many ML researchers seem indifferent to that possibility? @primalpoly rips into the ML community here: https://t.co/gH3Sc2vvVE

2022 survey participants' expected AI outcomes: 14% of area to 'extremely bad' (dark grey), 31% total on net bad (dark grey + red) https://t.co/3UEoiEpqUn (AI Impacts, @AIImpacts)

Matthew Cobb’s reply to my tweet, below, is worth pondering; not sure we are at that moment, but are we sure we aren’t?

§

The genie is already partway out of the bottle. Large language models are here to stay; their recipe is well understood and not hard for people skilled in the art to replicate, and large pretrained models are out there for the taking on the dark web. Geoffrey Miller has lately been campaigning for an outright pause on AI, both research and deployment. I have called for something less: stricter regulations governing deployment. Either way, I think we need to raise these questions seriously, sooner rather than later.

To be honest, I can no longer even decide whether I am more concerned about the short-term risks (misinformation undermining democracy tops my own list, though issues of bias continue to loom large) or the long-term risks (e.g., machines with messy values, perhaps induced from mediocre machine learning, and too much power).

I frankly don’t know what we should do.

But I am with Ezra Klein in his most recent op-ed, in which he wrote, “One of two things must happen. Humanity needs to accelerate its adaptation to these technologies or a collective, enforceable decision must be made to slow the development of these technologies. Even doing both may not be enough.”

We can’t just act as if nothing is happening here. And we can’t act like we have infinite time to decide what to do, either.

Gary Marcus (@garymarcus), scientist, bestselling author, and entrepreneur, is a skeptic about current AI but genuinely wants to see the best AI possible for the world, and still holds a tiny bit of optimism. Sign up for his Substack (free!), and listen to him on Ezra Klein’s podcast. His most recent book, Rebooting AI, co-authored with Ernest Davis, is one of Forbes’s 7 Must-Read Books in AI. Watch for his new podcast, Humans versus Machines, this spring.

Gary, you asked, so … The short-term risks to democracy and the 2024 elections cannot be overstated. But if we survive that, the long-term risks are literally beyond our ability even to begin to conceptualize. In our “post-truth” world, it has been extremely difficult to distinguish what is true, or at least more accurate, from what is untrue or even a lie. Until now, with enough time and effort, those who cared could find the ‘more true’ instead of the ‘not true,’ but that was before search purposely repositioned itself to become the ultimate delivery mechanism for the chaos.

That said, in the slightly longer term, the false bravado and fake intelligence manifested by current iterations of pretend AI will create social turmoil and upheaval, as well as mental and well-being injury, harming individuals, families, communities, and countries in ways that go far beyond what is being discussed today. And there is no government, or coalition of governments, other than an authoritarian one, that can develop and enforce regulations quickly enough even to attempt to stop this.

And never in human history has there been universal agreement on values, or any form of consensus on human values (and the human values we may imagine or desire cannot be found in biased data, and all data is biased). The bigger challenge, embedding these ‘values’ into non-reasoning technologies and enforcing adherence to them within the extraordinarily short time window required, cannot be met except, again, by an authoritarian regime. Values in an authoritarian regime do not come from the consensus of the people; they are dictated, designed solely to benefit the authoritarians, which in the end may not be a government at all.

Physics has Newton's 3rd law. Do social scientists have a law of unintended consequences?

Have you noticed that in the early days of the Internet, when there was no spam or clickbait, you could search for something and get a real, helpful result, but not anymore?

Is it possible that the mass generation of "misinformation" (yech, that word should be banned) will simply cause users to look elsewhere for information they can trust?

Consider the rise of The Free Press (Bari Weiss). Or Substack?

Isn't this a reaction to the failure of mass media to do their jobs?

I guess you are correct to worry about the consequences of AI, but what about that third law?

Thanks for reading my mental wanderings.