Sorry, GenAI is NOT going to 10x computer programming

Here’s why

We spent 18 months hearing about how Generative AI was going to “10x” coding, improving programmer productivity by a factor of 10.

The data are coming in – and it’s not.

§

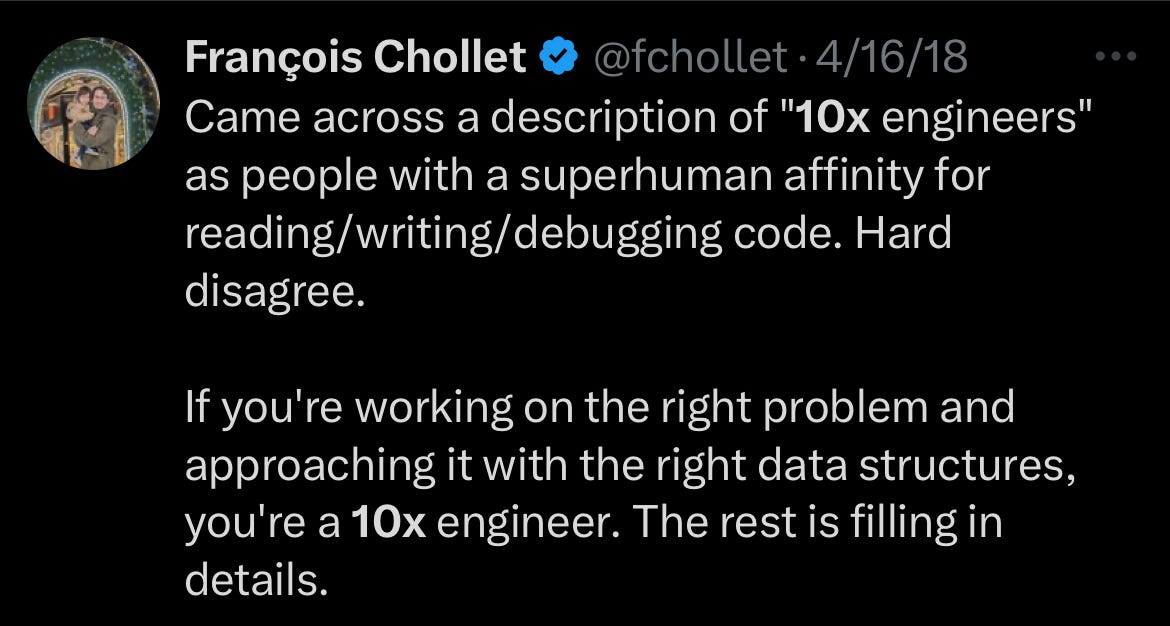

Influencers have been endlessly posting stuff like this

Here’s the hypiest AI influencer of them all, reasoning by analogy with putative coding gains:

§

Data, though, is where hype goes to die. Two recent studies show nothing like “10x” improvement:

One result with 800 programmers shows little improvement and more bugs.

Another somewhat more positive study doesn’t look at bugs, but shows moderate (26%, not 1000%) improvement for junior developers, and only “marginal gains” for senior developers.

An earlier study by GitClear showed “downward pressure on code quality.”

Yet another earlier study (with earlier models) showed evidence of users writing less secure code, which of course could lead to a net loss of productivity long term.

No single study is perfect. More will come. But to paraphrase from memory one of my childhood heroes, the baseball sabermetrician Bill James: if an elephant has passed through the snow, we ought to be able to see its tracks. The tracks here are pointing to modest improvements, with some potential costs for security and technical debt, not 10x improvement.

A good IDE (versus none) probably is a much bigger, much less expensive, much less hyped improvement that helps more people more reliably.

§

Why aren’t we seeing huge gains? In many ways, AI researcher Francois Chollet nailed this years ago, long before GenAI became popular:

10x-ing requires deep conceptual understanding – exactly what GenAI lacks. Writing lines of code without that understanding can only help so much.

Use it to type faster, not as a substitute for clear thinking about algorithms + data structures.

And, as ever, don’t believe the hype.

Gary Marcus has been coding since he was 8 years old, and was very proud when his undergrad professor said he coded an order of magnitude faster than his peers. Just as Chollet might have predicted, most of the advantage came from clarity — about task, algorithms and data structures.

This aligns with my experience. I’m a Sr. Engineer and we use Copilot, Cursor, ChatGPT, etc. at my company.

Personally, I haven’t seen a meaningful uptick in feature velocity since we adopted GenAI coding assistants, but I am seeing more code volume from Jr. devs with bizarre bugs. My time digging through PRs has ticked up for sure.

In my dev work I’ll find myself turning off Copilot half the time, because its hallucinated suggestions get pretty distracting.

A software developer learns how to code. An LLM doesn't even know what code is. Throwing together a probabilistic sequence of vectors that appear many times in GitHub repos will only get you so far.