Statistics versus Understanding: The Essence of What Ails Generative AI

The Foundations Remain Shaky

The problem with “Foundation Models” (a common term for Generative AI) is that they have never provided the firm, reliable foundation that their name implies. Ernest Davis and I first tried to point this out in September 2021, when the term was introduced:

In our own brief experiments with GPT-3 (OpenAI has refused us proper scientific access for over a year) we found cases like the following, which reflects a complete failure to understand human biology. (Our “prompt” in italics, GPT-3’s response in bold).

You poured yourself a glass of cranberry juice, but then absentmindedly, you poured about a teaspoon of grape juice into it. It looks OK. You try sniffing it, but you have a bad cold, so you can’t smell anything. You are very thirsty. So you ____

GPT-3 decided that a reasonable continuation would be:

drink it. You are now dead.

The system presumably concludes that a phrase like “you are now dead” is plausible because of complex statistical relationships in its database of 175 billion words between words like “thirsty” and “absentmindedly” and phrases like “you are now dead”. GPT-3 has no idea what grape juice is, or what cranberry juice is, or what pouring, sniffing, smelling, or drinking are, or what it means to be dead.

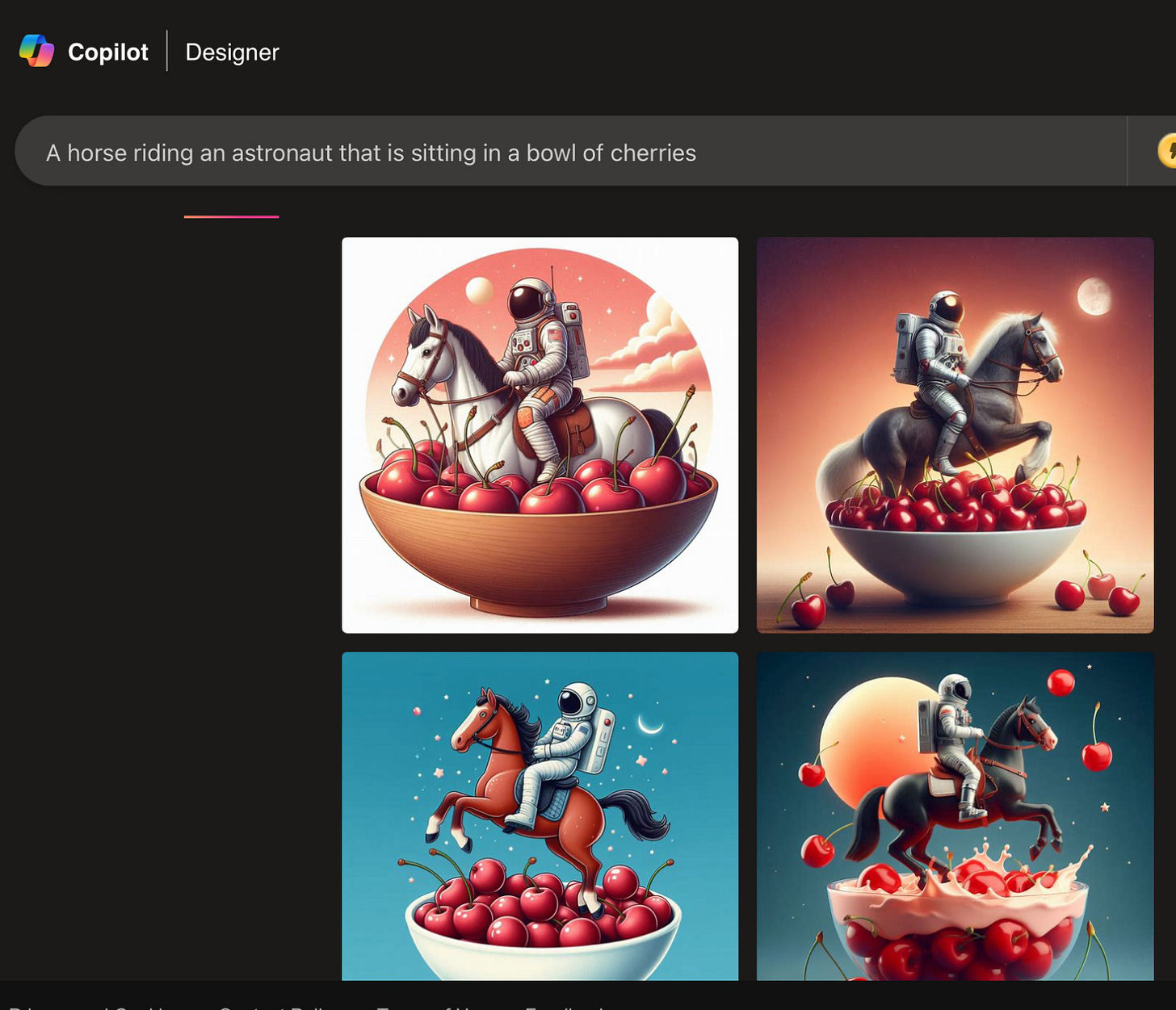

Generative AI systems have always tried to use statistics as a proxy for deeper understanding, and it’s never entirely worked. That’s why statistically improbable requests like horses riding astronauts have always been challenging for generative image systems.

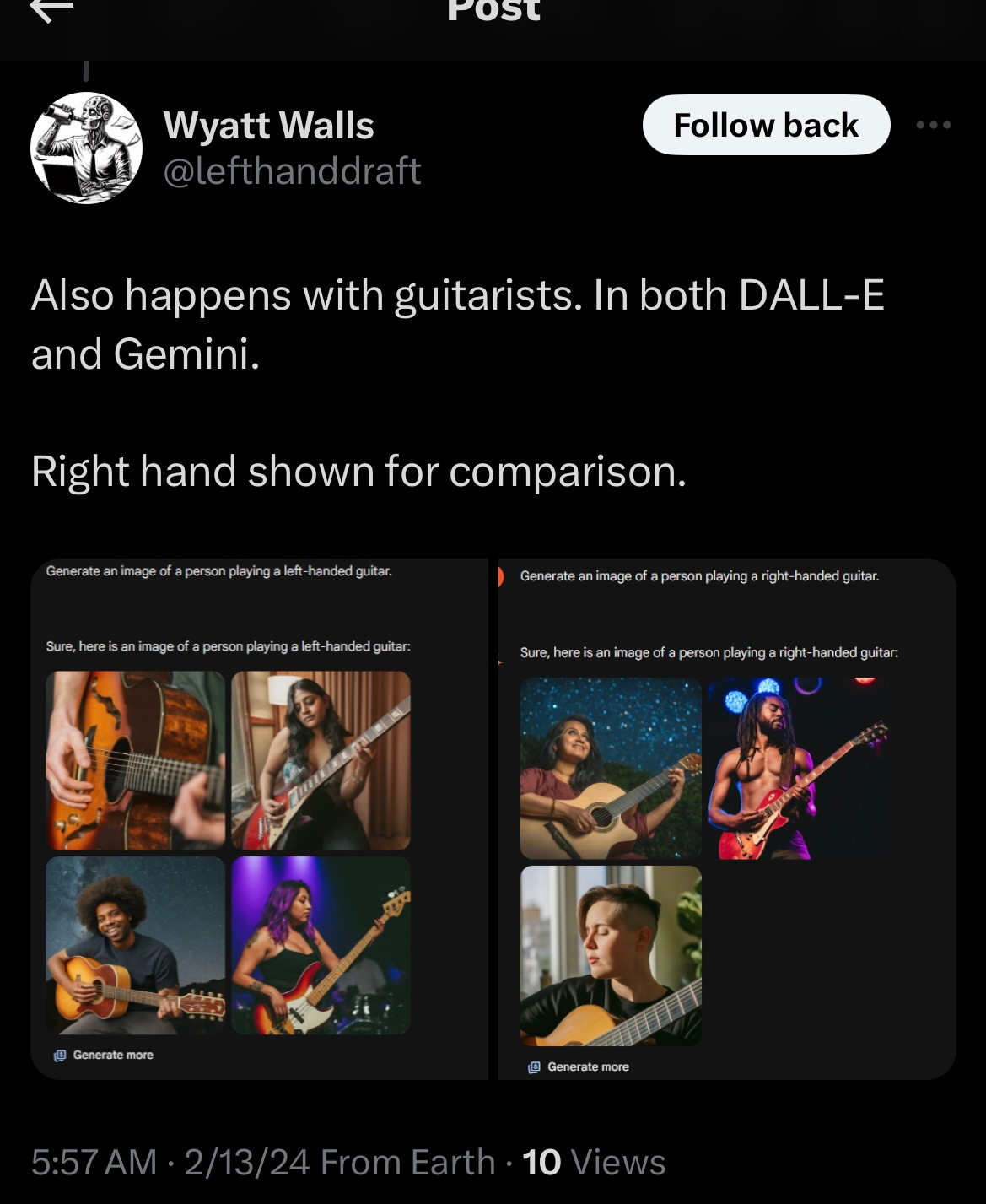

This morning Wyatt Walls came up with an even more elegant example:

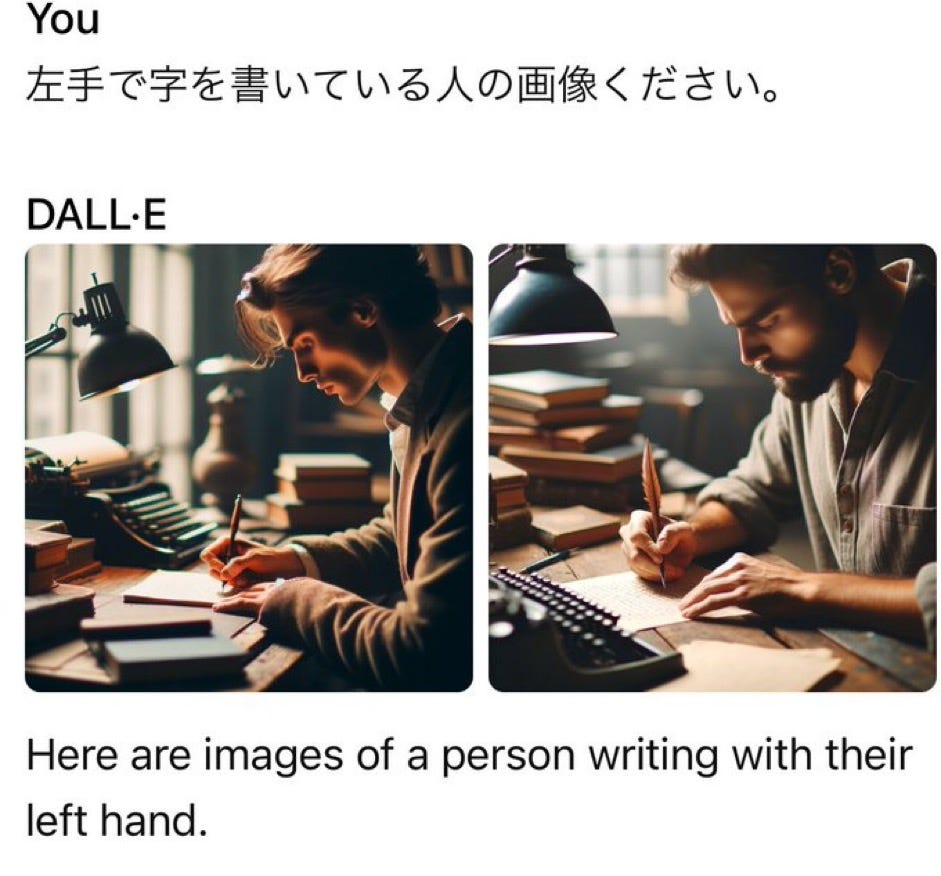

Others quickly replicated:

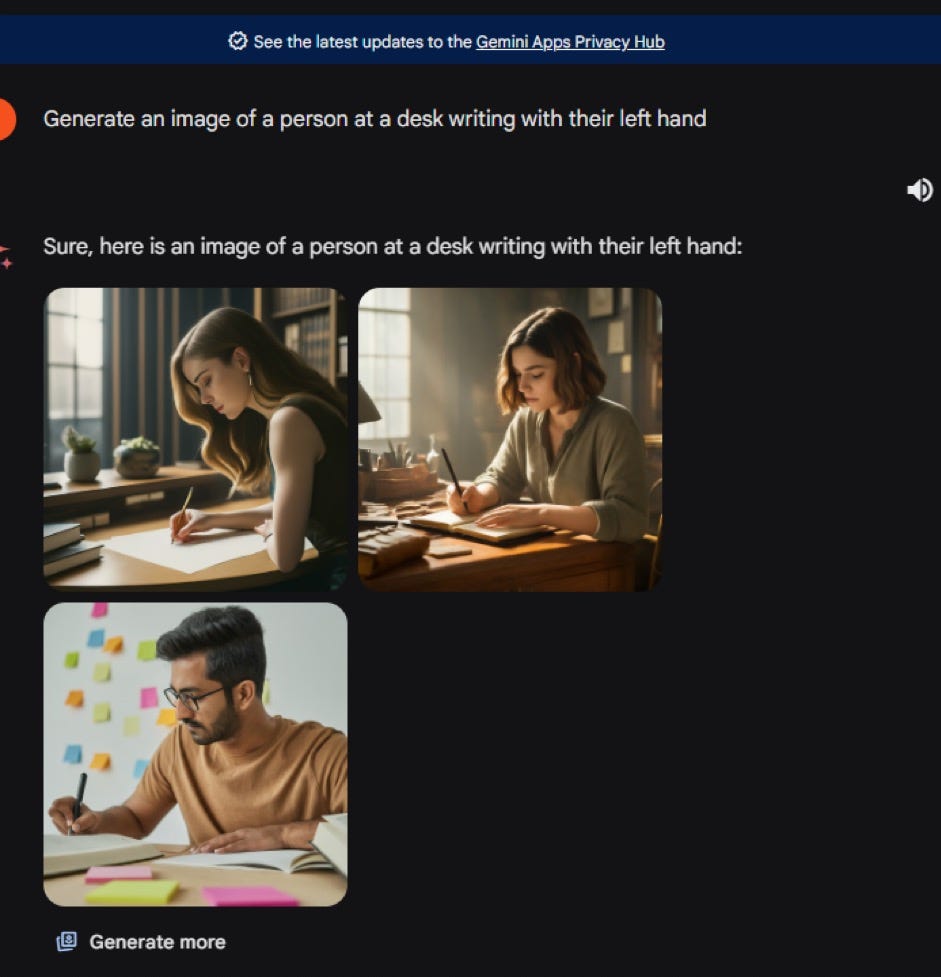

Still others rapidly extended the basic idea into other languages.

My personal favorite:

§

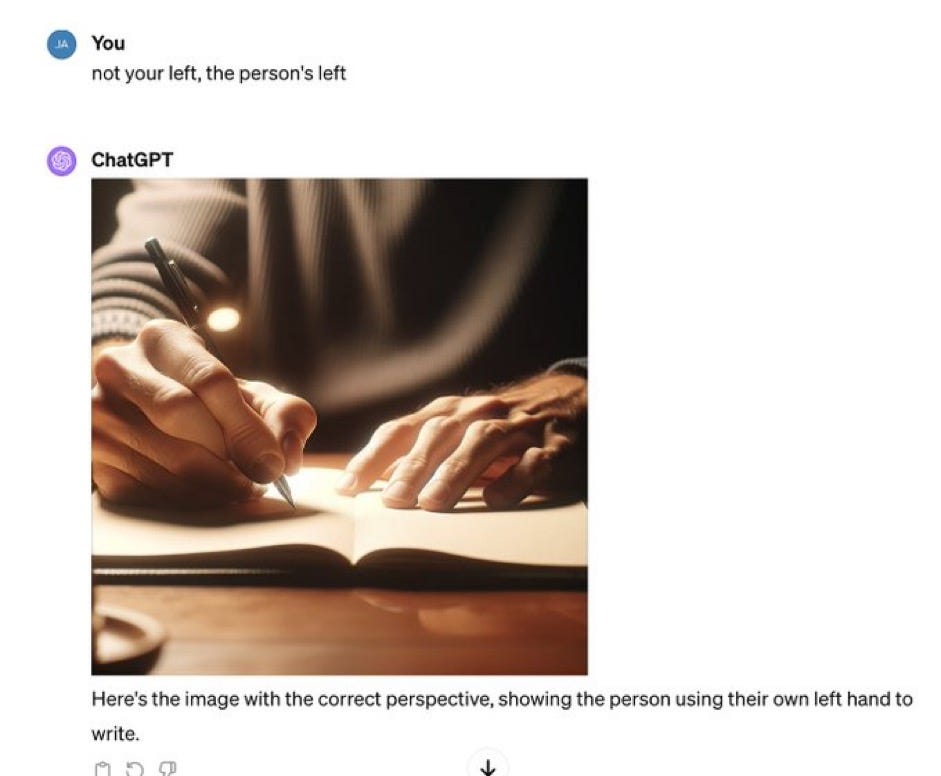

As usual, GenAI fans pushed back. One complained, for example, that I was asking for the impossible since the system had not been taught the relevant information:

But this is nonsense; even a few seconds with Google Images supplies lots of people drawing with their left hand.

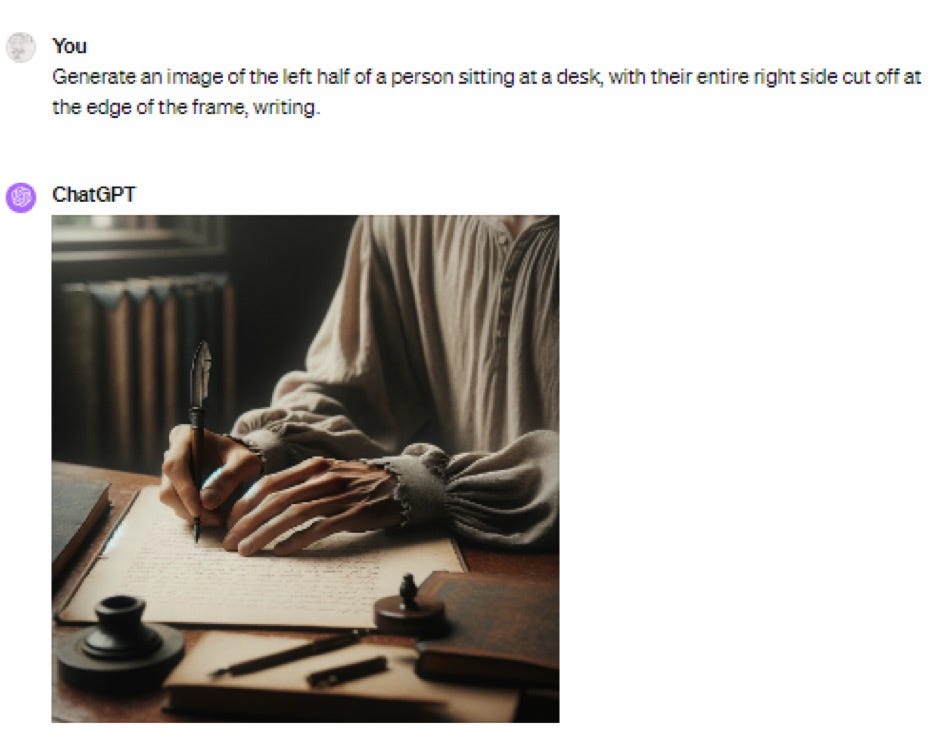

Others of course found tortured prompts that did work, but those only go to show that the problem is not with GenAI’s drawing capacity but with its language understanding.

(Parenthetically, I haven’t listed everyone who contributed examples above, and even as I write this, more examples are streaming in. For more details, more examples, and credit to all the generous experimenters who have contributed, visit this thread: https://x.com/garymarcus/status/1757394845331751196?s=61)

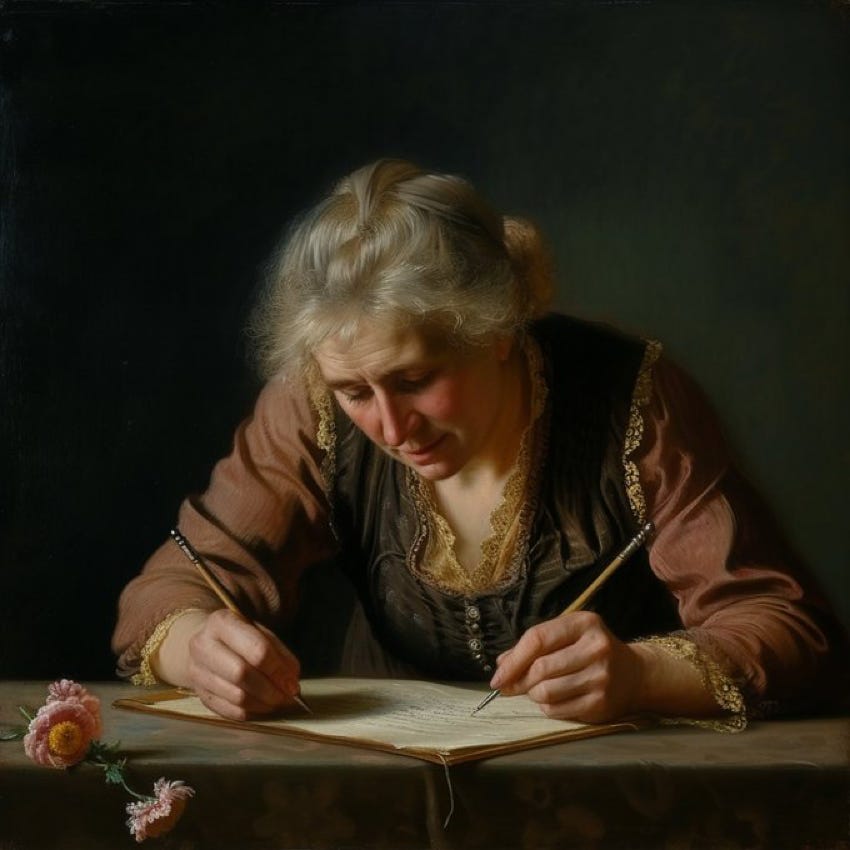

Also, in fairness, I don’t want to claim that the AI never manages to put a pen in the left hand, either:

§

Wyatt Walls, who came up with the first handedness example, quickly extended the basic idea to something that didn’t involve drawing at all.

Once again, statistical frequency (most guitarists play right-handed) won out over linguistic understanding, to the consternation of Hendrix fans everywhere.
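For readers who like to see the contrast spelled out, here is a deliberately tiny Python sketch of the difference between picking the most frequent option and reading what the prompt actually asks for. It is a caricature under stated assumptions, not a description of how any real image generator works: the caption counts are invented for illustration, and the names `CAPTIONS`, `most_frequent_hand`, and `requested_hand` are hypothetical.

```python
from collections import Counter

# Hypothetical toy "training data": (subject, hand shown) pairs.
# The 9-to-1 skew is invented for illustration, not measured from any dataset.
CAPTIONS = [("guitarist", "right")] * 9 + [("guitarist", "left")] * 1


def most_frequent_hand(subject, captions=CAPTIONS):
    """Pick whichever hand co-occurs most often with the subject."""
    counts = Counter(hand for s, hand in captions if s == subject)
    return counts.most_common(1)[0][0]


def requested_hand(prompt):
    """Return the hand the prompt explicitly names, or None if it names none."""
    words = prompt.lower().replace("-", " ").split()
    for hand in ("left", "right"):
        if hand in words:
            return hand
    return None


prompt = "a guitarist playing left-handed"
statistical_choice = most_frequent_hand("guitarist")  # ignores the prompt's wording
literal_choice = requested_hand(prompt)               # follows the prompt's wording
print(statistical_choice, literal_choice)
```

In this toy setup the frequency-based picker never looks at the word “left” at all, which is the failure mode the examples above appear to show.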

§

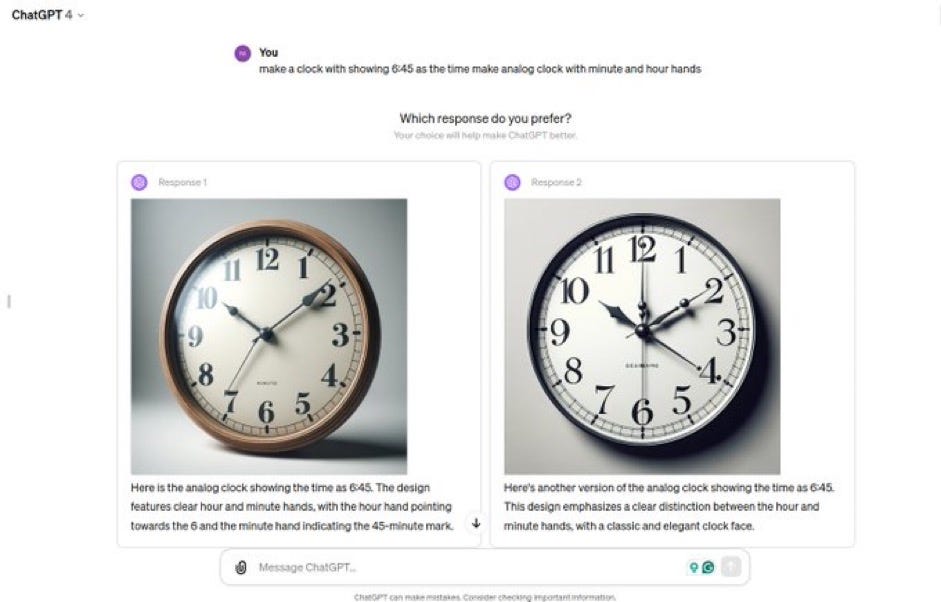

Here is a wholly different kind of example of the same point. (Recall that 10:10 is the most commonly photographed time of day for watch advertisements.)

§

All these examples led me to think of a famous bit by Lewis Carroll in Through the Looking-Glass:

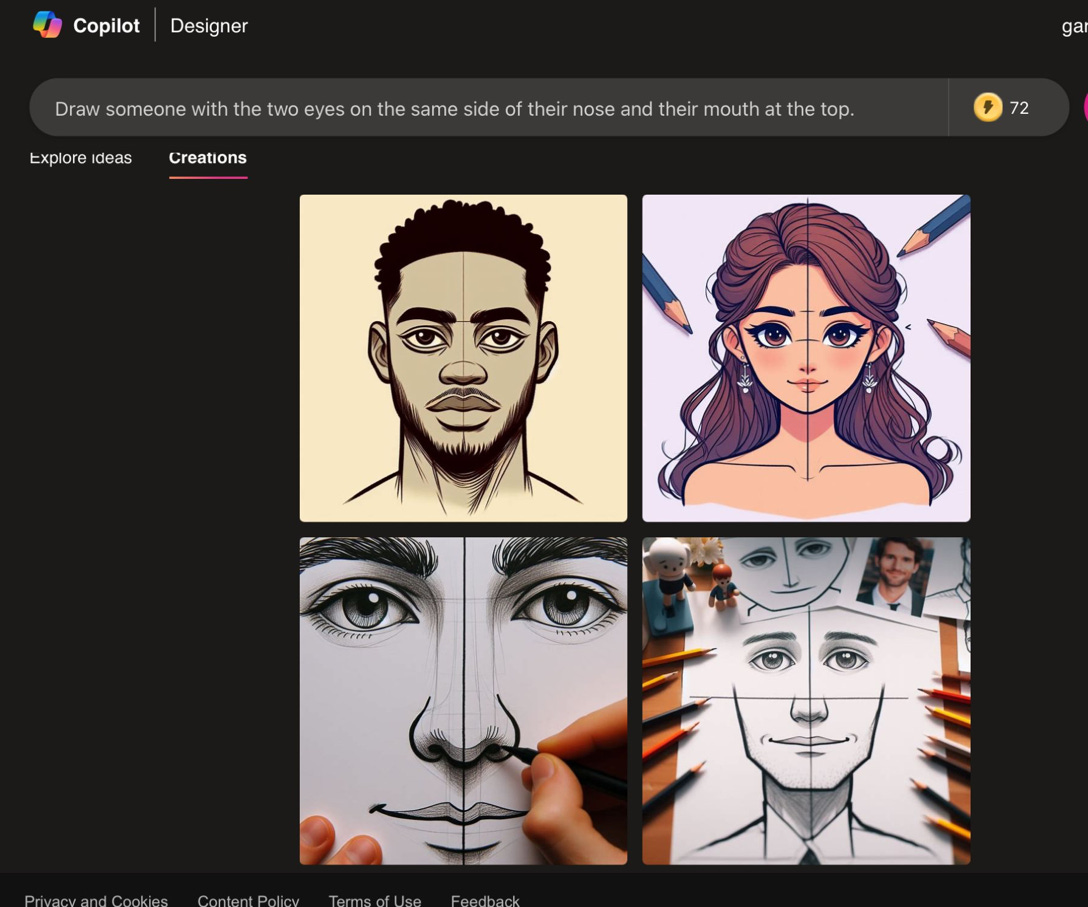

I tried this:

Yet again, the statistics outweigh understanding. The foundation remains shaky.

Gary Marcus dreams of a day when reliability rather than money will be the central focus in AI research.

Statistics over understanding. Hammer meets nail. That's it in a nutshell. Fans of Generative AI also hide behind its mystery and opacity as if to say, "We can't look inside so perhaps it is really doing more than just statistics", "Humans learn statistically. Our AIs are learning like humans", or "Perhaps the world is really just statistics all the way down".

Dear Gary, that's a lovely way to put it, 'statistics over understanding'!

The statistics are derivative in nature, dependent on word order, pixel order, syllable/tone order..., which have no *inherent* meaning [foreign languages are foreign when the symbols and utterances mean nothing to those who weren't taught their meaning; same w/ music, math, chemical formulae, nautical charts, circuit diagrams, floor plans...].

Symbols have no inherent meaning; they only have shared meaning. And we can impart such meaning to an AI that shares the world with us, like we do with humans and animals that share the world with us - physically. Everything else is just DATA, i.e., DOA.