Sub-Optimal

What was missing from Tesla’s new Optimus demo was perhaps even more important than what was there

The Optimus demo turned out to be a bit of a dud. Some wag on Twitter posted this, with footage drawn from the demo:

A whole pile of well-known roboticists gave their early reactions, too, and it wasn’t pretty.

The most positive thread I read from a roboticist was this one. It was justifiably impressed with Tesla’s quick turnaround (point 1 is legit!) but hardly dripping with wow:

Also, Animesh Garg noted that there might be some advances on the motor control side. And I think all of us appreciated how much Musk shared the spotlight with his engineers. It might not be state of the art, but no high school team could actually have pulled this off quite that fast.

But many roboticists, like Cynthia Yeung, were absolutely scathing; what makes a robot a robot is autonomy, the ability to navigate the world and make good safe choices without relying on humans. We didn’t see much of that:

And Yeung posted this tweet, too (among many others in a long thread worth reading):

Ken Goldberg, too, wondered what portion of what we saw was genuinely autonomous and what was merely tele-operated (i.e., operated by remote control), which is about as damning as one roboticist can be to another while still being polite:

The biggest winner of the night, arguably, was the 30-year-old, thrice-sold robotics firm Boston Dynamics, which started to trend on Twitter (thanks for the free PR!). As more than a few onlookers noted, BD seems way ahead of what we saw last night. (Visit YouTube to see this at a better frame rate):

§

The challenge for Tesla isn’t really so much the mere fact that Boston Dynamics robots are ahead (or that Agility Robotics is also doing similar work). With enough investment, Tesla might in principle catch up. If Musk really wants to win the robotics race, he has the resources to do so. (Though he clearly has not invested nearly enough so far.)

What I didn’t see last night was vision.

I mean this in two different senses.

First, there was no clearly outlined vision for what Optimus would do, nor much justification for why Tesla is building the robot in this specific way. There was no decisive justification for using a humanoid robot (rather than, e.g., just an arm), no clarity about the first big application, no clear go-to-market strategy, and no clear product differentiator. It was the kind of thing you see in a seed-stage robotics startup, but it was surprising coming from the CEO of one of the world’s largest companies. There was a lot of bluster (we will 100x the world’s productivity) but no road map.

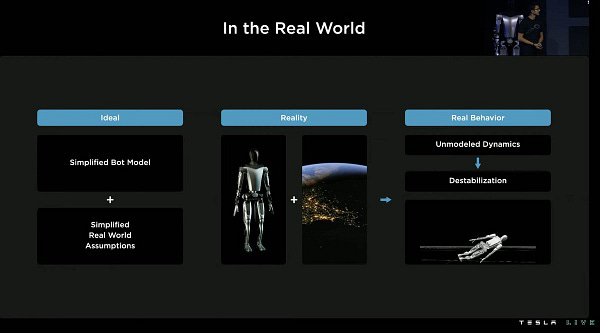

Second, there was very little vision for how Tesla would build the cognitive part of the AI they will need, beyond the basics of motor control (which Boston Dynamics already does so well), nor much recognition about why robotics is so hard in the real world. How will the system decide what is safe and worth doing in a home filled with unfamiliar objects, and humans and pets that are coming and going? How will it keep from wreaking accidental mayhem? How will it understand the difference between what people say and what they really mean?

All there was, really, was a prayer to the god of big data. At one point, in the question period, Musk argued that Tesla was likely to contribute to AI (and solve whatever needs solving) because it would have the most data and the most compute, a weak version of the implausible alt intelligence hypothesis I discussed in May.

To begin with, both premises are arguable. Does Tesla actually have more humanoid robotics data than Google or Boston Dynamics do? Certainly not yet. The implied subpremise is that lots of people will buy Tesla’s $20k robot, leading to the collection of a lot of data, but that’s speculative at best, and years away even in an optimistic scenario. (Nobody is paying $20k for what they saw last night.) With respect to raw computational power, Tesla might eventually outgun Boston Dynamics, depending on how things go, but I seriously doubt they could outgun Google if Google went all in on humanoid robotics.

The overall logic is even weaker. The reality is that AI needs genuine, paradigm-shift level innovation. Simply building ever bigger neural networks won’t cut it. The way I put it last night was this:

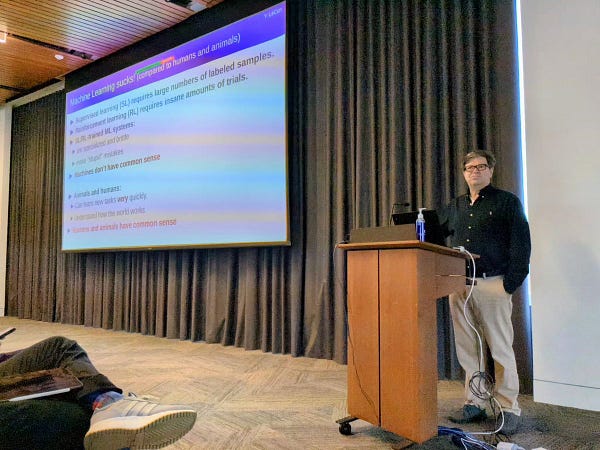

But don’t take my word for it. Here’s deep learning pioneer Yann LeCun giving a talk earlier this week:

If you read the top of the slide, it says “Machine Learning sucks! (compared to humans and animals)”, because it is so slow and inefficient by comparison. LeCun also points out (as I too have often said, e.g., in this 2015 review with Ernest Davis) that we know far too little about how to embed common sense into AI. We in AI have a long, long way to go. Data and compute alone won’t be enough. Meta, which has more data and compute than almost anyone, has finally woken up to that. Musk still hasn’t.

LeCun would probably agree that addressing common sense is the single biggest challenge facing robotics; without a solution to it, you cannot have humanoid robots in the home and expect them to be safe. We disagree about how to solve the problem, but we both see it as front and center. Musk barely even acknowledged the problem, and certainly didn’t lay out any sort of credible strategy for attacking it.

For me the most worrisome part of last night’s presentation was not the lack of a world-beating demo, but a lack of recognition of what would even be required.

In recent interviews (e.g., at TED 2022), Musk has acknowledged that self-driving is a lot harder than he anticipated, and might even be an “AI complete” problem that requires general artificial intelligence. The thing about such problems is, as Wikipedia nicely puts it, “Currently, AI-complete problems cannot be solved with modern computer technology alone”. Sooner or later he will recognize that humanoid robots, too, pose a whole set of challenges that lie well beyond our current grasp.

I admire Musk for trying, but I would have more confidence if I thought he understood more deeply the nature of the challenge.

Hi Gary, thanks for the excellent writeup! The Moravec 'coffee-making' challenge remains alive and well, Optimus (or even a BD robot) isn't about to solve it anytime soon.

An embodied presence by itself will not result in general intelligence, there needs to be matched 'embrainment' - a brain design that permits native representation of the world, using which the system can imagine, expect, reason, etc.

Instead, if the robot uses ML, it's simply computing outputs based on learned patterns in input data, which amounts to operating in a derivative computational world while being in the real physical one! There is no perception, no cognition, of the real world - because there is no innate experiencing of it.

Sure, it will work in a structured, mostly static, unchanging environment (a narrow/deep 'win' of sorts, in keeping with the history of AI) - but an average home is anything but.

Robustness in intelligence can only result from a design that can deal with exceptions to the norm (within limits - the more the limits, the less capable the system).

I always have thought of humanoid robots as the ornithopters of the robotics world. For a true AGI you need far more neurons and far denser connections than we can achieve in silico at present. I think the best we can do for now is use NNs for perception and higher level systems such as OpenCog and Open-NARS for higher level reasoning with a society of mind / drives approach.