Superhuman AGI is not nigh

Why Elon was probably wise not to take the bet

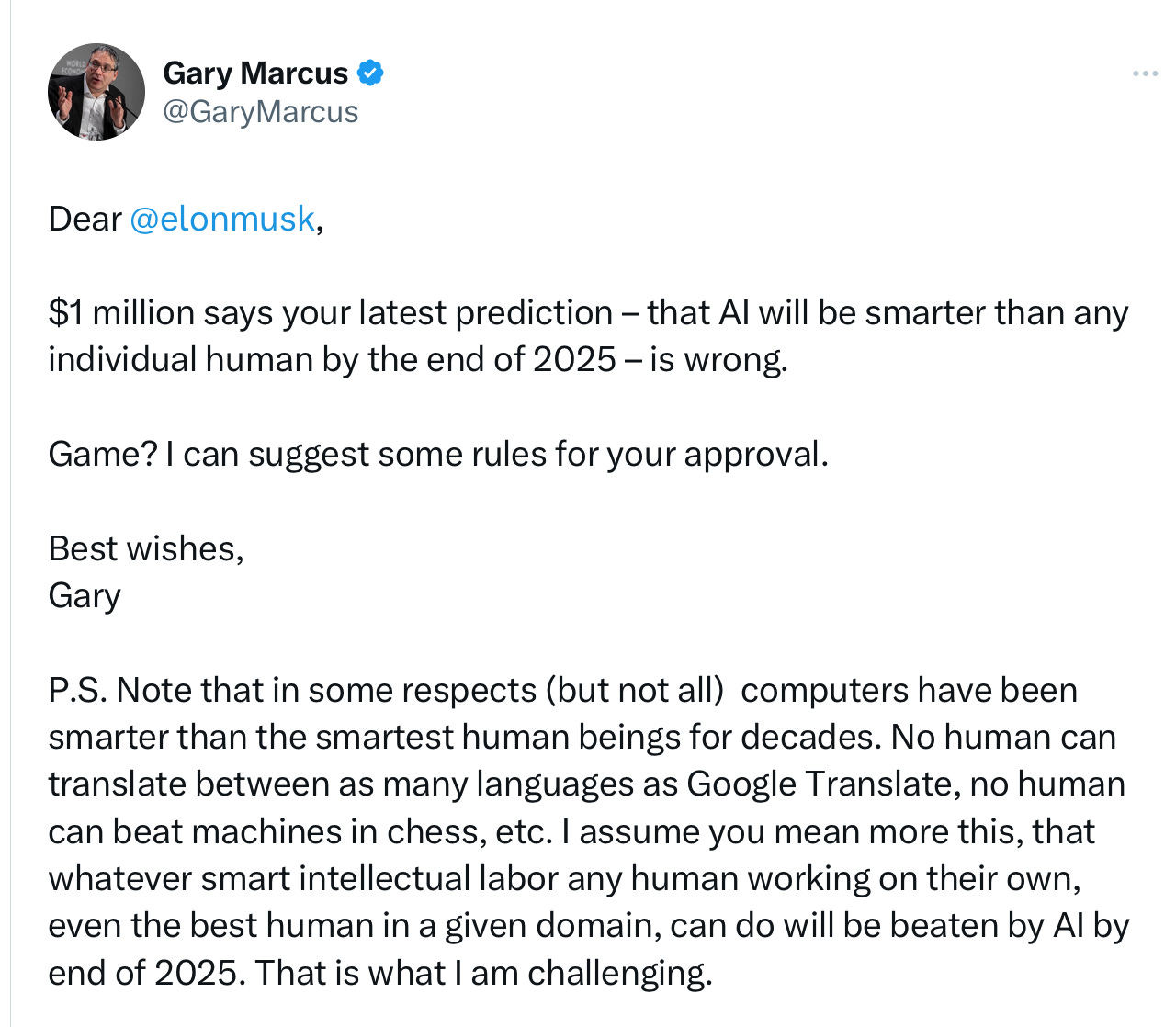

A few days ago Elon Musk claimed “My guess is that we'll have AI that is smarter than any one human probably around the end of next year…” Skeptical of this, I offered Musk a $1 million bet that AGI superior to the smartest individual humans would not arrive by the end of 2025, reprinted here for those who missed it. (The PS is important.)

Damion Hankejh raised the offer to $10 million.

In the days since, there has been no word from Elon. Indeed, one of the readers of this Substack set up a prediction market, and 93% of participants (including me) think he won’t take the bet.

The Wall Street Journal asked Musk’s people about the bet and got no reply.

§

If I were Elon, I wouldn’t take the bet either. His claim, if I understand it correctly, is a really, really strong claim, almost certain to be untrue. What I think Musk is saying (based on this and on similar but slightly clearer wording in March on the Joe Rogan show) is that by the end of next year AGI will be able to do any intellectual work that any human can do.

For Hankejh and me to win the bet, then, all we would have to do is show at least one piece of intellectual work that the very best humans can do and AGI can’t. Right now there are likely hundreds, maybe thousands or even millions, of such things.

Here are some things that ordinary people do that I seriously doubt AI will be able to do by the end of 2025.

• Reliably drive a car in a novel location that they haven’t previously encountered, even in the face of unusual circumstances like hand-lettered signs, without the assistance of other humans.

• Learn and master the basics of almost any new video game within a few minutes or hours, and solve original puzzles in the alternate world of that video game.

• Watch a previously unseen mainstream movie, follow the plot twists, know when to laugh, and summarize it without giving away any spoilers or making up anything that didn’t actually happen.

• Drive off-road vehicles, without maps, across streams, around obstacles such as fallen trees, and so on.

• Learn to ride a mountain bike off-road through forest trails

• Write engaging biographies and obituaries without obvious hallucinations that aren’t grounded in reliable sources.

• Clean unfamiliar houses and apartments

• Walk into random kitchens and prepare a cup of coffee or make breakfast

• Babysit children in unfamiliar homes and keep them safe

• Tend to the physical and psychological needs of an elderly or infirm person

• Write cogent, persuasive legal briefs without hallucinating any cases

And here are a few things that some of the most skilled humans are able to do at least occasionally:

• Write Pulitzer-caliber books, fiction and non-fiction

• Write Oscar-caliber screenplays

• Come up with paradigm-shifting, Nobel-caliber scientific discoveries.

All of the above seem outside the bounds of current AI. None seem to me to be within immediate reach.

§

As I was wrapping this up, Ernest Davis passed along a brilliant, wise letter to Nature Biotechnology by Berkeley Professor Jennifer Listgarten, called “The perpetual motion machine of AI-generated data and the distraction of ChatGPT as a ‘scientist’”, that is quite relevant here. You should read the whole thing, but here is an excerpt:

DeepMind researchers performed a fantastic feat with the development of AlphaFold2, substantially moving the needle on the protein structure prediction problem. Protein structure prediction is a tremendously important challenge with actual and still-to-be-realized impact. It was also, arguably, the only challenge in biology, or possibly in all the sciences, that they could have tackled so successfully …

As for ChatGPT and its relatives, these will undoubtedly continue to provide a new generation of incredibly useful literature synthesis tools. These literature-based tools will drive new engines of profound convenience, previously impossible and only dreamed of, such as those providing medical diagnosis and beyond, and also those not yet dreamed of. But they will not themselves, anytime soon, be virtual scientists.

I couldn’t agree more. And parallel arguments could be made in many domains.

§

To be sure, I could be wrong about a few of the items in the lists above. Some might get checked off by the end of 2025, but all of them?

No wonder Elon hasn’t taken the bet.

Gary Marcus has been making predictions about the limits of neural networks since 2001. Nearly all of them have held true.

When it comes to using LLM-based AI chatbots in medicine or in any life-and-death matter, we should be very cautious. No technique, however elaborate (prompt engineering, RAG, LoRA, etc.), can “guarantee” that an LLM-based AI system (a system, not “an AI”; let’s avoid anthropomorphism) will not confabulate. “Hallucination” is a poor name for this: hallucinations are sensory problems, whereas generating disheveled or nonsensical text is confabulation.

Forget Elon Musk’s prediction: if AI is able to perform arbitrary tasks at the level of an adult human of below-average intelligence by the end of next year, I’d call it a miracle.