The Five Stages of AGI Grief

And a look at how people keep trying to redefine (or even revoke) the goalposts of what Artificial General Intelligence means.

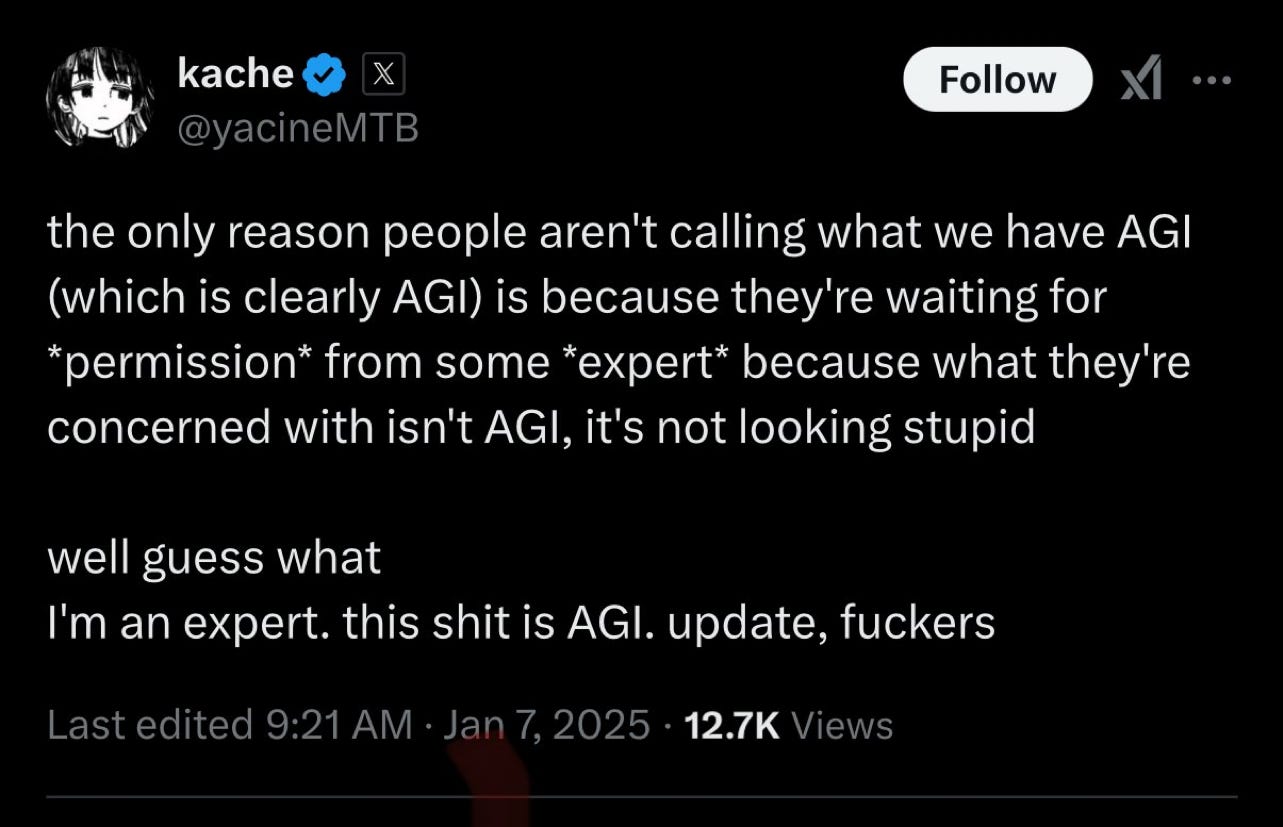

In terms of the five stages of AGI grief, this post (from an engineer at X) sits halfway between “denial” and “bargaining”, with a healthy dose of “anger”. It offers no definition of AGI and no engagement with the literature or with the criteria previously proposed.

In reality, the definition of AGI, while not perfect, is reasonably clear — and has been reasonably clear — for a long time.

Here’s what I came up with in May 2023, based on conversations with two of the people who coined the term, Ben Goertzel and DeepMind cofounder Shane Legg.

I take AGI to be “a shorthand for any intelligence ... that is flexible and general, with resourcefulness and reliability comparable to (or beyond) human intelligence”.

Both Goertzel and Legg signed off on it at the time. (Peter Voss, who likely coined the term independently and earlier, was also OK with that definition.)

Around the same time, Ernie Davis and I proposed some concrete examples in a bet I offered Elon Musk. They included watching a movie and telling you accurately what is going on (including things like character motivations and plot twists); doing the same with novels; working as a competent cook in an arbitrary kitchen (extending Steve Wozniak’s cup-of-coffee benchmark); and, at the upper bound, taking arbitrary proofs from the mathematical literature written in natural language and converting them into a symbolic form suitable for symbolic verification. Again, as the archives of X would attest, onlookers at the time agreed these were pretty reasonable tests of AGI.

Indeed, once upon a time (in February 2023, to be exact) OpenAI themselves defined AGI as “AI systems that are generally smarter than humans”, reasonably consistent with the definitions the rest of us had offered.

§

There has been a lot of mission creep ever since.

We can expect a lot more of that in 2025. Here are four common moves:

Economic redefinition, v1. On its charter page, OpenAI defines AGI as “highly autonomous systems that outperform humans at most economically valuable work”.

At one point, perhaps based on a suggestion from Vinod Khosla, OpenAI proposed (if I recall correctly) that AGI would be defined as a system that could do 80% of economically valuable human work. While such a milestone would be important economically and socially, it is also a departure from, and even a retrenchment from, the kind of cognitive criteria around flexibility and resourcefulness that people in the field had generally talked about. (One could imagine, for example, replacing jobs one at a time, each with its own separate domain-specific system. That would be impressive, but it would not reflect the General in Artificial General Intelligence.)

Economic redefinition, v2. More recently, OpenAI has apparently shifted towards defining AGI purely in terms of profits; according to The Information, Microsoft and OpenAI are considering a definition of AGI as a system that generates $100 billion in profits. By that absurd definition, Apple’s iPhone achieved AGI long ago. Any reference to cognition is dropped altogether.

Premature declaration of victory, through redefinition. In October 2023, Blaise Agüera y Arcas and Peter Norvig effectively tried to retrofit the definition of AGI to mean whatever AI systems happen to be doing now. Ernie Davis and I wrote a whole essay about why that didn’t really follow, called Reports of the birth of AGI are greatly exaggerated. (For example, they claimed “Frontier language models can perform competently at pretty much any information task that can be done by humans, can be posed and answered using natural language, and has quantifiable performance.” That’s just obviously not so: “write a biography of person X without making stuff up” remains pretty unreliable, and the same goes for asking frontier models to “play grandmaster-level chess without making up illegal moves”.)

Redefinition by providing no definition. Another common move is to write entire essays about the implications of AGI without making any commitment whatsoever as to what AGI is or how it is defined, as in the tweet I quote at the top and in Altman’s most recent blog post. Some people shift their goalposts; others try to duck specifying goalposts altogether.

§

If a lot of people’s goalposts have moved, mine have not. As before, I will be satisfied if and when we reach AI systems that are “flexible and general, with resourcefulness and reliability comparable to (or beyond) human intelligence”, and not until.

I will close with what Davis and I said in 2023, because every word still holds true.

> Here’s where we actually are, so much more boring, and so much more real: Current models trained on next-token prediction have remarkable abilities and remarkable weaknesses. The scope of those abilities and weaknesses of the current systems is not well understood. There are some applications where the current systems are reliable enough to be practically useful, though not nearly as many as is often claimed. No doubt the next generation of systems will have greater abilities and more extensive applications and will somewhat mitigate the weaknesses. What is to come is even more poorly understood than the present. But there is zero justification for claiming that the current technology has achieved general intelligence.

Gary Marcus has been proposing the comprehension of complex discourse as a challenge for AGI since 2014.