Agony first. The agony starts with the fact that Elon Musk has a point; OpenAI, Altman and Brockman *have* changed their mission since he gave them his money, his time and his reputation. Here's what they told the State of California in 2015:

THIRD: This Corporation shall be a nonprofit corporation organized exclusively for charitable and/or educational purposes within the meaning of section 501(c)(3) of the Internal Revenue Code of 1986, as amended, or the corresponding provision of any future United States Internal Revenue law. The specific purpose of this corporation is to provide funding for research, development and distribution of technology related to artificial intelligence. The resulting technology will benefit the public and the corporation will seek to open source technology for the public benefit when applicable. The corporation is not organized for the private gain of any person.

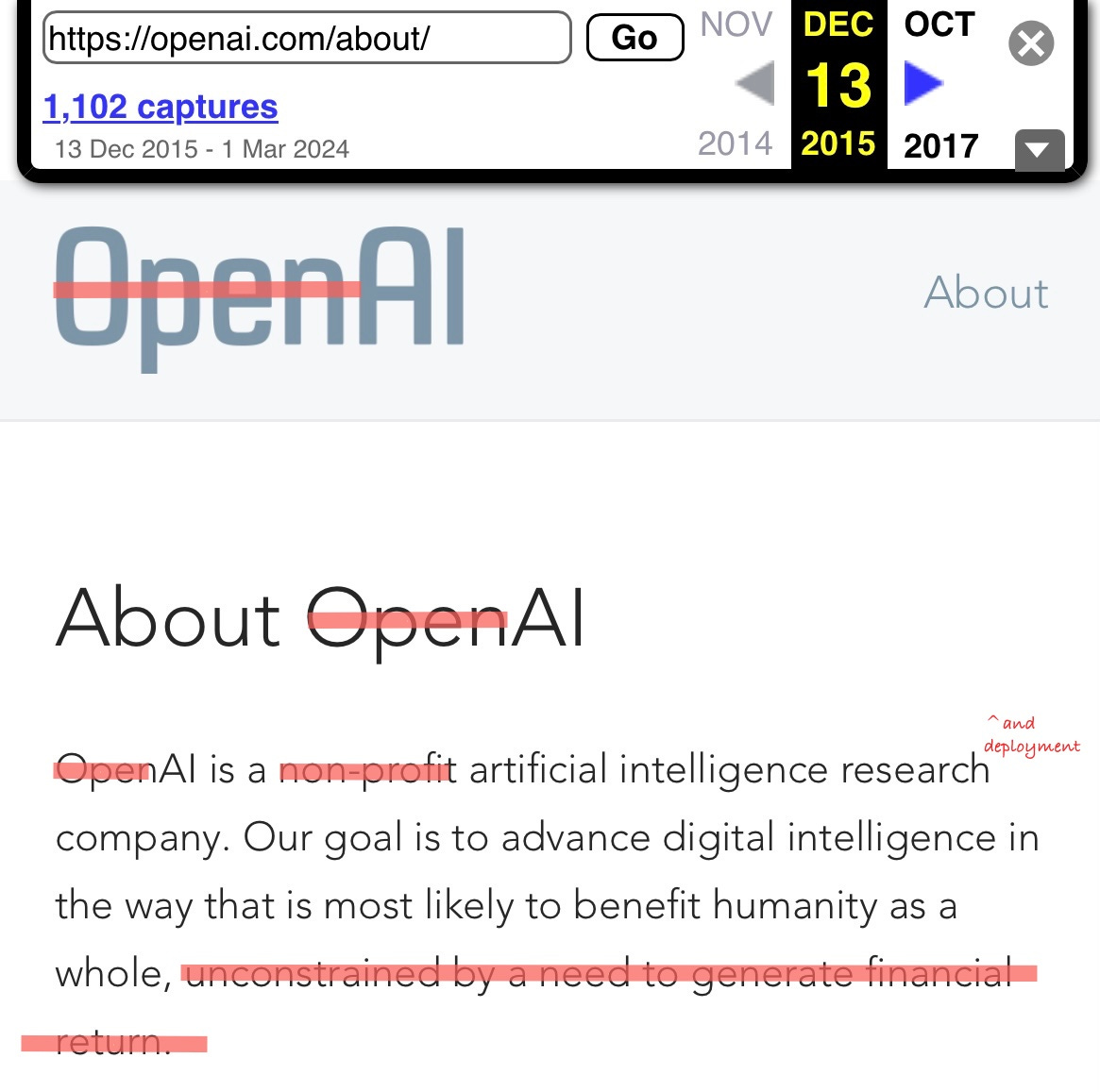

And what they then said in 2015 on their website:

OpenAI is a non-profit artificial intelligence research company. Our goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.

Or what they said as recently as November 2023, in their most recent nonprofit tax filing:

OpenAI’s mission is to build general-purpose artificial intelligence (AI) that safely benefits humanity, unconstrained by a need to generate financial return.

Now compare all that to what they have today on their homepage.

Dropped is any mention of (a) the nonprofit (even though legally the company remains a nonprofit), (b) open source, once central both to their mission and name, and (c) the original phrasing about being "unconstrained by a need to generate financial return."

Musk's lawyers might reasonably argue that those three changes reflect a kind of consciousness of guilt. Certainly they reflect changes to the mission that were not originally envisioned.

The Agony is that Musk mostly did all that he did for OpenAI on faith, presumably faith in Altman in particular.

Courtroom quarrels are likely to focus on documentation of their agreement, rather than the real issue, which is whether OpenAI is still built around helping all of humanity. In my view, humanity has now taken a back seat to money; their choices now seem very directly anchored in a need to generate a massive return, in direct contradiction to their promises to California, to Musk, and to the world.

§

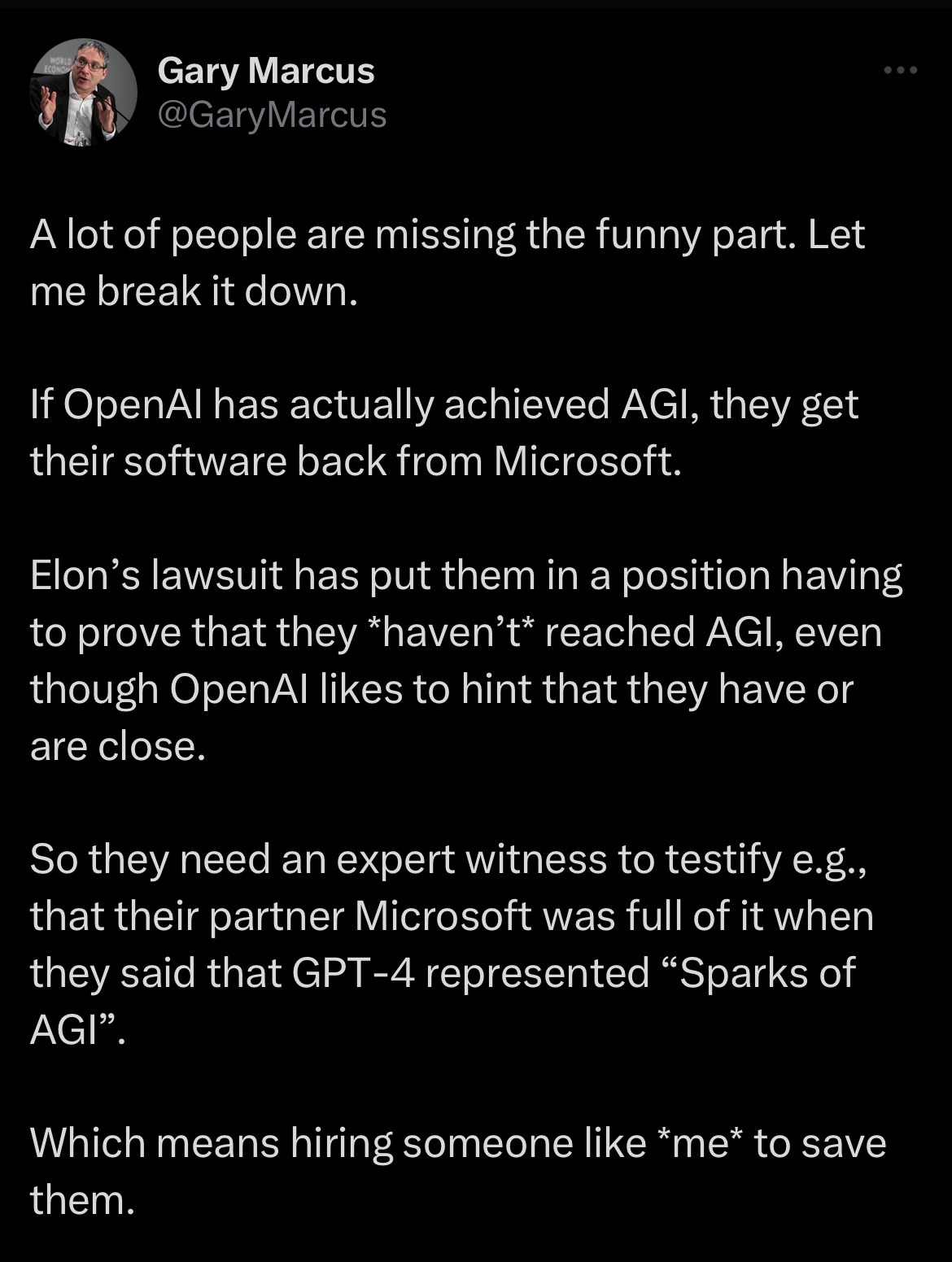

The Irony is a bizarre set of facts I outlined yesterday:

Musk's lawsuit, combined with OpenAI's bizarre arrangement with Microsoft, in which the continuation of an exclusive license rests on whether or not OpenAI has achieved something that is difficult to define (AGI), has left OpenAI in the position of needing skeptical experts to deflate their own hype. As Elon himself put it in reply to my tweet:

§

The lawsuit isn't about money; Musk isn't asking for any. It's about honor, and about OpenAI's mission. He doesn’t want a refund; he wants OpenAI to be what it was supposed to be.

I wrote to Musk about this last night, riffing on John Lennon, "All you are saying is give the Original Mission a chance…"

Musk immediately wrote back and agreed.

The mission—that is what this lawsuit is about.

§

We all want courts to administer justice, but in the end laws may or may not be enough to get OpenAI to honor its initial promises.

Humanity stands to lose if the lawsuit fails.

§

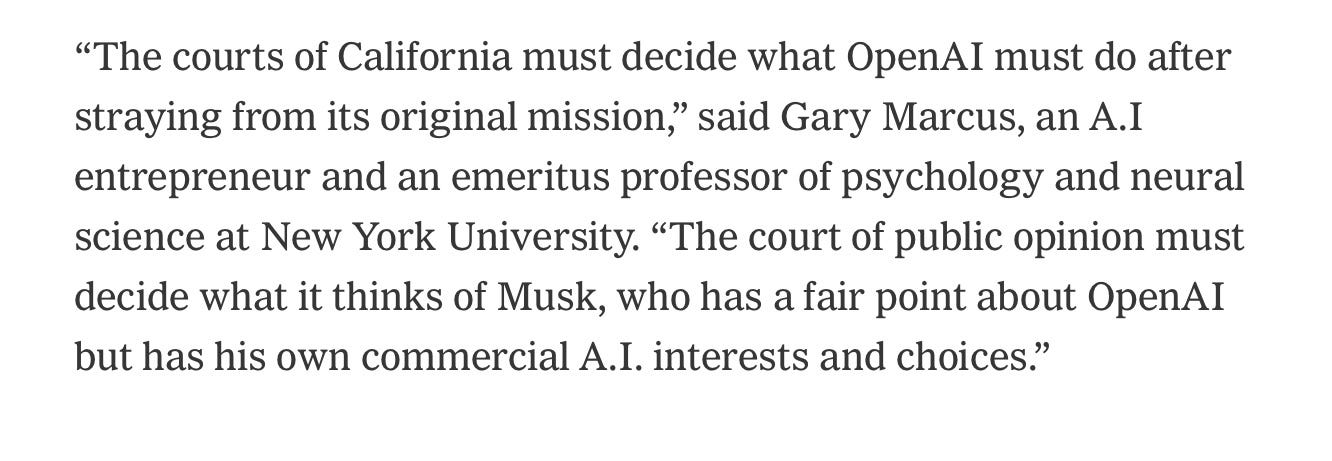

Musk has complicated motives, to be sure. The very filing of the suit has angered many. I often disagree with him about many things. The optics on the suit are terrible. As I said to the NYT a couple days ago:

Whatever the current optics, the past is actually pretty clear. Musk has been concerned about AI risk at least since 2014. In the early days, long before he formed a competitor, he donated his money and put his reputation on the line, in order to help OpenAI with its original mission. The entity strayed from that mission.

Can anyone really blame him for wanting OpenAI to return to what it was?

I honestly think the world would be a better place if it did.

Gary Marcus doesn’t play favorites; he calls them like he sees them. When a LeCun or Musk gets things wrong he says so; if they get them right, he says that, too. Our choices in AI should be about ideas and values, rather than personalities.

On this I wholeheartedly agree with Elon, and you Gary. Incidentally, the key to all of this work many of us are doing is in your post scriptum:

"Gary Marcus doesn’t play favorites; he calls them like he sees them. When a LeCun or Musk gets things wrong he says so; if they get them right, he says that, too. Our choices in AI should be about ideas and values, rather than personalities."

Amen to that! If only the mainstream media could finally understand this...

I just really hope you will be called as the expert witness and we all get to watch it live.