The misguided backlash against California’s SB-1047

Why should AI be exempt from liability and basic requirements around safety?

State Senator Scott Wiener and others in California have proposed a bill, SB-1047, that would build in some modest (to my taste) restraints around AI.

It doesn’t call for a private right of action, which would allow individual citizens to sue AI companies for a wide set of reasons; it doesn’t call for a ban on training or deploying AI, not even the kind of extremely large language models some called for a ban on. It certainly doesn’t call for a ban on research. The most stringent rules don’t even apply to training runs that cost less than $100 million, exempting all or nearly all academic research, and most of what smaller and even medium-sized startups could afford. It doesn’t call for the state to make decisions about what can be deployed, as an FDA-like approval process for drugs does. In many ways, it is much weaker than what Altman and I talked about at the Senate last year.

It does, for example, ask that companies take reasonable measures to ensure that their products are not hazardous and to safety-test their products – something that 7 companies already swore on their pinkies to President Biden to do. And it allows the attorney general to file liability actions in extreme circumstances, which of course is something we take for granted in virtually all other industries from cars to airplanes to medicine.

And it demands a reasonable path to shutdown, if any system starts causing major mayhem in the billions. None of that is unreasonable, and the CEO of OpenAI himself said that we need regulation on AI. Microsoft, too, has professed support for regulation on AI. Alphabet, too, among others.

But now that some actual regulation (somewhat neutered but not nothing) looks as though it could plausibly pass in the home state of Big Tech, the gloves have come off. Lobbyists are out there lobbying, demanding (and getting) meetings with their State Senators, and yesterday both the well-known deep-learning expert Andrew Ng and the industry newspaper The Information came out against 1047 in vigorous terms. God forbid the big tech companies be obliged to fulfill the promises they made to the President.

And (as far as I know) not one of the companies that previously stood up and said they support AI regulation is standing up for this one, or offering anything substantive in its place.

Fine to talk big in front of the Senate, but when push comes to shove, nobody in the industry seems to actually want the kind of regulation they previously hinted at. Remember how Zuckerberg told the Senate he wanted regulation on social media but didn’t really? These guys have their moves down pat.

§

The Information’s op-ed complains that “California’s effort to regulate AI would stifle innovation”, but never really details how. It describes the bill as “sweeping and restrictive” but doesn’t really say which of those sweeping restrictions would be so onerous that they would keep any particular startup from going about its business. The companies have, for example, already mostly pledged to safety-test, and presumably budgeted for that. Will it break them to obligate them to do what they already promised? I see big noise in The Information op-ed, but where’s the beef?

It does mention that the State would spend some money (raised from fees and fines) setting standards, but is that a terrible thing? It complains that really huge models would be subjected to scrutiny, but again the developers have already said they are doing that; shouldn’t we hold them to it? It quotes an industry group as complaining that “new models, which might inject welcome competition into the frontier landscape will face significant regulatory challenges that the dominant players do not” but doesn’t name any specific challenges that the dominant players would be exempt from, and I don’t see any provision in the bill that works that way.

The author also seems quite exercised that

Anyone training a "covered AI model," including startups, academics and open source developers, must certify, under penalty of perjury, that their model will not be used to enable a "hazardous capability" in the future, including by others. Developers who don't submit this certification must provide annual assurances to the Frontier Model Division, again under the penalty of perjury, that they will mitigate risks of the model or any model built on top of it.

But is it really so terrible to say that you should either (a) warrant that you don’t think anything bad is going to happen or (b) do the best you can to try to reduce how much bad stuff happens? Hazardous is defined here as half a billion dollars in damage; should we give the AI industry a free pass no matter how much harm might be done? Why them, when we don’t do that for anyone else?

§

AI entrepreneur and investor Andrew Ng has a long blog post that is also making the rounds on social media. Quoting a bit from his brief summary on X, Ng complains that:

There are many things wrong with this bill, but I’d like to focus here on just one: It defines an unreasonable “hazardous capability” designation that may make builders of large AI models liable if someone uses their models to do something that exceeds the bill’s definition of harm (such as causing $500 million in damage). That is practically impossible for any AI builder to ensure. If the bill is passed in its present form, it will stifle AI model builders, especially open source developers.

I see only two problems with this:

He seems perfectly happy to have society pick up the tab, even for harms causing half a billion dollars in damage, unashamedly outsourcing essentially all potential negative externalities to society, just like the toxic-chemical-spewing factories of yore. (I’m gonna call my insurance company and see if they can pick up the first half billion dollars of anything stupid I do.)

He doesn’t specify which actual restrictions in the proposed bill would “stifle AI model builders”. Filing annual reports? Installing an off switch? Doing safety testing? Why should we exempt open-source developers from these requirements if they are not in a position to certify that their wares won’t cause massive harm?

Weird the way a bunch of people are calling for Universal Basic Income but freak out at the first sign that they might need to spend a few dollars on paperwork and internal testing.

§

As I wrote last night on X, a lot of people talking about regulations and CA SB-1047 don’t seem to know the slightest thing about how the actual world works.

Take for example explosives, which are very much a dual-use technology. We don’t say, “go ahead, manufacture whatever the hell you want, and sell it to whoever, and god bless you.”

We have rules. Rules that require basic care in how things are manufactured, how they are distributed, who they are sold to, what needs to be reported, etc.

Yet people like Ng seem to want to have an absolute free pass for AI, essentially no matter how much damage it might cause. (E.g., as noted above, Ng is apparently objecting to having any kind of safety testing being mandatory, even if a piece of AI might plausibly cause half a billion dollars’ worth of damage.)

Some in the tech world also seem to think that the world as we know it will end, if developers bear even a slight responsibility for licensing, knowing customers, etc.

This is nonsense.

Every company that would be subject to the stronger set of regulations is valued in the billions. The laws in question pertain to $100M+ training runs.

Anyone who can afford that can easily fill out some paperwork and run a few tests, just like I can pay a few bucks to get a driver’s license. It’s the least they can do.

“But noooooo!,” as they used to say on Saturday Night Live. Big tech seemingly will not be content until they have squeezed out every last cent of profit, whilst socializing the costs of every last risk.

Don’t let them get away with it.

We should be making SB-1047 stronger, not weaker.

§

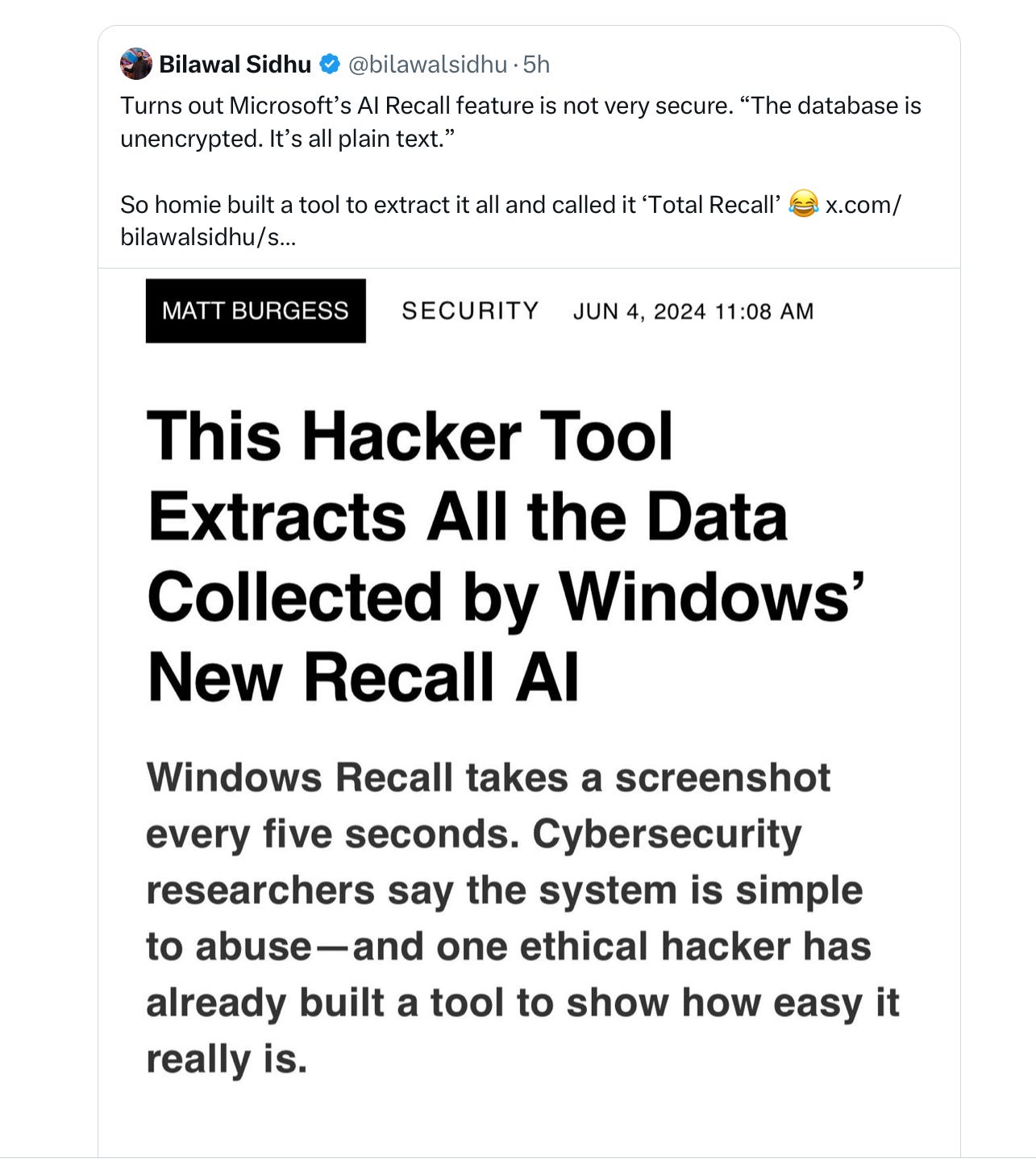

One last thought for the road. The alternative to regulation is self-regulation; Big Tech’s overwhelming message is “Trust Us”. Should we? Consider this example from the last couple weeks: Microsoft is unveiling a new AI-powered product called Microsoft Recall that will take screenshots every five minutes, allegedly stored only locally.

The whole thing instantly made me 1984-level nauseous.

At the time, March 24, I tweeted:

Two weeks later, it has already come out that the database that indexes the whole thing is in plain text (except on systems that are explicitly set to encrypt everything). That’s bananas. Keystone Kops level security.

These guys? We are supposed to trust them?

Gary Marcus, author of the forthcoming book Taming Silicon Valley, hopes that people will eventually recognize that unregulated AI could be as foolish as — or more foolish than — unregulated explosives.

Asking any industry to police itself is lunacy pushed by industries: we have plenty of examples from chemicals, oil, social media, nanotech, etc. I recall reading that only 10,000 of the 150,000 chemicals used have been safety-tested.

Even Pharma and Food skirt the rules as often as possible, and they are directly life-threatening. And they do so even when better-regulated as in Europe.

Greed is powerful :(

The only kind of regulation they'll support is that which either widens their moat or straight up hands them cash. Lobbying used to be a crime in this state and it should be again. Criminalizing the lobbyist is the kind of progress I'd love to see. Let the people elect leaders and let the leaders lead. While I'm eyeing that pie in the sky, how about a little public campaign finance as well. Now where is that llama tutorial?