The need for a President that speaks AI natively

Last night was a travesty, but that is just the beginning of our problems

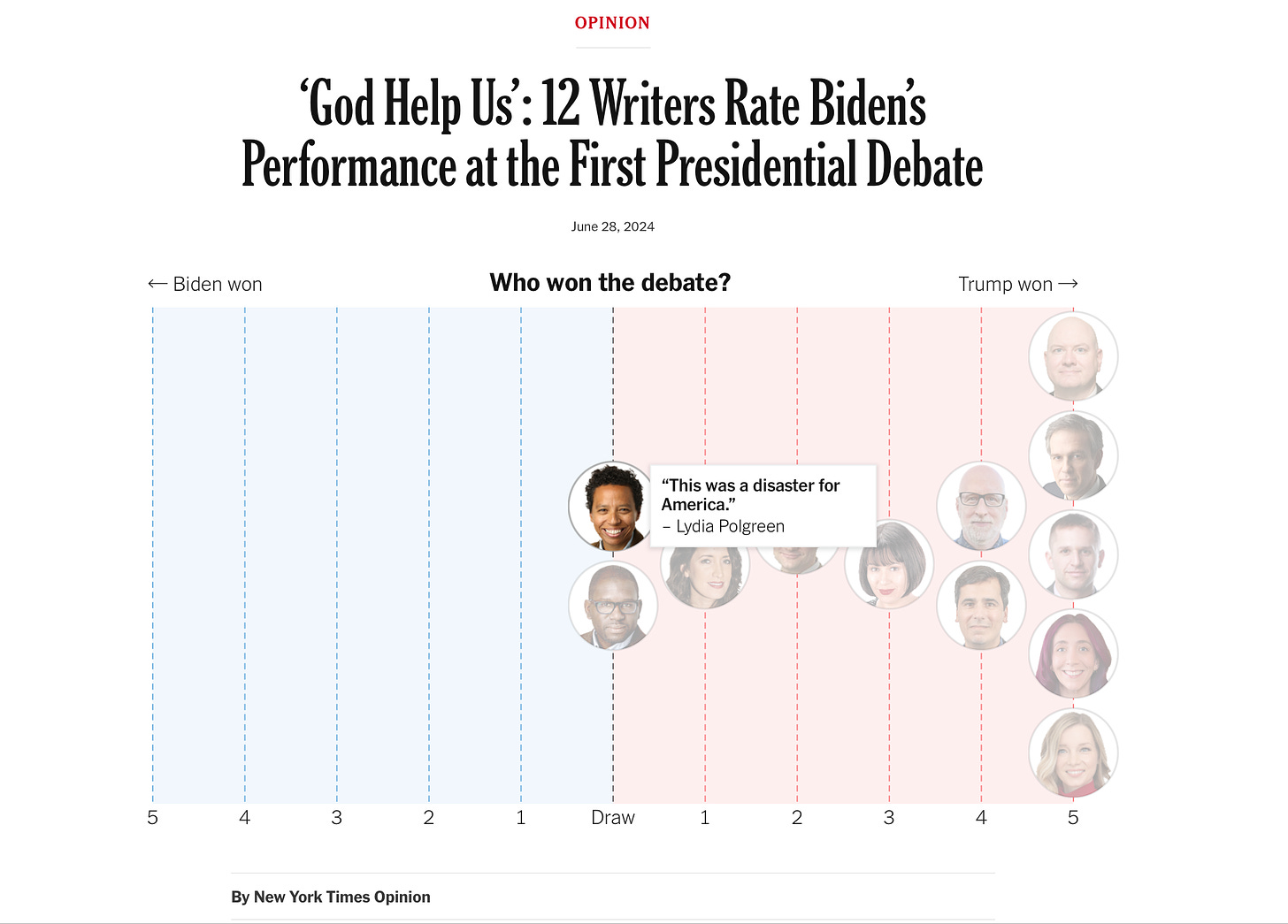

Former President (and convicted felon) Donald Trump lied like an LLM last night, but still won the debate, because Biden’s delivery was so weak:

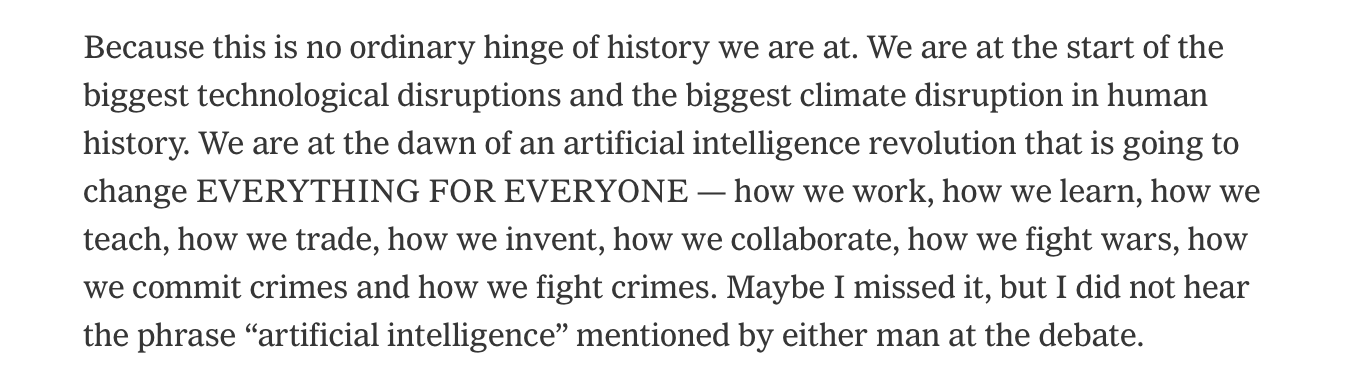

As Tom Friedman noted this morning in The New York Times, neither president even mentioned AI, which was a travesty of a different sort:

There was far more discussion of golf than AI.

§

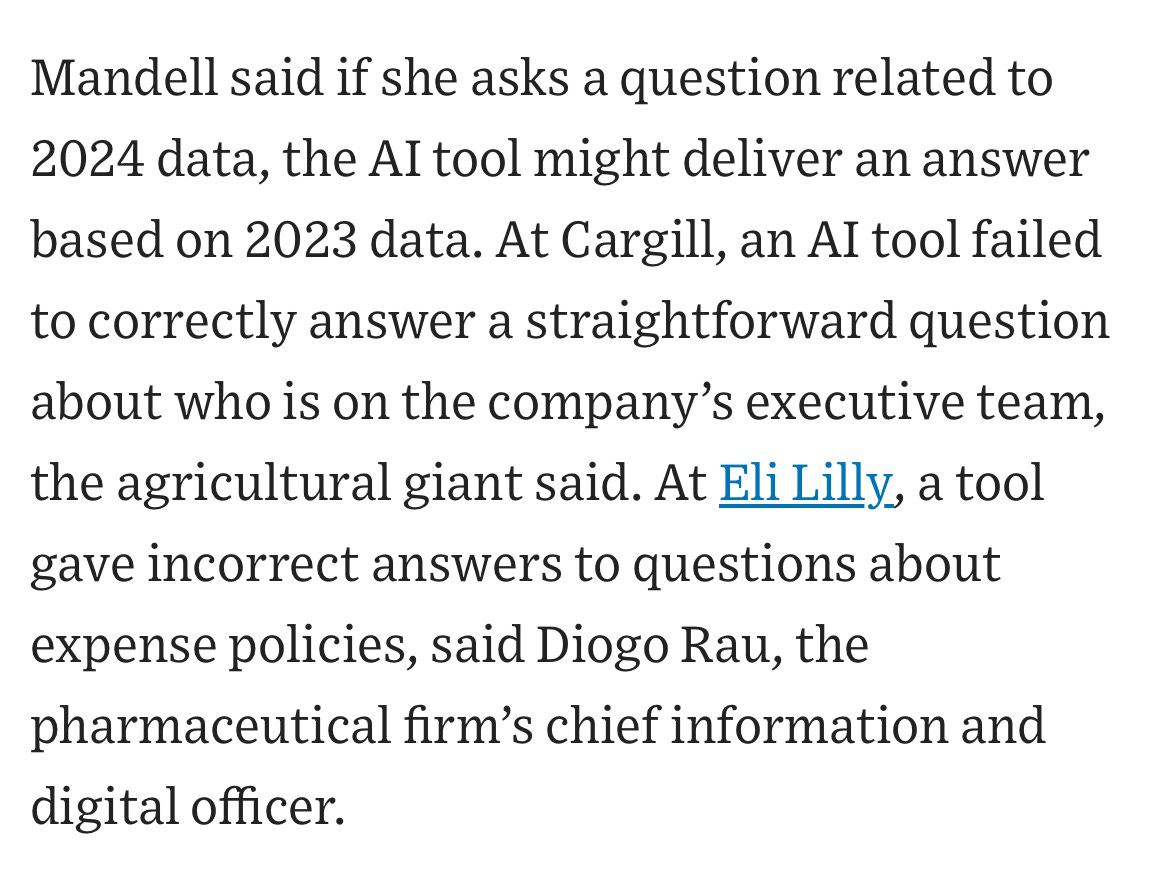

If you have read almost anything I have written about AI, you know that I don’t think AI is going to change “EVERYTHING FOR EVERYONE” tomorrow. And more or less everything I have written here (and elsewhere) for years is turning out to be true: Generative AI does in fact (still) have enormous limitations, just as I anticipated. It still hallucinates, it still makes boneheaded mistakes, and the whole Generative AI thing is looking more and more like a dud. OpenAI may in fact yet turn out to be the WeWork of AI. Scaling is not enough. Returns are starting to diminish. We do need new approaches to AI. Businesses are finally finding this out, too. (Headline in the WSJ: “AI Work Assistants Need a Lot of Handholding”, because they are still riddled with errors.)

And yet every word that Friedman says above is also true: AI is going to change everything, if not tomorrow, then sometime over the next 5 to 20 years, in some ways for good and in others for bad. We cannot afford to elect a President in 2024 who doesn’t fully grasp this. The markers that our next President puts down (about how much liability and responsibility companies will have, and about how the government keeps up with a rapidly changing technology) will have a lasting impact.

§

The need for a President who speaks AI natively is all the more pressing because there has recently been a subtle shift in how the big tech companies are hyping AI. A couple of years ago they were mostly lying about (or at least exaggerating) what their current or near-term products could do.

Now, the truth is out. The WSJ article, for example, is brutal, detailing a wide range of common errors, from hallucinations to failures in reasoning about which data sources are relevant to particular problems. (Honestly, “handholding” is putting it a bit too charitably, and way too anthropomorphically, but that’s a story for another day). A small sample (there is more in the article):

With the truth that LLMs can’t be trusted now becoming more widely known, the big AI leaders have switched their focus to long-term promises that are impossible to verify and even more grandiose than “we can help your business in unprecedented ways” (which has turned out to be mostly untrue). Altman, for example, promised a week ago at Aspen Ideas that AI would “discover all of physics”, a claim that is neither coherent nor plausible anytime in the next couple of decades. Microsoft’s AI CEO Mustafa Suleyman confidently told the same crowd that the cost of knowledge production would go to zero in 15 years, again extrapolating wildly beyond what is currently possible. Anthropic’s Dario Amodei is fantasizing about AI as intelligent as a Nobel Prize-winning scientist, accelerating discoveries in biology and curing diseases, when his company’s products still often fail at basic reasoning. Not to be outdone, Altman offhandedly told Aspen that AI could “double the world’s GDP”, without a shred of evidence. As Elizabeth Holmes learned long ago, this sort of techno-utopianism plays well with audiences. Combined with confident delivery, it often fools a lot of the people a lot of the time. But that doesn’t make it true.

§

We need a President who can recognize when corporate leaders are promising things far beyond what is currently realistic, and who can sort truth from bullshit, in order to develop AI policies that are grounded in reality. We also need a President who can stand up to big tech and resist regulatory capture. And we need a President who can get Congress (which has thus far dithered) to recognize the true urgency of the moment, since Executive Orders alone are not enough. And above all else, we need a President who understands and appreciates science.

Last night wasn’t promising.

Gary Marcus thinks we have maybe one shot to get AI policy right in the US, and that we aren’t off to a great start. His forthcoming book Taming Silicon Valley lays out a blueprint for what we need.
