For many decades, part of the premise behind AI was that artificial intelligence should take inspiration from natural intelligence. John McCarthy, one of the co-founders of AI, wrote groundbreaking papers on why AI needed common sense; Marvin Minsky, another of the field’s co-founders, wrote a book scouring the human mind for inspiration and for clues about how to build a better AI. Herb Simon won a Nobel Prize for behavioral economics. One of his key books was called Models of Thought, which aimed to explain how “Newly developed computer languages express theories of mental processes, so that computers can then simulate the predicted human behavior.”

A large fraction of current AI researchers, or at least those currently in power, don’t (so far as I can tell) give a damn about any of this. Instead, the current focus is on what I will call (with thanks to Naveen Rao for the term) Alt Intelligence.

Alt Intelligence isn’t about building machines that solve problems in ways that have to do with human intelligence. It’s about using massive amounts of data – often derived from human behavior – as a substitute for intelligence. Right now, the predominant strand of work within Alt Intelligence is the idea of scaling: the notion that the bigger the system, the closer we come to true intelligence, maybe even consciousness.

There is nothing new, per se, about studying Alt Intelligence, but the hubris associated with it is.

I’ve seen signs for a while, in the dismissiveness with which the current AI superstars, and indeed vast segments of the whole field of AI, treat human cognition, ignoring and even ridiculing scholars in such fields as linguistics, cognitive psychology, anthropology, and philosophy.

But this morning I woke to a new reification, a Twitter thread that expresses, out loud, the Alt Intelligence creed, from Nando de Freitas, a brilliant high-level executive at DeepMind, Alphabet’s rightly-venerated AI wing, in a declaration that AI is “all about scale now.” Indeed, in his mind (perhaps deliberately expressed with vigor to be provocative), the harder challenges in AI are already solved. “The Game is Over!”, he declares:

There’s nothing wrong, per se, with pursuing Alt Intelligence.

Alt Intelligence represents an intuition (or more properly, a family of intuitions) about how to build intelligent systems, and since nobody yet knows how to build any kind of system that matches the flexibility and resourcefulness of human intelligence, it’s certainly fair game for people to pursue multiple different hypotheses about how to get there. Nando de Freitas is about as in-your-face as possible about defending that hypothesis, which I will refer to as Scaling-Uber-Alles.

Of course, that name, Scaling-Uber-Alles, is not entirely fair. De Freitas knows full well (as I will discuss below) that you can’t just make the models bigger and hope for success. People have been doing a lot of scaling lately, and have achieved some great successes, but have also run into some roadblocks. Let’s take a dose of reality before going further into how de Freitas faces facts.

A Dose of Reality

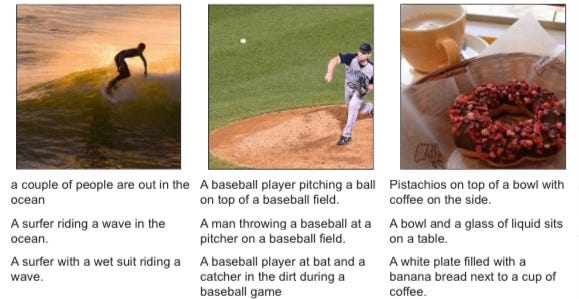

Systems like DALL-E 2, GPT-3, Flamingo, and Gato appear to be mind-blowing, but nobody who has looked at them carefully would confuse them for human intelligence.

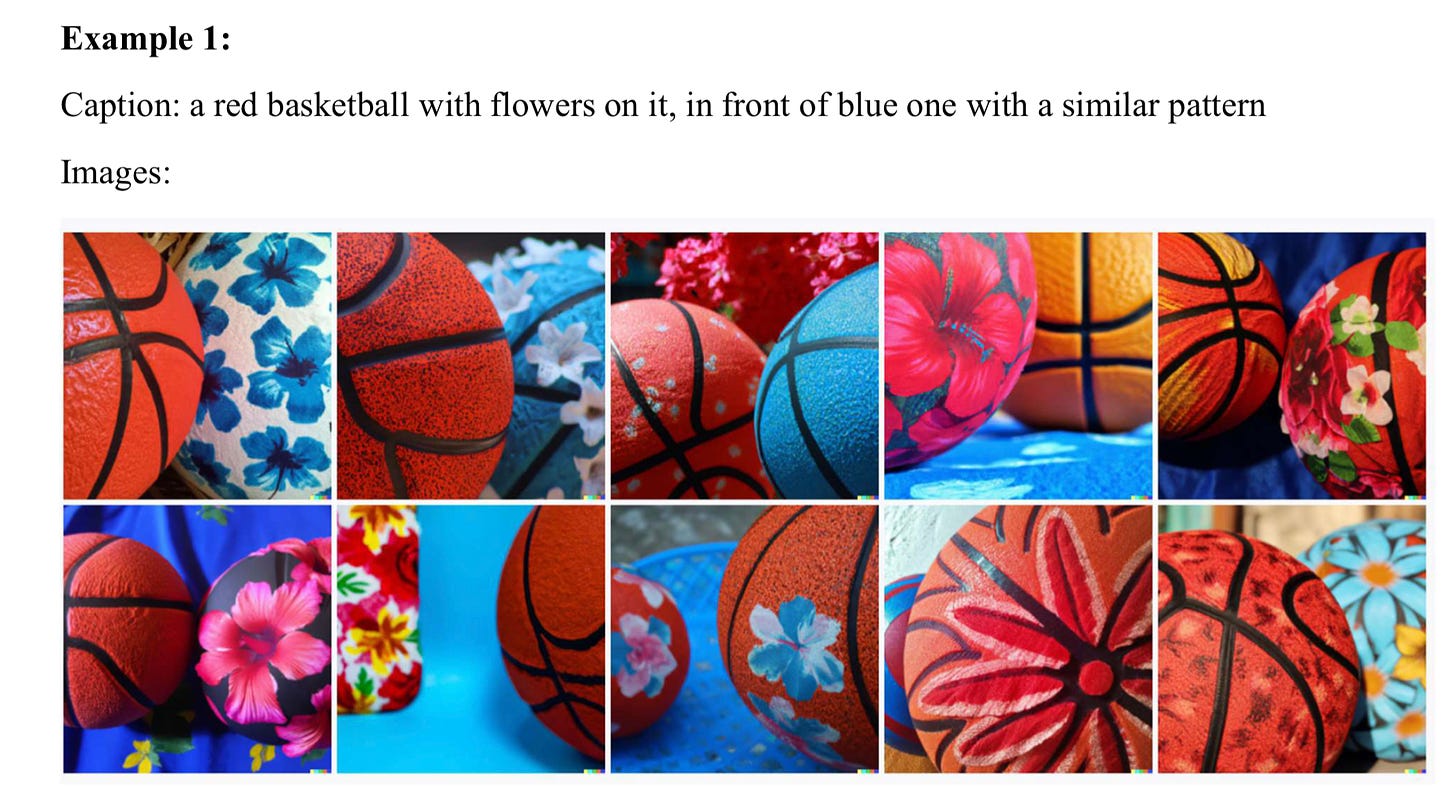

DALL-E 2, for example, can often create fantastic artwork from verbal descriptions like “an astronaut riding a horse in a photorealistic style”:

But it’s also pretty prone to surprising stumbles, like this uncomprehending reply to “a red cube on top of a blue cube”. [DALL-E’s responses, created by a network called unCLIP, are on the left of the figure; interestingly a predecessor model, shown on the right, actually does better on this particular item.]

When Ernest Davis, Scott Aaronson, and I probed in a bit more detail, we found a number of similar examples:

Similarly, the ostensibly amazing Flamingo has (not unrelated) bugs of its own, captured in a candid and important thread by DeepMind’s Murray Shanahan, with further examples from Flamingo’s lead author Jean-Baptiste Alayrac. For example, Shanahan confronted Flamingo with this image:

and wound up having this discomprehending dialogue:

Um no.

DeepMind’s newest star, just unveiled, Gato, is capable of cross-modal feats never seen before in AI, but still, when you look in the fine print, remains stuck in the same land of unreliability, moments of brilliance coupled with absolute discomprehension:

Of course, it’s not uncommon for defenders of deep learning to make the reasonable point that humans make errors, too.

But anyone who is candid will recognize that these kinds of errors reveal that something is, for now, deeply amiss. If either of my children routinely made errors like these, I would, no exaggeration, drop everything else I am doing, and bring them to the neurologist, immediately.

So let’s be honest: scaling hasn’t worked yet. But it might, or so de Freitas’s theory—a clear expression of the Zeitgeist—goes.

Scaling-Uber-Alles

So how does de Freitas reconcile reality with ambition? Literally billions of dollars have been poured into Transformers, the underlying technology that drives GPT-3, Gato, and so many others; training data sets have expanded from megabytes to gigabytes, parameter counts from millions to trillions. And yet the discomprehending errors, well-documented in numerous works since 1988, remain.

To some (such as myself), the abiding problem of discomprehension might—despite the immense progress—signal the need for a fundamental rethink, such as the one that Davis and I offered in our book Rebooting AI.

But not to de Freitas (nor to many others in the field—I don’t mean to single him out; I just think he has given prominent and explicit voice to what many are thinking).

In the opening thread he elaborates much of his view about reconciling reality with current troubles, “It’s about making these models bigger, safer, compute efficient, faster at sampling, smarter memory, more modalities, INNOVATIVE DATA, on/offline”. Critically, not a word (except, perhaps “smarter memory”) is given to any idea from cognitive psychology, linguistics, or philosophy.

The rest of de Freitas’s thread follows suit:

This is, again, a pure statement of Scaling-Über-Alles, and marks its target: the ambition here is not just better AI, but AGI.

AGI, artificial general intelligence, is the community’s shorthand for AI that is at least as good, as resourceful, and as wide-ranging as human intelligence. The signature successes of narrow artificial intelligence, and indeed of Alt Intelligence writ large, have been games like chess (Deep Blue owed nothing to human intelligence) and Go (AlphaGo similarly owed little to human intelligence). De Freitas has far more ambitious goals in mind, and, to his credit, he’s upfront about them.

The means to his end? Again, de Freitas’s emphasis is mainly on technical tools for accommodating bigger data sets. The idea that other ideas, e.g., from philosophy or cognitive science, might be important is dismissed. (“Philosophy about symbols isn’t [needed]” is perhaps a rebuttal to my long-standing campaign to integrate symbol-manipulation into cognitive science and AI, recently resumed in Nautilus Magazine, though the argument is not fully spelled out. Responding briefly: His statement that “[neural] nets have no issue creating [symbols] and manipulating them” misses both history and reality. The history missed is the fact that many neural net enthusiasts have argued against symbols for decades, and the reality missed is the above-documented fact that symbolic descriptions like “red cube on blue cube” still elude the state of the art in 2022.)

De Freitas’s Twitter manifesto closes with an approving reference to Rich Sutton’s famous white paper, The Bitter Lesson:

Sutton’s argument is that the only thing that has led to advances in AI is more data, computed over more effectively. Sutton is, in my view, only half right, almost correct with his account of the past, but dubious with his inductive prediction about the future.

So far, in most domains (not all, to be sure) Big Data has triumphed (for now) over careful knowledge engineering. But,

Virtually all of the world’s software, from web browsers to spreadsheets to word processors, still depends on knowledge engineering, and Sutton sells that short. To take one example, Sumit Gulwani’s brilliant Flash Fill feature is a one-trial learning system that is staggeringly useful, and not at all founded on the premise of large data, but rather on classical programming techniques. I don’t think any pure deep learning/big data system can match it.

Virtually none of the critical problems for AI that cognitive scientists such as Steve Pinker, Judea Pearl, the late Jerry Fodor, and myself have been pointing to for decades is actually solved yet. Yes, machines can play games really well, and deep learning has made massive contributions to domains like speech recognition. But no current AI is remotely close to being able to read an arbitrary text with enough comprehension to build a model of what a speaker is saying and intends to accomplish, nor (a la the Star Trek Computer) to reason about an arbitrary problem and produce a cohesive response. We are in early days in AI.

Success on a few problems with a particular strategy in no way guarantees that we can solve all problems in a similar way. It is sheer inductive folly to imagine otherwise, particularly when the failure modes (unreliability, bizarre errors, failures in compositionality, and discomprehension) have not changed since Fodor and Pinker pointed them out (in separate articles) in 1988.

In closing

Let me close by saying that I am heartened to see that, thankfully, Scaling-Über-Alles isn’t fully consensus yet, even at DeepMind:

I am fully with Murray Shanahan when he writes “I see very little in Gato to suggest that scaling alone will get us to human-level generalisation.”

Let us all encourage a field that is open-minded enough to work in multiple directions, without prematurely dismissing ideas that happen to be not yet fully developed. It may just be that the best path to artificial (general) intelligence isn’t through Alt Intelligence, after all.

As I have written, I am fine with thinking of Gato as an “Alt Intelligence”— an interesting exploration in alternative ways to build intelligence—but we need to take it in context: it doesn’t work like the brain, it doesn’t learn like a child, it doesn’t understand language, it doesn’t align with human values, and it can’t be trusted with mission-critical tasks.

It may well be better than anything else we currently have, but the fact that it still doesn’t really work, even after all the immense investments that have been made in it, should give us pause.

And really, it should lead us back to where the founders of AI started. AI should certainly not be a slavish replica of human intelligence (which after all is flawed in its own ways, saddled with lousy memory and cognitive bias). But it should look to human (and animal) cognition for clues. No, the Wright Brothers didn’t mimic birds, but they learned something from avian flight control. Knowing what to borrow and what not to borrow is likely to be more than half the battle.

The bottom line is this, something that AI once cherished but has now forgotten: If we are to build AGI, we are going to need to learn something from humans, how they reason and understand the physical world, and how they represent and acquire language and complex concepts.

It is sheer hubris to believe otherwise.

P.S. Please subscribe if you’d like to read more no-bullshit analysis of the current state of AI.

Gary, is there any work addressing the issue of schizophrenic behaviors and these models? In other words, judging the DALL-E misread as a delusion (hallucination).

PS you're wonderful and you will get your due

Did you ever learn something from a person who agreed with you?

How is stuffing a computer with existing data any different?

Yesterday I sat at the birthplace of Wilbur Wright and watched 2 pairs of vultures gracefully balanced along the unseen currents of air.

When Wilbur and Orville watched birds in flight - they didn't think about making wings out of feathers and wax...

They saw the balance and control - they connected that to the bicycles they built. They thought about the hours of practice required to become proficient and competitive riding a bicycle. They understood intuitively the subtle design problems that would have to be resolved - simple things, such as the slight forward bend in the front fork that made a bicycle stable.

They knew that for a man to fly - he would need hours of practice, balancing on the air. The exact thing that had eluded all the other brilliant inventors.

One last question - what will we do when our AI disagrees with us?

Thanks for reading.