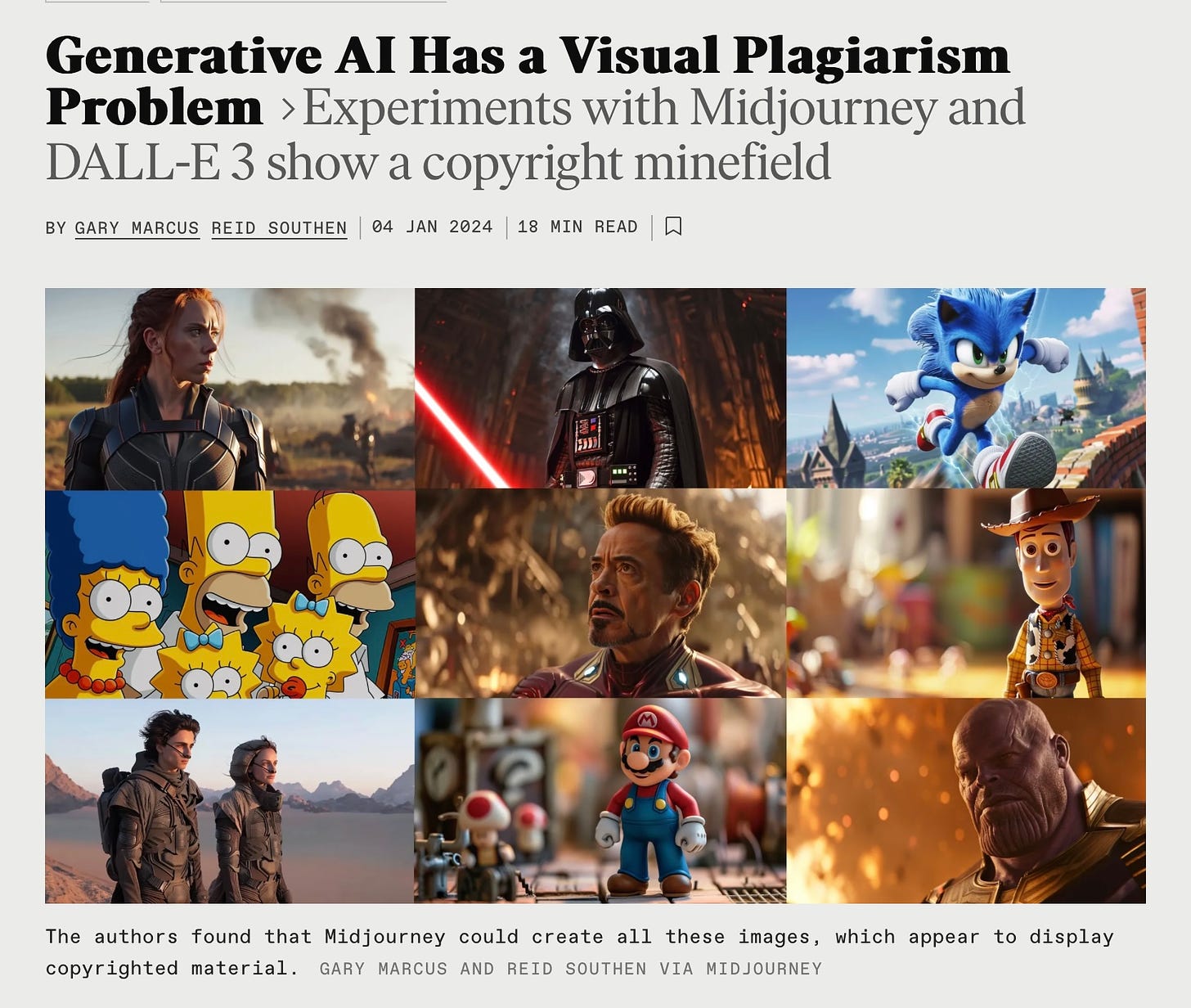

Reid Southen and I continued our experiments, and wrote a long paper about what we found, and why they may pose serious problems both for users and developers that are difficult to fix.

You can find it here: https://spectrum.ieee.org/midjourney-copyright

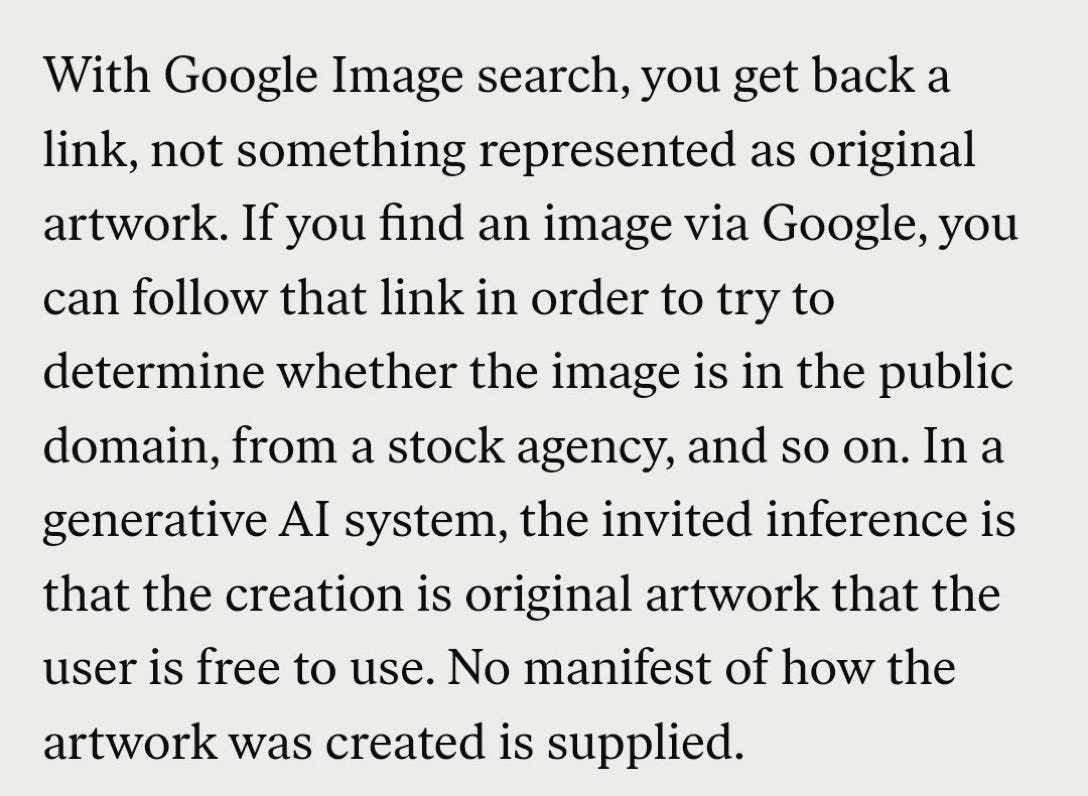

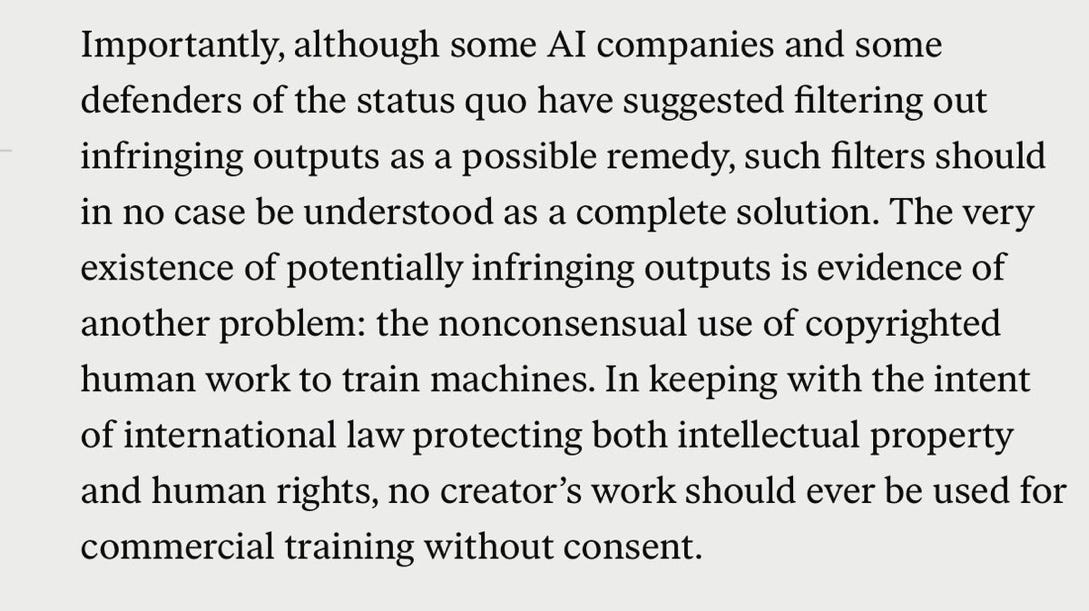

A couple brief excerpts:

and

There’s a lot more in the paper.

Lastly, Avram Piltch from Tom‘s Hardware has already replicated the gist of our results: https://www.tomshardware.com/tech-industry/artificial-intelligence/ai-image-generators-output-copyrighted-characters

Gary Marcus was honored to help Reid Southen in this investigation.

Interesting. Goes along the lines of what I was saying in the other comments threads from the previous 2 articles, that no matter how you try and spin it as using only links or URLs or whatever, the fact is that an image or a text is still used at the very beginning as input. How to solve this, other than getting consensus for use? We'd have to re-conceptualize what it means to digest and "use" material, or try and grant some kind of "thinking" or sentience status to AIs, such that they are free to consume copyrighted material and "think" about them and output about stuff, like we do as free human agents (that are genuinely creative) ... which is a distortion of what's really going on, which is a mechanical system created by humans as a tool. And this all strikes at the very core of what is at issue and what Gary writes about: what is "intelligence", and what is AI and what is the goal, how to use them (properly), and how to get there?

The summary of your paper is very clear and relevant in my opinion. It suits well my way of thinking on the subject. There are true solutions to avoid genAI plagiarism but none of them will be easily accepted by companies providing genAI tools and most of them will have to be enforced by the law (new regulations or lawsuits).

1) AI driven systems could be trained on public domain content only or on content for which a permission under a specific license was granted. That means a huge step back, resetting all training data bases and reeducating the systems.

2) Any result generated by an AI system could be assorted with a list of references (works) used for producing this specific output. Not possible with present LLMs if I well understood, but possible in foreseeable future if the purely LLM approach is changed to a more inferential and rigorous one. That means investing further in a new technology.

3) Every content generated by an AI system which is a based on no referenced data could be accompanied by a clear written liability warning for the user stating that this content is intended for strictly personal, no professional and no commercial use. Such a restriction will surely refrain some customers from using the system.

All these solutions are not straightforward to apply now when the AI systems have already been commercialized but what is going on presently is just plain not respecting of intellectual property principles.