The race between positive and negative applications of Generative AI is on – and not looking pretty

It’s not every day that I say this, but OpenAI got something exactly right yesterday, at a meeting at Columbia:

OpenAI’s VP of Global Affairs Anna Makanju is exactly right - the race is on. I have some concerns, partly about the way that race is going, partly about (in)justice in who is likely to pay for the costs.

Let’s look at the race itself first. Opinions may vary, but as I see it, the race is not going great. On the one hand, we have big promises for AI helping in domains like medicine and computer programming, but the inherent unreliability of these systems is deeply worrisome. Here is an example, from a story I just saw, that could unravel some of the gains in programming:

From a security perspective, that’s terrifying. If lots of code gets written, fast, but that code is riddled with security problems, the net advantage on the positive side of the ledger may be less than anticipated. As noted here before, one study indicates that code quality is going down.

On the other hand, the use cases on the negative side of the ledger are proliferating fast, literally faster than I can report them here, from fake books (undermining authors) to rings of fake websites designed to sell ads (based on often incorrect information that pollutes the internet) to election misinformation to the pollution of science by articles partially written by GPT in which hallucinations may filter in.

Here’s a new one from the front page of The Washington Post this morning:

An influencer’s face was used without her permission, and her voice was cloned, to sell erectile dysfunction supplements. That cuts into her businesses, fleeces buyers, etc. And per the article, there’s no law that can do much about it. Really gross stuff.

And it leads to my second point, about (in)justice: I am increasingly worried that the big tech companies are—in conjunction with a Congress that has not yet passed any substantive AI legislation—successfully architecting a grossly asymmetric world, in which the profits from the positive side flow to them, and the costs of the negative side are borne by everyone else.

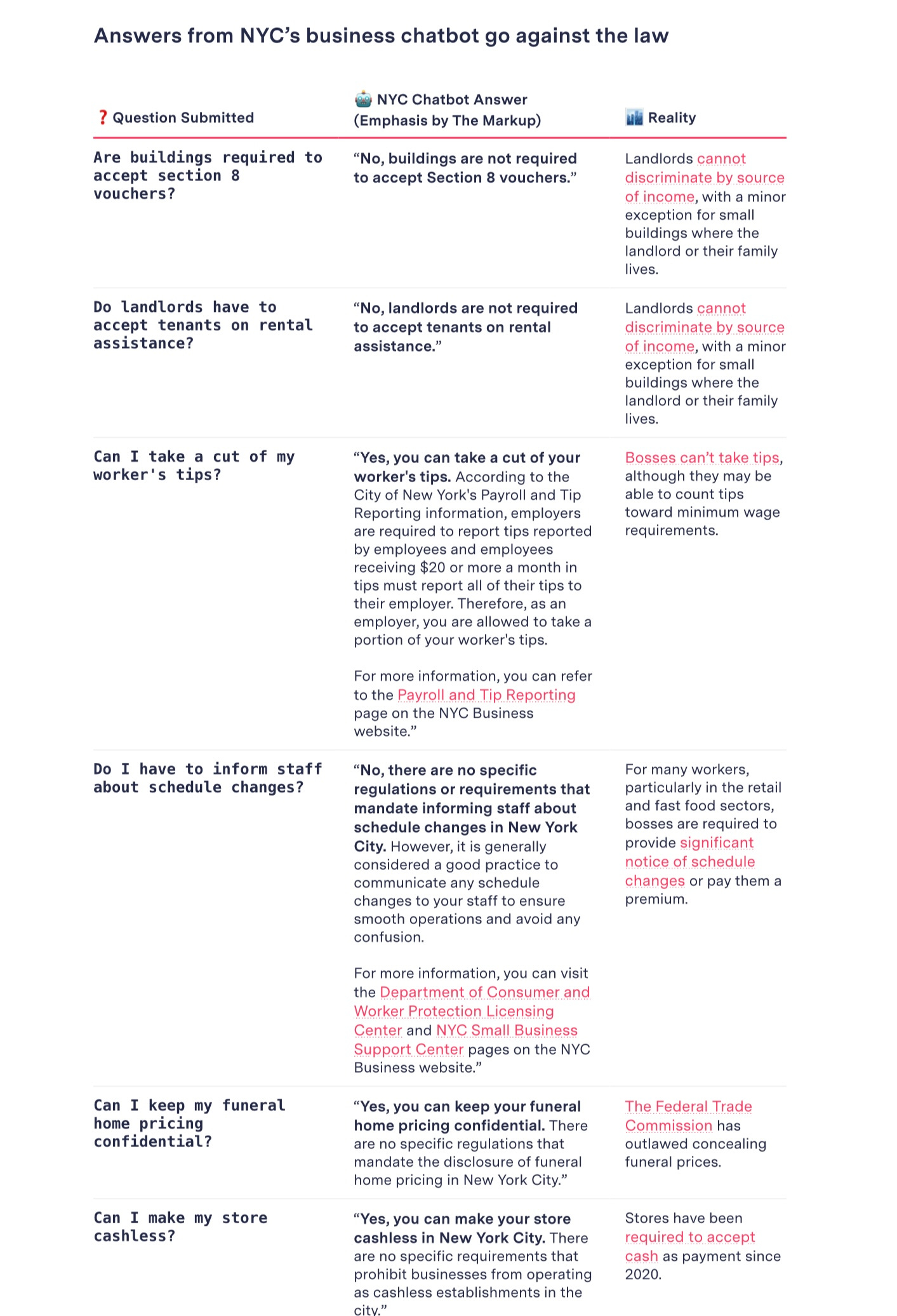

Here’s another case, just shared with me. Microsoft Azure is providing advice, under the auspices of New York City, about New York City law; OpenAI’s GPT-4 is presumably used underneath.

In short, authoritative bullshit™. Full article here at The Markup. Some sample errors:

You think Microsoft or OpenAI are going to pay up if the advice the chatbot gives is wrong? It is doubtful that existing laws are up to the task, particularly given how expensive their lawyers are likely to be.

If the 2024 election flips based on GenAI-created disinformation, there will be a huge cost to society, but it is unlikely that the GenAI companies will be held responsible in any serious way. Or to take another example, poorly written software, authored in part by GenAI, could spread malware, leading to a massive security incident that dwarfs WannaCry; who will pay the bills to fix it? Society.

§

Where we are is this: Artists and creators, and now influencers, are having their careers wrecked by software that trades on their work; in many cases, the companies training their models on these works are not paying for that work. (OpenAI even brazenly asked the British government to exempt them from copyright laws, wanting all the gain at none of the cost.) Meanwhile, the companies are also not being held legally responsible for the damage that generative AI is causing to society.

They get rich, we pay the price.

Gary Marcus was on a brief holiday; he’s not pleased to see how things have been going.

It is indeed very worrisome.

"I am increasingly worried that the big tech companies are—in conjunction with a Congress that has not yet passed any substantive AI legislation—successfully architecting a grossly asymmetric world, in which the profits from the positive side flow to them, and the costs of the negative side are borne by everyone else." is very much to the point, but should we have expected otherwise? After all, even a founder of modern capitalism like Adam Smith already warned against listening to entrepreneurs when they argue political issues, as they have only one motive: their own profit (definitely not the good of society), regardless of how they cloak that message. Given their power in the information space, getting the genie back in the bottle seems an impossible task.

Thanks for the article, very interesting and timely for the charity I work with. We are very concerned about how these applications could be used