The Sam Altman Playbook

Fear, The Denial of Uncertainties, and Hype

How do you convince the world that your ideas and business might ultimately be worth $7 trillion? Partly by getting some great results, partly by speculating about unlimited potential, and partly by downplaying and ignoring inconvenient truths.

Sam Altman is on a tour to raise money and raise valuations, and he’s plying these moves day after day, city after city, at some of the top universities in the world. Aside from a minor upgrade to GPT-4, he doesn’t have a newly released product, so he is selling vision and promise.

Let’s start with the promises. A few days ago at Stanford, Sam promised that AGI will be worth it, no matter how much it costs:

At the same talk at Stanford, Altman grandiosely asserted, with no qualification, that “we’re making AGI”, without touching on the possibility that current approaches might not get us there, despite (a) the enormous known problems with reliability, reasoning, planning and hallucinations and (b) the fact that the entire field, though massively well-capitalized, has struggled for over a year (and in fact for decades) to solve any of these problems.

Whether GenAI proves to be AGI is very much still up for grabs, and on any reasonable account we have a long way to go. But you would never know that, listening to claims like Sam’s. (He also of course didn’t get into whether it would be OpenAI that would get to AGI, or someone else, or in what decade.)

In another very recent interview, this one with MIT’s Technology Review, Altman claimed that AGI agents are on their way (again without presenting evidence that historically extremely difficult problems are close to being solved):

He also hinted at a kind of ultimate executive assistant (utterly ignoring huge questions about privacy, surveillance, and security):

Assumptions are never questioned, counterarguments never considered. (When someone asked if he would debate with me at Davos, he politely declined.)

§

Confidence is another big part of the show. At Stanford he said, “I think every year for the next many we have dramatically more capable systems every year”, as if that were a sure thing, when of course it isn’t. For the last fourteen months we have seen no such dramatic improvement in capabilities, which is already evidence against his optimistic projection, and there are increasing concerns about whether there is enough high-quality data to sustain prior improvements, as well as in-principle concerns about hallucinations, reasoning, planning, outliers and reliability. Sam never lets on.

§

Undergirding it all is often a sense that without AI, we are screwed. As Geoffrey Miller put it on X, “[Altman’s] implicit message is usually 'We need AGI to solve aging & discover longevity treatments, so if you don't support us, you'll die.'” Longevity is the carrot; death is the stick.

§

In order to make it all plausible, Sam uses a unique combination of charm, soft-spoken personal humility and absolute confidence in outlandish claims.

He seems like such a nice guy, yet he implies, unrealistically, that the solution to AGI is within his grasp; he presents no evidence that this is so, and rarely considers the many critiques of current approaches that have been raised. (Better to pretend they don’t exist.) Because he seems so nice, pushback somehow seems like bad form.

Absurd, hubristic claims, often verging on the messianic, presented kindly, gently, and quietly — but never considered skeptically. That’s his M.O.

Pay no attention to the assumptions behind the curtain.

§

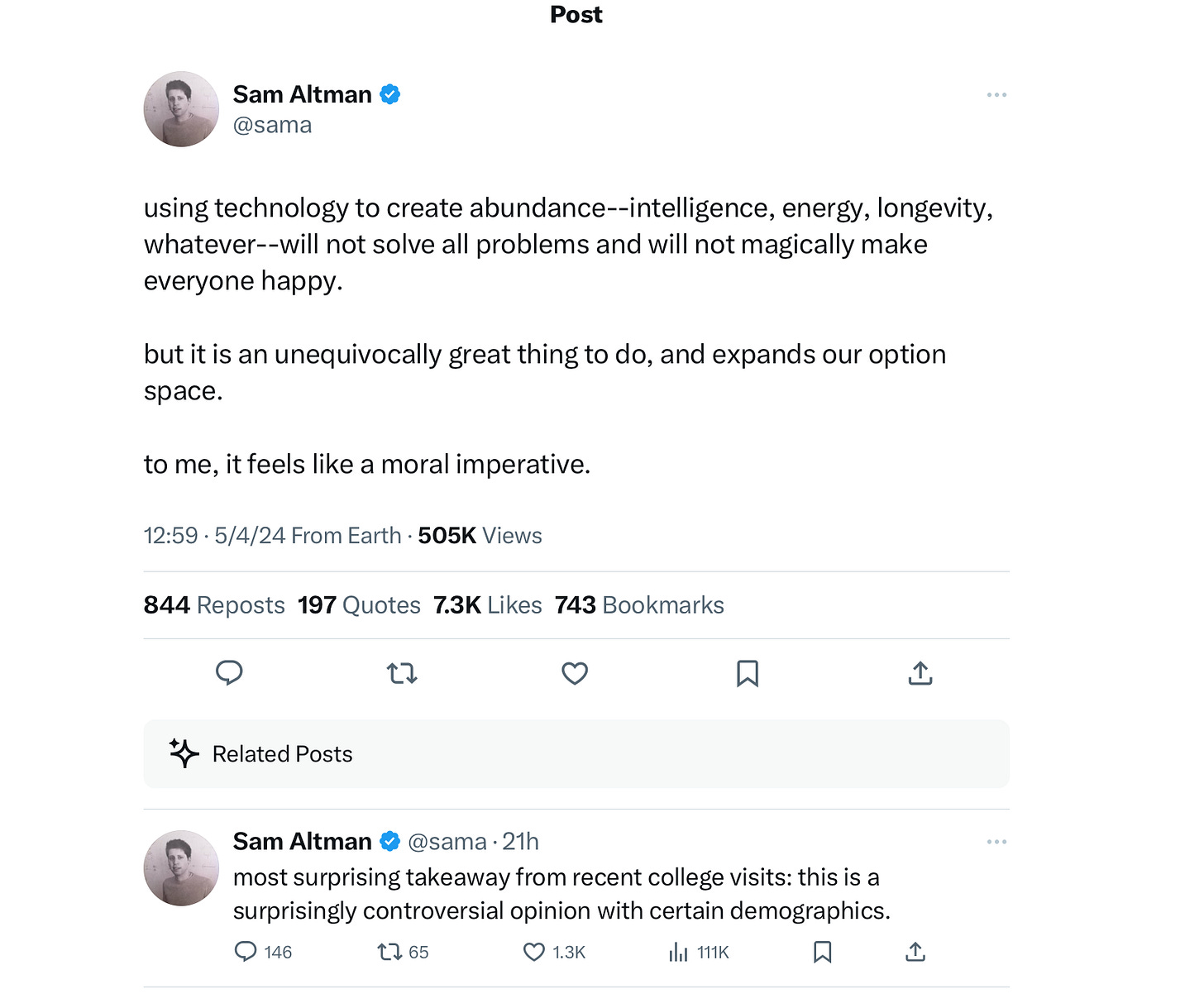

Another rhetorical move, a form of inoculation, acknowledges vague worries in a cursory way but doesn’t go deeply into them.

Here, Altman acknowledges that intelligence isn’t everything, but doesn’t acknowledge (at all) that there are risks associated with AI. (He knows darn well that such risks exist.) He has inoculated himself against the charge that the big problems (poverty, climate science) aren’t strictly technical, but has done nothing to actually address them, and has entirely ignored the potential costs of the technology (to the environment, to the species, etc.).

Not one scary capital letter in either post, either. So gentle. Just a regular guy sharing his thoughts.

The tag at the end doubles down by playing dumb; it acknowledges that criticism exists (a further attempt at inoculation) without ever enumerating any of those potential criticisms (why did some people not share his opinions?) – almost as if those criticisms were too silly to address, and of great surprise to him.

They are not. There are a great many potential concerns that he is fully aware of and chooses to ignore. Unequivocally great? Tell that to those who have been scammed, to victims of deepfake porn, perhaps to whole nations if deepfakes taint elections.

Why might people object to the claim that using AI is an unequivocally great thing to do? They might worry that people will lose their jobs, and their sense of well-being; they might worry that the tools of AI might be misused for nefarious purposes, as OpenAI themselves detailed at great length in the 60-page GPT-4 System Card. Sam himself told the US Senate that AI might “cause significant harm to the world”. There are also immense potential environmental costs.

Feigning surprise is pure stagecraft. It’s not that Altman doesn’t know there are potential downsides, maybe even massive ones; it’s that he often temporarily suppresses his own concerns in order to sell a story.

One might also wonder whether AI will genuinely create abundance, longevity, etc., and further whether Generative AI, the only form of AI that OpenAI happens to specialize in, will play a material role in such advances. As Jane Rosenzweig of Writing Hacks said to me this morning: "Altman is a guy who has the potential to put whole industries out of work who is saying, with no capital letters, that what he's doing is what the world has always wanted and then acting surprised that anyone has questions. It's truly amazing in a sense."

§

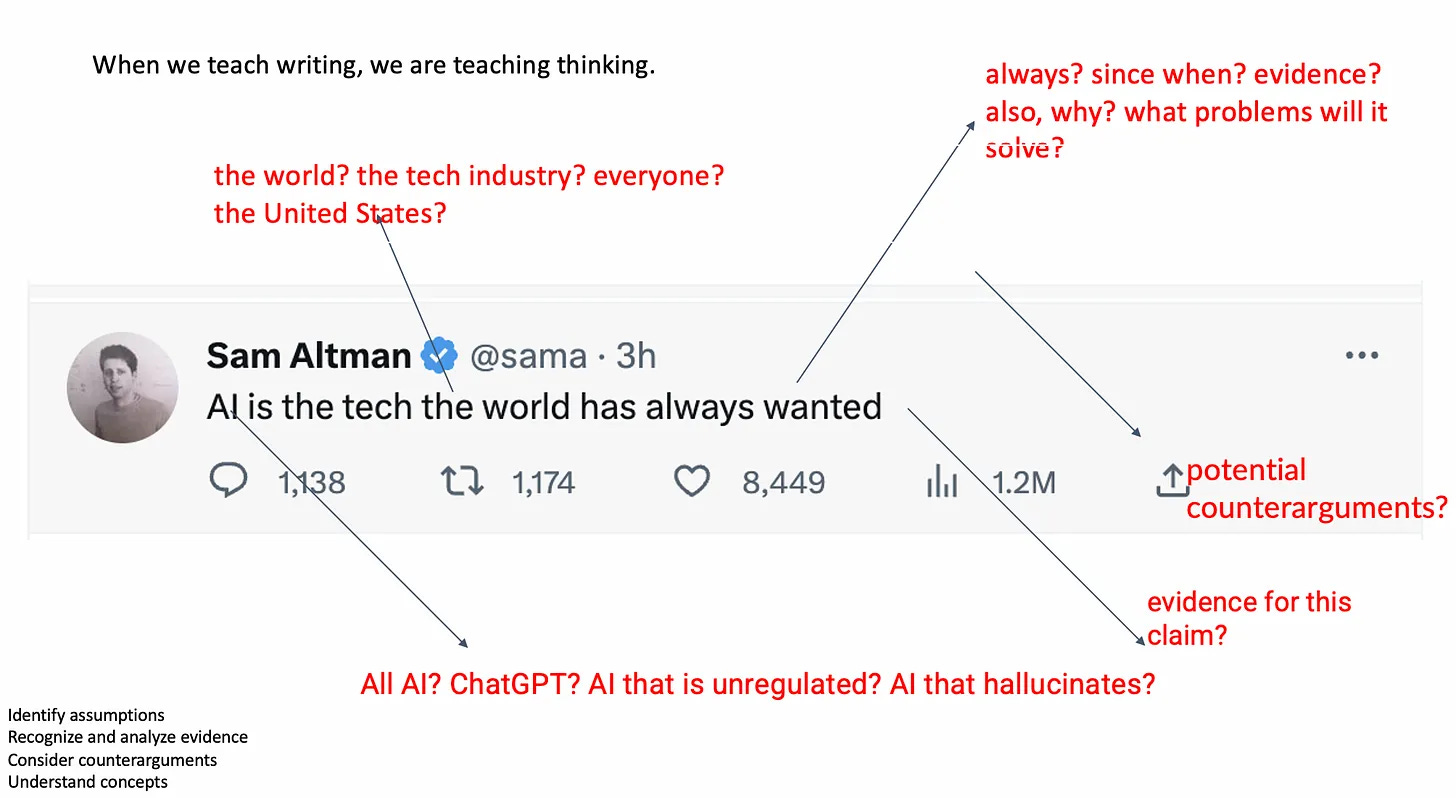

It’s not the first time Rosenzweig has raised an eyebrow over Altman’s remarks. Here’s a classic that she likes to deconstruct in her writing classes, which again shows how Altman’s superficially compelling rhetoric tends to hide from counterarguments while ignoring alternative perspectives. Annotations are hers:

§

And then of course there is the bait-and-switch. The company promises to serve all humanity…

... but in reality mostly just sells chatbots. Charitable work is at best a small part of the overall operation. Roughly half of its first couple hundred billion dollars in profits (if they materialize at all) will go to Microsoft, and artists, writers, publishers and so on are being screwed day after day, their work used without compensation or consent.

Creators are part of humanity. OpenAI has not thus far worked in their interest.

There is also a kind of bait-and-switch around Gen AI versus AGI. As Dave Troy put it on X, knowing how to build better (or at least bigger) GenAI doesn’t necessarily get us to AGI: “the assertion that “AGI” is not only achievable but simply a function of cash spend is a clear logical fallacy, and his use of repetition only reinforces that in the weak minded/true believers.”

§

Finally, there is Sam’s well-known personal support for UBI (Universal Basic Income). Clearly he is a man of the people. But wait, if he really believes in basic income for all, why is he fleecing artists, writers, and other creators, not paying a nickel (to most) for training materials? A lot of them are losing gigs. None of them are getting UBI checks, or anything at all.

§

Altman’s wildly speculative, assumptions-unquestioned, we’re-doing-it-for-humanity moves are not entirely new.

Many (except perhaps the humility and soft-spoken tone) are familiar from Elon Musk’s regular overpromising; almost all the AI companies are basically following the same playbook, albeit with less panache.

As Sigal Samuel has noted, there’s an uncanny similarity between superlative claims about AI, and superlative claims about religion:

Central to Samuel’s analysis:

Of late, Sam has been speaking straight from this playbook.

§

At other times, Altman retreats to the mystical and unfalsifiable:

A fundamental problem of matter? Rocks are intelligent, now? Like a lot of what Sam says, it sounds interesting, until you think it through.

As ever, inconvenient counterarguments are ignored. Reality distortion phasers are set to stun.

And speaking of science fiction, if all else fails, you can always lift a riff from Battlestar Galactica (which in turn lifted it from Peter Pan):

Profound.

§

Altman has been honing this formula literally for years, spinning a heady mixture of mysticism, apocalyptic warning, speculative exaltation, and humility, all the while inoculating himself against great fears while hinting at vast fortunes to be made:

§

The cards Sam is holding are not as great as he lets on.

In the last year, his company has released no major update to GPT-4, and many competitors have caught up or are close. Competitors are eroding the initial gap in quality and starting to offer similar products, sometimes at significantly lower prices. Meta will likely release a GPT-4-level model within months, for free. If OpenAI has a moat (beyond initial customers, who could potentially move), we haven’t seen it. There are tons of copyright lawsuits pending, from multiple publishers, artists, writers, and so forth. If OpenAI lost one of those in court, and a precedent against training on copyrighted materials were set, the entire enterprise could be in jeopardy. So far, there have been no net profits, and, as noted above, if there are any, for a long time roughly half must be shared with Microsoft, by contractual agreement. Training GPT-4 was expensive; training GPT-5 may be an order of magnitude more expensive, and GPT-6 yet another order of magnitude more. Usership for ChatGPT rose like a rocket until May 2023 but has more or less stabilized since then without significant further growth. Altman himself has recently acknowledged that GPT-4 “sucks”. The company has not demoed a working, reliable agent, and does not seem to have a GPT-5-level model thus far. Google DeepMind, Anthropic, Meta or someone else could conceivably beat them there. Altman isn’t out there selling a new product; he is selling hope.

§

Why care about any of this? Opportunity costs.

In seeking to grab vast resources for an approach that is deeply flawed, Altman is diverting resources from alternative approaches to AI that might be more trustworthy, reliable, and interpretable, potentially incurring considerable environmental costs, and distracting from better ways of using capital to help humanity more generally.

People who buy Altman’s narrative will continue to invest. But in the end, Altman’s place in history will depend on whether he delivers what he has promised.

Gary Marcus is co-author of Rebooting AI, one of Forbes’ 7 must read books in AI, and author of the forthcoming Taming Silicon Valley.
