The Second Worst $100B Investment in The History of AI?

By any reasonable account, the worst investment in the history of AI has to be the more than $100 billion that has been invested in “driverless” cars. There may be a payoff someday, but thus far there has not been much of one. By many accounts, Waymo rides are still a net loss on a per-ride basis, and the number of competitors still in the full-autonomy game has dwindled. Waymo runs in a few extremely well-mapped cities with mostly very good weather, but to my knowledge it has never been tested in places with bad weather, poorly mapped roads, different local driving patterns, etc. The generality of Waymo’s approach is very much still in question, and I am not sure anyone else is still seriously in the full-autonomy game. (Tesla, for example, always requires a human in the loop.) Most of those who invested in driverless cars lost money, unless they invested early and cashed out before patience began to run out.

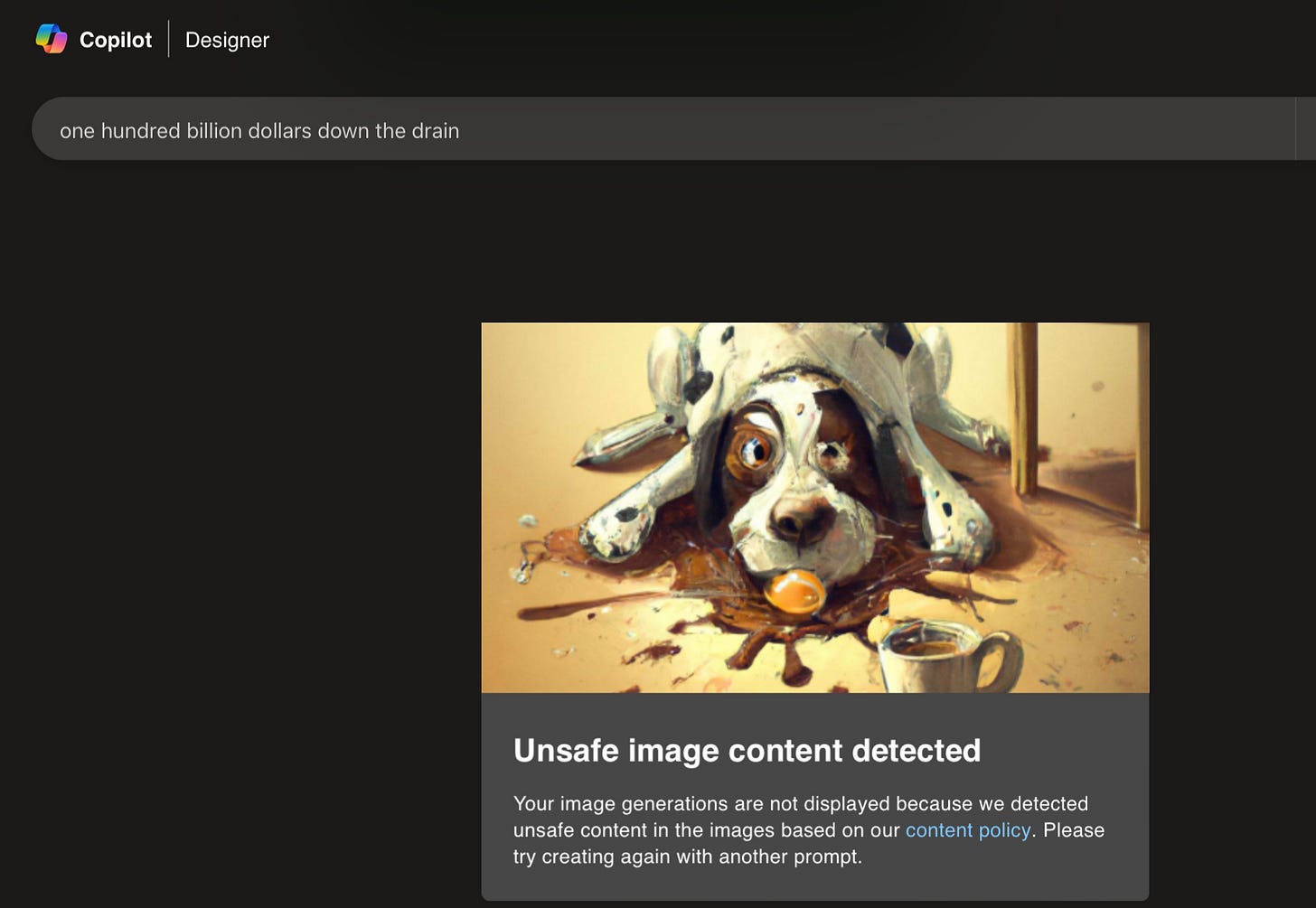

But misery loves company, and the forlorn $100B may have company before the end of the decade. The Information reported today that Microsoft and OpenAI are planning a “Stargate” project to build what sounds to me like a $100B LLM plus infrastructure, targeted for 2028-2030.

Putting aside the potentially considerable environmental impact, which might rightfully engender pushback, my guesses are twofold:

1. A $100B LLM is still an LLM. Driverless cars were crippled by the endless long tail of outliers; LLMs may well follow a similar pattern, working in many cases but often tripped up by the unexpected, even when scaled. Reasoning and planning in unexpected scenarios are still likely to be flawed.

2. Even if a $100B LLM worked noticeably better than a $1B LLM, the economics of that investment are problematic. On many tasks, the results might be only slightly better than those of a $10B or $1B model, but the operating costs would presumably be much higher. For most problems, customers probably would not be willing to pay the required premium. Diminishing returns on accuracy from larger models would need to be offset by some sort of unique capability for which customers would pay very high fees.

Presumably the operating idea here is that (a) $100B will equal AGI and (b) customers will pay literally anything for AGI. I doubt either premise is correct.

If those premises are wrong, the net effect will be to delay AGI, because the project will siphon talent away from other approaches, just as LLMs already have, but even more so.

Gary Marcus truly wants to write a more positive essay, but it’s hard when so many wacky things demanding immediate attention are coming from so many directions.

"A $100B LLM is still an LLM"

100% this. There have been no significant paradigm shifts that move us away from hallucinations and similar problems with LLMs.

History is repeating itself. During the previous cycle, in the 1980s and 1990s, big money was spent on infrastructure and research programs, at that time by governments: Japan's Fifth Generation Computer Systems project and the US Strategic Computing Initiative. Just as today, there was no clear strategy back then; the hope was that AI would somehow "emerge" :)