The Sparks of AGI? Or the End of Science?

Marching into the future with an obstructed view

“Pride goes before destruction, a haughty spirit before a fall.”

– Proverbs 16:18

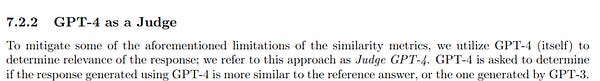

Microsoft put out a press release yesterday, masquerading as science, that claimed that GPT-4 was “an early (yet still incomplete) version of an artificial general intelligence (AGI) system”. It’s a silly claim, given that it is entirely open to interpretation (could a calculator be considered an early yet incomplete version of AGI? How about Eliza? Siri?). That claim would never survive serious scientific peer review. But in case anyone missed the point, they put out a similar, even more self-promotional tweet:

There is, as you might expect, the usual gushing from fans:

And also some solid critical points:

But I am not going to give you my usual critical blow-by-blow, because there is a deeper issue. As I have said before, I don’t really think GPT-4 has much to do with AGI. The strengths and weaknesses of GPT-4 are qualitatively the same as before. The problem of hallucinations is not solved; reliability is not solved; planning on complex tasks is (as the authors themselves acknowledge) not solved.

But there is a more serious concern that has been coalescing in my mind in recent days, and it comes in two parts.

The first is that the two giant OpenAI and Microsoft papers concern a model about which absolutely nothing has been revealed: neither the architecture nor the training set. Nothing. They reify the practice of substituting press releases for science and the practice of discussing models with entirely undisclosed mechanisms and data.

Imagine if some random crank said, I have a really great idea, and you should give me a lot of scientific credibility for it, but I am not going to tell you a thing about how it works, just going to show you the output of my model. You would archive the message without reading further. The paper’s core claim— “GPT-4 attains a form of general intelligence [as] demonstrated by its core mental capabilities (such as reasoning, creativity, and deduction)”—literally cannot be tested with serious scrutiny, because the scientific community has no access to the training data. Everything must be taken on faith (and yet there already have been reports of contamination in the training data).

Worse, as Ernie Davis told me yesterday, OpenAI has begun to incorporate user experiments into the training corpus, killing the scientific community’s ability to test the single most critical question: the ability of these models to generalize to new test cases.

Perhaps all of this would be fine if the companies weren’t pretending to be contributors to science, formatting their work as science with graphs and tables and abstracts as if they were reporting ideas that had been properly vetted. I don’t expect Coca-Cola to present its secret formula. But nor do I plan to give them scientific credibility for alleged advances that we know nothing about.

Now here’s the thing: if Coca-Cola wants to keep secrets, that’s fine; it’s not particularly in the public interest to know the exact formula. But what if they suddenly introduced a new self-improving formula with the potential, in principle, to end democracy, to give people potentially fatal medical advice, or to seduce people into committing criminal acts? At some point, we would want public hearings.

Microsoft and OpenAI are rolling out extraordinarily powerful yet unreliable systems with multiple disclosed risks and no clear measure either of their safety or of how to constrain them. By excluding the scientific community from any serious insight into the design and function of these models, Microsoft and OpenAI are placing the public in a position in which those two companies alone are able to do anything about the risks to which they are exposing us all.

This cannot and should not stand. Even OpenAI’s CEO Sam Altman has recently publicly expressed fears about where this is all going. Microsoft and OpenAI are producing and deploying products with potentially enormous risks at mass scale, and making extravagant, unreviewed and unreviewable claims about them, without sketching serious solutions to any of the potential problems they themselves have identified.

We must demand transparency, and if we don’t get it, we must contemplate shutting these projects down.

Gary Marcus (@garymarcus), scientist, bestselling author, and entrepreneur, is deeply concerned about current AI but really hoping that we might do better.

Watch for his new podcast, Humans versus Machines, debuting later this spring.

Postscript: Two tweets (part of a thread) posted around the same time as this article was written:

Not only that, but OpenAI is misleading the public by naming their company “open” gaining trust and confidence they do not deserve

Gary, I want to start by saying thank you. In general, your tone and assertions anger me, AND they also force me to look at AI / AGI in a critical way, with honesty -- confronting my own inner hype cycle / desire for AGI experience -- and that is a priceless gift. Now, to the specific points of this post, which are, btw, EXCELLENT:

Your characterization of MSFT's monstrous 145 page "research" report as a "press release" is genius. perfect turn of the phrase. caught me off guard, then I chuckled. Let's start, by blaming arXiv and the community. Both my parents were research scientists, so I saw firsthand the messy reality that divides pure "scientific method idealism" from the rat race of "publish or perish" and the endless quest for funding. In a sense, research papers were *always* a form of press release, ...BUT...

they were painstakingly PEER-REVIEWED before they were ever published. And "publication" meant a very high bar. Often with many many many rounds of feedback, editing, and re-submission. Sometimes only published an entire year (or more!) after the "discovery". Oh, and: AUTHORS. In my youth, I *rarely* saw a paper with more than 6 authors. (of course, I rarely saw a movie with more than 500 names in the credits, too... maybe that IS progress)

Here's the challenge: I actually DO agree with the paper's assertion that GPT4 exhibits the "sparks of AGI". To be clear, NOT hallucinating and being 100% accurate and 100% reliable were never part of the AGI definition. As Brockman so recently has taken to saying "Yes, GPT makes mistakes, and so do you." (the utter privilege and offensiveness of that remark will be debated at another time). AGI != ASI != perfectAI. AGI just means HLMI. Human Level. Not Einstein-level. Joe Six Pack level. Check-out clerk Jane level. J6 Storm the Capitol level. Normal person level. AGI can, and might, and probably will be highly flawed, JUST LIKE PEOPLE. It can still be AGI. And there is no doubt in my mind, that GPT4 falls *somewhere* within the range of human intelligence, on *most* realms of conversation.

On the transparency and safety sides, that's where you are 100% right. OpenAI is talking out two sides of their mouths, and the cracks are beginning to show. Plug-ins?!!?! How in god's name does the concept of an AI App Store (sorry, "plug-in marketplace") mesh with the proclamations of "safe deployment"? And GPT4, as you have stated, is truly dangerous.

So: Transparency or Shutdown? Chills reading that. At present, I do not agree with you. But I reserve the right to change my mind. And thank you for keeping the critical fires burning. Much needed in these precarious times...